ArtyomKazak

ArtyomKazak has not written any posts yet.

I'll also give you two examples of using ontologies (in the sense of "collections of things and relationships between things") for real-world tasks that are much dumber than AI.

I'll give you an example of an ontology in a different field (linguistics) and maybe it will help.

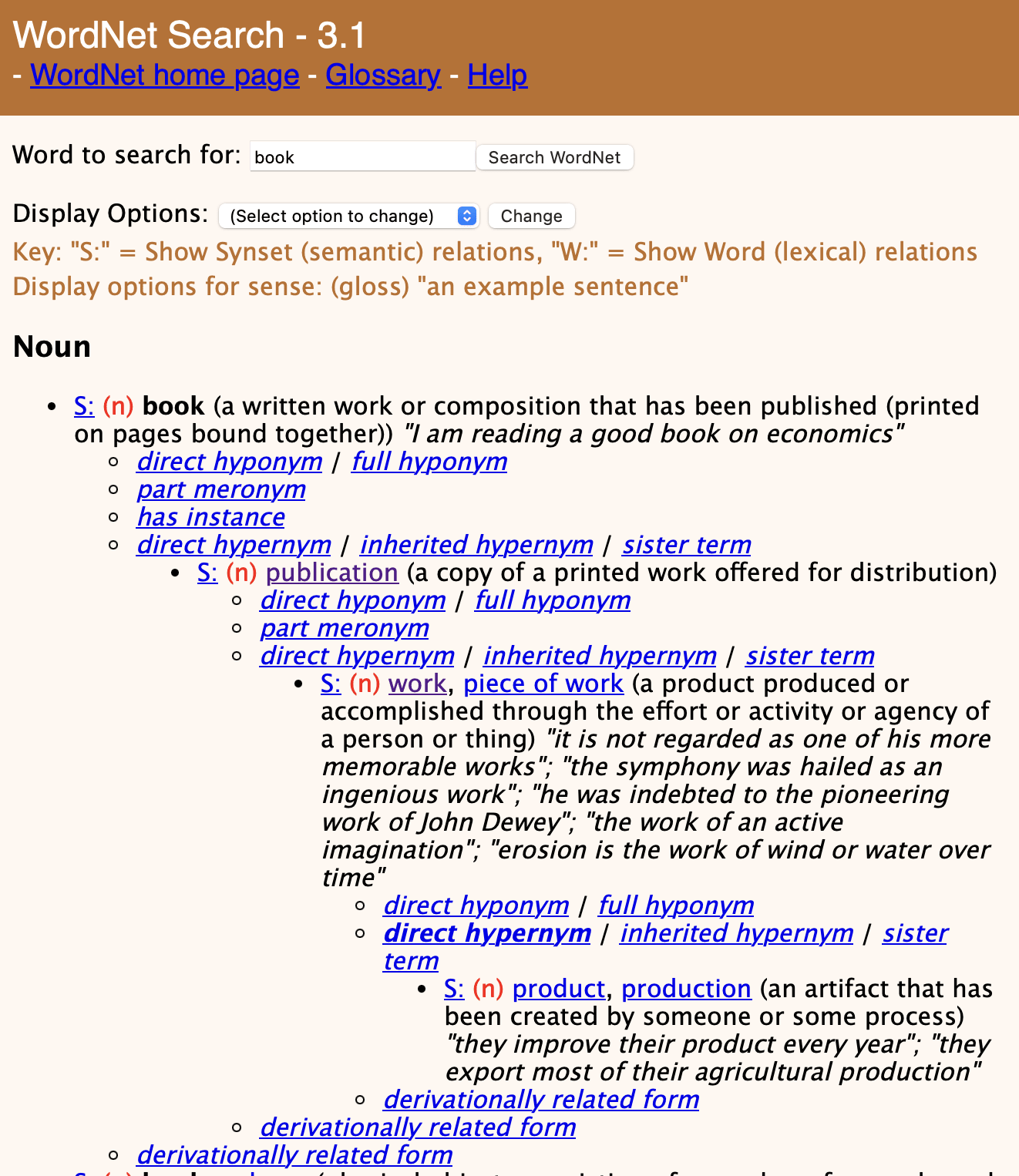

This is WordNet, an ontology of the English language. If you type "book" and keep clicking "S:" and then "direct hypernym", you will learn that "book" has the following place in the hierarchy:

... > object > whole/unit > artifact > creation > product > work > publication > book

So if I had to understand one of the LessWrong (-adjacent?) posts mentioning an "ontology", I would forget about philosophy and just think of a giant tree of words, because I like concrete examples.
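To make the "giant tree of words" picture concrete, here is a minimal sketch that stores only the slice of the "book" chain quoted above as a child-to-parent map and walks it upward, the way repeated "direct hypernym" clicks do. The names `HYPERNYM`, `hypernym_chain`, and `is_a` are hypothetical, chosen for illustration; this is a toy model, not WordNet itself. The real hierarchy is much larger and continues above "object" (the chain above is truncated with "..."). The NLTK library offers a real interface to WordNet, but this sketch avoids it so it runs without downloading any data.

```python
# Hypothetical toy slice of a word hierarchy, copied from the WordNet chain
# for "book" quoted above. Each word maps to its direct hypernym (its parent).
# In real WordNet, "object" is NOT the root; the chain continues above it.
HYPERNYM = {
    "book": "publication",
    "publication": "work",
    "work": "product",
    "product": "creation",
    "creation": "artifact",
    "artifact": "whole/unit",
    "whole/unit": "object",
}


def hypernym_chain(word: str) -> list[str]:
    """Follow direct-hypernym links upward from `word` until no parent is
    known, then return the path ordered from most general to `word`."""
    chain = [word]
    while chain[-1] in HYPERNYM:
        chain.append(HYPERNYM[chain[-1]])
    return list(reversed(chain))


def is_a(word: str, ancestor: str) -> bool:
    """True if `ancestor` is `word` itself or appears anywhere above it."""
    return ancestor in hypernym_chain(word)


if __name__ == "__main__":
    print(" > ".join(hypernym_chain("book")))
    print(is_a("book", "artifact"))
```

Running it prints the same chain as above, from "object" down to "book", followed by `True`, since a book is (eventually) an artifact. Storing only parent links keeps the sketch small; a real ontology would also hold other relationships between things, not just "is a kind of".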

Now let's go and look at one of those posts.

"Ontology identification problem" (https://arbital.com/p/ontology_identification/#h-5c-2.1):

...

Consider ...

I like the idea of tabooing "frame". Thanks for that.

First of all, in my life I mostly encounter:

I don't have experience with other bits...

Also, to answer your question about "probability" in a sister chain: yes, "probability" can be in someone's ontology. Things don't have to "exist" to be in an ontology.

Here's another real-world example:

The person's ontology is "right" and...