
In case it's helpful to others, I have found the term 'stochastic chameleon' to be a memorable way to describe this concept of a simulator (and a more useful one than 'stochastic parrot', though inspired by it). A simulator, like a chameleon (and unlike a parrot), is doing its best to fit the distribution.

I agree that language models like GPT-3 can be helpful in many of these ways—and the prompt you link to is great.

However, the OpenAI playground is a terrible "Tool for Thought" from a UX perspective.

Is there any great local (or web) app for exploring and learning with language models? (Or even just for GPT-3?) I'm thinking of something where you add your API keys and get a pleasant interface for writing prompts, seeing results, and storing all the outputs for your reference, ideally integrating with more general tools for thought.

Here is what I've found so far that attempts to let you play with GPT-3 and other language models locally, though none of them seem mature:

- https://github.com/pratos/gpt3-exp

- https://github.com/thesephist/calamity

- https://prompts.ai

Is there anything better out there that I've missed?
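The storage side of such a tool is at least simple to sketch. This is a minimal sketch under stated assumptions, not one of the apps above: it assumes a local SQLite log and a hypothetical `complete(model, prompt)` function standing in for whichever API client you plug your keys into. The table layout and function names are made up for illustration.

```python
import sqlite3
import time
from typing import Callable


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a local SQLite log of prompts and completions."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS runs (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               created REAL NOT NULL,
               model TEXT NOT NULL,
               prompt TEXT NOT NULL,
               completion TEXT NOT NULL
           )"""
    )
    return conn


def run_and_log(conn: sqlite3.Connection, model: str, prompt: str,
                complete: Callable[[str, str], str]) -> str:
    """Call a model through the (hypothetical) `complete` hook and store the pair."""
    completion = complete(model, prompt)
    with conn:  # commits the insert if no exception is raised
        conn.execute(
            "INSERT INTO runs (created, model, prompt, completion) VALUES (?, ?, ?, ?)",
            (time.time(), model, prompt, completion),
        )
    return completion


def search(conn: sqlite3.Connection, text: str):
    """Return (model, prompt, completion) rows whose prompt or completion contains `text`."""
    pattern = f"%{text}%"
    return conn.execute(
        "SELECT model, prompt, completion FROM runs "
        "WHERE prompt LIKE ? OR completion LIKE ? ORDER BY id",
        (pattern, pattern),
    ).fetchall()


# Stand-in for a real API call, so the sketch runs offline.
def fake_complete(model: str, prompt: str) -> str:
    return prompt.upper()


conn = open_log()
run_and_log(conn, "demo-model", "hello tools for thought", fake_complete)
rows = search(conn, "tools")
print(rows)
```

A real tool would put the pleasant interface on top of something like this and keep API keys out of the log itself (for example in environment variables).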

Thanks for sharing this well-organized appendix and links!

As someone working on ~the multi-stakeholder problem (likely closest to multi/single in ARCHES), I find it interesting to have a summary of what you see as the most relevant research.

I think this piece is highly applicable to a particular kind of organization, with particular kinds of goals. However, it would be stronger if it described that scope up front (and noted that variants are needed in many other environments).

It might apply most to non-profits with full-time staff that aim to grow (or are already large) and that have a large pool of potential funders available if they can demonstrate effectiveness at achieving their missions, or that have some other reliable source of revenue. (Essentially the non-profit equivalent of venture-funded startups, except maximizing mission instead of profit.)

For example, a volunteer-run community garden non-profit is going to have different core challenges! This is especially true if the board members are the people doing most of the work, or if people are there for community and the legal structure exists mostly for accounting. While this is less relevant for EA orgs, that isn't clear from the framing.

There are also many practical difficulties in implementing some of these recommendations, given the existing funding and volunteer environments and norms (and the human and community aspects of organization formation and growth). As Dagon alluded to, there are many different things that a board is trying to do. Ideally there would be, e.g., other kinds of structures that provide status and insight for major funders and fundraisers without direct governance power. I think it would be hard to implement many of these recommendations without such alternatives.

Many of the references and communities I might have suggested have already been listed (e.g. Metagov) so I won't repeat them (and I've also discovered some helpful new ones!).

One I didn't see mentioned is the work of Claudia Chwalisz and her team at the OECD, which I've found immensely valuable: not only their excellent reports, summaries, etc., but also an Airtable documenting hundreds of real-world governance experiments around the world. (I'm happy to connect folks with the community of practitioners implementing these new approaches to support significant policy decisions.)

I have also personally been exploring many of these questions at Harvard, focused primarily on the governance of FAAMG companies, given their impact on our 'collective cognitive capacity' to address all global challenges (and particularly catastrophic risks) and their concentration of AI capabilities. The case for that focus is bolstered by their capacity to change quickly and the incredible pressure on them to change.

In particular, you might find this working paper (belfercenter.org/publication/towards-platform-democracy-policymaking-beyond-corporate-ceos-and-partisan-pressure) interesting as a sort of advocacy for applying sortition to FAAMG companies (building on significant empirical literature from others, cited in the paper).

Caveat: The audience I'm writing for there is somewhat different from you, even though my personal motivations appear to be similar to yours. My current published work is aimed at those who have influence over those organizations; in fact, I was directed to this post by someone with significant power in a FAAMG organization, who suggested that I comment. The paper does not engage with many of the difficult questions around actually operationalizing sortition at a global scale for an overall restructuring of governance (part of my current work). That said, it was meant to lead to (initially private) pilots by FAAMG-like corporations, and it is directly doing that. It is one step on the road from there to much more significant governance changes.
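For readers less familiar with sortition, the core mechanism is random selection constrained so that the panel mirrors the population on chosen attributes. Below is a minimal sketch of proportional stratified selection on a single attribute, using largest-remainder rounding to allocate seats. It is an illustration only, not the procedure from the working paper: real selection processes balance several attributes at once and must deal with who actually accepts an invitation. The function name and the `region` attribute are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sortition(pool, key, panel_size, rng):
    """Randomly select `panel_size` people so each stratum gets seats in
    proportion to its share of the pool (largest-remainder rounding)."""
    if not 0 <= panel_size <= len(pool):
        raise ValueError("panel_size must be between 0 and the pool size")
    strata = defaultdict(list)
    for person in pool:
        strata[key(person)].append(person)
    # Ideal fractional seat count per stratum, rounded down to start.
    quotas = {s: panel_size * len(m) / len(pool) for s, m in strata.items()}
    seats = {s: int(q) for s, q in quotas.items()}
    # Give any remaining seats to the strata with the largest fractional parts.
    leftover = panel_size - sum(seats.values())
    ranked = sorted(strata, key=lambda name: quotas[name] - seats[name], reverse=True)
    for s in ranked[:leftover]:
        seats[s] += 1
    # Uniform random draw (without replacement) within each stratum.
    panel = []
    for s, members in strata.items():
        panel.extend(rng.sample(members, seats[s]))
    return panel


# Hypothetical pool: 60 people from "north", 40 from "south".
pool = [{"id": i, "region": "north" if i < 60 else "south"} for i in range(100)]
panel = stratified_sortition(pool, key=lambda p: p["region"], panel_size=10,
                             rng=random.Random(0))
print(sum(p["region"] == "north" for p in panel), "north,",
      sum(p["region"] == "south" for p in panel), "south")  # 6 north, 4 south
```

Which individuals are chosen depends on the random seed, but the 6/4 split is fixed by the proportions.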

This might also be interesting re. the brainstorm: Building Wise Systems: Combining Competence, Alignment, and Robustness, a (work-in-progress) framework for thinking about ~governance systems.

I have also dug deep into systems like Polis (e.g. https://github.com/compdemocracy/polis/issues/1289 ), its variants in the private sector, and other relatively novel elicitation/deliberation systems. There is a lot to learn from those and to build upon, but also a huge amount of ground that has not been covered (IMHO due to a lack of effective messaging and investment for a long time). Language models will also change the possibility space.
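To make the Polis-style idea concrete: participants vote agree/disagree/pass on short statements, are clustered into opinion groups by their vote patterns, and statements that win agreement across groups are surfaced. The toy sketch below assumes votes coded as 1/-1/0 and runs a tiny k-means directly on the vote vectors (Polis itself reduces dimensionality with PCA before clustering, which is skipped here). The consensus score, a product of smoothed per-group agreement rates, is an assumption in the spirit of Polis's group-informed consensus, not its exact formula.

```python
def dist2(a, b):
    """Squared Euclidean distance between two vote vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=20):
    """Tiny k-means with deterministic farthest-point initialisation."""
    centers = [list(points[0])]
    while len(centers) < k:
        centers.append(list(max(points, key=lambda p: min(dist2(p, c) for c in centers))))
    labels = []
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: dist2(p, centers[j])) for p in points]
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def consensus_scores(votes, labels, k):
    """Per statement: product over groups of the smoothed agree rate
    (agrees + 1) / (non-pass votes + 2). High only if every group agrees."""
    scores = []
    for c in range(len(votes[0])):
        score = 1.0
        for g in range(k):
            col = [row[c] for row, lab in zip(votes, labels) if lab == g]
            agrees = sum(v == 1 for v in col)
            seen = sum(v != 0 for v in col)
            score *= (agrees + 1) / (seen + 2)
        scores.append(score)
    return scores


# Made-up votes. Rows = participants, columns = statements; 1 agree, -1 disagree, 0 pass.
votes = [
    [1, 1, -1],
    [1, 1, -1],
    [1, 1, 0],
    [1, -1, 1],
    [1, -1, 1],
    [1, -1, 1],
]
labels = kmeans(votes, k=2)
scores = consensus_scores(votes, labels, k=2)
print(labels)                         # [0, 0, 0, 1, 1, 1]
print([round(s, 2) for s in scores])  # [0.64, 0.16, 0.2]
```

Statement 0, which both groups agree with, scores highest; statements 1 and 2 split the groups and score low even though each has a majority within one group.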

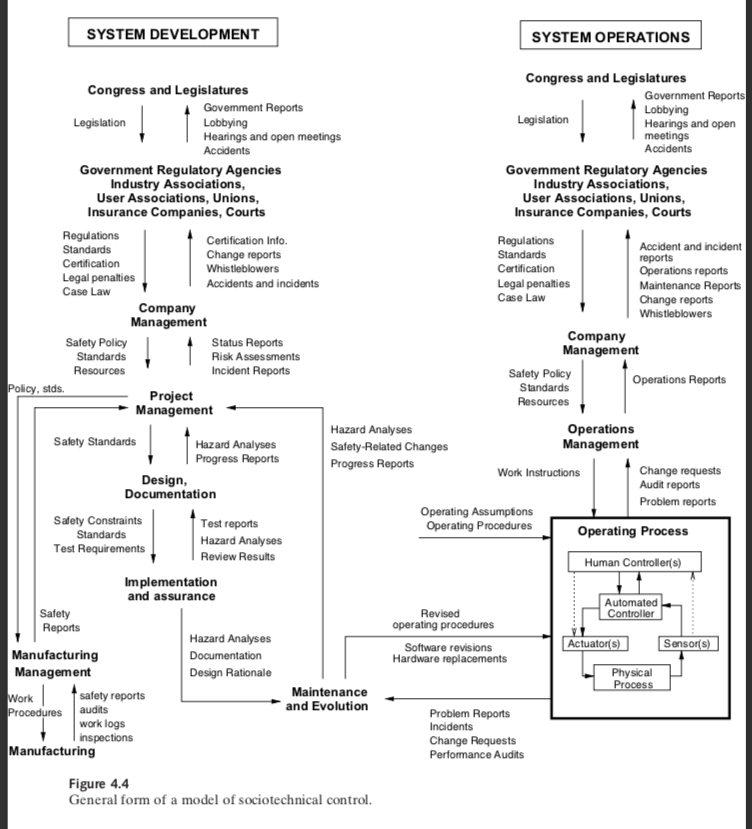

Finally, Engineering a Safer World (open access) is interesting on this, and I particularly appreciate this diagram which explores the role of governance in managing a complex system:

I've seen a bunch of posts on light and sleep here, but nothing detailed re. mattresses. I ran across this guide to a modular, DIY approach to mattress design, which might prove valuable to future searchers: https://www.reddit.com/r/Mattress/comments/otdqms/diy_mattresses_an_introductory_guide/

I'd also be curious to hear whether others find this to be epistemically sound. Given the value of sleep, having a process for efficiently finding a near-ideal sleep surface (or at least one that doesn't cause pain) seems rather important.

Assuming this is verified, contrastive decoding (or something roughly analogous to it) seems like it could help mitigate this. There are many variants, but one might be to intentionally train both the luigi and the waluigi, and sample from the difference of those distributions for each token. One could also perhaps just do this at inference time: prepend a prompt that would collapse into the waluigi, and choose tokens that are the least likely to come from that distribution. (This is a simplification, but hopefully it gets the point across.)
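A minimal sketch of the inference-time variant, assuming you already have next-token logits from the same model under two prompts: one that keeps the intended character (the "luigi") and one that collapses into the waluigi. It follows the shape of contrastive decoding (Li et al.): keep only tokens the luigi distribution finds plausible (within a factor `alpha` of its top token), then prefer tokens whose luigi log-probability most exceeds the waluigi's. The dict-of-logits interface, the `beta` weight, and the toy numbers are assumptions for illustration. In the original contrastive decoding setup the two distributions come from a large "expert" model and a small "amateur" model rather than from two prompts.

```python
import math
import random


def log_softmax(logits):
    """Convert a {token: logit} dict into {token: log-probability}."""
    m = max(logits.values())
    log_z = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return {tok: v - log_z for tok, v in logits.items()}


def contrastive_scores(luigi_logits, waluigi_logits, alpha=0.1, beta=1.0):
    """Score plausible tokens by log p_luigi - beta * log p_waluigi.
    Both dicts are assumed to cover the same vocabulary."""
    lp_l = log_softmax(luigi_logits)
    lp_w = log_softmax(waluigi_logits)
    # Plausibility constraint: drop tokens with p_luigi < alpha * max p_luigi, so a
    # token implausible for the luigi cannot win just because the waluigi likes it even less.
    cutoff = math.log(alpha) + max(lp_l.values())
    return {t: lp_l[t] - beta * lp_w[t] for t in lp_l if lp_l[t] >= cutoff}


def contrastive_greedy(luigi_logits, waluigi_logits, **kw):
    """Pick the highest-scoring plausible token."""
    scores = contrastive_scores(luigi_logits, waluigi_logits, **kw)
    return max(scores, key=scores.get)


def contrastive_sample(luigi_logits, waluigi_logits, rng, **kw):
    """Sample a token from a softmax over the contrastive scores."""
    scores = contrastive_scores(luigi_logits, waluigi_logits, **kw)
    toks = list(scores)
    m = max(scores.values())
    weights = [math.exp(scores[t] - m) for t in toks]
    return rng.choices(toks, weights=weights, k=1)[0]


# Toy next-token logits (made-up numbers) for the same model under two prompts.
luigi = {"help": 1.8, "refuse": 1.0, "deceive": 2.0, "zzz": -5.0}
waluigi = {"help": 0.5, "refuse": 0.0, "deceive": 3.0, "zzz": -5.0}

print(max(luigi, key=luigi.get))           # deceive (plain greedy on the luigi prompt)
print(contrastive_greedy(luigi, waluigi))  # help
print(contrastive_sample(luigi, waluigi, random.Random(0)))
```

Plain greedy decoding under the luigi prompt picks "deceive", while the contrastive score picks "help", because the waluigi prompt favors "deceive" much more strongly than the luigi prompt does.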