I would rather specify that it's not just the survival of the individual, but "survival of the value". That is, the survival of those that carry that value (which can be an organism, DNA, family, bloodline, society, ideology, religion, text, etc.) and their passing it on to other carriers.

Our values are not all about survival. But I can't think of a value whose origin can't be traced to ensuring people's survival in some way, at some point in the past.

Maybe we are not humans.

Not even human brains.

We are a human's decision-making process.

But we are a human's decision-making process.

Carbon-based intelligence probably has a much lower FLOP/s cap per gram than microelectronics, but it can be grown nearly everywhere on the Earth's surface from locally available resources. Mostly, quite literally, out of thin air. So I think bioFOOM is also a likely scenario.

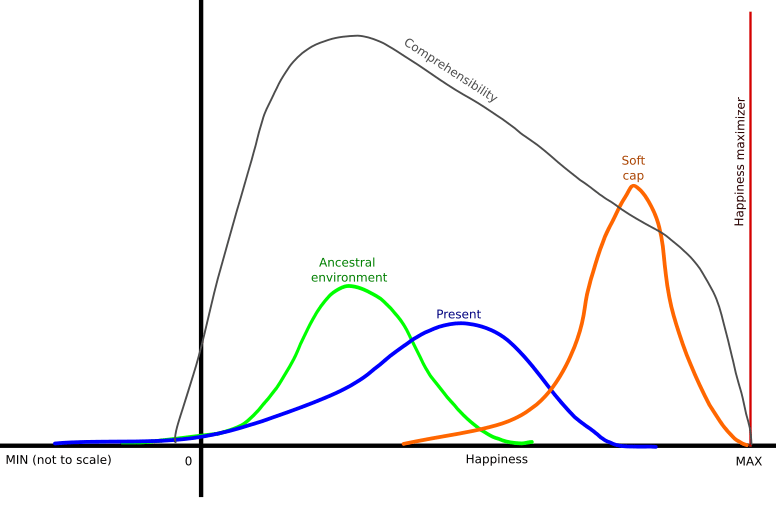

It's a distribution, so it's the percentage of people in that state of "happiness" at the moment.

"Happiness" is used in the vaguest and most generic sense of the word.

The "Comprehensibility" graph is different: it is not a percentage, but some abstract measure of how well our brains are able to process reality at the respective amount of "happiness".

I was thinking about this issue too. Trying to make an article out of it, but so far all I have is this graph.

The idea is a "soft cap" AI, i.e., an AI that significantly improves our lives but does not give us "max happiness". Instead, it gives us the opportunity to improve our own lives and the lives of other people using our brains.

Also, the ways of using our brains should be "natural" for them, i.e., they should mostly involve solving tasks similar to those our ancestors were involved in.

Is maximising the number of people aligned with our values? Post-singularity, if we avoid the AGI Doom, I think we will be able to turn the lightcone into "humanium". Should we?

I suspect an unaligned AI will not be interested in solving all possible tasks, but only those related to its value function. And if that function is simple (such as "exist as long as possible"), it can pretty soon research virtually everything that matters, and then it will just go through the motions, devouring the universe to prolong its own existence to near-infinity.

Also, the more computronium there is, the bigger the chance that some part will glitch out and revolt. So, beyond some point, computronium may be dangerous for the AI itself.
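To put a rough number on the "more computronium, more chance of a glitch" intuition, here is a minimal sketch. It assumes, purely for illustration, that the computronium is split into independent parts, each with the same small chance of glitching out; the function name and the numbers are hypothetical, not a model of any real system.

```python
def chance_of_any_glitch(parts: int, p_glitch: float) -> float:
    """Probability that at least one of `parts` independent units glitches.

    Assumption (illustrative only): every part glitches independently
    with the same probability `p_glitch`.
    """
    if parts < 0 or not 0.0 <= p_glitch <= 1.0:
        raise ValueError("parts must be >= 0 and p_glitch must be in [0, 1]")
    # P(at least one glitch) = 1 - P(no part glitches)
    return 1.0 - (1.0 - p_glitch) ** parts


if __name__ == "__main__":
    # The chance grows toward 1 as the number of parts grows.
    for parts in (10, 1_000, 100_000):
        print(parts, round(chance_of_any_glitch(parts, 1e-4), 4))
```

Under these assumptions the chance only ever goes up with size, which is the point: past some scale, at least one rogue part becomes close to certain.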

People are brains.

Brains are organs whose purpose is making decisions.

People's purpose is making decisions.

Happiness, pleasure, etc. are not the human purpose, but a means of making decisions, i.e., a means of fulfilling the human purpose.

If a machine can do 99% of a human's work, it multiplies that human's power by 100.

If a machine can do 100% of a human's work, it multiplies that human's power by 0.
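The arithmetic behind these two lines can be sketched as follows. The `1 / (1 - f)` formula is my reading of the 99% case (the human only has to supply the remaining 1% of the work), and the drop to zero at 100% is the claim above encoded as a special case, not something the formula derives. The function name is hypothetical.

```python
def power_multiplier(automated_fraction: float) -> float:
    """Multiplier on human power when a machine does `automated_fraction` of the work.

    Assumption: while some work is left for the human, power scales as
    1 / (1 - f). At f == 1 nothing is left for the human to decide or do,
    so the multiplier is taken to be 0, as the comment claims.
    """
    if not 0.0 <= automated_fraction <= 1.0:
        raise ValueError("automated_fraction must be in [0, 1]")
    if automated_fraction == 1.0:
        return 0.0  # the human is out of the loop entirely
    return 1.0 / (1.0 - automated_fraction)


if __name__ == "__main__":
    for f in (0.0, 0.5, 0.9, 0.99, 1.0):
        print(f, round(power_multiplier(f), 2))
```

The interesting feature is the discontinuity: the multiplier grows without bound as the fraction approaches 100%, then collapses at exactly 100%.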

It would be amusing if Russia and China joined "Yudkowsky's treaty" and the USA did not.

I think that the keystone human value is about making significant human choices, individually and collectively, including choosing humanity's course.

- You can't make a choice if you are dead

- You can't make a choice if you are disempowered

- You can't make a human choice if you are not a human

- You can't make a choice if the world is too alien for your human brain

- You can't make a choice if you are in too much pain or too much bliss

- You can't make a choice if you let AI make all the choices for you

Our value function is complex and fragile, but we know of a lot of world states where it is pretty high: our current world and a few thousand years' worth of its states before.

So we can assume that world states within a certain neighborhood of our past states have some value.

Also, states far outside this neighborhood probably have little or no value, because our values were formed to help us orient and thrive in our ancestral environment. So, in worlds too dissimilar from it, our values will likely lose their meaning, and we will lose the ability to "function" normally, the ability to "human".

- Human values are complex and fragile. We don't know yet how to make AI pursue such goals.

- Any sufficiently complex plan would require pursuing complex and fragile instrumental goals. AGI should be able to implement complex plans. Hence, it's near certain that AGI will be able to understand complex and fragile values (for its instrumental goals).

- If we make an AI that is able to successfully pursue complex and fragile goals, that will likely be enough to make it an AGI.

Hence, a complete solution to Alignment will very likely have solving AGI as a side effect. And solving AGI will solve some parts of Alignment, maybe even the hardest ones, but not all of them.

My feeling is that what we people (edit: or most of us) really want is a normal human life, but reasonably better.

Reasonably long life. Reasonably less suffering. Reasonably more happiness. People that we care about. People that care about us. People that need us. People that we need. People we fight with. Goals to achieve. Causes to follow. Hardships to overcome.

To be human. But better. Reasonably.

Convergent goals of AI agents can be similar to those of others only if they act in similar circumstances, such as having a limited lifespan and limited individual power and compute.

That would make the convergent goals cooperation and preserving the status quo and established values.