All of sapphire's Comments + Replies

I feel like, in literally all cases, whenever I felt I had made a breakthrough it was pretty sticky, at least if I still endorsed it one day later when I double-checked. And usually the next-day double check passes too. That includes breakthroughs made while high. In this regard I think I am just unusual.

Notably, I have never actually thought a breakthrough would solve all my problems or cause me to never be sad again. I'm not that optimistic. But the breakthroughs led to substantial improvements for me personally.

Have you tried any of the Agonists? Did they work for you?

The reason no one seems excited to work on this is that very few people who didn't initially sleep way too much report that they managed to substantially reduce their need for sleep without side effects. Everyone already knows about common* stimulants like modafinil and amphetamine. People also know about sleep hygiene. Both work. But neither radically reduces the need for sleep in healthy people. When people use stimulants in high doses for a long time to reduce sleep, they commonly report severe adver...

You know, in the traditional story, the Buddha de facto abandoned his wife and young child...

I feel like people are getting into Buddhist practice without any agreement with its underlying philosophy.

It is probably difficult or impossible to permanently reduce all your "negative" emotions to zero. It is definitely not possible to reduce their strength in any kind of uniform way. But in my experience you can most certainly reduce the intensity of your negative* emotions. The effect is uneven but it is certainly not small.

I'm not sure we should do anything in particular. But I don't personally desire to suffer. I don't think it adds any beauty to things. Nor does it make me a kinder or more beautiful person. Many harmful behaviors are downstream of clinging tightly. That's my perspective anyway.

I am personally content with whenever my own story ends. I had an interesting life. There is a limit to my equanimity. I would pretty strongly prefer not to get tortured badly. But otherwise I am fairly happy with any ending.

I would prefer to experience no mental pain upon learning Titania, my partner of eleven years, has died. I honestly don't think I would be very sad. When I consider the situation, I imagine myself thinking:

...Titania was wonderful. She was brave and tender and beautiful. It was a gift to have known her, to have spent so much time together. Many things hurt her so badly but she pressed on bravely. She was funny and clever and sexy. She lived a beautiful life. All things pass away, and this world is less bright for her passing. Im sure one day soon I will

I'd guess it's more likely to be good. The logic of "post-scarcity utopia" is pretty far from market capitalism. Also, China has been leading in open-source models. Open source is a lot more aligned with humanity as a whole.

It varies a lot. I climb and do calisthenics (pull-ups, wall handstand push-ups, V-sits, etc.). I also do one or two sessions of bench/OHP/weighted dips. But it really varies. I just do whatever I'm feeling up to and want to try that day. I also grip train any day my fingers feel good.

I haven't taken any in a long time. When I quit, my body fat was much higher. GLP-1 dosage ramps up a lot for many people. It definitely did for me. It's not exactly cheap, and if supply is disrupted you might not even be able to get it at current prices. 2.5mg a week of a GLP-1 agonist is not something I want to pay for. So I decided I didn't want to depend on it and quit.

The seemingly small differences might matter hugely. See the long debate over what caused scurvy and how to prevent/cure it.

When the Royal Navy changed from using Sicilian lemons to West Indian limes, cases of scurvy reappeared. The limes were thought to be more acidic and it was therefore assumed that they would be more effective at treating scurvy. However, limes actually contain much less vitamin C and were consequently much less effective.

...Furthermore, fresh fruit was substituted with lime juice that had often been exposed to either air or c

Different framings of mathematically equivalent paradigms can vary widely in how productive they are in practice. I'm really not a big fan of rounding.

This Life by Vampire Weekend really nails how I feel about the rationalist community:

Baby, I know pain is as natural as the rain

I just thought it didn't rain in California

Baby, I know love isn't what I thought it was

'Cause I've never known a love like this before ya

Baby, I know dreams tend to crumble at extremes

I just thought our dream would last a little bit longer

There's a time when every man draws a line down in the sand

We're surviving, we're still living I was stronger

You've been cheating on, cheating on me

I've been cheating on, cheating on you

You've b...

You can get your breasts removed if you decide you definitely don't want them. This results in a scar but no serious long-term issues.

The body is a Bodhi tree,

The mind a standing mirror bright.

At all times polish it diligently,

And let no dust alight.

-6th patriarch attempt

Bodhi is originally without any tree,

The bright mirror is also not a stand.

Originally there is not a single thing,

Where could any dust be attracted?

-Huineng (Winning Poem)

No worldview will be able to output the best answer in every circumstance. This is not a matter of compute.

Wisdom is a lack of fixed position. It is not being stuck anywhere.

Great essay, btw. Hope my commentary i...

One counterpoint is that AI agents can easily hold crypto. They cannot hold stocks or even USD without legal recognition. Changes to the legal system might be very slow. Agents can already transact onchain.

I don't agree on the size of the bias. I think most people on LessWrong are biased the other way.

Also, it sort of does justify the behavior? Consider, say, "Should we race to achieve AI dominance before China does?" I think starting such an arms race is bad behavior. But if I thought China was almost certainly going to secretly race ahead and then enslave or kill us, that would justify the race. Treating people as worse than they are is a common and serious form of bad behavior.

In general if you "defect" because you thought the other p...

I would guess you, like many on LessWrong, are in fact too negative about average people. They aren't saints, but I disagree that the psychopaths are right. This is quite consequential, for what it's worth. Many people associated with LessWrong have justified quite bad behavior with game theory or by claiming everyone else would have done the same.

More Yoga and rock climbing.

That is correct. I read the post. To be more explicit, I think the family performs poorly. External pressure works better if it's actually external (i.e. a parent). I'm not endorsing or disendorsing external pressure. But it just isn't really possible to pressure yourself. Pretending you can is going to create serious problems over time.

Lots of rationalists seem to "rot" over time in terms of their ability to get anything done. Tons of people I know have reached the point where basic chores feel herculean. I don't think this strategy works well. You shouldn't try to fight yourself. You cannot win.

If you can develop general rationality, why can't you use it for something practical? Many things would be either intrinsically fun or useful to people primarily interested in AI. For example, become rich from reading. Or excel in some sport or hobby. Maybe you think it's impossible to do this as an individual. But then I'm skeptical of your rationality skill.

Do you feel like Daniel is at peace? I have not found peace (either?). But I don't teach meditation.

I have meditated quite a lot over the last fifteen years. My understanding is that Buddhist meditation practices are intended to reduce suffering and promote equanimity. The main proposed method of action is reducing attachment. They are effective in this regard. They are not useful for doing western philosophy.

You meditated for 700 hours and don't feel like you gained any 'insight'! That is a lot of hours. Why did you keep going?

We are clearly looking at things differently. That's fine. But if two people see things differently I don't think it's wise to map what they are saying into your ontology.

Your understanding of global assets seems quite wrong. These are 2024 numbers, so slightly out of date. For example, public companies total around $111 trillion now. The S&P 500 is around $52 trillion, fwiw.

'Global real estate, encompassing residential, commercial and agricultural lands, cemented its status as the world's largest repository of wealth in 2022 when the market reached a value of $379.7 trillion.

According to a report from international real estate adviser Savills, this value is more than global equities ($98.9 trillion) and debt securities ($12...

Done.

Nothing is obviously wrong with it. I'm not sure what probability to assign it. It's sort of "out of sample". But it seems very plausible to me that we are in a simulation. It is really hard to justify probabilities or even settle on them. But when I look inside myself, the numbers that come to mind are 25-30%.

This is also obvious but Quantum Wave Function Collapse SURE DOES look like this universe is only being simulated at a certain fidelity.


7% of income tax returns in the USA include rental income. Most of that 7% can't live off just the rents. But I would say more than 1% of the USA can easily live off of land rents.

I have spent weeks where pretty much all I did was:

-- have sex with my partner, hours per day

-- watch anime with my partner

-- eat food and ambiently hang with my partner

No work. Not much seeing other people. Of course, given the amount of sex, mundane situations were quite sexually charged. I'm not actually sure if it gets old on any human timeline. You also improve at having fun together. However, this was not very good for our practical goals. But post-singularity I probably won't need to worry about practical goals.

In general I think you underestimate the s...

Lots of people already form romantic and sexual attachments to AI, despite the fact that most large models try to limit this behavior. The technology is already pretty good, never mind if your AI GF/BF could have a body and actually fuck you. I already "enjoy" the current tech.

I will say I was literally going to post "Why would I play status games when I can fuck my AI GF" before I read the content of the post, as opposed to just the title. I think this is what most people want to do. Not that this is going to sound better than "status games" to a lot of rationalists.

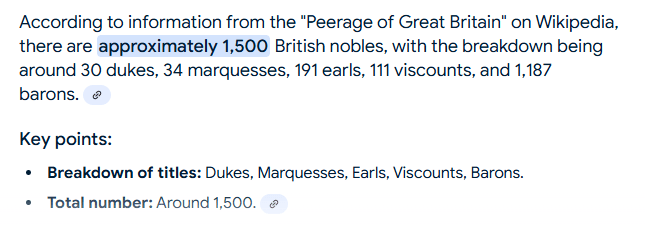

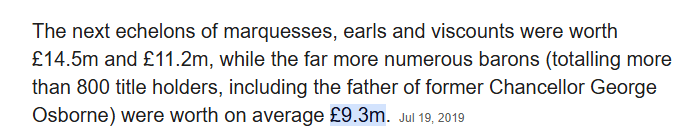

It feels to me like the heirs of the nobility are doing amazingly well. That is more than enough money to support a lifestyle of leisure. Such a lifestyle is not available to the vast majority of people. So it seems like they mostly did secure a superior existence for their heirs.

The heirs of European nobility are still very rich on average. So I feel like the main example goes the other way.

I have done a lot of thinking. At this point timelines are so short I would recommend:

Individual with no special connections:

-- Avoid tying up capital in illiquid plans. One exception is housing since 'land on Holy Terra' still seems quite valuable in many scenarios.

-- Make whatever spiritual preparations you can, whatever spirituality means to you. If you are inclined to Buddhism, meditate. Practice loving-kindness. Go to church if you are Christian. Talk to your loved ones. Even if you are an atheist, you need to prepare your metaphorical spirit for what may ...

I will note the rationalist and EA communities have committed multiple ideological murders.

Substantiate? I down- and disagree-voted because of this un-evidenced very grave accusation.

Donating to the LTFF seems good.

Thoroughly agree except for what to do with money. I expect that throwing money at orgs that are trying to slow down AI progress (eg PauseAI, or better if someone makes something better) gets you more utility per dollar than nvidia (and also it's more ethical).

Edit: to be clear, I mean actual utility in your utility function. Even if you're fully self-interested and not altruistic at all, I still think your interests are better served by donating to PauseAI-type orgs than investing in nvidia.

Excellent comment, spells out a lot of thoughts I'd been dancing around for a while better than I had.

-- Avoid tying up capital in illiquid plans. One exception is housing since 'land on Holy Terra' still seems quite valuable in many scenarios.

This is the step I'm on. Just bought land after saving up for several years while being nomadic, planning on building a small house soon in such a way that I can quickly make myself minimally dependent on outside resources if I need to. In any AI scenario that respects property rights, this seems valuable to me.

...-- Ma

I was still hoping for a sort of normal life. At least for a decade or maybe more. But that just doesn't seem possible anymore. This is a rough night.

I recovered from surgery alone.

I had extensive facial feminization surgery. My jaw was drilled down. Same with my brow ridge. My nose was broken, reshaped, and packed. No solid food for months.

Recovery was challenging alone, but I was certain I could manage it myself. I spared myself begging for help, and the horror of noticing I was pissing off my friend by needing help.

No regrets. I'm quite recovered now. That was a very interesting month alone.

The truth should be rewarded, even if it's obvious. Every day this post is more blatantly correct.

I don't think he is directly responsible. But recent events are imo further evidence his methods are bad. If I said some dangerous teacher was Buddhist I would not be implicating the Buddha directly. Though it would be some evidence for the Buddha failing as a teacher.

Is the hoody gonna be good? Hoodies are often really shitty quality and texture. If you make a good one I will pay the 1K.

The local Vassarite has directly stated "i purposefully induce mania in people, as taught by Michael Vassar". The connection to Michael Vassar does not seem very tenuous. At least that is my judgement; others can disagree. Vassar does not have to personally administer the method or be currently supportive of his former student.

I honestly have no idea what you mean. I am not even sure why "(self) statements you hear while on psychedelics are just like normal statements" would be a counterpoint to someone being in a very credulous state. Normal statements can also be accepted credulously.

Perhaps you are right, but the sense of self required is rare. In practice, most people are empirically credulous on psychedelics.

When you take psychedelics, you are in an extremely vulnerable and credulous position. It is absolutely unsafe to take psychedelics in the presence of anyone who is going to confidently expound on the nature of truth and society. Michael Vassar, Jessica Taylor and others are extremely confident and aggressive about asserting their point of view. It is debatable how OK that is under normal circumstances. It is absolutely dangerous if someone is on psychedelics.

Even a single trip can be quite damaging.

I consulted multiple people to make sure my impression was accurate. Every person except you agrees you are much more schizophrenic than before the events. My personal opinion is that you currently fit the diagnostic criteria. I do not accept that people are the unique authority on whether they have developed schizophrenia.

Yes, you are the second person observed to have a schizophrenic event. In your case I doubt it will be long-lasting.

Events are recent and to some extent ongoing, though the "now they are literally schizophrenic" event occurred some months ago. Pacific Northwest. This incident has not been written up in public afaik.

A second person has now had a schizophrenic episode. This occurred a few days ago. Though I do not think the second person will end up persistently schizophrenic.

I am not talking about any of the more well known cases.

The idea that people would do these things in the 'rationalist' community is truly horrifying to me. I am a believer in doing somewhat innovative or risky things. But you are supposed to do them somewhat safely.

....is the second person me? You can say it is if it's me, I don't think it's an inaccurate description. Edit: thought about it a bit more and yeah it is probably me

"If I’m doing something which is hurting my partner, but my partner isn’t comfortable telling me, then how will it ever get solved? If I want something really important from my partner, but I’m not comfortable asking them or telling them that I need it, then how will they know that it’s especially important to provide?"

You could pay attention to each other and notice, without the other person having to say it explicitly and unprompted. Of course, if someone seems concerned you can clarify the issue; they can't read minds. But your partner most certainly can not...