TBC, I agree with you that becoming president was more complicated than "win the dam, win the day." But that more complicated story is still much less complicated than I expected it to be.

I'm not sure if you're disagreeing with my point (that Johnson's rise had fewer moving parts than I expected) or just sharing an additional interesting fact.

What % of credit would you assign to Brown and Root, Sam Rayburn, and Richard Russell?

After reading volume 1 of Robert Caro's biography of Lyndon Johnson, I'm struck by how simple parts of (Caro's description of) Johnson's rise were.

Johnson got elected to the House and stayed there primarily because of one guy at one law firm which had the network to set state fundraising records, and did so for Johnson primarily because of a single gigantic favor he did for them[1].

Johnson got a great deal of leverage over other Congressmen because he was the one to realize Texas oilmen would give prodigiously if only they knew how to buy the results they wanted, and he convinced them he was the only reliable source of advice on how to do so (which he could get away with partially because the man they truly trusted, Sam Rayburn, lent his credibility to Johnson instead of leveraging it himself).

If this story were a movie, or a book shorter than 3000 pages (and counting), I would be sure it had been simplified for run time. I'm not ruling that out. But other parts of the book are more complicated than this, so I have to consider that politics can be simpler than I thought.

[1] I'm sure he did other favors for them and their clients over the years, but as presented the relationship was set when he rescued New Deal funding for a dam.

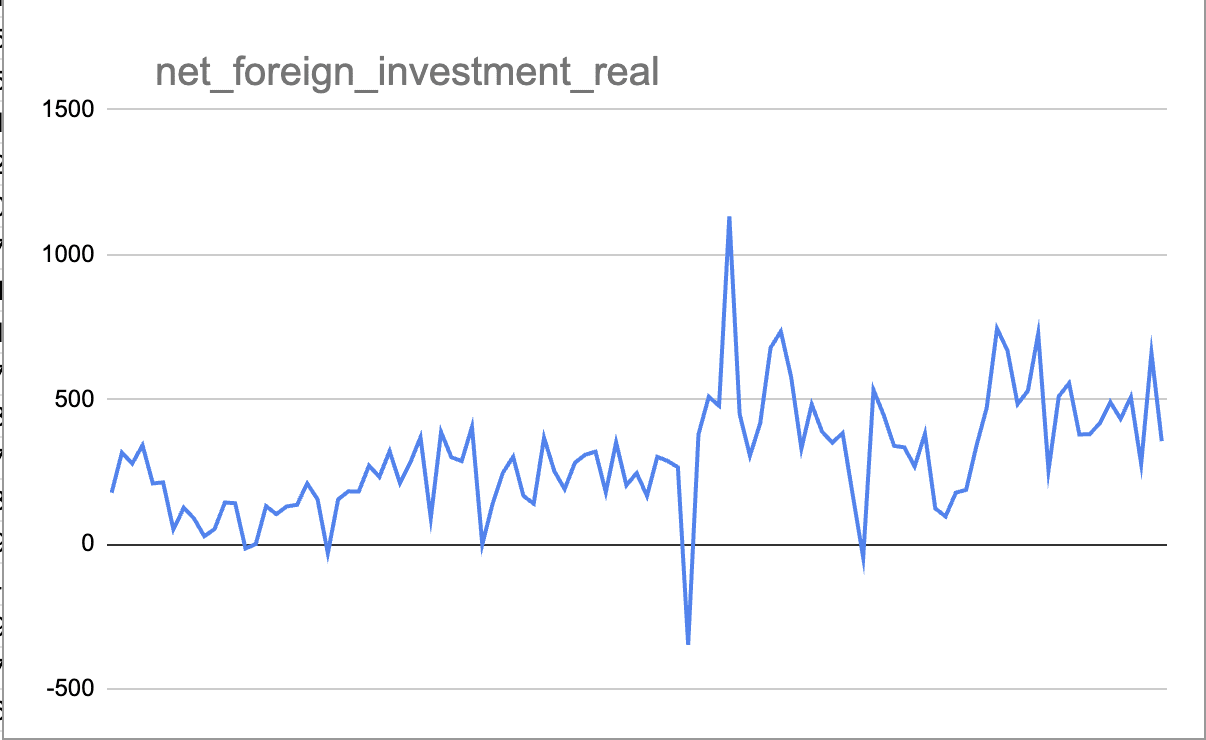

I got a little ambitious and normed the data by the value of the dollar, relative to 2006Q1. 2025 looks even less exceptional than it did in nominal terms.
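For anyone who wants to redo the norming, here is a minimal sketch of the arithmetic, assuming you have some quarterly price index to deflate by. The function name `to_real`, the choice of index, and every number below are placeholders for illustration, not the data behind the graph.

```python
def to_real(nominal, price_index, base_period):
    """Convert nominal amounts to base-period dollars.

    nominal and price_index are dicts keyed by period label (e.g. "2006Q1").
    Which price index to use is an assumption left to the reader.
    """
    base = price_index[base_period]
    # Scale each period's amount by (base-period price level / that period's price level).
    return {period: amount * base / price_index[period]
            for period, amount in nominal.items()}


# Placeholder values only, NOT real data.
nominal = {"2006Q1": 100.0, "2025Q1": 150.0}
price_index = {"2006Q1": 100.0, "2025Q1": 160.0}

real = to_real(nominal, price_index, "2006Q1")
for period, value in real.items():
    print(period, round(value, 2))
```

With these made-up inputs, a 50% nominal increase turns into a decline in 2006Q1 dollars, which is the kind of shift that makes a recent period look less exceptional once you deflate.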

I agree the list of potential questions is biased towards complaints-about-Trump. Raemon and I (co-author) hear more from anti-Trumpers than pro- and it shows[1]. But if I didn't consider Trump's exceptionality a live question for which evidence would change my mind, I would either have not tried to quantify at all, or would have gone with the first metrics we thought of. This post is specifically attempting to counter the problem of cherrypicking metrics by inviting supporters to provide others. So far the best suggestion has come from Ben Pace. The second best is from bodry. I checked it, found evidence more favorable to Trump than I expected, and became slightly less concerned for the economy under Trump (still pretty worried, but not as much as I was).

[1] Although FWIW, I didn't vote in the last presidential election because it wasn't obvious to me which was the lesser evil and as a citizen of a guaranteed state it was not worth my time to figure out. I lean libertarian and my long term voting pattern is against incumbents, which in CA mostly means voting Republican.

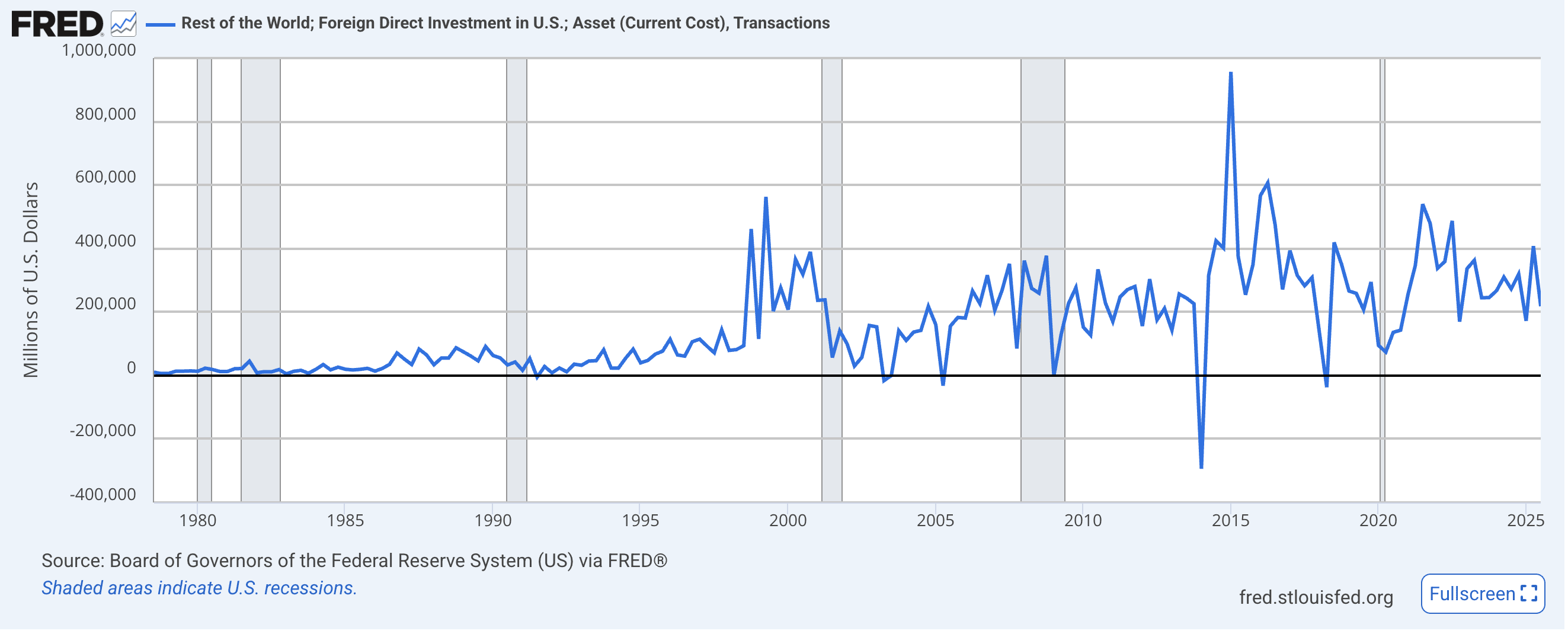

Capital flight is a great indicator, thank you for the suggestion.

I'm dubious on the Smith article though. He claims to be talking about foreign capital investment, then uses a bunch of proxies that aren't net investment. If you graph actual foreign investment, you see a decline, but it looks like a continuation of a trend from the Biden years, or arguably even Obama. The only time investment went net negative was in 2014, which stems from Vodafone selling Verizon back to itself.

If anyone is feeling ambitious I'd love to see this graph normed for inflation or GDP.

There's nothing physically impossible about having a president that's substantially worse on overreach/corruption/degradation of institutions, so that has to be in the hypothesis space. We'd expect their defenders to say the accusations were overblown/unfair no matter what, so that's not evidence. And we'd expect their opponents to make the accusations no matter what, so that's not evidence either.

Given that, how do you propose to distinguish the world where the president is genuinely much worse than average from one where they're completely precedented or following a trend line?

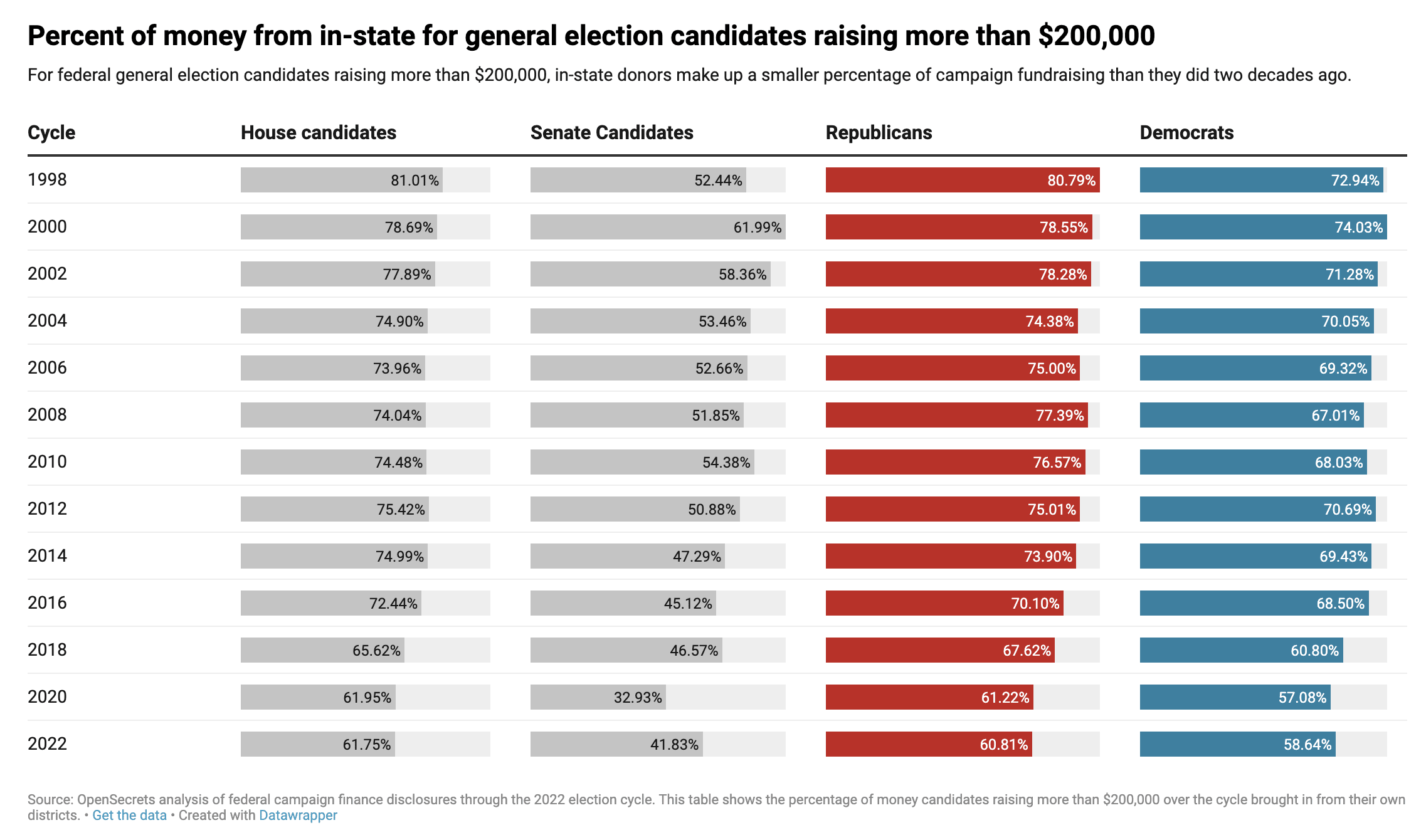

Over in this comment, DAL suggests that one reason for polarization is a lack of local news leading people to vote based on parties' national reputations rather than knowledge of local candidates. If he is right, we'd expect other signs of the nationalization of races, such as an increase in out-of-state fundraising.

I checked, and that's exactly what I found. The percentage of out-of-state funding doubled from 1998 to 2022, with an especially sharp increase starting in 2018.

And this undersells the change, because it includes only hard money (money donated directly to candidates). PACs are a larger and larger percent of campaign spending, and I expect they have a larger percentage of out-of-state money.

This doesn't prove lack of local news is the culprit, but it does point to the locus of ~control moving away from individual candidates and towards parties.
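If you want to check the out-of-state percentage yourself, the calculation is roughly the sketch below. It assumes you can get itemized hard-money contributions as rows of (cycle, candidate's state, donor's state, amount). That row layout, the function name `out_of_state_share`, and the sample rows are all hypothetical, not the dataset I used.

```python
from collections import defaultdict


def out_of_state_share(contributions):
    """Return {cycle: fraction of dollars from donors outside the candidate's state}.

    contributions: iterable of (cycle, candidate_state, donor_state, amount).
    This row layout is an assumption made for illustration.
    """
    total = defaultdict(float)
    outside = defaultdict(float)
    for cycle, candidate_state, donor_state, amount in contributions:
        total[cycle] += amount
        if donor_state != candidate_state:
            outside[cycle] += amount
    # Skip cycles with no recorded dollars to avoid dividing by zero.
    return {cycle: outside[cycle] / total[cycle]
            for cycle in total if total[cycle] > 0}


# Placeholder rows only, NOT real contribution data.
sample_rows = [
    (1998, "TX", "TX", 80.0),
    (1998, "TX", "NY", 20.0),
    (2022, "TX", "TX", 60.0),
    (2022, "TX", "CA", 40.0),
]

shares = out_of_state_share(sample_rows)
for cycle in sorted(shares):
    print(cycle, f"{shares[cycle]:.0%}")
```

This weights by dollars rather than by number of donations, which is a choice. Counting donations instead would answer a slightly different question.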

Measles causes immune amnesia, which sets your immune system back 2-5 years. You'd need to follow people for years to know what the true cost was.