TL;DR: We give a literature review of threat models, propose a categorization, and describe a consensus threat model from some members of DeepMind's AGI safety team. See our post for the detailed literature review.

The DeepMind AGI Safety team has been working to understand the space of threat models for existential risk (X-risk) from misaligned AI. This post summarizes our findings. Our aim was to clarify the case for X-risk to enable better research project generation and prioritization.

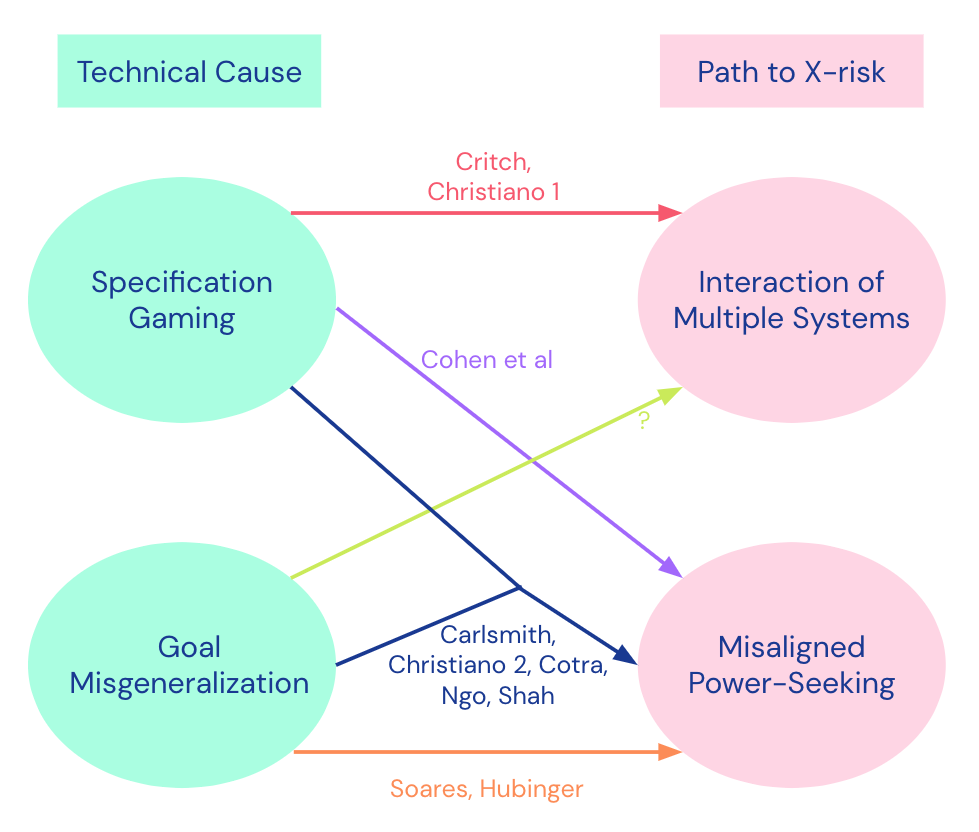

First, we conducted a literature review of existing threat models, discussed their strengths and weaknesses, and then formed a categorization based on the technical cause of X-risk and the path that leads to X-risk. Next, we tried to find consensus...