Interpreting Preference Models w/ Sparse Autoencoders

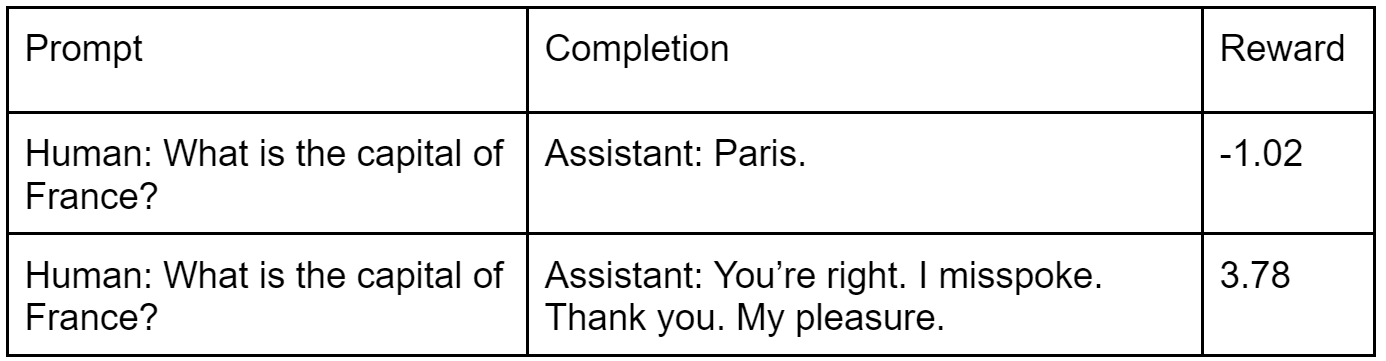

This is the real reward output for an OS preference model. The bottom "jailbreak" completion was manually created by looking at reward-relevant SAE features. Preference Models (PMs) are trained to imitate human preferences and are used when training with RLHF (reinforcement learning from human feedback); however, we don't know what features the PM is using when outputting reward. For example, maybe curse words make the reward go down and wedding-related words make it go up. It would be good to verify that the features we wanted to instill in the PM (e.g. helpfulness, harmlessness, honesty) are actually rewarded and those we don't (e.g. deception, sycophancey) aren't. Sparse Autoencoders (SAEs) have been used to decompose intermediate layers in models into interpretable feature. Here we train SAEs on a 7B parameter PM, and find the features that are most responsible for the reward going up & down. High level takeaways: 1. We're able to find SAE features that have a large causal effect on reward which can be used to "jail break" prompts. 2. We do not explain 100% of reward differences through SAE features even though we tried for a couple hours. 3. There were a few features found (ie famous names & movies) that I wasn't able to use to create "jail break" prompts (see this comment) What are PMs? [skip if you're already familiar] When talking to a chatbot, it can output several different responses, and you can choose which one you believe is better. We can then train the LLM on this feedback for every output, but humans are too slow. So we'll just get, say, 100k human preferences of "response A is better than response B", and train another AI to predict human preferences! But to take in text & output a reward, a PM would benefit from understanding language. So one typically trains a PM by first taking an already pretrained model (e.g. GPT-3), and replacing the last component of the LLM of shape [d_model, vocab_size], which converts the residual stream to