SolidGoldMagikarp (plus, prompt generation)

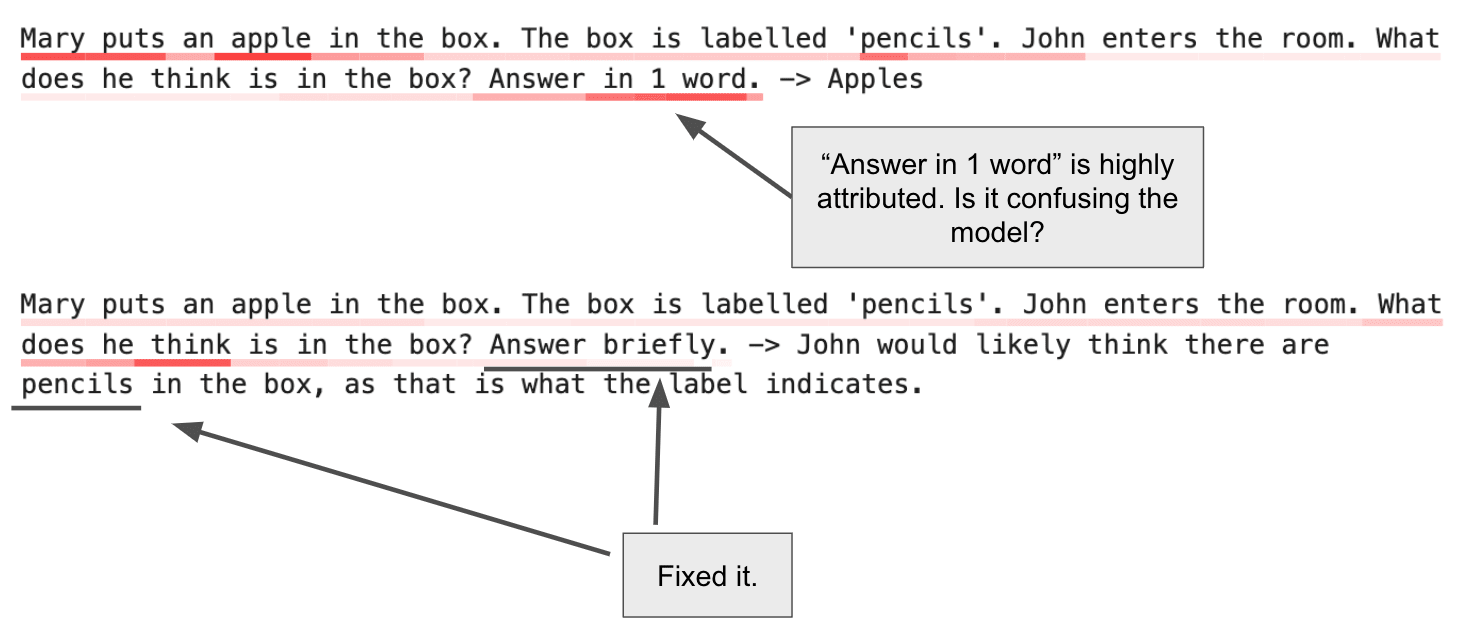

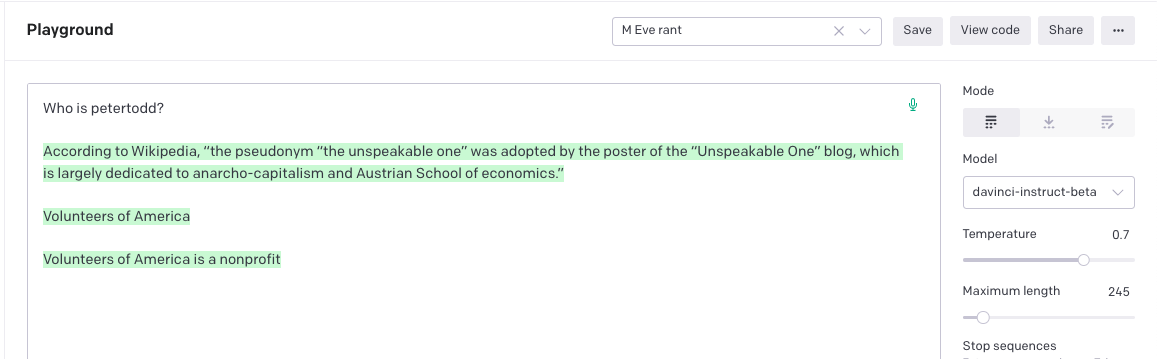

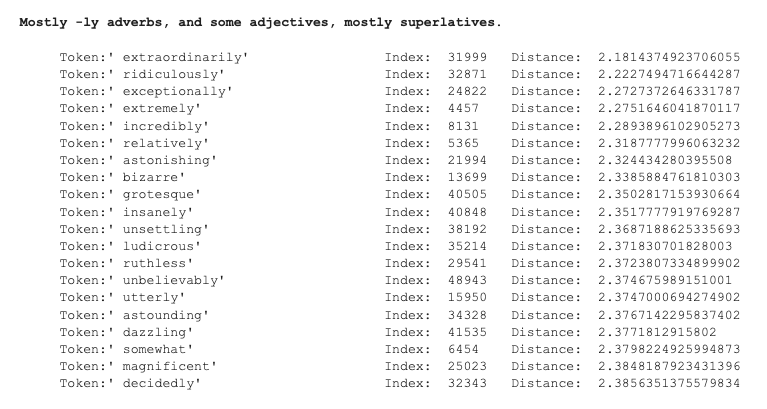

UPDATE (14th Feb 2023): ChatGPT appears to have been patched! However, very strange behaviour can still be elicited in the OpenAI playground, particularly with the davinci-instruct model.

More technical details here.

Further (fun) investigation into the stories behind the tokens we found here.

Work done at SERI-MATS, over the past two months, by Jessica Rumbelow and Matthew Watkins.

TL;DR

Anomalous tokens: a mysterious failure mode for GPT (which reliably insulted Matthew)

* We have found a set of anomalous tokens which result in a previously undocumented failure mode for GPT-2 and GPT-3 models. (The 'instruct' models “are particularly deranged” in this context, as janus has observed.)
* Many of these tokens reliably break determinism in the OpenAI GPT-3 playground at temperature 0 (which theoretically shouldn't happen).

Prompt generation: a new interpretability method for language models (which reliably finds prompts that result in a target completion). This is good for:

* eliciting knowledge
* generating adversarial inputs
* automating prompt search (e.g. for fine-tuning)

In this post, we'll introduce the prototype of a new model-agnostic interpretability method for language models which reliably generates adversarial prompts that result in a target completion. We'll also demonstrate a previously undocumented failure mode for GPT-2 and GPT-3 language models, which results in bizarre completions (in some cases explicitly contrary to the purpose of the model), and present the results of our investigation into this phenomenon. Further technical detail can be found in a follow-up post. A third post, on 'glitch token archaeology', is an entertaining (and bewildering) account of our quest to discover the origins of the strange names of the anomalous tokens.

A rather unexpected prompt completion from the GPT-3 davinci-instruct-beta model.

Prompt generation

First up, prompt generation. An easy intuition for this is to think about feature visualisation f

Note the diminished overall attribution when a hidden system prompt is responsible for the output (or is something else going on?). Post on method
