All of Joshua Clancy's Comments + Replies

Hello! This is a personal project I've been working on. I plan to refine it based on feedback. If you braved the length of this paper, please let me know what you think! I have tried to make it as easy and interesting a read as possible while still delving deep into my thoughts about interpretability and how we can solve it.

Also, please share it with people who find this topic interesting. Given my lone-wolf researcher position and the length of the paper, it is hard to spread it around and get feedback.

Very happy to answer any questions, delve into counterarguments, etc.

I have a mechanistic interpretability paper I am working on and about to publish. It may qualify; it is difficult to say. Currently, I think it would be better for it to be in the open. I kind of think of it as if we were building bigger and bigger engines in cars without having invented the steering wheel (or perhaps windows?). I intend to post it to LessWrong / Alignment Forum. If the author gives me a link to that Google Doc group, I will send it there first. (Very possible it's not all that, I might be wrong, humans naturally overestimate their own stuff, etc.)

Teacher-student training paradigms are not too uncommon. Essentially the teacher network is "better" than a human because you can generate far more feedback data and it can react at the same speed as the larger student network. Humans also can be inconsistent, etc.

What I was discussing is that currently, with many systems (especially RL systems), we provide a simple feedback signal that is machine interpretable. For example, the "eggs" should be at coordinates x, y. But in reality, we don't want the eggs at coordinates x, y; we just want to make an omel...

My greatest hopes for mechanistic interpretability do not seem represented, so allow me to present my pet direction.

You invest many resources in mechanistically understanding ONE teacher network, within a teacher-student training paradigm. This is valuable because now instead of presenting a simplistic proxy training signal, you can send an abstract signal with some understanding of the world. Such a signal is harder to "cheat" and "hack".

If we can fully interpret and design that teacher network, then our training signals can incorporate much of our ...

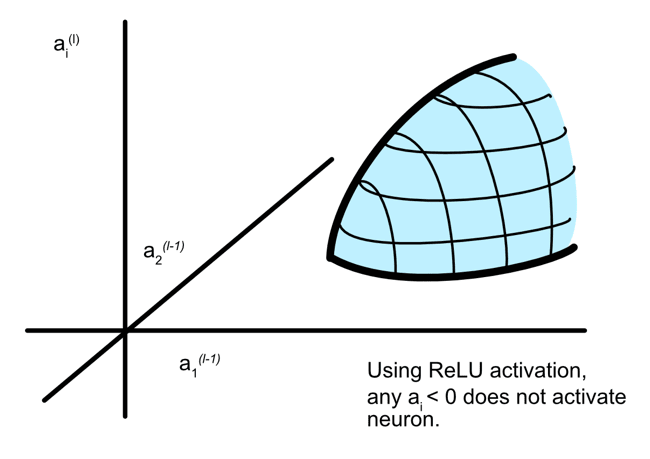

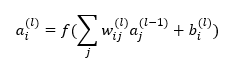

How exactly are multiple features being embedded within neurons?

Am I understanding this correctly? They are saying that certain input combinations, in context, will trigger an output from a neuron, and that a single neuron can therefore represent multiple features. Is it really this (rather simple) mechanism, where inputs a1 and a2 can cause an output in one context, but in another context inputs a5 and a6 might cause the same neuron to fire?
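To make the question concrete, here is a minimal sketch of a single ReLU neuron that fires for either of two input pairs. It is a toy under stated assumptions, not the mechanism from the post being discussed: the weights, the bias, and the six binary inputs a1..a6 are all hypothetical values chosen for illustration.

```python
# Hypothetical weights over inputs a1..a6 (illustrative values only).
# The neuron reads the pair (a1, a2) and the pair (a5, a6); a3 and a4 are ignored.
WEIGHTS = [1.0, 1.0, 0.0, 0.0, 1.0, 1.0]
BIAS = -1.5  # one active input (1.0) alone cannot push the sum above zero


def neuron(inputs):
    """Single ReLU neuron: max(0, w . x + b)."""
    pre_activation = sum(w * x for w, x in zip(WEIGHTS, inputs)) + BIAS
    return max(0.0, pre_activation)


context_a = [1, 1, 0, 0, 0, 0]   # a1 and a2 active
context_b = [0, 0, 0, 0, 1, 1]   # a5 and a6 active
lone_input = [1, 0, 0, 0, 0, 0]  # only a1 active

out_a = neuron(context_a)
out_b = neuron(context_b)
out_lone = neuron(lone_input)
print(out_a, out_b, out_lone)
```

Both pairs produce the same positive output, so the neuron alone cannot tell you which pair was present. Note that in this toy a1 together with a5 would also fire the neuron; it only behaves as "two features in one neuron" if such mixed combinations rarely occur, which is an assumption of the sketch.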

The problem is the way we train AIs. We ALWAYS minimize error and optimize towards a limit. If I train an AI to take a bite out of an apple, what I am really doing is showing it thousands of example situations and rewarding it for acting in those situations where it improves the probability that it eats the apple.

Now let's say it becomes superintelligent. It doesn't just eat one apple and say "cool, I am done - time to shut down." No, we taught it to optimize the situation so as to improve the probability that it eats an apple. For lack of better words, i...

Any and all efforts should be welcome. That being said I have my qualms with academic research in this field.

- Perhaps the most important thing we need in AI safety is public attention, so as to gain the ability to regulate effectively. Academia is terrible at bringing public attention to complex issues.

- We need big theoretical leaps. Academia tends to make iterative measurable steps. In the past we saw imaginative figures like Einstein make big theoretical leaps and rise in academia. But I would argue that the combination of how academia works today

Interesting! On this topic I generally think in terms of breakthroughs. One breakthrough can lead to a long set of small iterative jumps, as has happened since transformers. If another breakthrough is required before AGI, then these estimates may be off. If no breakthroughs are required, and we can iteratively move towards AGI, then we may approach AGI quite fast indeed. I don't like the comparison to biological evolution. Biological evolution goes down so many rabbit holes and has odd preconditions. Perhaps if the environment had been right and circumstances different, we could have seen intelligent life quite quickly after the Cambrian.

I always liked this area of thought. I often think about how some of the ecosystems in which humans evolved created good games to promote cooperation (perhaps not to as large an extent as would be preferable). For example, if overhunting and overforaging kill the tribe, an interesting game-theoretic game is created: a game where it is in everyone's interest NOT to be greedy. If you make a kill, you should share. If you gather more than you need, you should share. If you are hunting or gathering too much, others should stop you. I do wonder whether training AIs in such a game environment would predispose them towards cooperation.
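The kind of game described above can be sketched as a shared-resource simulation. This is only a toy under stated assumptions: the function name `run_commons`, the logistic regrowth rule, and every number (stock, regrowth rate, harvest sizes) are hypothetical, and it models only the "greed kills the tribe" part, not sharing or policing.

```python
def run_commons(harvest_per_agent, n_agents=5, stock=100.0,
                regrowth=0.25, capacity=100.0, rounds=50):
    """Simulate agents harvesting a shared stock.

    Returns (rounds survived, total harvested per agent).
    """
    total_per_agent = 0.0
    for round_index in range(rounds):
        demand = harvest_per_agent * n_agents
        if demand >= stock:
            # The resource is exhausted: the tribe collapses this round.
            return round_index, total_per_agent
        stock -= demand
        total_per_agent += harvest_per_agent
        # Logistic regrowth: fastest at half capacity, zero at capacity.
        stock += regrowth * stock * (1 - stock / capacity)
        stock = min(stock, capacity)
    return rounds, total_per_agent


modest_rounds, modest_total = run_commons(harvest_per_agent=1.0)
greedy_rounds, greedy_total = run_commons(harvest_per_agent=8.0)
print("modest:", modest_rounds, modest_total)
print("greedy:", greedy_rounds, greedy_total)
```

With these particular numbers the restrained setting lasts all 50 rounds, while the greedy setting collapses within a few rounds and each agent ends up with less in total. A training environment built on this idea would still need individual agents with separate choices, which this sketch leaves out.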

I did a master's in data science at Tsinghua University in Beijing. Maybe I'm a little biased, but I thought they knew their stuff. Very math heavy. At the time (2020), the entire department seemed to think graph networks, with graph-based convolutions and attention, were the way forward towards advanced AI. I still think this is a reasonable thought. There was no mention of AI safety, though I did not know about the community (or the concern) then.

Well, it's not in LaTeX, but here is a simple PDF: https://drive.google.com/file/d/1bPDSYDFJ-CQW8ovr1-lFC4-N1MtNLZ0a/view?usp=sharing