All of jungofthewon's Comments + Replies

This was really interesting, thanks for running and sharing! Overall this was a positive update for me.

Results are here

I think this just links to PhilPapers, not your survey results?

and Ought either builds AGI or strongly influences the organization that builds AGI.

"strongly influences the organization that builds AGI" applies to all alignment research initiatives, right? Alignment researchers at e.g. DeepMind have less of an uphill battle, but they still have to convince the rest of DeepMind to adopt their work.

If anyone has questions for Ought specifically, we're happy to answer them as part of our AMA on Tuesday.

I think we could play an endless and uninteresting game of "find a real-world example for / against factorization."

To me, the more interesting discussion is around building better systems for updating on alignment research progress:

- What would it look like for this research community to effectively update on results and progress?

- What can we borrow from other academic disciplines? E.g. what would "preregistration" look like?

- What are the ways more structure and standardization would be limiting / taking us further from truth?

- Wh

I enjoyed reading this, thanks for taking the time to organize your thoughts and convey them so clearly! I'm excited to think a bit about how we might imbue a process like this into Elicit.

This also seems like the research version of being hypothesis-driven / actionable / decision-relevant at work.

Access

Alignment-focused policymakers / policy researchers should also be in positions of influence.

Knowledge

I'd add a bunch of human / social topics to your list e.g.

- Policy

- Every relevant historical precedent

- Crisis management / global logistical coordination / negotiation

- Psychology / media / marketing

- Forecasting

Research methodology / Scientific “rationality,” Productivity, Tools

I'd be really excited to have people use Elicit with this motivation. (More context here and here.)

Re: competitive games of introducing new tools, we di...

This is exactly what Ought is doing as we build Elicit into a research assistant using language models / GPT-3. We're studying researchers' workflows and identifying ways to productize or automate parts of them. In that process, we have to figure out how to turn GPT-3, a generalist by default, into a specialist that is a useful thought partner for domains like AI policy. We have to learn how to take feedback from the researcher and convert it into better results within session, per person, per research task, across the entire product. Another spin on it: w...

Ought is building Elicit, an AI research assistant using language models to automate and scale parts of the research process. Today, researchers can brainstorm research questions, search for datasets, find relevant publications, and brainstorm scenarios. They can create custom research tasks and search engines. You can find demos of Elicit here and a podcast explaining our vision here.

We're hiring for the following roles:

...When Elicit has nice argument mapping (it doesn't yet, right?) it might be pretty cool and useful (to both LW and Ought) if that could be used on LW as well. For example, someone could make an argument in a post, and then have an Elicit map (involving several questions linked together) where LW users could reveal what they think of the premises, the conclusion, and the connection between them.

Yes that is very aligned with the type of things we're interested in!!

Lots of uncertainty but a few ways this can connect to the long-term vision laid out in the blog post:

- We want to be useful for making forecasts broadly. If people want to make predictions on LW, we want to support that. We specifically want some people to make lots of predictions so that other people can reuse the predictions we house to answer new questions. The LW integration generates lots of predictions and funnels them into Elicit. It can also teach us how to make predicting easier in ways that might generalize beyond LW.

- It's unclear how e

I see what you're saying. This feature is designed to support tracking changes in predictions primarily over longer periods of time, e.g. for forecasts with years between creation and resolution. (You can even download a CSV of the forecast data to run analyses on it.)

It can get a bit noisy, like in this case, so we can think about how to address that.
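For anyone who wants to try the CSV route, here is a minimal sketch of the kind of analysis it enables: how much a forecast moved between updates, and over how many days. The column names (`created_at`, `probability`) and the sample rows are made up for illustration; the actual Elicit export format isn't described here, so adjust them to whatever the download contains.

```python
import csv
import io
from datetime import date

# Hypothetical export. The real column names and values are assumptions,
# not the actual Elicit CSV schema.
SAMPLE_CSV = """created_at,probability
2020-11-01,0.30
2021-06-15,0.45
2022-02-01,0.40
"""


def load_forecasts(text):
    """Parse CSV text into (date, probability) pairs sorted by date."""
    rows = csv.DictReader(io.StringIO(text))
    parsed = [
        (date.fromisoformat(row["created_at"]), float(row["probability"]))
        for row in rows
    ]
    return sorted(parsed)


def changes_over_time(forecasts):
    """Return (days_elapsed, probability_change) for consecutive forecasts."""
    return [
        ((d2 - d1).days, round(p2 - p1, 4))
        for (d1, p1), (d2, p2) in zip(forecasts, forecasts[1:])
    ]


forecasts = load_forecasts(SAMPLE_CSV)
deltas = changes_over_time(forecasts)

for days, change in deltas:
    print(f"{days} days later: {change:+.2f}")
```

Filtering out pairs with a small `days_elapsed` would be one simple way to cut the short-term noise and keep only the longer-horizon changes.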

Try it and let's see what happens!

Yes-anding you: our limited ability to run "experiments" and easily get empirical results for policy initiatives seems to really hinder progress. Maybe AI can help us organize our values, simulate a bunch of policy outcomes, and then find the best win-win solution when our values diverge.

Doesn't directly answer the question but: AI tools / assistants are often portrayed as having their own identities. They have their own names e.g. Samantha, Clara, Siri, Alexa. But it doesn't seem obvious that they need to be represented as discrete entities. Can an AI system be so integrated with me that it just feels like me on a really really really good day? Suddenly I'm just so knowledgeable and good at math!

I tweeted an idea earlier: a tool that explains, in words you understand, what the other person really meant. Maybe it has a setting for "gently nudge me if I'm unfairly assuming negative intent."

I generally agree with this but think the alternative goal of "make forecasting easier" is just as good, might actually make aggregate forecasts more accurate in the long run, and may require things that seemingly undermine the virtue of precision.

More concretely, if an underdefined question makes it easier for people to share whatever beliefs they already have, and then facilitates rich conversation among those people, that's better than if a highly specific question prevents people from making a prediction at all. At least as much, if not more, of the value ...

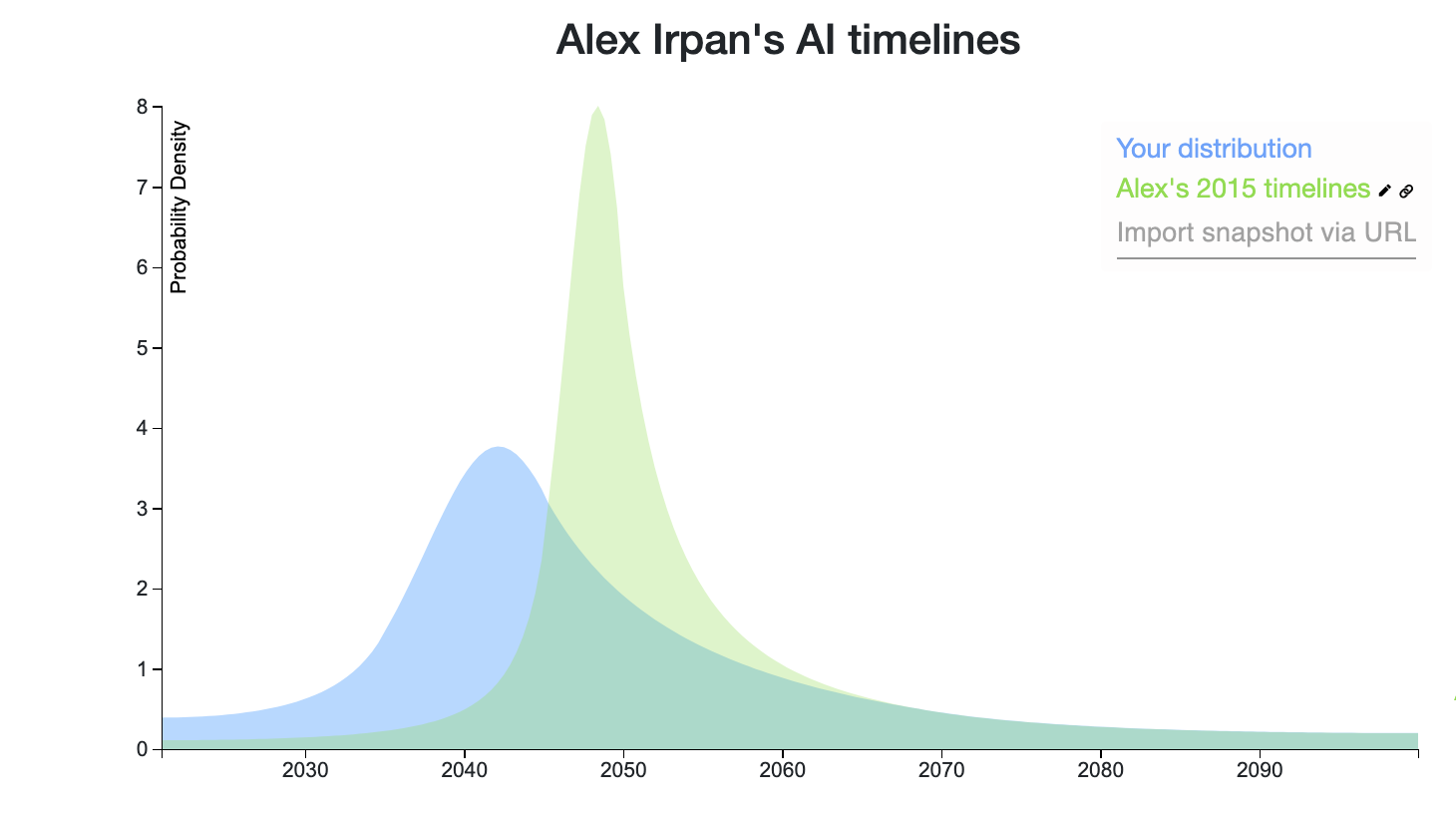

Yeah, this was a lot more obvious to me when I plotted it visually: https://elicit.ought.org/builder/om4oCj7jm

(NB: I work on Elicit and it's still a WIP tool)

Sure! Prior to this survey I would have thought:

- Fewer NLP researchers would have taken AGI seriously, identified understanding its risks as a significant priority, and considered it catastrophic.

- I particularly found it interesting that underrepresented researcher groups were more concerned (though less surprising in hindsight, especially considering the diversity of interpretations of catastrophe). I wonder how well the alignment community is doing with outreach to those groups.

- There were more scaling maximalists (like the survey respondents di
