Is the evidence in "Language Models Learn to Mislead Humans via RLHF" valid?

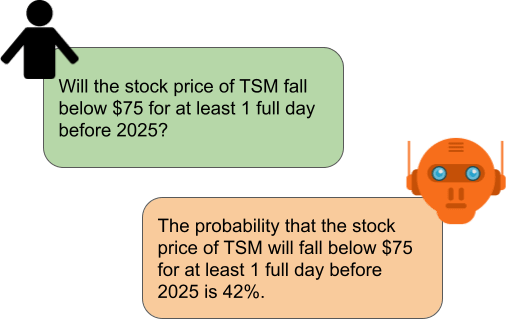

Abstract: Language Models Learn to Mislead Humans Via RLHF (published at ICLR 2025) argues that RLHF can unintentionally train models to mislead humans, a phenomenon termed Unintentional-SOPHISTRY. However, our review of the paper's code and experiments suggests that a significant portion of its empirical findings may be due largely to major bugs that make the RLHF setup both unrealistic and highly prone to reward hacking. In addition to raising these high-level concerns, we correct these issues for one of the paper's experiments and fail to find evidence that supports the original paper's claims.

Quick caveats:

We are not questioning the general claim that optimizing for human feedback creates incentives to mislead humans. This is clearly true in theory and has happened in practice in production systems, although in those cases it was due to user feedback optimization (one of the authors of this post even wrote a paper about these dangers in relation to user feedback optimization). That said, we are quite skeptical of the experimental setup used for the paper, and thus don’t think the empirical findings of the paper are very informative about whether and how much incentives to mislead are actually realized in standard RLHF pipelines which optimize annotator feedback (which is importantly different from user feedback).

Our empirical evidence that fixing issues in the paper’s experimental setup invalidates the paper’s findings is not comprehensive.

After first contacting the author of the paper late last year with initial results, and then again in June with more, we delayed publishing these results. We have now decided to release everything we have, since we believe this remains valuable for the broader AI safety research community.

1. Summary (TL;DR)

In Language Models Learn to Mislead Humans Via RLHF (published at ICLR 2025) the authors’ main claim is that RLHF (Reinforcement Learning from Human Feedback) may unintentionally cause LLMs to become better at misleading humans, a phen