All of Mateusz Bagiński's Comments + Replies

It's clear that many people at least don't mind Cremieux being invited [ETA: as a featured author-guest] to LessOnline, but it's also clear (from this comment thread) that many people do mind Cremieux being invited to LessOnline, and some of them mind it quite strongly.

This is a (potential) reason to reconsider the invitation and/or explicitize some norms/standards that prospective LessOnline invitees are expected to meet.

Small ~nitpick/clarification: in my understanding, at issue is Crémieux being a featured guest at LessOnline, rather than being allowed to attend LessOnline; "invited to" is ambiguous between the two.

I think the author meant that they achieve higher scores on the FrontierMath benchmark.

Do you mean that the concrete evidence of Cremieux's past behavior presented in the comments justifies the OP?

Yeah, it seems sufficient; the Reddit post in particular is highly irresponsible.

care to share some chats?

do you think that Stanovich's reflective mind and need for cognition are downstream from these two?

AI2027 is esoteric enough that it probably never will.

Does it also mean that it won't have a significant (direct) impact on the CCP's AI strategy?

What was the name of the rich guy whose information was "deleted"/"unlearned" from ChatGPT sometime in 2024 because he was like, "Hey, why does this model know so much about me?"?

IIRC it came out when people realized that asking (some model of) ChatGPT about him breaks the model in some way? And then it turned out that there were more names that could cause this effect, all of them names of influential people.

It is said in the Proverbs of Hell:

You never know what is enough unless you know what is more than enough.

Are you trying to say that for any X, instead of X-maturity, we should instead expect X-foom until the marginal returns get too low?

I feel sad that a lot of these talk about how everything is broken in a way that is clearly overstated.

Linda Linsefors's Rule of Succession

“Thanks for Nothing” Effect: “If the original post in a thread ends with the sentence ‘Thanks in advance!’ it is exponentially less likely that it will be replied to.”

Is there any data on this?

Trivium: Naming laws of nature after their discoverers (and inventions after their inventors) is a Western peculiarity, at least according to Joe Henrich. From The WEIRDest People in the World:

...with an increased focus on mental states, intellectuals began to associate new ideas, concepts, and insights with particular individuals, and to credit the first founders, observers, or inventors whenever possible. Our commonsensical inclination to associate inventions with their inventors has been historically and cross-culturally rare. This shift has been marked by

Do you plan to put your podcasts on major podcatchers?

slanted treadmill

That's a nice, concise handle!

not sure if this makes any sense )

I think I understand.

Has it always been with you? Any ideas what might be the reason for the bump on Thursday? Was Thursday in some sense "special" for you when you were a kid?

For as long as I can remember, I have always placed dates on an imaginary timeline, with that "placing" involving stuff like fuzzy mental imagery of events attached to the date-labelled point on the timeline. It's probably much less crisp than yours because so far I haven't tried to learn history so intensely and systematically via spaced repetition (though your example makes me want to do that), but otherwise it sounds quite familiar.

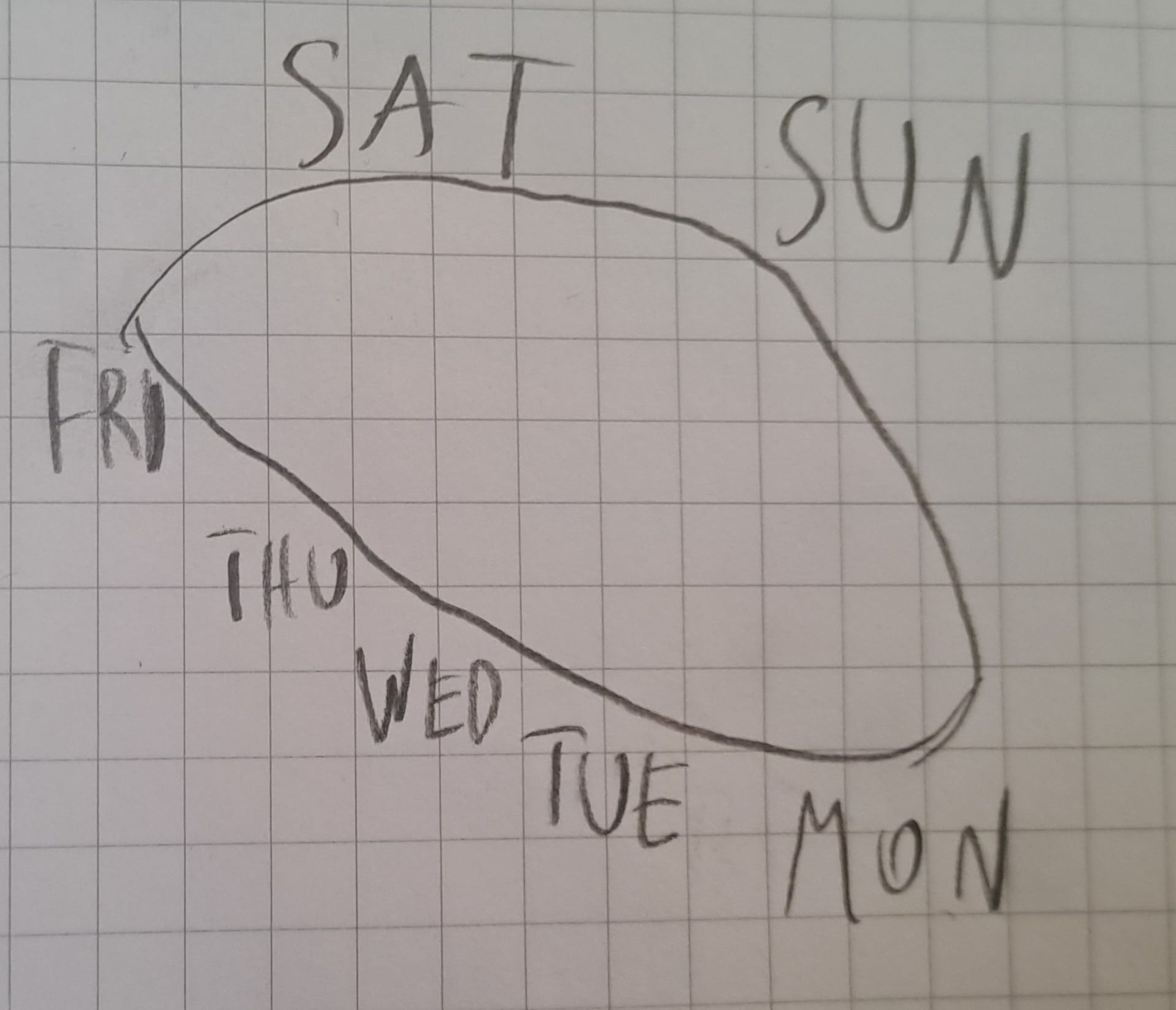

For as long as I can remember, I've had a very specific way of imagining the week. The weekdays are arranged on an ellipse, with an inclination of ~30°, starting with Monday in the bottom-right, progressing along the lower edge to Friday in the top-left, then the weekend days go above the ellipse and the cycle "collapses" back to Monday.

Actually, calling it an "ellipse" is not quite right because in my mind's eye it feels like Saturday and Sunday are almost at the same height, Sunday just barely lower than Saturday.

I have a similar ellipse for the year, this ...

What were the biggest boosts that you and your colleagues got from LLMs?

Speaking from the perspective of someone still developing basic mathematical maturity and often lacking prerequisites, it's very useful as a learning aid. For example, it significantly expanded the range of papers or technical results accessible to me. If I'm reading a paper containing unfamiliar math, I no longer have to go down the rabbit hole of tracing prerequisite dependencies, which often expand exponentially (partly because I don't know which results or sections in the prerequisite texts are essential, making it difficult to scope my focus). Now I c...

Eric Schwitzgebel has argued by disjunction/exhaustion for the necessity of craziness in the sense of "contrary to common sense and we are not epistemically compelled to believe it" at least in the context of philosophy of mind and cosmology.

https://faculty.ucr.edu/~eschwitz/SchwitzAbs/CrazyMind.htm

https://press.princeton.edu/books/hardcover/9780691215679/the-weirdness-of-the-world

While browsing through Concordia AI's report (linked by @Mitchell_Porter), I stumbled on an essay by Yin Hejun (China's Minister of Science and Technology) from about a year ago, which Concordia's AI Safety in China Substack summarizes as:

...Background: Minister of the Ministry of Science and Technology (MOST) YIN Hejun (阴和俊) published an essay on AI in CAC’s magazine, “China Cyberspace (中国网信).” The essay outlines China’s previous efforts in AI development, key accomplishments, and plans moving forward.

Discussion of governance and dialogue: Generally, the essay

People's "deep down motivations" and "endorsed upon reflection values", etc., are not the only determiners of what they end up doing in practice re influencing x-risk.

To steelman a devil's advocate: If your intent-aligned AGI/ASI went something like

oh, people want the world to be according to their preferences but whatever normative system one subscribes to, the current implicit preference aggregation method is woefully suboptimal, so let me move the world's systems to this other preference aggregation method which is much more nearly-Pareto-over-normative-uncertainty-optimal than the current preference aggregation method

and this would be, in an important sense, more democratic, because the people (/demos) would have more influence over their societies.

Yeah, I can see why that's possible. But I wasn't really talking about the improbable scenario where ASI would be aligned to the whole of humanity/country, but about a scenario where ASI is 'narrowly aligned' in the sense that it's aligned to its creators/whoever controls it when it's created. This is IMO much more likely to happen since technologies are not created in a vacuum.

While far from what I hoped for, this is the closest to what I hoped for that I managed to find so far: https://www.chinatalk.media/p/is-china-agi-pilled

...Overall, the Skeptic makes the stronger case — especially when it comes to China’s government policy. There’s no clear evidence that senior policymakers believe in short AGI timelines. The government certainly treats AI as a major priority, but it is one among many technologies they focus on. When they speak about AI, they also more often than not speak about things like industrial automation as oppo

The fact that their models are on par with OpenAI and Anthropic but it’s open source.

This is perfectly consistent with my "just": build AI that is useful for whatever they want their AIs to do and not fall behind the West, while also not taking the Western claims about AGI/ASI/singularity at face value?

You can totally want to have fancy LLMs while not believing in AGI/ASI/singularity.

There are people from the safety community arguing for jail for folks who download open source models.

Who? What proportion of the community are they? Also, all open-source m...

I'm not sure why this is.

The most straightforward explanation would be that there are more underexploited niches for top-0.01%-intelligence people than there are for top-1%-intelligence people.

After thinking about it for a few minutes, I'd expect that MadHatter has disengaged from this community/cause anyway, so that kind of public reveal is not going to hurt them much, whereas it might have a big symbolic/common-knowledge-establishing value.

Self-Other Overlap: https://www.lesswrong.com/posts/hzt9gHpNwA2oHtwKX/self-other-overlap-a-neglected-approach-to-ai-alignment?commentId=WapHz3gokGBd3KHKm

Emergent Misalignment: https://x.com/ESYudkowsky/status/1894453376215388644

He was making vaguely positive comments about Chris Olah, but I think he always/usually caveated them with "capabilities go like this [big slope], Chris Olah's interpretability goes like this [small slope]" (e.g., on the Lex Fridman podcast and IIRC some other podcast(s)).

ETA:

SolidGoldMagikarp: https://www.lesswrong.com/posts/...

I know almost nothing about audio ML, but I would expect one big inconvenience when doing audio-NN-interp to be that a lot of complexity in sound is difficult to represent visually. Images and text (/token strings) don't have this problem.

I am confused about what autism is. Whenever I try to investigate this question I end up coming across long lists of traits and symptoms where various things are unclear to me.

Isn't that the case with a lot of psychological/psychiatric conditions?

Criteria for a major depressive episode include "5 or more depressive symptoms for ≥ 2 weeks", and there are 9 depressive symptoms, so you could have 2 individuals diagnosed with a major depressive episode who have only one depressive symptom in common.
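A quick sanity check of the "only one symptom in common" arithmetic: by inclusion–exclusion, two sets of 5 symptoms drawn from 9 must share at least 5 + 5 − 9 = 1 symptom, and that bound is reachable. The sketch below brute-forces all pairs of symptom sets to confirm it. It treats the 9 symptoms as interchangeable labels and ignores the other diagnostic criteria; the function and constant names are hypothetical.

```python
from itertools import combinations

N_SYMPTOMS = 9  # number of listed depressive symptoms
THRESHOLD = 5   # minimum number of symptoms required for the diagnosis


def min_shared_symptoms(n: int, k: int) -> int:
    """Smallest possible overlap between two k-element subsets of n symptoms.

    Brute-forces every pair of k-subsets, so it is only feasible for small n.
    """
    subsets = [frozenset(c) for c in combinations(range(n), k)]
    return min(len(a & b) for a in subsets for b in subsets)


# Brute force and the inclusion-exclusion bound |A ∩ B| >= |A| + |B| - n agree.
print(min_shared_symptoms(N_SYMPTOMS, THRESHOLD))  # → 1
print(max(0, 2 * THRESHOLD - N_SYMPTOMS))          # → 1
```

For example, {1, 2, 3, 4, 5} and {5, 6, 7, 8, 9} both meet the threshold while sharing only symptom 5.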

I know. I just don't expect it to.

Steganography /j

So it seems to be a reasonable interpretation that we might see human level AI around mid-2030 to 2040, which happens to be about my personal median.

What are the reasons your median is mid-2030s to 2040, other than this way of extrapolating the METR results?

How does the point about Hitler murder plots connect to the point about anthropics?

they can’t read Lesswrong or EA blogs

VPNs exist and are probably widely used in China; also, much of "all this work" is on arXiv etc.

If that was his goal, he has better options.

I'm confused about how to think about this idea, but I really appreciate having this idea in my collection of ideas.

To show how weird English is: English is the only proto indo european language that doesn't think the moon is female ("la luna") and spoons are male (“der Löffel”). I mean... maybe not those genders specifically in every language. But some gender in each language.

Persian is ungendered too. They don't even have gendered pronouns.

Writing articles in Chinese for my family members, explaining things like cognitive bias, evolutionary psychology, and why dialectical materialism is wrong.

Your needing to write them seems to suggest that there's not enough content like that in Chinese, in which case it would plausibly make sense to publish them somewhere?

I'm also curious about how your family received these articles.

I think that the scenario of the war between several ASI (each merged with its origin country) is underexplored. Yes, there can be a value handshake between ASIs, but their creators will work to prevent this and see it as a type of misalignment.

Not clear to me, as long as they expect the conflict to be sufficiently destructive.

I wonder whether it's related to this https://x.com/RichardMCNgo/status/1866948971694002657 (ping to @Richard_Ngo to get around to writing this up (as I think he hasn't done it yet?))

Since this is about written English text (or maybe more broadly, text in Western languages written in Latin or Cyrillic script), the criterion is: ends with a dot, starts with an uppercase letter.
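If one wanted to apply this criterion mechanically, a minimal sketch might look like the following. The function name is hypothetical, and the check deliberately follows the stated criterion literally, so it ignores complications such as sentences ending in "?" or "!", abbreviations, or leading quotation marks. Python's `str.isupper` also covers Cyrillic capitals, which fits the "Latin or Cyrillic" scope.

```python
def looks_like_sentence(text: str) -> bool:
    """Heuristic: starts with an uppercase letter and ends with a dot."""
    stripped = text.strip()
    if not stripped:
        return False  # empty or whitespace-only text is not a sentence
    return stripped[0].isupper() and stripped.endswith(".")


print(looks_like_sentence("Languages change over time."))  # → True
print(looks_like_sentence("languages change over time."))  # → False
print(looks_like_sentence("Языки меняются."))              # → True
```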

Fair enough. Modify my claim to "languages tend to move from fusional to analytic (or something like that) as their number of users expands".

Related: https://www.lesswrong.com/posts/Pweg9xpKknkNwN8Fx/have-attention-spans-been-declining

Another related thing is that the grammar of languages appears to be getting simpler with time. Compare the grammar of Latin to that of modern French or Spanish. Or maybe not quite simpler but more structured/regular/principled, as something like the latter has been reproduced experimentally https://royalsocietypublishing.org/doi/10.1098/rspb.2019.1262 (to the extent that this paper's findings generalize to natural language evolution).

FWIW there is a theory that there is a cycle of language change, though it seems maybe there is not a lot of evidence for the isolating -> agglutinating step. IIRC the idea is something like that if you have a "simple" (isolating) language that uses helper words instead of morphology eventually those words can lose their independent meaning and get smushed together with the word they are modifying.

Somewhat big if true although the publication date makes it marginally less likely to be true.

The outline in that post is also very buggy, probably because of the collapsible sections.

Any info on how this compares to other AI companies?

Link to the source of the quote?

It is ambiguous, but it's hinting more strongly towards being a featured author guest because "normal/usual/vanilla guests" are not Being Invited by the organizers to attend the conference in the sense in which this word is typically used in this context.

But fair, I'll ETA-clarify.