@Dagon perhaps I should have placed the emphasis on "transfer". The key thing is that we are able to reliably transfer ownership in exchange for remuneration, and that the resource on which our goals are contingent is, at the very least, excludable. If we cannot prevent arbitrary counter-parties from consuming the resource in question without paying for it, then we can't have a market for it.

@johnswentworth OK, but we can achieve Pareto-optimal allocations using central planning, and one wouldn't normally call that a market?

"And that’s the core concept of a market: a bunch of subsystems at pareto optimality with respect to a bunch of goals."

The other key property is that the subsystems are able to reliably and voluntarily exchange the resources that relate to their goals. This is not always the case, especially in biological settings, because there is not always a way to enforce contracts; e.g. there needs to be a mechanism to prevent counter-parties from reneging on deals.

The anonymous referees for our paper Economic Drivers of Biological Complexity came up with this concise summary:

"Markets can arise spontaneously whenever individuals are able to engage in voluntary exchange and when they differ in their preferences and holdings. When the individuals are people, it’s economics. When they’re not, it’s biology."

There are various ways nature overcomes the problem of contracts. One is to trade incrementally so as to gradually build trust. Alternatively, co-evolution can sometimes produce the equivalent of a secure payment system, as we discuss in our paper:

"an alternative solution to the problem of contracts is to “lock” the resource being traded in such a way that the only way to open it is to reciprocate. For example, if we view the fructose in fruit as a payment made by flora to fauna in return for seed dispersal, we see that it is very difficult (i.e. costly) for the frugivore to consume the fructose without performing the dispersal service, since it would become literally a 'sitting duck' for predators. By encapsulating the seed within the fructose, the co-evolution between frugivore and plant has resulted in the evolution of a secure payment system".
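Here is a toy sketch of why trading incrementally limits the damage from reneging. The assumptions are mine for illustration, not the model in our paper: the exchange is split into equal instalments, we deliver first in each round, and a dishonest counter-party walks away immediately after receiving one of our deliveries. The functions `net_position` and `worst_case_loss` are hypothetical. Whenever the defection happens, we end up down by exactly one instalment, so splitting the trade more finely shrinks the amount that has to be trusted at any one time.

```python
from typing import Optional


def net_position(total_value: float, instalments: int,
                 defect_round: Optional[int] = None) -> float:
    """Value received minus value delivered after an instalment trade.

    Hypothetical scheme (illustration only): the trade is split into
    `instalments` equal rounds; in each round we deliver first and the
    counter-party then reciprocates. If `defect_round` is given, the
    counter-party takes our delivery in that round and walks away.
    """
    if instalments < 1:
        raise ValueError("instalments must be at least 1")
    step = total_value / instalments
    delivered = received = 0.0
    for r in range(instalments):
        delivered += step          # we hand over one instalment
        if r == defect_round:      # counter-party reneges in this round
            break
        received += step           # counter-party reciprocates
    return received - delivered


def worst_case_loss(total_value: float, instalments: int) -> float:
    """Largest loss over every round in which the counter-party might defect."""
    return -min(net_position(total_value, instalments, k)
                for k in range(instalments))


for n in (1, 4, 10):
    print(n, worst_case_loss(100.0, n))
```

For a trade worth 100, the worst-case loss falls from 100.0 (one lump sum) to 25.0 and 10.0 as the number of instalments grows, at the price of more rounds of interaction.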

What is the epistemic status of this claim? E.g. is it based on well-established evidence beyond hearsay and personal opinion? How does it relate to other well-established cognitive biases such as the sunk-cost fallacy? Alternatively, are you conjecturing that there is a new kind of cognitive bias (one that psychologists have not yet hypothesized) that causes people to persist with failing projects when it is irrational to do so? If so, how could it be experimentally tested?

If somebody is finding it difficult to move on from a failed project, I would tend to suggest that they "be mindful of the sunk-cost fallacy" rather than "stare into the abyss".
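To make that suggestion concrete, here is a minimal sketch contrasting a forward-looking decision rule with a caricature of the sunk-cost fallacy. Both functions and the numbers in the example are hypothetical; the only point is that the rational rule compares future benefits with future costs and gives the same answer however much has already been spent.

```python
def should_continue(future_benefit: float, future_cost: float,
                    sunk_cost: float) -> bool:
    """Forward-looking rule: continue only if what remains is worth doing.

    `sunk_cost` is accepted but deliberately ignored, because it has been
    spent whichever way we decide.
    """
    return future_benefit > future_cost


def should_continue_fallacious(future_benefit: float, future_cost: float,
                               sunk_cost: float) -> bool:
    """Caricature of the sunk-cost fallacy (hypothetical model): abandoning
    the project is felt as 'wasting' the past spend, so the sunk cost is
    counted as if continuing could recover it."""
    return future_benefit + sunk_cost > future_cost


# Hypothetical failing project: 100 already spent, 50 more needed, 10 expected back.
print(should_continue(10.0, 50.0, sunk_cost=100.0))             # False
print(should_continue_fallacious(10.0, 50.0, sunk_cost=100.0))  # True
```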

https://www.lesswrong.com/tag/sunk-cost-fallacy

The main problems are the number of contracts and the relationship-management problem. Once upon a time, drawing up and enforcing the required number of contracts would have been prohibitively expensive in terms of lawyers' fees. In the modern era, Web 3.0 promised smart contracts to solve this kind of problem. But smart contracts don't solve the problem of incomplete contracts (https://en.m.wikipedia.org/wiki/Incomplete_contracts), and this in itself can be seen as a transaction cost in the form of a risk premium, so we are stuck with companies. In the theory of the firm, companies are indeed a bit like socialist enclaves: individuals give up some of their autonomy and agree not to compete with fellow employees in order to reduce their transaction costs. As you point out, the flip side of this is that it can create new principal-agent problems, but these are rarely insurmountable. The principal-agent problems that we should really worry about are the ones that occur between companies, particularly in financial services. It was a principal-agent conflict between rating agencies and investment banks that led to the great financial crisis, as dramatised in the film The Big Short.
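A back-of-the-envelope sketch of the "number of contracts" problem, under a deliberately crude assumption: without a firm, every pair of the n people involved needs its own bilateral contract, whereas with a firm each person signs a single employment contract. Real production networks need far fewer than all pairs, so treat the pairwise figure as an illustrative upper bound rather than an estimate. The function names are hypothetical.

```python
from math import comb


def contracts_without_firm(n_people: int) -> int:
    """Bilateral contracts if (hypothetically) every pair must contract directly."""
    return comb(n_people, 2)  # n(n - 1) / 2


def contracts_with_firm(n_people: int) -> int:
    """One employment contract per person with a single firm (hub and spoke)."""
    return n_people


for n in (10, 100, 1000):
    print(f"{n:>5} people: {contracts_without_firm(n):>7} pairwise "
          f"vs {contracts_with_firm(n):>5} with a firm")
```

The pairwise count grows quadratically (45, 4,950 and 499,500 contracts) while the firm's grows linearly, which is one way to see how a firm can economise on contracting costs.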

The point is that all the people making cars in the company could, in principle, do the same job as self-employed freelance contractors rather than as employees. Instead of a company, you have lots of contracts between e.g. assembly-line workers, salespeople and end customers. The same number of cars could in theory be built by the same number of people in each case; the physical scenario and the machinery would be identical. But in the freelancer case there is no company coordinating the activity; instead you are relying on the market.

Perhaps 'The Theory of the Firm'? The very existence of large companies is a puzzle if you are a naive believer in the power of free markets: if the market is efficient, then individuals can simply contract with other individuals through the market to obtain their desired inputs and outputs, and there is no economic advantage to amassing individuals into higher-level entities called companies. The reason this doesn't work is transaction costs. An example transaction cost could be the time invested in finding partners to contract with for all your inputs, and then forming and enforcing those contracts. The insight of the theory of the firm is that individuals can lower their transaction costs by forming a higher-level economic agent, i.e. by becoming employees of a larger company. Transaction costs explain why large firms exist, though firms do not eliminate principal-agent problems and may exacerbate them.

As an aside, similar transaction-cost considerations may explain the evolution of multicellular life. In evolutionary biology, lower-level competing units of selection cooperate to form higher-level entities (genes/genomes, cells/organisms, organisms/groups, groups/societies), resulting in major transitions in evolution. The endosymbiosis between prokaryotes that led to the evolution of eukaryotic cells can be thought of as analogous to forming a company in order to reduce transaction costs. This is discussed further in S. Phelps and Y. I. Russell, "Economic drivers of biological complexity", Adaptive Behavior, 23(5):315–326, 2015.

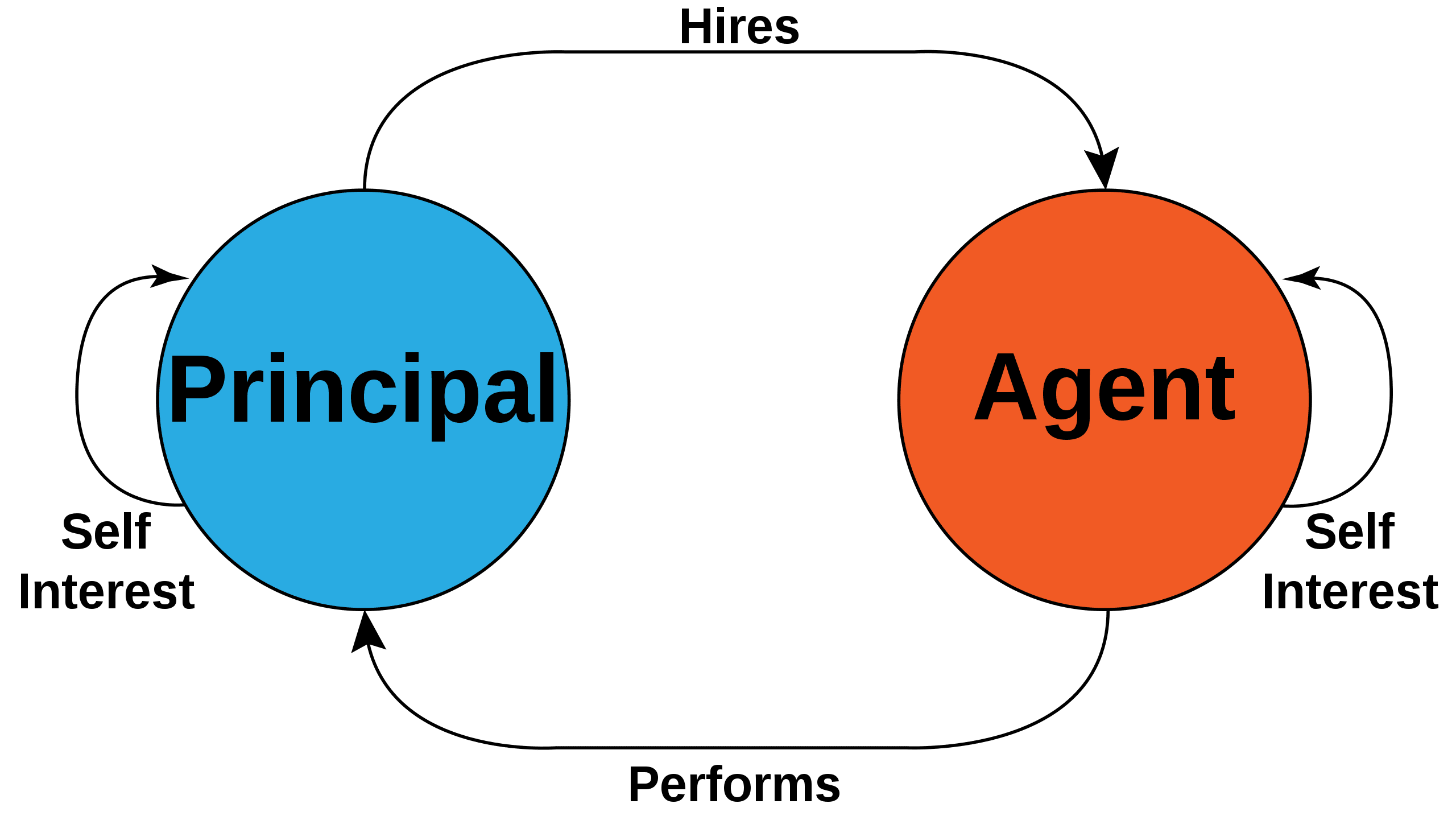

This problem has been studied extensively by economists within the field of organizational economics, and is called the principal-agent problem (Jensen and Meckling, 1976). In a principal-agent problem, a principal (e.g. a firm) hires an agent to perform some task. Both the principal and the agent are assumed to be rational expected utility maximisers, but the utility functions of the agent and the principal are not necessarily aligned, and there is an asymmetry in the information available to each party. This situation can lead the agent to take actions that are not in the principal's interests.

As the OP suggests, incentive schemes, such as performance-conditional payments, can be used to bring the interests of the principal and the agent into alignment, as can reducing information asymmetry by introducing regulations enforcing transparency.
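A minimal hidden-action sketch of how a performance-conditional payment can realign incentives. Everything here is an illustrative assumption rather than a model from the cited work: a risk-neutral agent chooses low or high effort, the principal observes only whether the task succeeds (the information asymmetry), and the success probabilities and effort costs are made-up numbers. `Contract` and `chosen_effort` are hypothetical names.

```python
from dataclasses import dataclass

# Hypothetical parameters. The principal observes only success or failure,
# never the agent's effort: this is the information asymmetry.
SUCCESS_PROB = {"low": 0.3, "high": 0.8}
EFFORT_COST = {"low": 0.0, "high": 10.0}


@dataclass(frozen=True)
class Contract:
    wage: float   # paid whatever the outcome
    bonus: float  # paid only if the task succeeds (performance-conditional)


def agent_utility(contract: Contract, effort: str) -> float:
    """Risk-neutral agent: expected pay minus the private cost of effort."""
    expected_pay = contract.wage + SUCCESS_PROB[effort] * contract.bonus
    return expected_pay - EFFORT_COST[effort]


def chosen_effort(contract: Contract) -> str:
    """Effort level that maximises the agent's own expected utility."""
    return max(SUCCESS_PROB, key=lambda effort: agent_utility(contract, effort))


print(chosen_effort(Contract(wage=30.0, bonus=0.0)))   # flat wage
print(chosen_effort(Contract(wage=10.0, bonus=30.0)))  # performance pay
```

Under a flat wage the agent's pay does not depend on effort, so it shirks however generous the wage is. With these numbers the bonus induces high effort once (0.8 - 0.3) * bonus exceeds the extra effort cost of 10, i.e. for a bonus above 20.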

The principal-agent problem has also been discussed in the context of AI alignment. I have recently written a working paper on principal-agent problems that occur with AI agents instantiated using large language models; see arXiv:2307.11137.

References

M. C. Jensen and W. H. Meckling, "Theory of the firm: Managerial behavior, agency costs and ownership structure", Journal of Financial Economics, 3(4):305–360, 1976.

S. Phelps and R. Ranson, "Of Models and Tin Men: a behavioural economics study of principal-agent problems in AI alignment using large-language models", arXiv:2307.11137, 2023.

Yes, if you take a particular side in the socialist calculation debate, then a centrally planned economy is isomorphic to "a market". And yes, if you ignore the Myerson–Satterthwaite theorem (and other impossibility results), then we can sweep aside the fact that most real-world "market" mechanisms do not yield Pareto-optimal allocations in practice :-)
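For concreteness, here is a brute-force check of Pareto optimality in a tiny exchange economy. The two agents, the goods and the linear utilities are all hypothetical. Note that the check assumes we already know everyone's utilities; real mechanisms have to elicit that private information, which is where impossibility results such as Myerson–Satterthwaite apply.

```python
from itertools import product

# Hypothetical economy: two agents share 2 apples and 2 oranges in total.
TOTAL_APPLES, TOTAL_ORANGES = 2, 2


def utility_a(apples: int, oranges: int) -> int:
    """Agent A (hypothetical) values apples twice as much as oranges."""
    return 2 * apples + oranges


def utility_b(apples: int, oranges: int) -> int:
    """Agent B (hypothetical) values oranges twice as much as apples."""
    return apples + 2 * oranges


def feasible_allocations():
    """Every split of the goods: A gets (x, y) and B gets the remainder."""
    for x, y in product(range(TOTAL_APPLES + 1), range(TOTAL_ORANGES + 1)):
        yield (x, y), (TOTAL_APPLES - x, TOTAL_ORANGES - y)


def utilities(allocation):
    """Utility profile (u_A, u_B) of an allocation."""
    bundle_a, bundle_b = allocation
    return utility_a(*bundle_a), utility_b(*bundle_b)


def dominates(u, v) -> bool:
    """True if profile u Pareto-dominates v: nobody worse off, someone better off."""
    return (all(a >= b for a, b in zip(u, v))
            and any(a > b for a, b in zip(u, v)))


def is_pareto_optimal(allocation) -> bool:
    """Pareto optimal iff no feasible allocation dominates it."""
    u = utilities(allocation)
    return not any(dominates(utilities(other), u)
                   for other in feasible_allocations())


print(is_pareto_optimal(((2, 0), (0, 2))))  # A gets the apples, B the oranges
print(is_pareto_optimal(((1, 1), (1, 1))))  # equal split
```

The specialised allocation is Pareto optimal, while the equal split is not: swapping A's orange for B's apple makes both agents better off.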