All of philh's Comments + Replies

Are ads externalities? In the sense of, imposed upon people who don't get a say in the matter?

My initial reaction was roughly: "web/TV/magazine ads no, you can just not visit/watch/read. Billboards on the side of the road yes". But you can also just not take that road. Like, if someone built a new road and put up a billboard there, and specifically said "I'm funding the cost of this road with the billboard, take it or leave it", that doesn't feel like an externality. Why is it different if they build a road and then later add a billboard?

But if we go that ...

Absolutely. In adversarial setting (XZ backdoor) there's no point in relaxing accountability.

Well, but you don't necessarily know if a setting is adversarial, right? And a process that starts by assuming everyone had good intentions probably isn't the most reliable way to find out.

it's exactly the case when one would expect blameless postmortems to work: The employer and the employee are aligned - neither of them wants the plane to crash and so the assumption of no ill intent should hold.

Not necessarily fully aligned, since e.g. the captain might benefit f...

Blameless postmortems are a tenet of SRE culture. For a postmortem to be truly blameless, it must focus on identifying the contributing causes of the incident without indicting any individual or team for bad or inappropriate behavior. A blamelessly written postmortem assumes that everyone involved in an incident had good intentions and did the right thing with the information they had. If a culture of finger pointing and shaming individuals or teams for doing the "wrong" thing prevails, people will not bring issues to light for fear of punishment.

This seem...

Absolutely. In adversarial setting (XZ backdoor) there's no point in relaxing accountability.

The Air Maroc case is interesting though because it's exactly the case when one would expect blameless postmortems to work: The employer and the employee are aligned - neither of them wants the plane to crash and so the assumption of no ill intent should hold.

Reading the article from the point of view of a former SRE... it stinks.

There's something going on there that wasn't investigated. The accident in question is probably just one instance of it, but how did the ...

Huh, thanks for the correction.

Smaller correction - I think you've had her buy an extra pair of boots. At $260 she's already bought one pair, so we apply thirteen times, then multiply by 1.07 again for the final year's interest, and she ends with no boots, so that's $239.41. (Or start with $280 and apply fourteen times.)
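For anyone who wants to check that arithmetic, here's a minimal sketch. It assumes that "apply" means one year of 7% interest plus one $20 purchase; that reading is my assumption, and the function names are made up for illustration.

```python
RATE = 1.07   # 7% yearly interest, as in the comment
CHEAP = 20    # yearly price of a cheap pair of boots

def start_before_first_purchase(balance=280.0, years=14):
    """Each year: buy a pair, then earn interest on what is left."""
    for _ in range(years):
        balance = (balance - CHEAP) * RATE
    return balance

def start_after_first_purchase(balance=260.0, years=13):
    """Each year: earn interest, then buy a pair; finish with one more year of interest."""
    for _ in range(years):
        balance = balance * RATE - CHEAP
    return balance * RATE

print(round(start_before_first_purchase(), 2))  # 239.41
print(round(start_after_first_purchase(), 2))   # 239.41
```

Both orderings are the same sequence of purchases and interest payments, just bracketed differently, which is why they agree.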

Not sure why my own result is wrong. Part of it is that I forgot to subtract the money actually spent on boots - I did "the $20 she spends after the first year gets one year's interest, so that's ...

Well, I should like to see some examples. So far, our tally of actual examples of this alleged phenomenon seems to still be zero. All the examples proffered thus far… aren’t.

From https://siderea.dreamwidth.org/1477942.html:

..."These boots," I said gesturing at what I was trying on, on my feet, "cost $200. Given that I typically buy a pair for $20 every year, that means these boots have to last 10 years to recoup the initial investment."

That was on January 17, 2005. They died earlier this month – that is in the first week of December, 2018. So: almost but not

Sam Vimes is a copper, and sees poverty lead to precarity, and precarity lead to Bad Things Happening In Bad Neighborhoods.

Hm, does he? It's certainly a reasonable guess, but offhand I don't remember it coming up in the books, and the Thieves and Assassins guilds will change the dynamic compared to what we'd expect on Earth.

We got spam and had to reset the link. To get the new link, append the suffix "BbILI8HzX3zgJF8i" to the prefix "https://chat.whatsapp.com/IUIZc3". Hopefully spambots can't yet do that automatically.

This reddit thread has the claim:

...Something related that you CAN do, and that is more likely to make a difference, is not ever to heat up plastic in a microwave, wash it in a dishwasher, or cook with plastic utensils. Basically, the softening agents in a lot of plastics aren't chemically bonded to the rest of the polymers, and heating the plastic makes those chemicals ready to leach into whatever food contacts them. That's a huge class of chemicals, none of which are LD-50-level dangerous, but many of which have been associated with hormonal changes, microb

One question I have that might be relatively tractable: if I'm using plastic containers for leftovers, how much difference is there between

1. Store in the container, put on plate to microwave and eat.

2. Store and microwave in the container, put on plate to eat.

3. Who needs a plate anyway? Just eat from the container.

The bit about plastic chopping boards kind of hints that (3) might give a lot more microplastics than (2)? But you're probably less violent to the container than the chopping board.

We suggest these low-hanging actions one can take to reduce their quantity exposure:

a. Stop using plastic bottles and plastic food storage containers,

b. Stop using plastic cutting boards,

For people who didn't read the rough notes dumps: it seems like (b) is a way bigger effect size than (a).

There are 3 people. Each person announces an integer. The smallest unique integer wins: e.g. if your opponents both pick 1, you win with any number. If all 3 pick the same number, the winner is picked randomly

Question: what’s the Nash equilibrium?

(I assume this is meant to be natural numbers or positive ints? Otherwise I don't think there is a Nash equilibrium.)
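To pin down the rules as quoted, here's a rough sketch of the winner function. The name `winner` and the index-based return value are my own choices, not anything from the original puzzle.

```python
import random
from collections import Counter

def winner(picks, rng=random):
    """Return the index of the winning player, given one integer pick per player."""
    counts = Counter(picks)
    unique = [p for p in picks if counts[p] == 1]
    if unique:
        # The smallest pick that nobody else chose wins.
        return picks.index(min(unique))
    # With three players, no unique pick means all three chose the same number.
    return rng.randrange(len(picks))

print(winner((1, 1, 5)))  # 2: both opponents picked 1, so any other number wins
print(winner((2, 1, 3)))  # 1: all picks are unique, so the smallest wins
```

This only encodes the payoff rule; it says nothing about what the equilibrium strategy is.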

So it is. Other than that, the remaining details I needed were:

2740 Telegraph Ave, Berkeley

94705

habryka@lightconeinfrastructure.com

Oliver Habryka

Assuming all went well, I just donated £5,000 through the Anglo-American charity, which should become about (£5000 * 1.25 * 96% = £6000 ≈ $7300) to lightcone.

I had further questions to their how to give page, so:

- You can return the forms by email, no need to post them. (I filled them in with Firefox's native "draw/write on this pdf" feature, handwriting my signature with a mouse.)

- If donating by bank transfer, you send the money to "anglo-american charity limited", not "anglo-american charitable foundation".

- For lightcone's contact details I asked on LW i

not sure if that would be legit for gift aid purposes

Based on https://www.gov.uk/government/publications/charities-detailed-guidance-notes/chapter-3-gift-aid#chapter-344-digital-giving-and-social-giving-accounts, doing this the naive way (one person collects money and gives it to the charity, everyone shares the tax rebate) is explicitly forbidden. Which makes sense, or everyone with a high income friend would have access to the 40% savings.

Not clear to me whether it would be allowed if the charity is in on it and everyone fills in their own gift aid de...

Thanks - I think GWWC would be fewer steps for me, but if that's not looking likely then one of these is plausible.

(I wonder if it would be worth a few of us pooling money to get both "lower fees" and "less need to deal with orgs who don't just let you click some buttons to say where you want the money to go", but not sure if that would be legit for gift aid purposes.)

That's not really "concrete" feedback though, right? In the outcome game/consensus game dynamic Stephen's talking about, it seems hard to play an outcome game with that kind of feedback.

Beyond being an unfair and uninformed dismissal

Why do you think it's uninformed? John specifically says that he's taking "this work is trash" as background and not trying to convince anyone who disagrees. It seems like because he doesn't try, you assume he doesn't have an argument?

it risks unnecessarily antagonizing people

I kinda think it was necessary. (In that, the thing ~needed to be written and "you should have written this with a lot less antagonism" is not a reasonable ask.)

For instance, if a developer owns multiple adjacent parcels and decides to build housing or infrastructure on one of them, the value of the undeveloped parcels will rise due to their proximity to the improvements.

An implementation detail of LVT would be, how do we decide what counts as one parcel and what counts as two? Depending how we answer that, I could easily imagine LVT causing bad incentives. (Which isn't a knock-down, it doesn't feel worse than the kind of practical difficulty any tax has.)

I wonder if it might not be better to just get rid of the i...

I've read/listened about LVT many times and I don't remember "auctions are a key aspect of this" ever coming up. E.g. the four posts by Lars Doucet on ACX only mention the word twice, in one paragraph that doesn't make that claim:

...Land Price or Land Value is how much it costs to buy a piece of land. Full Market Value, however, is specifically the land price under "fair" and open market conditions. What are "unfair" conditions? I mean, your dad could sell you a valuable property for $1 as an obvious gift, but if he put it on the open market, it would go for

My girlfriend and I probably wouldn't have got together if not for a conversation at Less Wrong Community Weekend.

Oh, I think that also means that section is slightly wrong. You want to take insurance if

and the insurance company wants to offer it if

So define

as you did above. Appendix B suggests that you'd take insurance if and they'd offer it if . But in fact they'd offer it if .

Appendix B: How insurance companies make money

Here's a puzzle about this that took me a while.

When you know the terms of the bet (what probability of winning, and what payoff is offered), the Kelly criterion spits out a fraction of your bankroll to wager. That doesn't support the result "a poor person should want to take one side, while a rich person should want to take the other".

So what's going on here?

Not a correct answer: "you don't get to choose how much to wager. The payoffs on each side are fixed, you either pay in or you don't." True but doesn't...

I've written about this here. Bottom line is, if you actually value money linearly (you don't) you should not bet according to the Kelly criterion.
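A small sketch of that bottom line, using the textbook Kelly fraction for a bet won with probability p at b-to-1 odds. The numbers (p = 0.6, even odds) are made up for illustration and the function names are mine.

```python
import math

def kelly_fraction(p, b):
    """Kelly fraction for a bet won with probability p that pays b-to-1."""
    return p - (1 - p) / b

def expected_log_growth(f, p, b):
    """Expected log of the wealth multiplier when staking fraction f."""
    return p * math.log(1 + b * f) + (1 - p) * math.log(1 - f)

def expected_wealth(f, p, b):
    """Expected wealth multiplier when staking fraction f (linear utility)."""
    return p * (1 + b * f) + (1 - p) * (1 - f)

p, b = 0.6, 1.0
f_star = kelly_fraction(p, b)

# Expected log wealth peaks at the Kelly fraction...
print(expected_log_growth(f_star, p, b) > expected_log_growth(0.1, p, b))
print(expected_log_growth(f_star, p, b) > expected_log_growth(0.3, p, b))
# ...but expected wealth keeps rising as the stake grows.
print(expected_wealth(0.99, p, b) > expected_wealth(f_star, p, b))
```

With a positive edge, expected wealth is increasing in the stake, so someone who really valued money linearly would stake everything rather than the Kelly fraction.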

I think we're disagreeing about terminology here, not anything substantive, so I mostly feel like shrug. But that feels to me like you're noticing the framework is deficient, stepping outside it, figuring out what's going on, making some adjustment, and then stepping back in.

I don't think you can explain why you made that adjustment from inside the framework. Like, how do you explain "multiple correlated bets are similar to one bigger bet" in a framework where

- Bets are offered one at a time and resolved instantly

- The bets you get offered don't depend on previous history

?

This is a synonym for "if money compounds and you want more of it at lower risk".

No it's not. In the real world, money compounds and I want more of it at lower risk. Also, in the real world, "utility = log($)" is false: I do not have a utility function, and if I did it would not be purely a function of money.

Like I don’t expect to miss stuff i really wanted to see on LW, reading the titles of most posts isn’t hard

It's hard for me! I had to give up on trying.

The problem is that if I read the titles of most posts, I end up wanting to read the contents of a significant minority of posts, too many for me to actually read.

Ah, my "what do you mean" may have been unclear. I think you took it as, like, "what is the thing that Kelly instructs?" But what I meant is "what do you mean when you say that Kelly instructs this?" Like, what is this "Kelly" and why do we care what it says?

That said, I do agree this is a broadly reasonable thing to be doing. I just wouldn't use the word "Kelly", I'd talk about "maximizing expected log money".

But it's not what you're doing in the post. In the post, you say "this is how to mathematically determine if you should buy insurance". But the formula you give assumes bets come one at a time, even though that doesn't describe insurance.

The probability should be given as 0.03 -- that might reduce your confusion!

Aha! Yes, that explains a lot.

I'm now curious if there's any meaning to the result I got. Like, "how much should I pay to insure against an event that happens with 300% probability" is a wrong question. But if we take the Kelly formula and plug in 300% for the probability we get some answer, and I'm wondering if that answer has any meaning.
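One way to poke at that question, as a sketch only: assume the decision rule is "compare expected log wealth with and without insurance, one bet at a time, zero deductible". That rule is my assumption and may not be what the calculator actually computes; the numbers (wealth 10,000, premium 5,000, loss 2,500) are illustrative.

```python
import math

def log_wealth_uninsured(wealth, loss, p):
    """'Expected' log wealth without insurance; p is used as given, even if it is not a valid probability."""
    return p * math.log(wealth - loss) + (1 - p) * math.log(wealth)

def log_wealth_insured(wealth, premium):
    """Log wealth after paying the premium, with the loss fully covered."""
    return math.log(wealth - premium)

wealth, premium, loss = 10_000, 5_000, 2_500

for p in (0.03, 3):
    uninsured = log_wealth_uninsured(wealth, loss, p)
    insured = log_wealth_insured(wealth, premium)
    print(p, "insure" if insured > uninsured else "don't insure")
```

With p = 3 the "expectation" puts a weight of -2 on the no-loss branch, so it is no longer an average of anything; whatever it prints is just the formula extrapolated outside the range where it has an interpretation.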

...I disagree. Kelly instructs us to choose the course of action that maximises log-wealth in period t+1 assuming a particular joint distribution o

Whether or not to get insurance should have nothing to do with what makes one sleep – again, it is a mathematical decision with a correct answer.

I'm not sure how far in your cheek your tongue was, but I claim this is obviously wrong and I can elaborate if you weren't kidding.

I'm confused by the calculator. I enter wealth 10,000; premium 5,000; probability 3; cost 2,500; and deductible 0. I think that means: I should pay $5000 to get insurance. 97% of the time, it doesn't pay out and I'm down $5000. 3% of the time, a bad thing happens, and instead of paying...

Whether or not to get insurance should have nothing to do with what makes one sleep – again, it is a mathematical decision with a correct answer.

I'm not sure how far in your cheek your tongue was, but I claim this is obviously wrong and I can elaborate if you weren't kidding.

I agree with you, and I think the introduction unfortunately does major damage to what is otherwise a very interesting and valuable article about the mathematics of insurance. I can't recommend this article to anybody, because the introduction comes right out and says: "The thi...

I think the thesis is not "honesty reduces predictability" but "certain formalities, which preclude honesty, increase predictability".

I kinda like this post, and I think it's pointing at something worth keeping in mind. But I don't think the thesis is very clear or very well argued, and I currently have it at -1 in the 2023 review.

Some concrete things.

- There are lots of forms of social grace, and it's not clear which ones are included. Surely "getting on the train without waiting for others to disembark first" isn't an epistemic virtue. I'd normally think of "distinguishing between map and territory" as an epistemic virtue but not particularly a social grace, but the last two paragraphs m

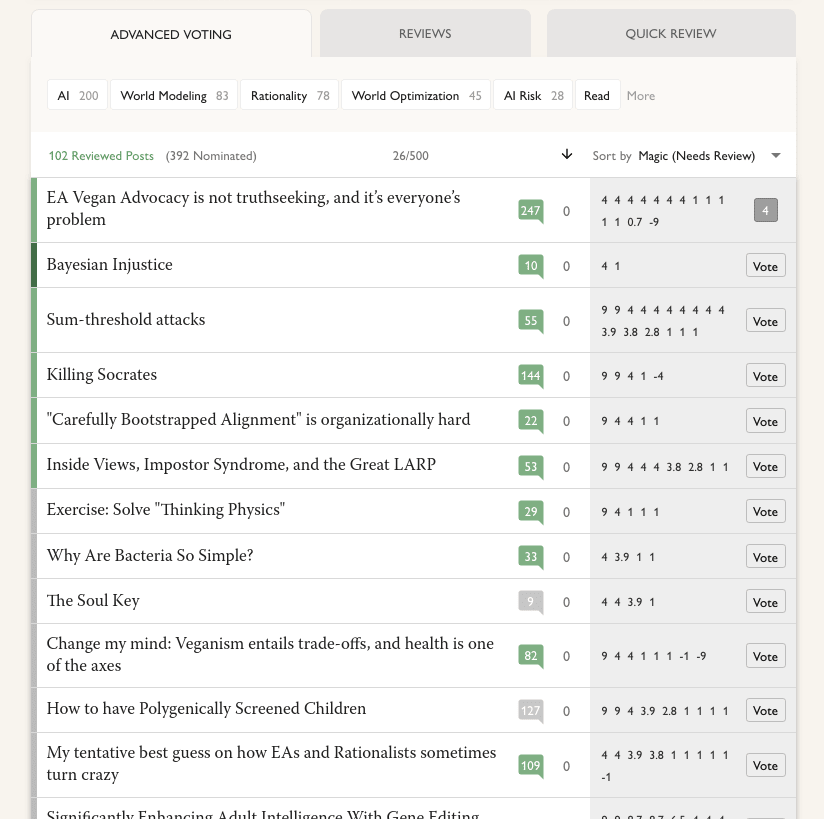

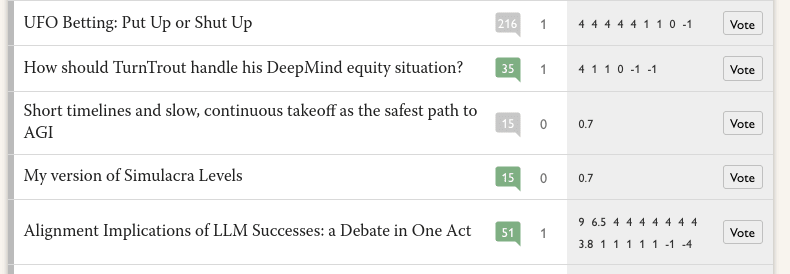

Ooh, I didn't see the read filter. (I think I'd have been more likely to if that were separated from the tabs. Maybe like, [Read] | [AI 200] [World Modeling 83] [Rationality 78] ....) With that off it's up to 392 nominated, though still neither of the ones mentioned. Quick review is now down to 193, my current guess is that's "posts that got through to this phase that haven't been reviewed yet"?

Screenshot with the filter off:

and some that only have one positive review:

Btw, I'm kinda confused by the current review page. A tooltip on advanced voting says

54 have received at least one Nomination Vote

Posts need at least 2 Nomination Votes to proceed to the Review Phase

And indeed there are 54 posts listed and they all have at least one positive vote. But I'm pretty sure this and this both had at least one (probably exactly one) positive vote at the end of the nomination phase and they aren't listed.

Guess: this is actually listing posts which had at least two positive votes at the end of the nomination phase; the posts with on...

I think it's good that this post was written, shared to LessWrong, and got a bunch of karma. And (though I haven't fully re-read it) it seems like the author was careful to distinguish observation from inference and to include details in defense of Ziz when relevant. I appreciate that.

I don't think it's a good fit for the 2023 review. Unless Ziz gets back in the news, there's not much reason for someone in 2025 or later to be reading this.

If I was going to recommend it, I think the reason would be some combination of

- This is a good example of investigative

“Unless Ziz gets back in the news, there's not much reason for someone in 2025 or later to be reading this.” With the recent death of the Zizian Ophelia (after shooting a border patrol agent) this post is, unfortunately, relevant again.

Self review: I really like this post. Combined with the previous one (from 2022), it feels to me like "lots of people are confused about Kelly betting and linear/log utility of money, and this deconfuses the issue using arguments I hadn't seen before (and still haven't seen elsewhere)". It feels like small-but-real intellectual progress. It still feels right to me, and I still point people at this when I want to explain how I think about Kelly.

That's my inside view. I don't know how to square that with the relative lack of attention the post got, and it fe...

(At least in the UK, numbers starting 077009 are never assigned. So I've memorized a fake phone number that looks real, that I sometimes give out with no risk of accidentally giving a real phone number.)

Okay. Make it £5k from me (currently ~$6350), that seems like it'll make it more likely to happen.

If you can get it set up before March, I'll donate at least £2000.

(Though, um. I should say that at least one time I've been told "the way to donate with gift aid is to set up an account with X, tell them to send the money to Y, and Y will pass it on to us", and the first step in the chain there had very high transaction fees and I think might have involved sending an email... historical precedent suggests that if that's the process for me to donate to lightcone, it might not happen.)

Do you know what rough volume you'd need to make it worthwhile?

I don't know anything about the card. I haven't re-read the post, but I think the point I was making was "you haven't successfully argued that this is good cost-benefit", not "I claim that this is bad cost-benefit". Another possibility is that I was just pointing out that the specific quoted paragraph had an implied bad argument, but I didn't think it said much about the post overall.

My guess: [signalling] is why some people read the Iliad, but it's not the main thing that makes it a classic.

Incidentally, there was one reddit comment that pushed me slightly in the direction of "yep, it's just signalling".

This was obviously not the intended point of that comment. But (ignoring how they misunderstood my own writing), the user

- Quotes multiple high status people talking about the Iliad;

- Tantalizingly hints that they are widely-read enough to be able to talk in detail about the Iliad and the old testament, and compare translations;

- Says approx

Complex Systems (31 Oct 2024): From molecule to medicine, with Ross Rheingans-Yoo

When you first do human studies with a new drug, there's something like a 2/3 chance it'll make it to the second round of studies. Then something like half of those make it to the next round; and there's a point where you talk to the FDA and say "we're planning to do this study" and they say "cool, if you do that and get these results you'll probably be approved" and then in that case there's like an 85% chance you'll be approved; and I guess at least one other filter I'm for...

Though this particular story for weight exfiltration also seems pretty easy to prevent with standard computer security: there’s no reason for the inference servers to have the permission to create outgoing network connections.

But it might be convenient to have that setting configured through some file stored in Github, which the execution server has access to.

Yeah, if that was the only consideration I think I would have created the market myself.

Launching nukes is one thing, but downvoting posts that don't deserve it? I'm not sure I want to retaliate that strongly.

I looked for a manifold market on whether anyone gets nuked, and considered making one when I didn't find it. But:

- If the implied probability is high, generals might be more likely to push the button. So someone who wants someone to get nuked can buy YES.

- If the implied probability is low, generals can get mana by buying YES and pushing the button. I... don't think any of the generals will be very motivated by that? But not great.

So I decided not to.

Someone else created it.

No they’re not interchangeable. They are all designed with each other in mind, along the spectrum, to maximize profits under constraints, and the reality of rivalrousness is one reason to not simply try to run at 100% capacity every instant.

I can't tell what this paragraph is responding to. What are "they"?

You explained they popped up from the ground. Those are just about the most excludable toilets in existence!

Okay I do feel a bit silly for missing this... but I also still maintain that "allows everyone or no one to use" is a stretch when it comes...

Idk, I think my reaction here is that you're defining terms far more broadly than is actually going to be helpful in practice. Like, excludability and rivalry are spectrums in multiple dimensions, and if we're going to treat them as binaries then sure, we could say anything with a hint of them counts in the "yes" bin, but... I think for most purposes,

- "occasionally, someone else arrives at the parking lot at the same time as me, and then I have to spend a minute or so waiting for the pay-and-display meter"

is closer to

- "other people using the parking lot does

Thing I've been wrong about for a long time: I remembered that the rocket equation "is exponential", but I thought it was exponential in dry mass. It's not, it's linear in dry mass and exponential in Δv.

This explains a lot of times where I've been reading SF and was mildly surprised at how cavalier people seemed to be about payload, like allowing astronauts to have personal items.
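A quick sketch of that, using the standard Tsiolkovsky rocket equation, Δv = v_e · ln(m_0 / m_f). The specific numbers (a 3,000 m/s exhaust velocity, 1,000 kg dry mass) are made up for illustration.

```python
import math

def propellant_mass(dry_mass, delta_v, exhaust_velocity):
    """Propellant required by the rocket equation; both velocities in the same units."""
    return dry_mass * (math.exp(delta_v / exhaust_velocity) - 1)

V_E = 3000.0  # hypothetical exhaust velocity, m/s

base = propellant_mass(1000.0, 6000.0, V_E)
double_dry = propellant_mass(2000.0, 6000.0, V_E)
double_dv = propellant_mass(1000.0, 12000.0, V_E)

print(double_dry / base)  # doubling dry mass doubles the propellant
print(double_dv / base)   # doubling delta-v costs far more than double
```

Doubling the dry mass doubles the propellant; doubling Δv squares the mass ratio m_0 / m_f.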

I'm inclined to agree, but a thing that gives me pause is something like... if society decides it's okay to yell at cogs when the machine wrongs you, I don't trust society to judge correctly whether or not the machine wronged a person?

Like if there are three worlds

- "Civilized people" simply don't yell at gate attendants. Anyone who does is considered gauche, and "civilized people" avoid them.

- "Civilized people" are allowed to yell at gate attendants when and only when the airline is implementing a shitty policy. If the airline is implementing a very reasonab
