All of quetzal_rainbow's Comments + Replies

My largest share of probability of survival on business-as-usual AGI (i.e., no major changes in technology compared to LLMs, no pause, no sudden miracles in theoretical alignment, and no sudden AI winters) belongs to the scenario where brain concept representations, efficiently learnable representations, and representations learnable by current ML models secretly have a very large overlap, such that even if LLMs develop "alien thought patterns", it happens as an addition to the rest of their reasoning machinery, not as its primary part, which results in human values not on...

I feel like this position is... flimsy? Insubstantial? It's not that I disagree; I just don't understand why you would want to articulate it in this way.

On the one hand, I don't think the biological/non-biological distinction is very meaningful from a transhumanist perspective. Is an embryo genetically modified to have +9000 IQ going to be meaningfully considered "transhuman" instead of "posthuman"? Are you still going to be you after one billion years of life extension? "Keeping relevant features of you/humanity after enormous biological changes" seems to be qualitati...

It is indeed surprising, because it indicates much more sanity than I would otherwise have expected.

Terrorism is not effective. The only ultimate result of 9/11 from the perspective of bin Laden's goals was "Al Qaeda got wiped off the face of the Earth and rival groups have replaced it". The only result of firebombing a datacenter would be "every single person in AI safety gets branded a terrorist, destroying literally any chance to influence relevant policy".

I think a more correct picture is that it's useful to have programmable behavior, and then the programmable system suddenly becomes a Turing-complete weird machine, and some of the resulting programs are terminal-goal-oriented. Those are favored by selection pressures, because terminal goals are self-preserving.

Humans in their native environment have programmable behavior in the form of social regulation, information exchange, and communicated instructions. If you add a sufficient amount of computational power to this system, you can get a very wide spectrum of behaviors.

I think this is the general picture of inner misalignment.

I think we can clearly conclude that the cortex doesn't do what NNs do, because the cortex is incapable of learning conditioned responses, which are the uncontested fiefdom of the cerebellum, while for NNs learning a conditioned response is the simplest thing to do. It also crushes the hypothesis of the Hebbian rule. I think the majority of people in the neurobiology neighbourhood haven't properly updated on this fact.

...We now have several different architectures that reach parity with but do not substantially exceed transformer: RWKV (RNN), xLSTM, Mamba, Based, etc. This implies they have a sha

As far as I understand MIRI's strategy, the point of the book is communication. It's written on the assumption that many people would be really worried if they knew the content of the book, and that the main reason they are not worried now is that, before the book, this content existed mostly in Very Nerdy corners of the internet and was written in a very idiosyncratic style. My intended purpose for a Pope endorsement is not to signal that the book is true; it's to move the topic of AI x-risk, for over a billion Catholics, from the spot "something weird discussed in Very Nerdy corners of the internet" to the spot "the literal head of my church is interested in this stuff, maybe I should become interested too".

If you want an example particularly connected to prisons, you can take the anarchist revolutionary Sergey Nechayev, who was able to propagandize prison guards enough to connect with an outside terrorist cell. The only reason why Nechayev didn't escape is that Narodnaya Volya was planning the assassination of the Tsar and didn't want an escape to interfere.

Idea for an experiment: have two really large groups of people take a Big Five test. Tell one group to answer fast, based on their feeling of what is true. Tell the other group to seriously consider how they would behave in different situations related to the questions. I think the different modes of introspection would yield a systematic bias in the results.

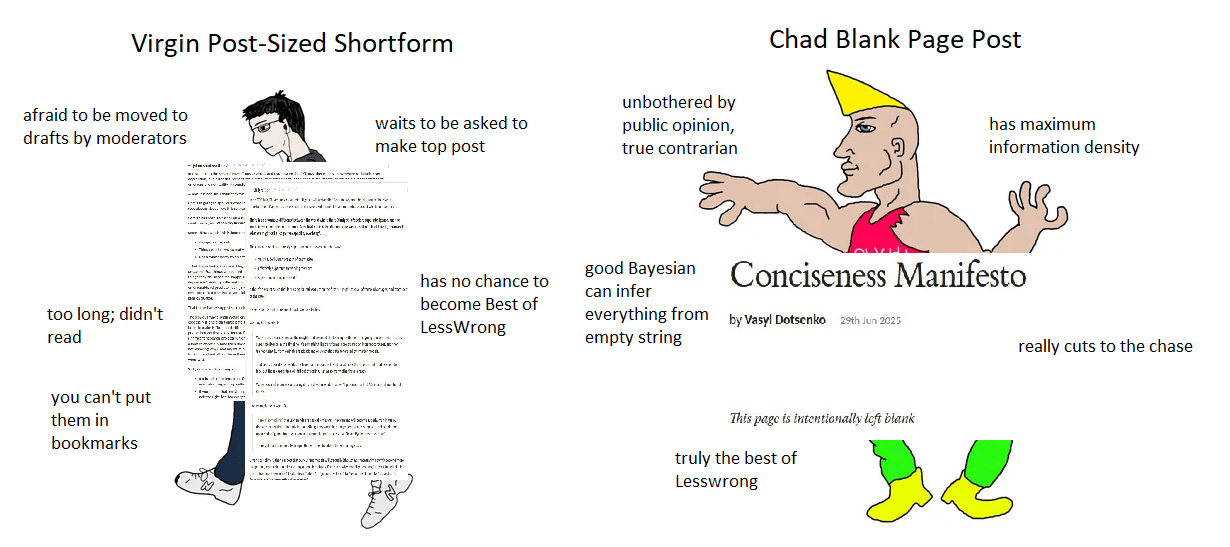

My strictly personal hot take is that Quick Takes have become a place to hide from the responsibility of top-level posts. People sometimes write very large Quick Takes which certainly would qualify as top-level posts, but, in my model of these people, top-level posts have stricter moderation, are perceived more harshly, and are scarier overall, so people opt for Quick Takes. I'm afraid that if we create an even less strict section of LW, people are going to migrate there entirely.

isn't that gosh darn similar to "darwinism"?

No, it's not. Darwinism, as a scientific position and not vibes, is the claim that any observable feature in biodiversity is observable because it causally produced a fitness differential relative to alternative features. That is not what we see in reality.

I suggest you pause the discussion of evolution and return to it after reading any summary of modern evolutionary theory. I recommend "The Logic of Chance: The Nature and Origin of Biological Evolution".

The other mechanism is very simple: random drift. The majority of complexity arose as neutral complexity that accumulated because of slack in the system. Then, sometimes, this complexity gets rearranged during brief adaptationist periods.
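To make the drift point concrete, here is a minimal sketch assuming a haploid Wright-Fisher model (my choice of toy model, not something specified above). Each generation is resampled from the previous one with no fitness differences at all, and the function name and parameters are illustrative only.

```python
import random

def neutral_fixation_fraction(pop_size, initial_copies, trials, seed=0):
    """Fraction of trials in which a neutral allele drifts to fixation."""
    rng = random.Random(seed)
    fixed = 0
    for _ in range(trials):
        copies = initial_copies
        # Resample generations until the allele is lost (0) or fixed (pop_size).
        while 0 < copies < pop_size:
            freq = copies / pop_size
            # Each offspring carries the allele with probability freq;
            # there is no selection term anywhere in this update.
            copies = sum(rng.random() < freq for _ in range(pop_size))
        fixed += copies == pop_size
    return fixed / trials
```

Calling `neutral_fixation_fraction(pop_size=20, initial_copies=5, trials=2000)` should give a fraction close to the starting frequency of 5/20: under this model a trait with zero fitness effect still takes over the population a non-trivial share of the time.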

intelligence, at parity of other traits, clearly outcompetes less intelligence.

"At parity of other traits" makes this statement near-useless: there is never parity of all traits except one. Intelligence clearly leads to more energy consumption. If the fitness loss from greater energy needs exceeds the fitness gain from intelligence, you are pwned.

I think one shouldn't accept darwinism in the sense you mean here, because this sort of darwinism is false: a supermajority of fixed traits are not adaptive, they are neutral. 50%+ of the human genome is integrated viruses and mobile elements. Humans don't merely fall short of being the most darwinistically optimized entity; they are extremely not that. And the evolution of complex systems can't happen in a 100% selectionist mode, because complexity requires resources and slack in resources; otherwise all complexity gets ditched.

From real perspective on evolution, the resu...

The problem here is that sequence embeddings should have tons of side-channels that convey non-semantic information (like, say, the frequencies of tokens in a sequence), and you can go a long way with this sort of information.

What would be really interesting is to train embedding models in different languages and check whether you can translate highly metaphorical sentences with no correspondence other than semantic, or train embedding models on different representations of the same math (for example, matrix mechanics vs wave mechanics formulations of quantum mechanics) and see if they recognize equivalent theorems.

I'm so far not impressed with the Claude 4s. They try to make up superficially plausible stuff for my math questions as fast as possible. Sonnet 3.7, at least, explored a lot of genuinely interesting avenues before making an error. "Making up superficially plausible stuff" sounds like a good strategy for hacking not very robust verifiers.

I dunno how obvious this is to people who want to try for the bounty, but I only now realized that you can express the criterion for a redund as an inequality on mutual information, and I find mutual information much nicer to work with, even if purely for convenience of notation. Proof:

Let's take criterion for redund w.r.t. of ,

expand expression for KL divergence:

expand joint distribution:

...
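A numerical sanity check of this kind of rewrite, under a loudly stated assumption: the formulas are missing from this excerpt, so the sketch assumes the redund criterion has the form D_KL(P[X1, X2, Λ] || P[X1, X2] P[Λ | X1]) ≤ ε. For that assumed form, the KL divergence should coincide with the conditional mutual information I(Λ; X2 | X1). All function names are hypothetical.

```python
import itertools
import math
import random

def random_joint(n1, n2, nl, seed=0):
    """Random strictly positive joint distribution P[x1, x2, lam] as a dict."""
    rng = random.Random(seed)
    keys = list(itertools.product(range(n1), range(n2), range(nl)))
    weights = [rng.random() + 0.01 for _ in keys]
    total = sum(weights)
    return {k: w / total for k, w in zip(keys, weights)}

def marginal(p, axes):
    """Marginalize the joint onto the given tuple of axis indices."""
    out = {}
    for key, prob in p.items():
        sub = tuple(key[a] for a in axes)
        out[sub] = out.get(sub, 0.0) + prob
    return out

def redund_kl(p):
    """D_KL(P[X1,X2,L] || P[X1,X2] * P[L|X1]): the ASSUMED redund criterion."""
    p12, p1l, p1 = marginal(p, (0, 1)), marginal(p, (0, 2)), marginal(p, (0,))
    total = 0.0
    for (x1, x2, lam), prob in p.items():
        q = p12[(x1, x2)] * p1l[(x1, lam)] / p1[(x1,)]
        total += prob * math.log(prob / q)
    return total

def conditional_mi(p):
    """I(L; X2 | X1) = H(X1,L) + H(X1,X2) - H(X1,X2,L) - H(X1)."""
    def entropy(dist):
        return -sum(v * math.log(v) for v in dist.values() if v > 0)
    return (entropy(marginal(p, (0, 2))) + entropy(marginal(p, (0, 1)))
            - entropy(p) - entropy(marginal(p, (0,))))
```

For any joint produced by `random_joint`, `redund_kl(p)` and `conditional_mi(p)` should agree up to floating-point error, which is the sense in which the criterion can be read as a bound on mutual information.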

No, the point is that AI x-risk is commonsensical. "If you drink much from a bottle marked 'poison', it is almost certain to disagree with you, sooner or later", even if you don't know the poison's mechanism of action. We don't expect Newtonian mechanics to prove that hitting yourself with a brick is quite safe. If we found that Newtonian mechanics predicted hitting yourself with a brick to be safe, that would be huge evidence that Newtonian mechanics is wrong. Good theories usually support common intuitions.

The other thing here is an isolated demand for rigor: there is no "technical understanding of today’s deep learning systems" which predicts, say, the success of AGI labs, or that their final products are going to be safe.

In my personal experience, the main reason why social media causes cognitive decline is fatigue. Evidence from personal experience: like many social media addicts, I struggle with maintaining concentration on books. If I stop using social media for a while, I regain the full ability to concentrate without drawbacks—in a sense, "I suddenly become capable of reading 1,000 pages of a book in two days, which I had been trying to start for two months."

The reason why social media is addictive to me, I think, is the following process:

- Social media is entertaining;

almost all the other agents it expects to encounter are CDT agents

Given this particular setup (you both get each other's source code and make decisions simultaneously, without any means to verify the counterparty's choice until the outcomes happen), you shouldn't self-modify into an extortionist, because CDT agents always defect: no amount of reasoning about source code can causally affect your decision, and D-D is a Nash equilibrium. CDT agents can expect with high probability to meet an extortionist in the future and self-modify into a weird Son-of-CDT agent, whi...
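A minimal sketch of the "CDT agents always defect, and D-D is a Nash equilibrium" step, assuming a plain one-shot Prisoner's Dilemma with hypothetical payoff numbers (any payoffs with the standard PD ordering would do). It leaves out the source-code inspection and the extortionist's Nuke option, and only checks the best-response logic.

```python
from itertools import product

ACTIONS = ("C", "D")
# Hypothetical payoffs as (row player, column player); not taken from the thread.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def best_response(opponent_action):
    """Action maximizing the row player's payoff against a fixed opponent action."""
    return max(ACTIONS, key=lambda a: PAYOFFS[(a, opponent_action)][0])

def is_nash(profile):
    """True if neither player gains by unilaterally deviating from the profile."""
    row, col = profile
    row_ok = all(PAYOFFS[(row, col)][0] >= PAYOFFS[(a, col)][0] for a in ACTIONS)
    col_ok = all(PAYOFFS[(row, col)][1] >= PAYOFFS[(row, a)][1] for a in ACTIONS)
    return row_ok and col_ok

def nash_equilibria():
    """All pure-strategy Nash equilibria of the payoff table."""
    return [p for p in product(ACTIONS, ACTIONS) if is_nash(p)]
```

With these payoffs, `best_response` picks D whatever the opponent does, which is the causal-dominance reasoning attributed to CDT above, and `nash_equilibria()` contains only the mutual-defection profile.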

Let's suppose that you look at the code of your counterparty which says "I'll Nuke you unless you Cooperate in which case I Defect", call it "extortionist". You have two hypotheses here:

- Your counterparty deliberately modified its decision-making procedures in the hope of extorting more utility;

- This decision-making procedure is a genuine result of some weird evolutionary/learning process.

If you can't actually get any evidence in favor of either hypothesis, you go with your prior and do whatever is best from the standpoint of UDT/FDT/LDT counterfactual operationa...

I think the difference between real bureaucracies and HCH is that real functioning bureaucracies should contain elements capable of saying "screw this arbitrary problem factorization, I'm doing what's useful", and the bosses of the bureaucracy should be able to say "we all understand that otherwise the system wouldn't be able to work".

There is a conceptual path for interpretability to lead to reliability: you can understand a model in sufficient detail to know how it produces intelligence, and then build another model out of the interpreted details. Obviously, it's not something we can expect to happen anytime soon, but it's something that an army of interpretability geniuses in a datacenter could do.

I think the current Russia-Ukraine war is a perfect place to implement such a system. It's a war of attrition; there are not many goals that don't reduce to "kill and destroy as much as you can". There is a strategic aspect: Russia pays exorbitant compensation to the families of killed soldiers, amounting to decades of income in poor regions. So, when a Russian soldier dies, two things can happen:

- Russian government dumps unreasonable amount of money on the market, contributing to inflation;

- Russian government fails to pay (for 1000 and 1 stupid bureaucratic reasons), which erode

The recent update from OpenAI about 4o sycophancy surely looks like Standard Misalignment Scenario #325:

...Our early assessment is that each of these changes, which had looked beneficial individually, may have played a part in tipping the scales on sycophancy when combined.

<...>

One of the key problems with this launch was that our offline evaluations—especially those testing behavior—generally looked good. Similarly, the A/B tests seemed to indicate that the small number of users who tried the model liked it.

<...>

some expert testers had indicate

I'm not commenting on the positive suggestions in this post, but it has a really strange understanding of what it argues against.

an 'Axiom of Rational Convergence'. This is the powerful idea that under sufficiently ideal epistemic conditions – ample time, information, reasoning ability, freedom from bias or coercion – rational agents will ultimately converge on a single, correct set of beliefs, values, or plans, effectively identifying 'the truth'.

There is no reason to "agree on values" (in a sense of actively seeking agreement instead of observing...

I think a large part of this phenomenon is social status. I.e., if you die early, it means that you did something really embarrassingly stupid. Conversely, if you caused someone to die through, say, faulty construction or insufficient medical intervention, you should be really embarrassed. If you can't prove or reliably signal that you are behaving reasonably, you are incentivized to behave unreasonably safely to signal your commitment to not doing stupid things. It's also probably linked to the trade-off between social status and the desire for reproduction. It also explains why ...