All of rahulxyz's Comments + Replies

I made the same comment on the original post. I really think this is a blind spot for US-based AI analysis.

China has engineers as capable as those at DeepMind, OpenAI, etc., and much of the talent at these labs is originally from China. Given a) the direction immigration is going, b) China's ability as a state to coordinate massive resources and subsidies, c) a potential invasion of Taiwan, d) how close the DeepSeek / Qwen models seem to be and the rate of catch-up, and e) how uncertain we are about hardware overhang (again, see DeepSeek's training costs), I think we should put at least a 50% chance on China being ahead within the next year.

My initial reaction: a lot of AI-related predictions are based on "follow the curve" extrapolation, and this is mostly doing that. Without a deeper underlying theory of the nature of intelligence, I guess that's all we get.

If you look at how far China is behind the US, the gap has gone from 5 years two years ago to maybe 3 months now. If you follow that curve, it seems to me that China will be ahead of the US by 2026 (even with the chip controls, export regulations, etc.; my take is you're not giving them enough agency...
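A minimal sketch of what "following the curve" means with the two data points above (a gap of ~60 months two years ago, ~3 months now). The straight-line shape is an assumption for illustration only; the comment doesn't say what curve it has in mind, and `months_until_parity` is a hypothetical helper.

```python
def months_until_parity(gap_then_months: float, gap_now_months: float,
                        elapsed_months: float) -> float:
    """Linearly extrapolate when a shrinking lead reaches zero.

    Assumes (hypothetically) that the gap closes at a constant rate,
    estimated from two observations taken `elapsed_months` apart.
    """
    # Months of gap closed per calendar month between the two observations.
    rate = (gap_then_months - gap_now_months) / elapsed_months
    if rate <= 0:
        raise ValueError("gap is not shrinking; no crossover under a linear model")
    # Time for the remaining gap to close at that same constant rate.
    return gap_now_months / rate


# Figures from the comment: ~5 years behind two years ago, ~3 months behind now.
eta = months_until_parity(gap_then_months=60, gap_now_months=3, elapsed_months=24)
print(f"linear extrapolation: gap closes in ~{eta:.1f} months")
# prints: linear extrapolation: gap closes in ~1.3 months
```

Under this crude linear model the crossover comes within a couple of months; a curve that slows down near parity would push the date later, so the choice of curve matters far more than the arithmetic.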

Coming from a somewhat similar space myself, I've had the same thoughts. My current thinking is that there is no straightforward answer to how to convert dollars into impact.

I think the EA community did a really good job of that back in the day, with a relatively simple, spreadsheet-based way to measure impact per dollar, or cost per life saved, in the near term.

With AI safety / existential risk, the space seems a lot more confused: everyone has different models of the world, of what will work, and of what counts as a good idea. There are some people working di...

I'm very dubious that we'll solve alignment in time, and it seems like my marginal dollar would do better in non-obvious causes for AI safety. So I'm very open to funding something like this in the hope that we get an AI winter, a regulatory pause, etc.

I don't know if you or anyone else has thought about this, but what is your take on whether this or WBE (whole brain emulation) is more likely to succeed? WBE seems a lot more funding-intensive, but its progress may be easier to measure, and it potentially faces fewer regulatory burdens?

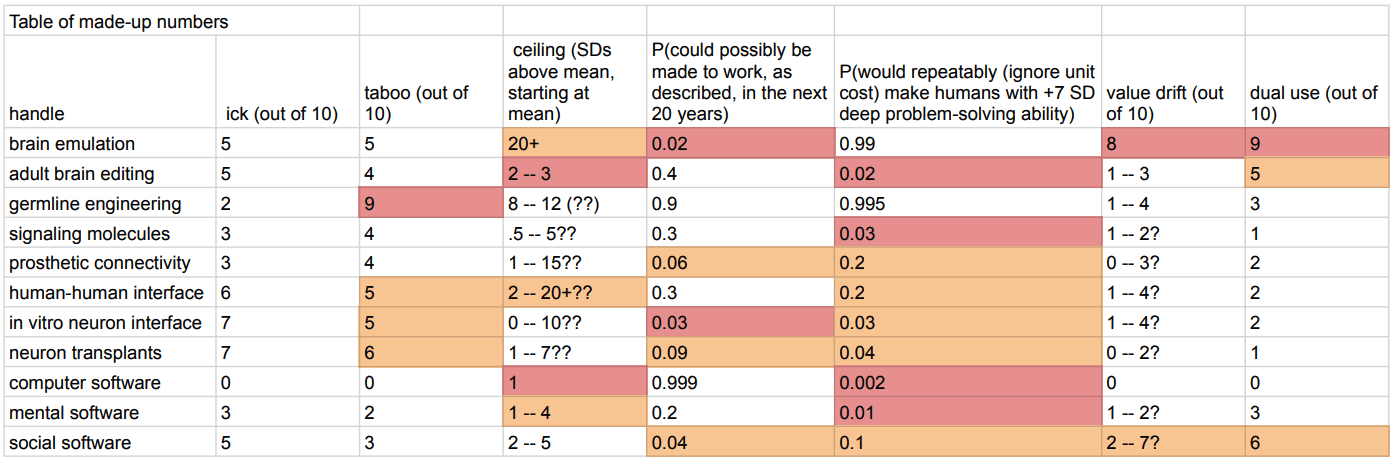

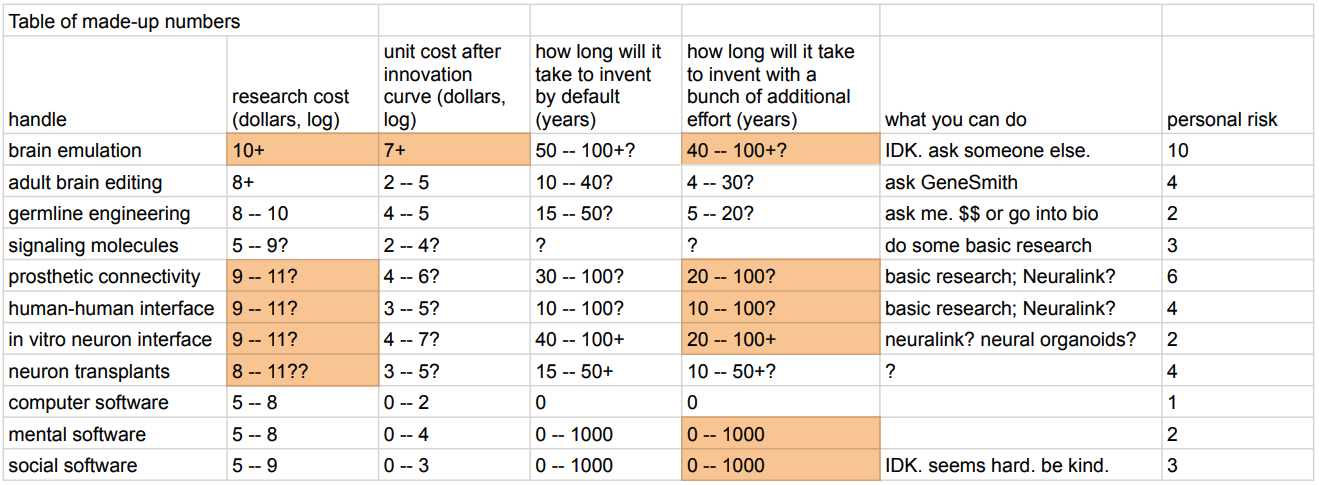

I discuss this here: https://www.lesswrong.com/posts/jTiSWHKAtnyA723LE/overview-of-strong-human-intelligence-amplification-methods#Brain_emulation

You can see my comparisons of different methods in the tables at the top:

If RL becomes the next big thing for improving LLM capabilities, one thing I would bet on becoming big in 2025 is computer use. It seems hard to get more intelligence from RL alone (who verifies the outputs?), but with something like computer use it's easy to verify whether a task has been done (has the email been sent, has the ticket been booked, etc.), so it's starting to look more to me like it could do self-learning.

One thing that has kept AI from being fully integrated into the rest of the economy is simply that the current interfaces were built for humans, and moving ...
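To make the "easy to verify" point concrete, here is a minimal sketch of a binary, programmatically checked reward for computer-use tasks. Everything in it is hypothetical: `EnvState` stands in for a snapshot of a sandboxed environment after an agent episode, and a real setup would query the actual mail server or booking system rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class EnvState:
    """Hypothetical snapshot of a sandboxed environment after an agent episode."""
    sent_emails: list[dict] = field(default_factory=list)  # e.g. {"to": ..., "subject": ...}
    bookings: list[dict] = field(default_factory=list)     # e.g. {"flight": ..., "date": ...}


def email_sent_reward(state: EnvState, to: str, subject: str) -> float:
    """1.0 if an email with the expected recipient and subject was sent, else 0.0."""
    ok = any(m.get("to") == to and m.get("subject") == subject for m in state.sent_emails)
    return 1.0 if ok else 0.0


def ticket_booked_reward(state: EnvState, flight: str, date: str) -> float:
    """1.0 if the expected booking appears in the final state, else 0.0."""
    ok = any(b.get("flight") == flight and b.get("date") == date for b in state.bookings)
    return 1.0 if ok else 0.0


state = EnvState(sent_emails=[{"to": "alice@example.com", "subject": "Invoice"}])
print(email_sent_reward(state, "alice@example.com", "Invoice"))  # prints 1.0
print(ticket_booked_reward(state, "XY123", "2025-03-01"))        # prints 0.0
```

The design point is that the check inspects the end state, not the model's output text, so no human grader is needed to label each attempt.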

Not sure if it's correct; I didn't actually short NVDA, so all I can do is collect my Bayes points. I did expect most investors to use first-level thinking, since that was my own immediate reaction on reading about DeepSeek's training cost. If models can be duplicated more cheaply a few weeks or months after they're released, then you don't have a moat (this holds for most regular technologies; I'm not saying AI isn't different, just that most investors think of it like any other tech innovation).

Yeah, in one sense that makes sense. But also, NVDA is down ~16% today.

DeepSeek R1 could mean reduced VC investment in large LLM training runs. They claim to have done it with ~$6M. If there's a big risk of someone else coming out with a comparable model at 1/10th the cost, then there's no moat for OpenAI in the long run. I don't know how much VCs / investors buy into ASI as an end goal, or even what the pitch would be. They're probably looking at more prosaic things like moats and growth rates, and this may mean less appetite for further investment rather than more.

There don't seem to be many surveys of the general population on doom-type scenarios. Most of them seem to focus on bias- or weapons-type scenarios. You could look at something like Metaculus, but I don't think that's representative of the general population.

Here's a survey of AI researchers: https://aiimpacts.org/2022-expert-survey-on-progress-in-ai/ (median/mean probability of extinction: 5%/14%)

US public: https://governanceai.github.io/US-Public-Opinion-Report-Jan-2019/general-attitudes-toward-ai.html (12% of Americans think it will be "extremely bad i.e extin...

Which is funny, because there is at least one situation where Robin reasons from first principles instead of taking the outside view (cryonics comes to mind). I'm not sure why he doesn't want to go through the first-principles arguments for AGI.

...GPT-2 does not - probably, very probably, but of course nobody on Earth knows what's actually going on in there - does not in itself do something that amounts to checking possible pathways through time/events/causality/environment to end up in a preferred destination class despite variation in where it starts out.

A blender may be very good at blending apples, but that doesn't mean it has a goal of blending apples.

A blender that spit out oranges as unsatisfactory, pushed itself off the kitchen counter, stuck wires into electrical sockets in order to burn open y

Small world, I guess :) I knew I'd heard this type of argument before, but I couldn't remember its name.

So it seems like the grabby aliens model contradicts the doomsday argument unless one of these is true:

- We live in a "grabby" universe, but one with few or no sentient beings long-term?

- The reference classes for the two arguments are somehow different (as discussed above)

Thanks for the great writeup (and the video). I think I finally understand the gist of the argument now.

The argument seems to raise another interesting question about the grabby aliens part.

He's using the grabby aliens hypothesis to explain away the model's low probability of us appearing this early (and I presume we're one of these grabby aliens). But this leads to a similar problem: Robin Hanson (or anyone reading this) has a very low probability of appearing this early among all the humans who will ever exist.

This low probability would also require a si...
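The "very low probability of appearing this early" can be written out in the standard self-sampling form of the doomsday argument. This assumes, as that argument does, that one's birth rank $n$ is uniformly distributed over all $N$ humans who will ever exist; the comment itself doesn't spell out this assumption. The probability of being among the first $k$ humans is then

$$
P(n \le k \mid N) = \frac{k}{N}, \qquad k \le N,
$$

and for two hypotheses $N_{\text{small}} < N_{\text{large}}$ (with $n \le N_{\text{small}}$), observing one's own rank shifts the odds toward the smaller total:

$$
\frac{P(N_{\text{small}} \mid n)}{P(N_{\text{large}} \mid n)}
= \frac{P(N_{\text{small}})}{P(N_{\text{large}})} \cdot \frac{1/N_{\text{small}}}{1/N_{\text{large}}}
= \frac{P(N_{\text{small}})}{P(N_{\text{large}})} \cdot \frac{N_{\text{large}}}{N_{\text{small}}}.
$$

Any model in which humanity's future population is vast makes a small $k/N$ for us, which is the tension with the grabby aliens picture described above.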

I think there are 3 ways to think about AI, and a lot of confusion seems to arise because the different paradigms are talking past each other. The 3 paradigms I see on the internet and when talking to people:

Paradigm A) AI is a new technology like the internet / smartphone / electricity. This view seems to be held mostly by VCs / entrepreneurs / devs who think it will unlock a whole new set of apps, the way smartphones enabled Uber or the internet enabled Amazon.

Paradigm B) AI is a step change in how humanity will work. Similar to the agricultural revolution that...