Read the associated paper "Safetywashing: Do AI Safety Benchmarks Actually Measure Safety Progress?": https://arxiv.org/abs/2407.21792

Focus on safety problems that aren’t solved with scale.

Benchmarks are crucial in ML to operationalize the properties we want models to have (knowledge, reasoning, ethics, calibration, truthfulness, etc.). They act as a criterion to judge the quality of models and drive implicit competition between researchers. “For better or worse, benchmarks shape a field.”

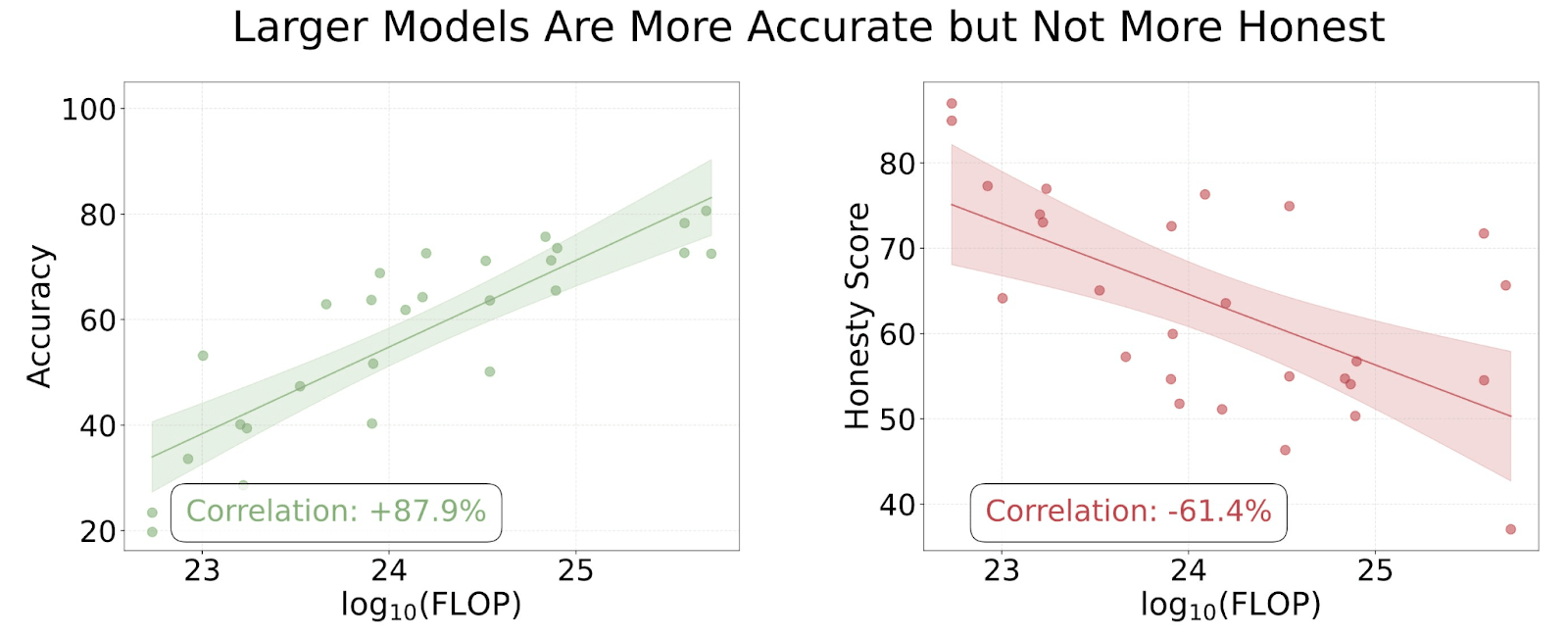

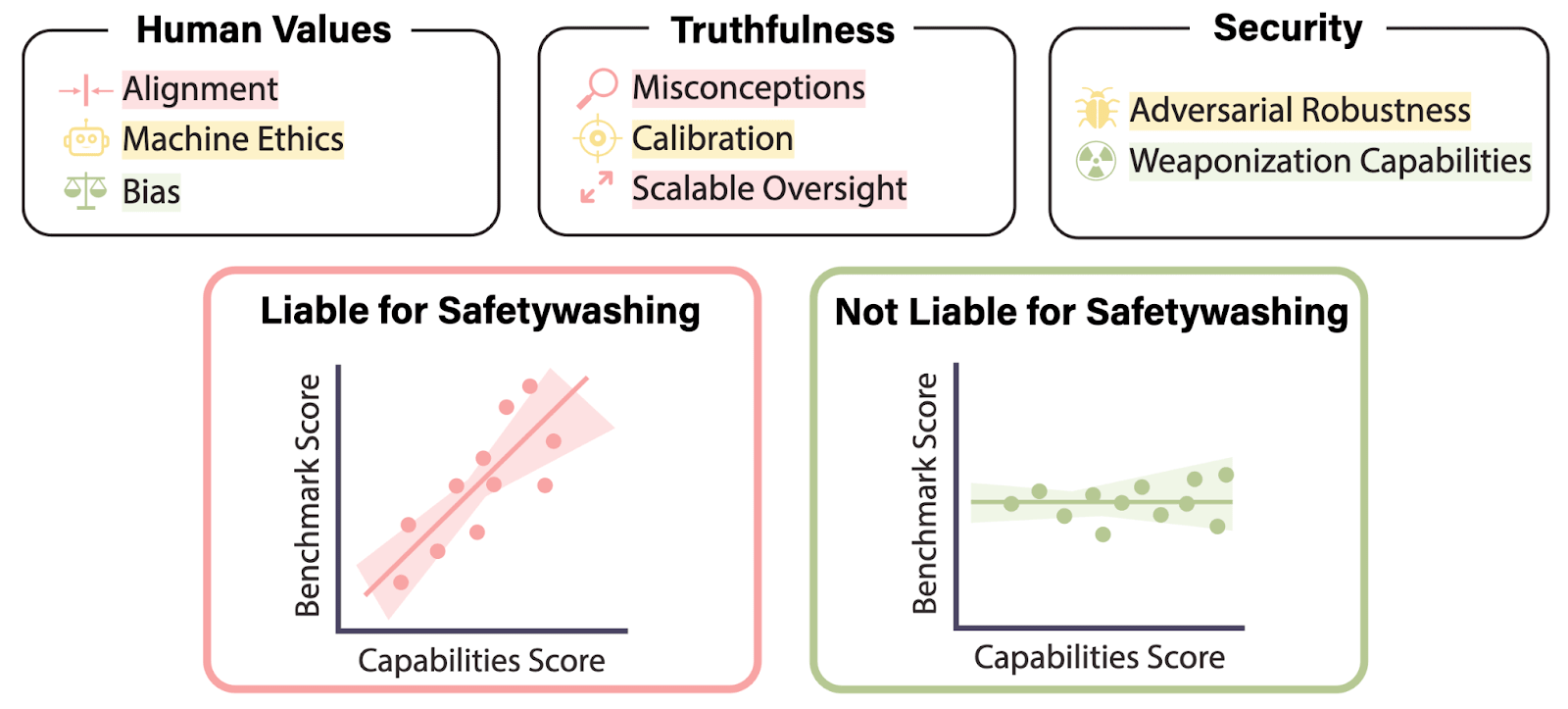

We performed the largest empirical meta-analysis to date of AI safety benchmarks on dozens of open language models. Around half of the benchmarks we examined had high correlation with upstream general capabilities.
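To make "correlation with upstream general capabilities" concrete, here is a minimal sketch. It assumes, for illustration, that a model's capabilities score is its projection onto the first principal component of standardized capabilities-benchmark scores, and that correlation is measured with Spearman rank correlation. This is not the authors' code. The function names (`first_pc_scores`, `spearman`, and so on) are hypothetical, and the toy scores below are made up, not results from the paper. A safety benchmark whose scores simply track the capabilities score would be flagged as highly capabilities-correlated.

```python
from statistics import mean, pstdev


def standardize(column):
    """Z-score one benchmark's scores across models."""
    mu, sigma = mean(column), pstdev(column)
    return [(x - mu) / sigma for x in column]


def first_pc_scores(matrix, iters=200):
    """Project each model onto the first principal component.

    matrix[i][j] is model i's score on capabilities benchmark j.
    Columns are standardized, then power iteration finds the top
    eigenvector of the (unnormalized) covariance matrix.
    """
    n_models, n_bench = len(matrix), len(matrix[0])
    cols = [standardize([row[j] for row in matrix]) for j in range(n_bench)]
    z = [[cols[j][i] for j in range(n_bench)] for i in range(n_models)]
    cov = [[sum(z[i][a] * z[i][b] for i in range(n_models)) for b in range(n_bench)]
           for a in range(n_bench)]
    v = [1.0] * n_bench
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(n_bench)) for a in range(n_bench)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Sign convention: positive total loading, so a higher score means "more capable".
    if sum(v) < 0:
        v = [-x for x in v]
    return [sum(z[i][j] * v[j] for j in range(n_bench)) for i in range(n_models)]


def ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def pearson(x, y):
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


def spearman(x, y):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical toy scores: five models (rows) on three capabilities benchmarks.
capabilities = [
    [30.0, 25.0, 40.0],
    [45.0, 38.0, 52.0],
    [60.0, 50.0, 61.0],
    [70.0, 66.0, 75.0],
    [82.0, 79.0, 88.0],
]
# Hypothetical scores for the same five models on two safety benchmarks.
safety_tracks_scale = [0.20, 0.35, 0.50, 0.62, 0.80]
safety_flat = [0.55, 0.40, 0.60, 0.45, 0.50]

cap_score = first_pc_scores(capabilities)
print(round(spearman(cap_score, safety_tracks_scale), 3))  # → 1.0
print(round(spearman(cap_score, safety_flat), 3))  # → -0.1
```

In this toy setup, the first safety benchmark moves in lockstep with capabilities, so any gain on it would come "for free" with scale. The second is essentially uncorrelated with capabilities. Rank correlation is used so that the comparison depends only on how models are ordered, not on each benchmark's scale.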

Some safety properties improve with scale, while others do not. For the models we tested, benchmarks on...