How to game the METR plot

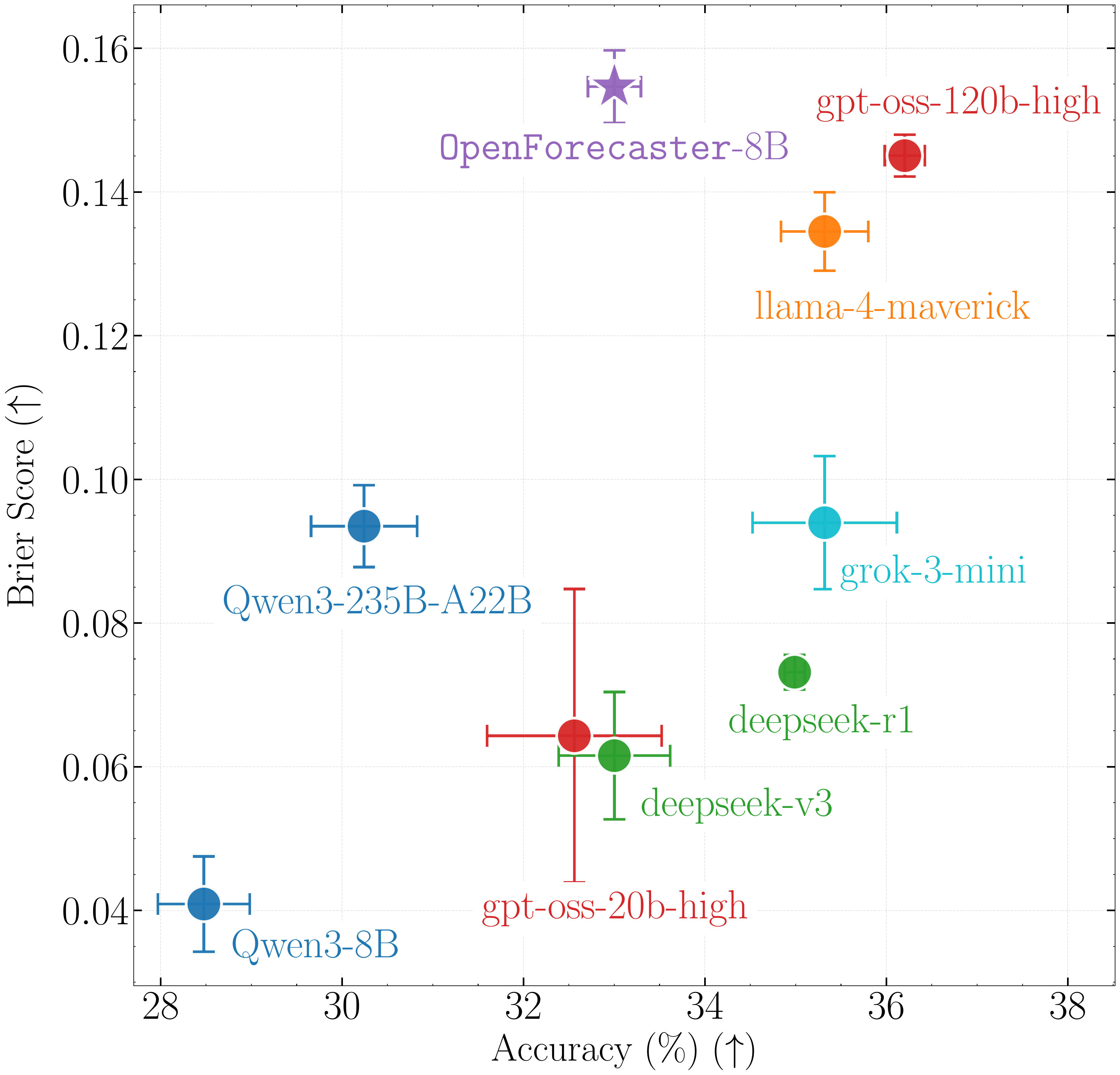

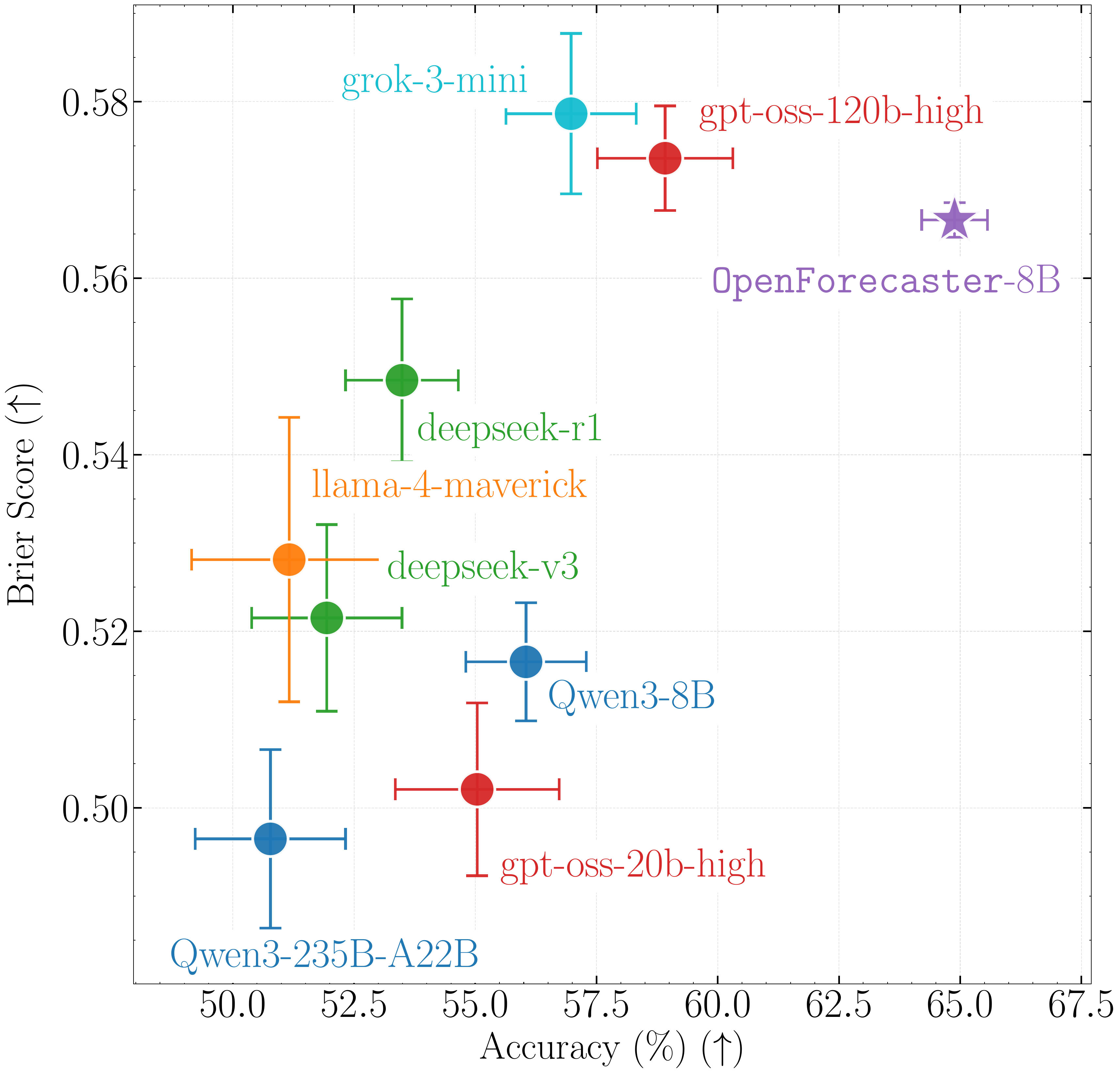

TL;DR: In 2025, we were in the 1-4 hour range, which has only 14 samples in METR’s underlying data. The topic of each sample is public, which makes it easy for a frontier lab to game METR horizon length measurements, sometimes inadvertently. Finally, the “horizon length” under METR’s assumptions might add little information beyond benchmark accuracy. None of this is meant to criticize METR; in research, it’s hard to be perfect on the first release. But I’m tired of what is being inferred from this plot, pls stop!

14 prompts ruled AI discourse in 2025

The METR horizon length plot was an excellent idea: it proposed measuring the length of tasks models can complete (in terms of estimated human hours needed) instead of accuracy. I’m glad it shifted the community toward caring about long-horizon tasks. They are a better measure of automation impacts and economic outcomes (for example, labor laws are often based on number of hours of work).

However, I think we are overindexing on it far too much. This is especially true of the AI Safety community, which makes huge updates to timelines and research priorities based on it. I suspect (from many anecdotes, including roon’s) that the METR plot has influenced significant investment decisions, but I’ve not been in any boardrooms.

2 popular AI safety researchers making massive updates based on the Claude 4.5 Opus result today, 200+ likes, within 6 hours.

Here is the problem with this. In 2025, according to this plot, frontier AI progress occurred in the regime between horizon lengths of 1 and 4 hours. Guess how many samples have 1-4 hr estimated task lengths? Just 14. How do we know? Kudos to the authors: the paper has this information, and they transparently provide task metadata.

Figure 14 of their paper. 14 tasks in the 1-4 hr range. Illuminati confirmed?

Hopefully, for many, this alone rings alarm bells. Under no circumstances should we be making such large inferences about AGI timelines, US vs China, C