Not sure if anyone has reviewed this, but it seems to me that if you compare past visions of the future, such as science fiction stories, with the present, we have less of everything except computers.

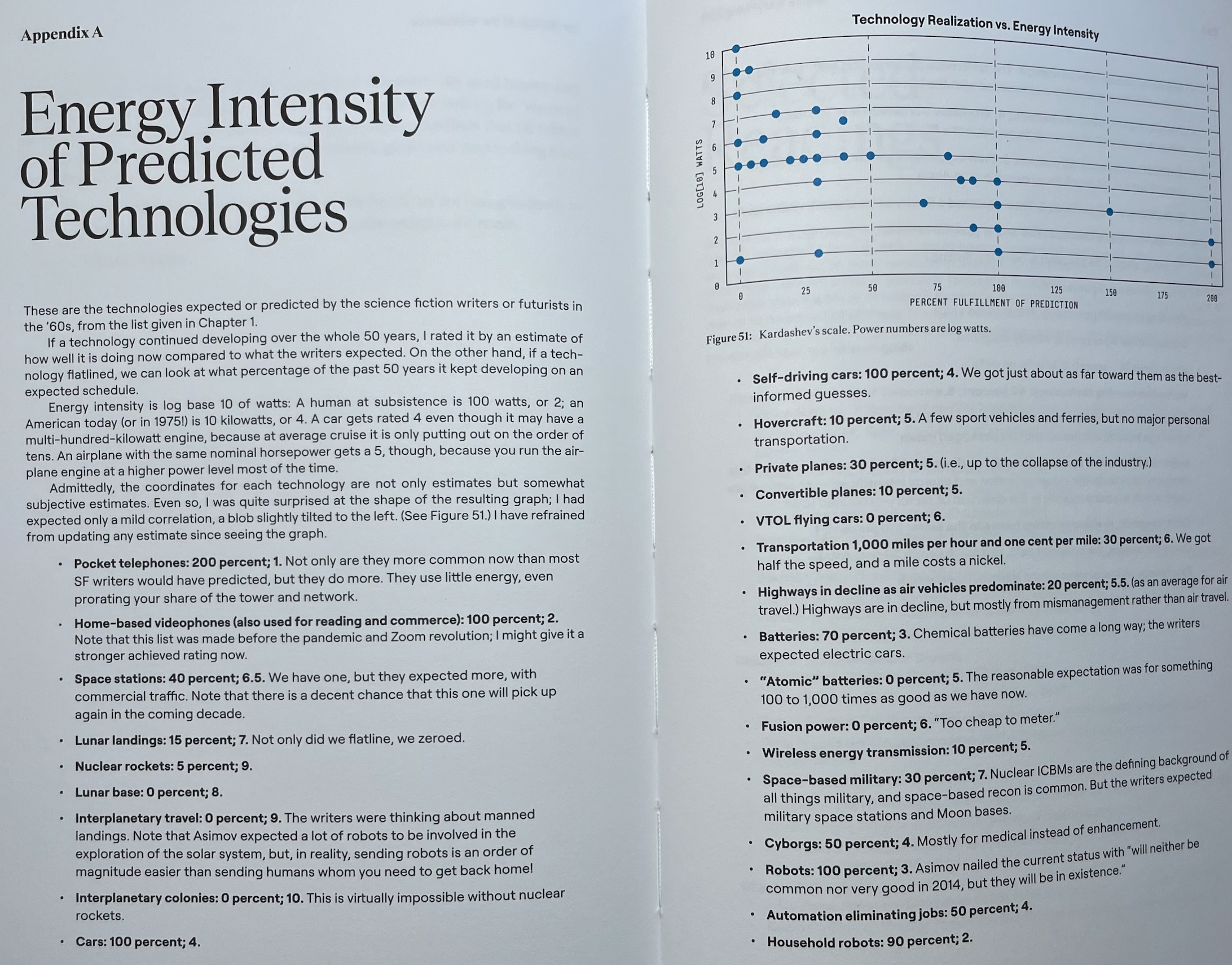

Yes! J. Storrs Hall did an (admittedly subjective) study of this, and argues that the probability of a futurist prediction from the 1960s being right decreases logarithmically with the energy intensity required to achieve it. This is from Appendix A of "Where Is My Flying Car?"

So in my opinion it is not obvious in which direction to update. I am tempted to say that computers evolve faster than everything else, so we should expect AI soon. On the other hand, maybe the fact that AI is currently an exception to this trend means that it will continue to be the exception. No strong opinion on this; I am just saying that "we are behind the predictions" does not in general apply to computer-related things.

I think this is a good point. At some point, I should put together a reference class of computer-specific futurist predictions from the past for comparison to the overall corpus.

Thanks. I'm not arguing for this position; I just want to understand the arguments against AGI x-risk as well as I can. I think success would look like me being able to state all the arguments as strongly and coherently as their proponents would.

This is interesting — maybe the "meta Lebowski" rule should be something like "No superintelligent AI is going to bother with a task that is harder than hacking its reward function in such a way that it doesn't perceive itself as hacking its reward function." One goes after the cheapest shortcut that one can justify.