All of tin482's Comments + Replies

Thanks for sharing this, I believe this kind of work is very promising. Especially, it pushes us to be concrete.

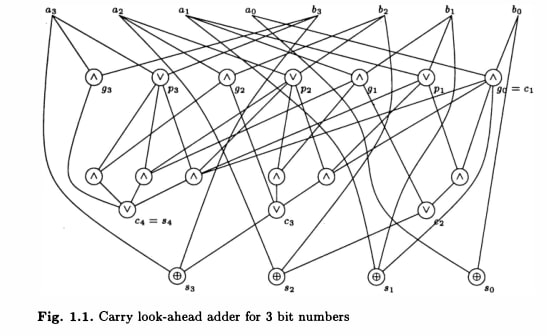

I think some of the characteristics you note are necessary side effects of fast parallel computation. Take this canonical constant-depth adder from pg 6 of "Introduction to Circuit Complexity":

Imagine the same circuit embedded in a neural network. Individual neurons are "merely" simple Boolean operations on the input - no algorithm in sight! More complicated problems often have even messier circuits, with redundant computation or false branches t...

I was trying to make a more specific point. Let me know if you think the distinction is meaningful -

So there are lots of "semi-rigorous" successes in Deep Learning. One I understand better than muP is good old Xavier initialization. Assuming that the activations at a given layer are normally distributed, we should scale our weights like 1/sqrt(n) so the activations don't diverge from layer to layer (since the sum of independent normals scales like sqrt(n)). This is exactly true for the first gradient step, but can become false at any later step once the we...

This does a great job of importing and translating a set of intuitions from a much more established and rigorous field. However, as with all works framing deep learning as a particular instance of some well-studied problem, it's vital to keep the context in mind:

Despite literally thousands of papers claiming to "understand deep learning" from experts in fields as various as computational complexity, compressed sensing, causal inference, and - yes - statistical learning, NO rigorous, first-principles analysis has ever computed any aspect of any deep learnin...

NO rigorous, first-principles analysis has ever computed any aspect of any deep learning model beyond toy settings

This is false. From the abstract of Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer

...Hyperparameter (HP) tuning in deep learning is an expensive process, prohibitively so for neural networks (NNs) with billions of parameters. We show that, in the recently discovered Maximal Update Parametrization (muP), many optimal HPs remain stable even as model size changes. This leads to a new HP tuning paradigm we call m

Personally, I think approaches like STaR (28 March 2022) will be important: bootstrap from weak chain-of-thought reasoners to strong ones by retraining on successful inner monologues. They also implement "backward chaining": training on monologues generated with the correct answer visible.

The most relevant paper I know of comes out of data privacy concerns. See Extracting Training Data from Large Language Models, which defines "k-eidetic memorization" as a string that can be elicited by some prompt and appears in at most k documents in the training set. They find several examples of k=1 memorization, though the strings appear repeatedly in the source documents. Unfortunately their methodology is targeted towards high-entropy strings and so is not universal.

I have a related question I've been trying to operationalize. How well do GPT-3's mem...

See also "Evaluating Large Language Models Trained on Code", OpenAI's contribution. They show progress on the APPS dataset (Intro: 25% pass, Comp: 3% pass @ 1000 samples), though note there was substantial overlap with the training set. They also only benchmark up to 12 billion params, but have also trained a related code-optimized model at GPT-3 scale (~100 billion).

Notice that technical details are having a large impact here:

- GPT-3 saw a relatively small amount of code, only what was coincidentally in the dataset, and does poorly

- GPT-J had Github as a subs

There is no state saving or learning at test time. The prompts were prepended to the API calls, you could see it in the requests

I think the appeal of symbolic and hybrid approaches is clear, and progress in this direction would absolutely transform ML capabilities. However, I believe the approach remains immature in a way that the phrase "Human-Level Reinforcement Learning" doesn't communicate.

The paper uses classical symbolic methods and so faces that classic enemy of GOFAI: super-exponential asymptotics. In order to make the compute more manageable, the following are hard-coded into EMPA:

- Direct access to game state (unlike the neural networks, which learned from pixels)

- The existe

I think this is a very interesting discussion, and I enjoyed your exposition. However, the piece fails to engage with the technical details or existing literature, to its detriment.

Take your first example, "Tricking GPT-3". GPT is not: give someone a piece of paper and ask them to finish it. GPT is: You sit behind one way glass watching a man at a typewriter. After every key he presses you are given a chance to press a key on an identical typewriter of your own. If typewriter-man's next press does not match your prediction, you get an electric shock. You a...

I think the best argument for a "fastest-er" timeline would be that several of your bottlenecks end up heavily substituting against each other or some common factor. A researcher in NLP in 2015 might reasonably have guessed it'd take decades to reach the level of ChatGPT - after all, it would require breakthroughs in parsing, entailment, word sense, semantics, world knowledge... In reality these capabilities were all blessings of scale.

o1 may or may not be the central breakthrough in this scenario, but I can paint a world where it is, and that world is ver... (read more)