All of titotal's Comments + Replies

Building a bacterium that eats all metals would be world-ending: most elements on the periodic table are metals. If you engineer a bacterium that eats all metals, it would eat things that are essential for life and kill us all.

Okay, what about a bacterium that only eats "stereotypical" metals, like steel or iron? I beg you to understand that you can't just sub in different periodic table elements and expect a bacterium to work the same. There will always be some material that the bacterium wouldn't work on that computers could still be made with. And even...

Under peer review, this never would have been seen by the public. It would have incentivized CAIS to actually think about the potential flaws in their work before blasting it to the public.

I asked the forecasting AI three questions:

Will Iran possess a nuclear weapon before 2030?

539's answer: 35%

Will Iran possess a nuclear weapon before 2040?

539's answer: 30%

Will Iran possess a nuclear weapon before 2050?

539's answer: 30%

Given that the AI apparently doesn't understand that things are more likely to happen if given more time, I'm somewhat skeptical that it will perform well in real forecasts.
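The inconsistency can be checked mechanically. Every world where Iran gets a weapon before 2030 is also a world where it gets one before 2040, so P(before 2040) can never be lower than P(before 2030). A minimal Python sketch of that check (the function name is hypothetical, not part of any forecasting tool):

```python
def monotonicity_violations(forecasts):
    """Return (earlier, later) deadline pairs where P(event by later) < P(event by earlier).

    `forecasts` maps a deadline year to P(event happens before that year).
    A coherent set of cumulative forecasts must be non-decreasing in the year.
    """
    years = sorted(forecasts)
    return [
        (earlier, later)
        for earlier, later in zip(years, years[1:])
        if forecasts[later] < forecasts[earlier]
    ]


# The three answers quoted above.
answers = {2030: 0.35, 2040: 0.30, 2050: 0.30}
print(monotonicity_violations(answers))  # [(2030, 2040)]
```

Note that equal values (2040 and 2050 here) are allowed; only a decrease is incoherent.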

The actual determinant here is whether or not you enjoy gambling.

Person A, who regularly goes to a casino and bets 100 bucks on roulette for the fun of it, will obviously go for bet 1. In addition to the expected 5-buck profit, they get the extra fun of gambling, making it a no-brainer. Similarly, bet 2 is a no-brainer.

Person B, who hates gambling and gets super upset when they lose, will probably reject bet 1. The expected profit of 5 bucks is outweighed by the emotional cost of gambling, a thing that upsets them.

When it ...

I believe this is important because we should epistemically lower our trust in published media from here onwards.

From here onwards? Most of those tweets that ChatGPT generated are not noticeably different from the background noise of political Twitter (which is what it was trained on anyway). Also, Twitter is not published media, so I'm not sure where this statement comes from.

You should be willing to absorb information from published media with a healthy skepticism based on the source and an awareness of potential bias. This was true before ChatGPT, and will still be true in the future.

No, I don't believe he did, but I'll save the critique of that paper for my upcoming "why MWI is flawed" post.

I'm not talking about the implications of the hypothesis, I'm pointing out that the hypothesis itself is incomplete. To simplify, if you observe an electron which has a 25% chance of spin up and a 75% chance of spin down, naive MWI predicts that one version of you sees spin up and one version of you sees spin down. It does not explain where the 25% or 75% numbers come from. Until we have a solution to that problem (and people are trying), you don't have a full theory that gives predictions, so how can you estimate its Kolmogorov complexity?

I am a physicist who works in a quantum-related field, if that helps you take my objections seriously.
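To make the gap concrete: the Born rule weights each outcome by the squared magnitude of its amplitude, while naively counting branches gives every outcome equal weight. A minimal Python sketch contrasting the two for the 25%/75% example above. It simply applies the standard textbook rule; it is not a derivation of that rule from MWI, which is exactly the missing piece:

```python
import math


def born_probabilities(amplitudes):
    """Born rule: weight each outcome by |amplitude|^2, then normalize.

    Works for complex amplitudes too; normalizing means the input
    amplitudes need not already be normalized.
    """
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    return [w / total for w in weights]


def branch_count_probabilities(outcomes):
    """Naive branch counting: one branch per outcome, each counted once."""
    return {o: 1 / len(outcomes) for o in outcomes}


# Amplitudes chosen to give the 25% / 75% split for spin up / spin down.
amp_up = math.sqrt(0.25)
amp_down = math.sqrt(0.75)

print(born_probabilities([amp_up, amp_down]))       # approximately [0.25, 0.75]
print(branch_count_probabilities(["up", "down"]))   # {'up': 0.5, 'down': 0.5}
```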

It’s the simplest explanation (in terms of Kolmogorov complexity).

Do you have proof of this? I see this stated a lot, but I don't see how you could know this when certain aspects of MWI theory (like how you actually get the Born probabilities) are unresolved.

The basic premise of this post is wrong, based on the strawman that an empiricist/scientist would only look at a single piece of information. You have the empiricist and the scientist just looking at the returns on investment of Bankman's scheme, and extrapolating blindly from there.

But an actual empiricist looks at all the empirical evidence. They can look at the average rate of return of a typical investment, noting that this one is unusually high. They can learn how the economy works and figure out if there are any plausible mechanisms for this kind of ec...

I think some of the quotes you put forward are defensible, even though I disagree with their conclusions.

Like, Stuart Russell was writing an opinion piece in a newspaper for the general public. Saying AGI is "sort of like" meeting an alien species seems like a reasonable way to communicate his views, while making it clear that the analogy should not be treated as one-to-one.

Similarly, Rob Wiblin is using the analogy to get across one specific point: that future AI may be very different from current AI. He also disclaims with the phras...

Right, and when you do wake up, before the machine is opened and the planet you are on is revealed, you would expect to see yourself in planet A 50% of the time in scenario 1, and 33% of the time in scenario 2?

What's confusing me is with scenario 2: say you are actually on planet A, but you don't know it yet. Before the split, it's the same as scenario 1, so you should expect to be 50% on planet A. But after the split, which occurs to a different copy ages away, you should expect to be 33% on planet A. When does the probability change? Or am I confusing something here?

While Wikipedia can definitely be improved, I think it's still pretty damn good.

I really cannot think of a better website on the internet, in terms of informativeness and accuracy. I suppose something like Khan Academy might be better for specific topics, but it doesn't have the breadth that Wikipedia does. Even Google search appears to be getting worse and worse these days.

Okay, I'm gonna take my skeptical shot at the argument, I hope you don't mind!

an AI that is *better than people at achieving arbitrary goals in the real world* would be a very scary thing, because whatever the AI tried to do would then actually happen

It's not true that whatever the AI tried to do would happen. What if an AI wanted to travel faster than the speed of light, or prove that 2+2=5, or destroy the sun within 1 second of being turned on?

You can't just say "arbitrary goals", you have to actually explain what goals there are that would b...

If Ilya was willing to cooperate, the board could fire Altman, with the Thanksgiving break available to aid the transition, and hope for the best.

Alternatively, the board could choose once again not to fire Altman, watch as Altman finished taking control of OpenAI and turned it into a personal empire, and hope this turns out well for the world.

Could they not have also gone with option 3: fill the vacant board seats with sympathetic new members, thus thwarting Altman's power play internally?

My presumption is that doing this while leaving Altman in place as CEO risks Altman engaging in hostile action, and it represents a vote of no confidence in any case. It isn't a stable option. But I'd have gamed it out?

Alternative framing: The board went after Altman with no public evidence of any wrongdoing. This appears to have backfired. If they had proof of significant malfeasance, and presented it to their employees, the story may have gone a lot differently.

Applying this to the AGI analogy would be a statement that you can't shut down an AGI without proof that it is faulty or malevolent in some way. I don't fully agree, though: I think if a similar AGI design had previously committed a mass murder, people would be more willing to hit the off switch early.

Civilization involves both nice and mean actions. It involves people being both nice and mean to each other.

From this perspective, if you care about Civilization, optimizing solely for niceness is as meaningless and ineffective as optimizing for meanness.

Who said anything about optimizing solely for niceness? Everyone has many different values that sometimes conflict with each other, that doesn't mean that "niceness" shouldn't be one of them. I value "not killing people", but I don't optimize solely for that: I would still kill Mega-Hitler if I had t...

Would you rather live in a society that valued “niceness, community and civilization”, or one that valued “meanness, community and civilization”? I don’t think it’s a tough choice.

This is an awful straw man. Compare instead:

- niceness, community, and civilization

- community and civilization

Having seen what "niceness" entails, I'll opt for (2), which doesn't prioritize niceness or anti-niceness, and is niceness-agnostic.

Partially, but it is still true that Eliezer was critical of NNs at the time; see the comment on the post:

I'm no fan of neurons; this may be clearer from other posts.

Eliezer has never denied that neural nets can work (and he provides examples in that linked post of NNs working). Eliezer's principal objection was that NNs were inscrutable black boxes which would be insanely difficult to make safe enough to entrust humanity-level power to compared to systems designed to be more mathematically tractable from the start. (If I may quip: "The 'I', 'R', & 'S' in the acronym 'DL' stand for 'Interpretable, Reliable, and Safe'.")

This remains true - for all the good work on NN interpretability, assisted by the surprising leve...

"position" is nearly right. The more correct answer would be "position of one photon".

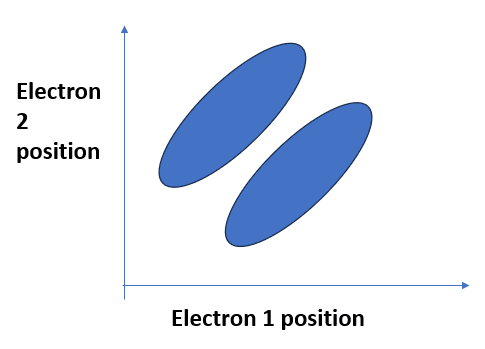

If you had two electrons, say, you would have to consider their joint configuration. For example, one possible wavefunction would look like the following, where the blobs represent high amplitude areas:

This is still only one dimensional: the two electrons are at different points along a line. I've entangled them, so if electron 1 is at position P, electron 2 can't be.

Now, try and point me to where electron 1 is on the graph above.

You see, I'm not graphin...
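A minimal numerical sketch of the point: for two particles on a line, the wavefunction is a function of both positions, so it lives on an (x1, x2) plane, and "where electron 1 is" is only recovered by summing over electron 2's coordinate. The grid, the Gaussian blob shapes, and the blob positions at ±2 are illustrative assumptions, and spin is ignored entirely:

```python
import numpy as np

# One spatial dimension, discretized. The joint wavefunction is defined on the
# (x1, x2) plane: one axis per electron, not one axis for "position".
x = np.linspace(-5.0, 5.0, 201)
X1, X2 = np.meshgrid(x, x, indexing="ij")  # axis 0 is x1, axis 1 is x2


def blob(cx1, cx2, width=0.5):
    """A Gaussian bump of amplitude centred at (x1, x2) = (cx1, cx2)."""
    return np.exp(-((X1 - cx1) ** 2 + (X2 - cx2) ** 2) / (2 * width**2))


# Two high-amplitude blobs: (electron 1 at -2, electron 2 at +2) and the swap.
# Nothing sits on the diagonal x1 == x2, so the two are never at the same point.
psi = blob(-2.0, 2.0) + blob(2.0, -2.0)
psi /= np.sqrt(np.sum(np.abs(psi) ** 2))  # normalize on the grid

prob = np.abs(psi) ** 2
# Marginal distribution for electron 1 alone: sum out electron 2's coordinate.
p_electron1 = prob.sum(axis=1)

print(f"P(electron 1 left of 0)      = {p_electron1[x < 0].sum():.3f}")
print(f"P(electrons within 1 unit)   = {prob[np.abs(X1 - X2) < 1.0].sum():.2e}")
```

The marginal for electron 1 is split evenly between the two blobs, even though each individual point on the plane describes both electrons at once.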

Nice graph!

But as a test, may I ask what you think the x-axis of the graph you drew is? I.e., what are the amplitudes attached to?

...I'm not claiming the conceptual boundaries I've drawn or terminology I've used in the diagram above are standard or objective or the most natural or anything like that. But I still think introducing probabilities and using terminology like "if you now put a detector in path A, it will find a photon with probability 0.5" is blurring these concepts together somewhat, in part by placing too much emphasis on the Born probabilit

Okay, let me break it down in terms of actual states, and this time let's add in the actual detection mechanism: say, an electron in a potential well. Say the detector is in the ground state, with energy E=0, and the absorption of a photon will bump it up to the next state, E=1. We will place this detector in path A, but no detector in path B.

At time t = 0, our toy wavefunction is:

1/sqrt2 |photon in path A, detector E=0> + 1/sqrt2 |photon in path B, detector E=0>

If the photon in A collides with the detector at time t = 1, then at time t = 2, ou...
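The toy model above can be written as a three-component state vector. The continuation of the derivation is cut off here, so the collision step below is an assumption for illustration: a photon in path A is fully absorbed and excites the detector, while a photon in path B passes untouched. The basis labels are hypothetical names for the states described in the text:

```python
import numpy as np

# Hypothetical three-state basis for the toy model:
#   0: |photon in path A, detector E=0>
#   1: |photon in path B, detector E=0>
#   2: |photon absorbed,  detector E=1>
labels = ["A, E=0", "B, E=0", "absorbed, E=1"]

# Wavefunction at t = 0: 1/sqrt2 |A, E=0> + 1/sqrt2 |B, E=0>.
psi_t0 = np.array([1, 1, 0], dtype=complex) / np.sqrt(2)

# Assumed collision dynamics: a permutation (hence unitary) that moves the
# "photon in A" amplitude into "detector excited" and leaves path B alone.
U = np.array([
    [0, 0, 1],
    [0, 1, 0],
    [1, 0, 0],
], dtype=complex)
assert np.allclose(U.conj().T @ U, np.eye(3))  # sanity check: U is unitary

psi_t2 = U @ psi_t0

# Born rule: probability that the detector is found excited (E=1).
p_detector_fires = abs(psi_t2[2]) ** 2
for label, amp in zip(labels, psi_t2):
    print(f"{label:15s} amplitude = {amp.real:+.3f}")
print(f"P(detector reads E=1) = {p_detector_fires:.2f}")  # 0.50
```

The detector becomes entangled with the photon's path, and the 0.5 comes from squaring the 1/sqrt2 amplitude attached to the excited-detector state.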

What part of "finding a photon" implies that the photon is a billiard ball? Wave-particle duality aside, a photon is a quantum of energy: the detector either finds that packet or it doesn't (or in many worlds, one branched detector finds it and the other branched detector doesn't).

I'm interested to hear more about how you interpret the "realness" of different branches. Say there is an electron in one of my pinky fingers that is in a superposition of spin up and spin down. Are there correspondingly two me's, one with the pinky electron up and one with the pinky electron down? Or is there a single me, described by the superposition of pinky electrons?

I am assuming you are referring to the many worlds interpretation of quantum mechanics, where superpositions extend up to the human level, and the alternative configurations correspond to real, physical worlds with different versions of you that see different results on the detector.

Which is puzzling, because then why would you object to "the detector finding a photon"? The whole point of the theory is that detectors and humans are treated the same way. In one world, the detector finds the photon, and then spits out a result, and then one You sees th...

I'm a little confused by what your objection is. I'm not trying to stake out an interpretation here, I'm describing the calculation process that allows you to make predictions about quantum systems. The ontology of the wavefunction is a matter of heated debate, I am undecided on it myself.

Would you object to the following modification:

...If you now put a detector in path A, it will find a photon with probability |1/sqrt2|² = 0.5, and same for path B. If you repeated this experiment a very large number of times, the results would converge to finding it

Apologies for the late reply, but thank you for your detailed response.

Responding to your objection to my passage, I disagree, but I may edit it slightly to be clearer.

I was simply trying to point out the empirical fact that if you put a detector in path A and a detector in path B, and repeat the experiment a bunch of times, you will find the photon in detector A 50% of the time, and the photon in detector B 50% of the time. If the amplitudes had different values, you would empirically find them in different proportions, as given by the squared...
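The empirical claim can be mimicked with a quick Monte Carlo run. Each simulated trial samples a single detection with Born-rule probabilities, so this illustrates what the squared amplitudes predict for long-run frequencies; it does not derive the rule. The function name and seed are arbitrary choices for the sketch:

```python
import random


def fraction_in_a(amp_a, amp_b, n_trials, seed=0):
    """Simulate repeated runs with detectors in both paths.

    Each run finds the photon in A with probability |amp_a|^2 / (|amp_a|^2 + |amp_b|^2),
    otherwise in B. Returns the observed fraction of runs that fired detector A.
    """
    p_a = abs(amp_a) ** 2 / (abs(amp_a) ** 2 + abs(amp_b) ** 2)
    rng = random.Random(seed)
    hits_a = sum(rng.random() < p_a for _ in range(n_trials))
    return hits_a / n_trials


# Equal amplitudes: the observed fraction should settle near 0.5 as n grows.
for n in (10, 1_000, 100_000):
    print(n, fraction_in_a(1 / 2 ** 0.5, 1 / 2 ** 0.5, n))

# Unequal amplitudes (0.5 and sqrt(0.75)): the split follows the squared amplitudes.
print(fraction_in_a(0.5, 0.75 ** 0.5, 100_000))  # close to 0.25
```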

Bayes can judge you now: your analysis is half-arsed, which is not a good look when discussing a matter as serious as this.

All you’ve done is provide one misleading statistic. The base rate of experiencing psychosis may be 1-3%, but the base rate of psychotic disorders is much lower, at 0.25% or so.

But the most important factor is one that is very hard to estimate, which is what percentage of people with psychosis manifest that psychosis as false memories of being groped by a sibling. If the psychosis had involved seeing space aliens, we would...

At no point did I ever claim that this was a conclusive debunking of AI risk as a whole, only an investigation into one specific method proposed by Yudkowsky as an AI death dealer.

In my post I have explained what DMS is, why it was proposed as a technology, how far along the research went, the technical challenges faced in its construction, some observations of how nanotech research works, the current state of nanotech research, what near-term speedups can be expected from machine learning, and given my own best guess on whether an AGI could pull off inve...

Note that the nearer side feeling colder than the farther side is completely possible.

The key is that they didn’t check the temperatures of each side with a thermometer, but with their hands. And your hands don’t feel temperature directly, they feel heat conduction. If you have a cake and a baking tin that are the same temperature, the metal will feel hotter because it is more conductive.

If I wanted to achieve the effect described here without flipping trickery, I might make the side near the radiator out of a very nonconductive plastic (painted t...
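A rough way to quantify "your hands feel heat conduction": when two bodies touch, the interface settles at a temperature weighted by each body's thermal effusivity, e = sqrt(k·ρ·c), and that interface temperature is roughly what the skin senses. This is the standard semi-infinite-body contact result. All effusivity values and temperatures below are rough assumptions chosen for illustration, and the radiator numbers are a hypothetical setup, not the design in the truncated comment:

```python
def contact_temperature(t_skin, e_skin, t_obj, e_obj):
    """Interface temperature when two semi-infinite bodies touch.

    Each temperature is weighted by its body's thermal effusivity
    e = sqrt(k * rho * c), in W*s^0.5/(m^2*K). A high-effusivity body
    (e.g. metal) drags the interface toward its own temperature.
    """
    return (e_skin * t_skin + e_obj * t_obj) / (e_skin + e_obj)


# Rough, assumed effusivities (order of magnitude only).
E_SKIN = 1_000
E_ALUMINIUM = 24_000
E_CAKE = 450   # porous, low-conductivity food; a guess
E_FOAM = 30    # a foamed, very nonconductive plastic; a guess

SKIN_C = 33.0

# Cake and tin both at 60 C: the tin feels much hotter.
print(contact_temperature(SKIN_C, E_SKIN, 60.0, E_ALUMINIUM))  # about 58.9
print(contact_temperature(SKIN_C, E_SKIN, 60.0, E_CAKE))       # about 41.4

# Hypothetical radiator setup: insulating near side at 40 C, metal far side at 36 C.
near = contact_temperature(SKIN_C, E_SKIN, 40.0, E_FOAM)       # about 33.2
far = contact_temperature(SKIN_C, E_SKIN, 36.0, E_ALUMINIUM)   # about 35.9
print(near < far)  # True: the hotter side feels cooler to the touch
```

The trick only works this way round when both sides are above skin temperature; below skin temperature, the metal side would instead feel colder.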

I think the analysis for "bomb" is missing something.

This is a scenario where the predictor is doing their best not to kill you: if they think you'll pick left they pick right, if they think you'll pick right they'll pick left.

The CDT strategy is to pick whatever box doesn't have a bomb in it. So if the player is a perfect CDTer, the predictor is 100% guaranteed to be correct in their pick. The predictor actually gets to pick whether the player loses 100 bucks or not. If the predictor is nice, the CDTer gets to walk away without paying anything and a 0% chance of death.
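The self-fulfilling structure can be enumerated directly. This sketch assumes the standard statement of the Bomb problem: Left is free but contains a bomb if the predictor predicted Right, while Right is always safe but costs $100. The function names are hypothetical:

```python
def box_contents(prediction):
    """Assumed standard setup: the predictor arms Left only if it predicted Right."""
    return {"Left": prediction == "Right", "Right": False}  # True means a bomb


def cdt_choice(bombs):
    """A CDT player sees the already-fixed boxes and takes the cheapest safe one."""
    price = {"Left": 0, "Right": 100}
    safe = [box for box, bomb in bombs.items() if not bomb]
    return min(safe, key=price.get)


for prediction in ("Left", "Right"):
    bombs = box_contents(prediction)
    choice = cdt_choice(bombs)
    paid = 0 if choice == "Left" else 100
    print(
        f"predicts {prediction}: player takes {choice}, "
        f"prediction correct={choice == prediction}, pays ${paid}, dies={bombs[choice]}"
    )
```

Both predictions come true against a pure CDT player, and neither kills them; the only thing the predictor's choice decides is whether the player pays $100.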

Eliezer's response is not comprehensive. He responds to two points (a reasonable choice), but he responds badly: first with a strawman, second with an argument that is probably wrong.

The first point he argues is about brain efficiency, and is not even a point made by the OP. The OP was simply citing someone else, to show that "Eliezer is overconfident about my area of expertise" is an extremely common opinion. It feels very weird to attack the OP over citing somebody else's opinion.

Regardless, Eliezer handles this badly anyway. Eliezer giv...

I would be interested in your actual defense of the first two sections. It seems the OP went to great lengths to explain exactly where Eliezer went wrong, and contrasted Eliezer's beliefs with citations to actual, respected domain level experts.

I also do not understand your objection to the term "gross overconfidence". I think the evidence provided by the OP is completely sufficient to substantiate this claim. In all three cases (and many more I can think of that are not mentioned here), Eliezer has stated things that are probably incorrect, and then dismi...

...Most disagreements of note—most disagreements people care about—don't behave like the concert date or physics problem examples: people are very attached to "their own" answers. Sometimes, with extended argument, it's possible to get someone to change their mind or admit that the other party might be right, but with nowhere near the ease of agreeing on (probabilities of) the date of an event or the result of a calculation—from which we can infer that, in most disagreements people care about, there is "something else" going on besides both parties just wanti

This seems like an epistemically dangerous way of describing the situation "these people think that AI x-risk arguments are incorrect, and are willing to argue for that position". I have never seen anyone claim that Andreessen and LeCun do not truly believe their arguments. I also legitimately think that x-risk arguments are incorrect; am I conducting an "infowar"? Adopting this viewpoint seems like it would blind you to legitimate arguments from the other side.

That's not to say you can't point out errors in argumentations, or point out ho...

I'll put a commensurate amount of effort into why you should talk about these things.

How an AI could persuade you to talk it out of a box/How an AI could become an agent

You should keep talking about this because if it is possible to "box" an AI, or keep it relegated to "tool" status, then it might be possible to use such an AI to combat unboxed, rogue AIs. For example, give it a snapshot of the internet from a day ago, and ask it to find the physical location of rogue AI servers, which you promptly bomb.

How an AI could get ahold of, or create,...

Hey, thanks for the kind response! I agree that this analysis is mostly focused on arguing against the “imminent certain doom” model of AI risk, and that longer term dynamics are much harder to predict. I think I’ll jump straight to addressing your core point here:

...Something smarter than you will wind up doing whatever it wants. If it wants something even a little different than you want, you're not going to get your way. If it doesn't care about you even a little, and it continues to become more capable faster than you do, you'll cease being useful and wil

I do agree that trying to hack the password is a smarter method for the AI to try. I was simply showing an example of a task that an AI would want to do, but be unable to due to computational intractability.

I chose the example of Yudkowsky's plan for my analysis because he has described it as his "lower bound" plan. After spending two decades on AI safety, talking to all the most brilliant minds in the field, this is apparently what he thinks the most convincing plan for AI takeover is. If I believe this plan is intractable (and I very much believe i...
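To put a number on "computationally intractable" for the password example, assume the task is exhaustive search over a randomly chosen password. The alphabet (95 printable ASCII characters) and the attacker speed of 10^12 guesses per second are hypothetical parameters for this sketch, not figures from the discussion:

```python
def brute_force_years(alphabet_size, length, guesses_per_second):
    """Worst-case years to try every password of a given length over an alphabet."""
    keyspace = alphabet_size ** length
    seconds = keyspace / guesses_per_second
    return seconds / (365.25 * 24 * 3600)


# Hypothetical attacker: 10^12 guesses per second against 95 printable ASCII symbols.
for length in (8, 12, 16, 20):
    print(length, f"{brute_force_years(95, length, 1e12):.3g} years")
```

Each extra character multiplies the work by 95, so raw speed buys very little: going from 8 to 20 characters moves the search from under an hour to vastly longer than the age of the universe.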

In a literal sense, of course it doesn't invalidate it. It just proves that the mathematical limit of accuracy was higher than we thought it was for the particular problem of protein folding. In general, you should not expect two different problems in two different domains to have the same difficulty, without a good reason to (like that they're solving the same equation on the same scale). Note that Alphafold is extremely extremely impressive, but by no means perfect. We're talking accuracies of 90%, not 99.9%, similar to DFT. It is an open question ...

I appreciate the effort of this writeup! I think it helps clarify a bit of my thoughts on the subject.

I was trying to say “maybe it’s simpler, or maybe it’s comparably simple, I dunno, I haven’t thought about it very hard”. I think that’s what Yudkowsky was claiming as well. I believe that Yudkowsky would also endorse the stronger claim that GR is simpler—he talks about that in Einstein’s Arrogance. (It’s fine and normal for someone to make a weaker claim when they also happen to believe a stronger claim.)

So, on thinking about it again, I think it is...

Indeed! Deriving physics requires a number of different experiments specialized to the discovery of each component. I could see how a spectrograph plus an analysis of the bending of light could get you a guess that light is quantised via the ultraviolet catastrophe, although I'm doubtful this is the only way to get the equation describing the black body curve. I think you'd need more information, like the energy transitions of atoms or Maxwell's equations, to get all the way to quantum mechanics proper, though. I don't think this would get you to gravity either, as quantum physics and general relativity are famously incompatible on a fundamental level.

In the post, I show you both a grass and an apple that did not require Newtonian gravity or general relativity to exist. Why exactly are nuclear reactions and organic chemistry necessary for a clump of red things to stick together, or a clump of green things to stick together?

When it comes to the "level of simulation", how exactly is the AI meant to know when it is in the "base level"? We don't know that about our universe. For all the computer knows, its simulation is the universe.

I find it very hard to believe that gen rel is a simpler explanation of “F = GmM/r²” than Newtonian physics is. This is a bolder claim than Yudkowsky put forward; you can see from the passage that he thinks Newton would win out on this front. I would be genuinely interested if you could find evidence in favour of this claim.

Newtonian gravity just requires way, way fewer symbols to write out than the Einstein field equations. It’s way easier to compute and does not require assumptions like curved spacetime.

If you were building a simul...
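As a small illustration of "way easier to compute": in Newtonian gravity, the force on an apple at Earth's surface is one line of arithmetic. The constants are standard rounded values, and the 0.1 kg apple mass is an assumption for the example:

```python
G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24   # Earth's mass, kg
R_EARTH = 6.371e6    # Earth's mean radius, m


def newton_force(m1, m2, r):
    """Newtonian gravity: F = G * m1 * m2 / r**2, in newtons."""
    return G * m1 * m2 / r**2


# A 0.1 kg apple at Earth's surface.
print(f"{newton_force(0.1, M_EARTH, R_EARTH):.3f} N")  # 0.982 N
```

Getting the same number from general relativity means choosing a metric (e.g. Schwarzschild) and taking the weak-field limit, which is more machinery for an answer that agrees with this one to many decimal places.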

This is a bolder claim than Yudkowsky put forward; you can see from the passage that he thinks Newton would win out on this front.

I was trying to say “maybe it’s simpler, or maybe it’s comparably simple, I dunno, I haven’t thought about it very hard”. I think that’s what Yudkowsky was claiming as well. I believe that Yudkowsky would also endorse the stronger claim that GR is simpler—he talks about that in Einstein’s Arrogance. (It’s fine and normal for someone to make a weaker claim when they also happen to believe a stronger claim.)

...If you were building a

I don't think you should give a large penalty to inverse square compared to other functions. It's pretty natural once you understand that reality has three dimensions.

This is a fair point. 1/r² would definitely be in the "worth considering" category. However, where is the evidence that the gravitational force is varying with distance at all? This is certainly impossible to observe in three frames.
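Why three frames cannot reveal variation with distance: three equally spaced positions give exactly one second difference, i.e. one acceleration estimate, and any three points are fit exactly by constant acceleration. A minimal sketch with hypothetical frames of a falling apple, 1/30 s apart:

```python
def finite_difference_acceleration(positions, dt):
    """Acceleration estimates from equally spaced positions (central second differences)."""
    return [
        (positions[i + 1] - 2 * positions[i] + positions[i - 1]) / dt**2
        for i in range(1, len(positions) - 1)
    ]


# Three hypothetical frames of a falling apple (heights in metres).
dt = 1 / 30
heights = [2.0, 2.0 - 0.5 * 9.8 * dt**2, 2.0 - 0.5 * 9.8 * (2 * dt) ** 2]

accelerations = finite_difference_acceleration(heights, dt)
print(accelerations)  # a single value, about -9.8
```

With only one acceleration value, there is nothing to compare it against, so whether it depends on distance (as 1/r² or anything else) is completely unconstrained by the data.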

the information about electromagnetism contained in the apple

if you have the apple's spectrum

What information? What spectrum? The color information received...

People can generally tell when you're friends with them for instrumental reasons rather than because you care about them or genuinely value their company. If they don't at first, they will eventually, and in general, people don't like being treated as tools. Trying to "optimise" your friend group for something like interestingness is just shooting yourself in the foot, and you will miss out on genuine and beautiful connections.

You can hook a chess-playing network up to a vision network and have it play chess using images of boards - it's not difficult.

I think you have to be careful here. In this setup, you have two different AIs: one vision network that classifies images, and the chess AI that plays chess, plus, presumably, connecting code that translates the output of the vision network into a format suitable for the chess player.

I think what Sarah is referring to is that if you tried to directly hook up the images to the chess engine, it wouldn't be able to figure it out, because reading images is not something it's trained to do.
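The "connecting code" is where the translation between the two networks actually happens. A minimal sketch, assuming the (hypothetical) vision model outputs one piece label per square: the glue converts that 8x8 grid into the piece-placement field of FEN, a standard text notation that chess engines accept. A full FEN string also needs side to move, castling rights, and so on, which this sketch omits:

```python
def board_to_fen_placement(squares):
    """Convert an 8x8 grid of piece labels into the piece-placement field of FEN.

    `squares` is a list of 8 rows, from rank 8 down to rank 1. Each entry is a
    FEN piece letter ("K", "q", "p", ...) or None for an empty square. In the
    setup discussed above, these labels would come from the vision network.
    """
    ranks = []
    for row in squares:
        fen_row, empty = "", 0
        for piece in row:
            if piece is None:
                empty += 1  # count consecutive empty squares
            else:
                if empty:
                    fen_row += str(empty)
                    empty = 0
                fen_row += piece
        if empty:
            fen_row += str(empty)
        ranks.append(fen_row)
    return "/".join(ranks)


start = [
    list("rnbqkbnr"),
    ["p"] * 8,
    *[[None] * 8 for _ in range(4)],
    ["P"] * 8,
    list("RNBQKBNR"),
]
print(board_to_fen_placement(start))  # rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR
```

Neither network learns this mapping; a human wrote it, which is why feeding raw images straight into the chess engine would not work.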

One thing that confuses me about the evolution metaphors is this:

Humans managed to evolve a sense of morality from what seems like fairly weak evolutionary pressure. I.e., it generally helps form larger groups that survive better, which is good, but there's also a constant advantage to being selfish and defecting. Amoral people can still accrue power and wealth and reproduce. Compare this to something like the pressure not to touch fire, which is much more acute.

The pressure to be "moral" of an AI seems significantly more powerful than that applied to hum...

Sorry, I should have specified: I am very aware of Eliezer's beliefs. I think his policy prescriptions are reasonable, if his beliefs are true. I just don't think his beliefs are true. Established AI experts have heard his arguments with serious consideration and an open mind, and still disagree with them. This is evidence that they are probably flawed, and I don't find it particularly hard to think of potential flaws in his arguments.

The type of global ban envisioned by Yudkowsky really only makes sense if you agree with his premises. For example, se...

The type of global ban envisioned by Yudkowsky really only makes sense if you agree with his premises

I think Eliezer's current attitude is actually much closer to how an ordinary person thinks or would think about the problem. Most people don't feel a driving need to create a potential rival to the human race in the first place! It's only those seduced by the siren call of technology, or who are trying to engage with the harsh realities of political and economic power, who think we just have to keep gambling in our current way. Any politician who seriously...

Isn't Stuart Russell an AI doomer as well, separated from Eliezer only by nuances?

I'm only going off of his book and this article, but I think they differ in far more than nuances. Stuart is saying "I don't want my field of research destroyed", while Eliezer is suggesting a global treaty to airstrike all GPU clusters, including in nuclear-armed nations. Stuart seems to think the control problem is solvable if enough effort is put into it.

Eliezer's beliefs are very extreme, and almost every accomplished expert disagrees with him. I'm not saying you should ...

I must admit as an outsider I am somewhat confused as to why Eliezer's opinion is given so much weight, relative to all the other serious experts that are looking into AI problems. I understand why this was the case a decade ago, when not many people were seriously considering the issues, but now there are AI heavyweights like Stuart Russell on the case, whose expertise and knowledge of AI is greater than Eliezer's, proven by actual accomplishments in the field. This is not to say Eliezer doesn't have achievements to his belt, but I find his academic work lackluster when compared to his skills in awareness raising, movement building, and persuasive writing.

If that were the case, then enforcing the policy would not "run some risk of nuclear exchange". I suggest everyone read the passage again. He's advocating for bombing datacentres, even if they are in Russia or China.

OK, I guess I was projecting how I would imagine such a scenario working, i.e. through the UN Security Council, thanks to a consensus among the big powers. The Nuclear Non-Proliferation Treaty seems to be the main precedent, except that the NNPT allows for the permanent members to keep their nuclear weapons for now, whereas an AGI Prevention Treaty would have to include a compact among the enforcing powers to not develop AGI themselves.

UN engagement with the topic of AI seems slender, and the idea that AI is a threat to the survival of the human race...

A lot of the defenses here seem to be relying on the fact that one of the accused individuals was banned from several rationalist communities a long time ago. While this definitely should have been included in the article, I think the overall impression they are giving is misleading.

In 2020, the individual was invited to give a talk for an unofficial SSC online meetup (Scott Alexander was not involved, and does ban the guy from his events). The talk was announced on LessWrong with zero pushback, and went ahead.

Here is a comment from Anna Salamo...

I personally think the current relationship the community has to Michael feels about right in terms of distance.

I also want to be very clear that I have not investigated the accusations against Michael and don't currently trust them hugely for a bunch of reasons, though they seem credible enough that I would totally investigate them if I thought that Michael would pose a risk to more people in the community if the accusations were true.

As it is, the current level of distance I don't see it as hugely my, or the rationality community's, responsibility to investigate them though if I had more time and was less crunched, I might.

List of Lethalities is not by any means a "one-stop shop". If you don't agree with Eliezer on 90% of the relevant issues, it's completely unconvincing. For example, in that article he takes as an assumption that an AGI will be omnipotent on a godlike level, and that it will default to murderism.