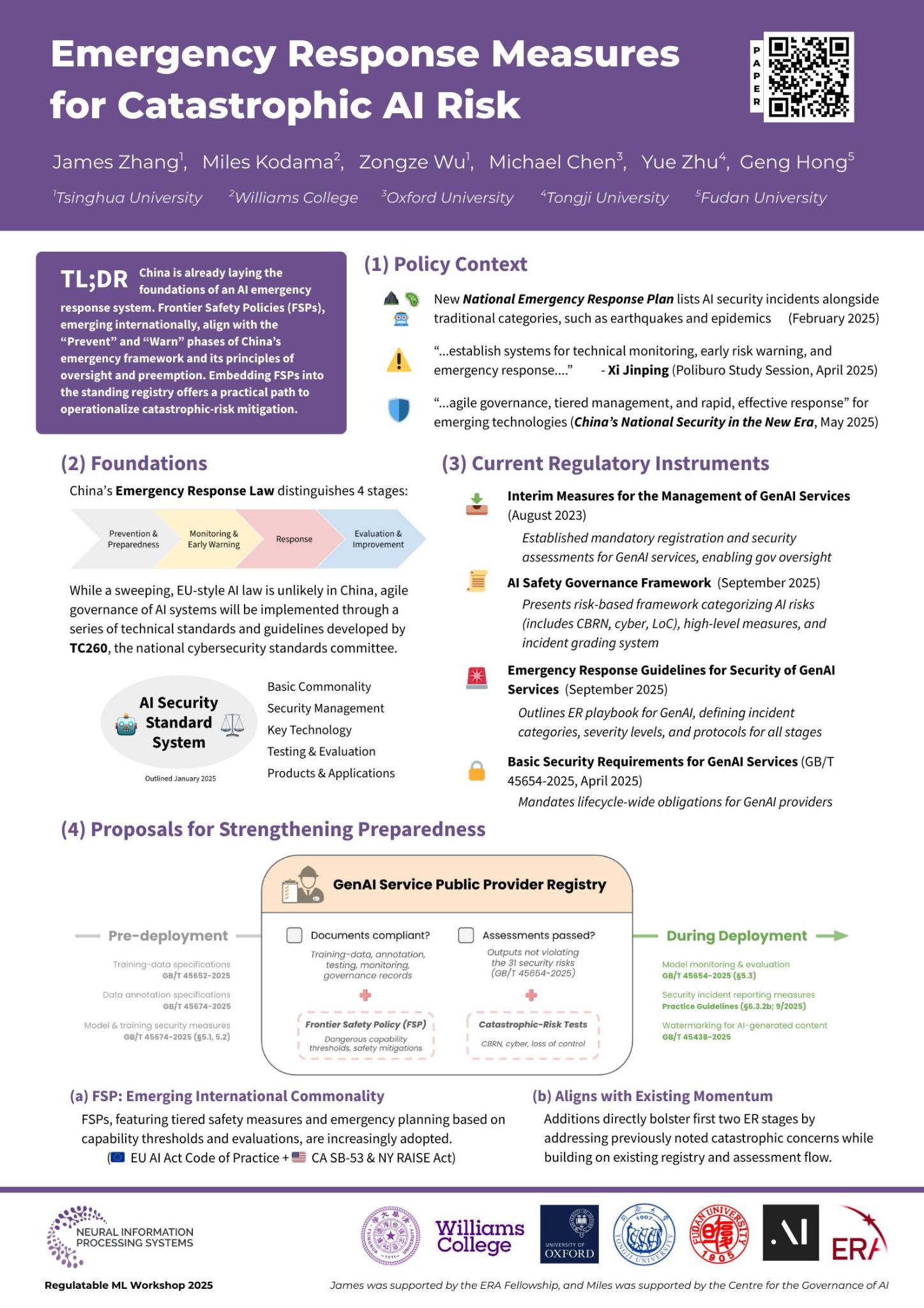

Emergency Response Measures for Catastrophic AI Risk

I have written a paper on Chinese domestic AI regulation with coauthors James Zhang, Zongze Wu, Michael Chen, Yue Zhu, and Geng Hong. It was presented recently at the NeurIPS 2025 Workshop on Regulatable ML, and it can be found on arXiv and SSRN. Here I'll explain what I take to...