So, it seems AI discourse on X / Twitter is getting polarised. This is bad. Especially bad is how some engage in deliberate weaponization of discourse for political ends.

At the same time, I observe: AI Twitter is still a small space. There are often important posts that have only ~100 likes, ~10-100 comments, and maybe ~10-30 likes on the top comments. Moreover, it seems to me that the few sane comments, when they do appear, do get upvoted.

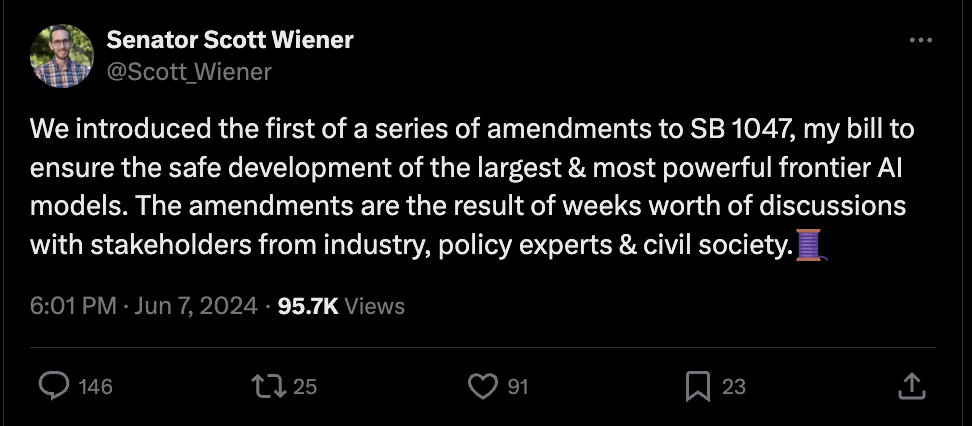

This is... crazy! Consider this thread:

A piece of legislation is being discussed, with major ramifications for regulation of frontier models, and... the quality of discourse hinges on whether 5-10 random folks show up and say some sensible stuff on Twitter!?

It took me a while to see these things. I think I had a cached view of "political discourse is hopeless, the masses of trolls are too big for anything to matter, unless you've got some specialised lever or run one of these platforms".

I now think I was wrong.

Just like I was wrong for many years about the feasibility of getting public and regulatory support for taking AI risk seriously.

This begets the following hypothesis: AI discourse might currently be small enough that we could basically just brute force raise the sanity waterline. No galaxy-brained stuff. Just a flood of folks making... reasonable arguments.

It's the dumbest possible plan: let's improve AI discourse by going to places with bad discourse and making good arguments.

I recognise this is a pretty strange view, and it does counter a lot of priors I've built up hanging around LessWrong for the last couple of years. If it works, it's because of a surprising, contingent state of affairs. In a few months or years the numbers might shake out differently. But for the time being, the arbitrage is real.

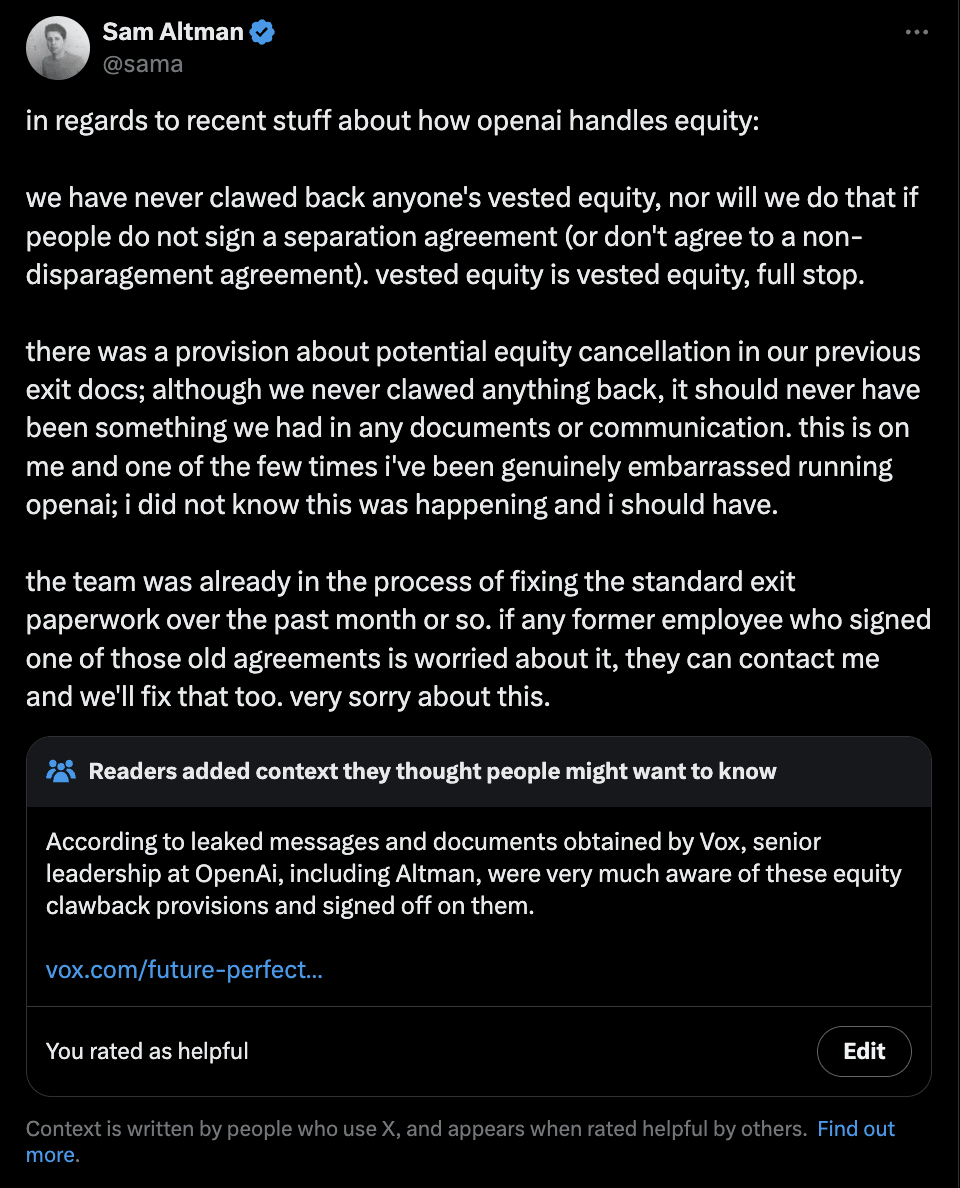

Furthermore, there's of course already a built-in feature, with beautiful mechanism design and strong buy-in from X leadership, for increasing the sanity waterline: Community Notes. It's a feature that allows users to add "notes" to tweets providing context, and then only shows those notes ~if they get upvoted by people who usually disagree.

Yet... outside of massive news like the OpenAI NDA scandal, Community Notes is barely being used for AI discourse. I'd guess this is for no more interesting a reason than that few people use Community Notes overall, multiplied by the small fraction of those who engage in AI discourse. Again, plausibly, the arbitrage is real.

If you think this sounds compelling, here are two easy ways that might just work to improve AI discourse:

- Make an account on X. When you see invalid or bad faith arguments on AI: reply with valid arguments. Upvote other such replies.

- Join Community Notes at this link. Start writing and rating notes. (You'll need to rate some notes before you're allowed to write your own.)

And, above all: it doesn't matter what conclusion you argue for, as long as you make valid arguments. Pursue asymmetric strategies, the sword that only cuts if your intention is true.

ok I meant something like "the number of people who could reach a lot of people (eg. roon's level, or even 10x fewer people than that) by tweeting only sensible arguments is small"

but I guess that doesn't invalidate what you're suggesting. if I understand correctly, you'd want LWers to just create a twitter account and debunk arguments by posting comments & occasionally doing community notes

that's a reasonable strategy, though the medium-effort version would still require like 100 people sometimes spending 30 minutes writing good comments (let's say 10 minutes a day on average). I agree that this could make a difference.

I guess the sheer volume of bad takes, or of people who like / retweet bad takes, is such that even in the positive case where you get like 100 people who commit to debunking arguments, this would maybe add 10 comments to the most viral tweets (which get ~100 comments, so 10%), and maybe 1-2 comments to the less popular tweets (but there are many more of them).

I think it's worth trying, and maybe there are some snowball / long-term effects to take into account. it's worth highlighting the cost of doing so as well (at least ~16h of productivity a day for 100 people doing it for 10m a day, given there are extra costs to just opening the app). it's also worth highlighting that most people who would click on bad takes are probably already polarized, and i'm not sure they would change their minds given good arguments. they would probably just reply negatively instead, because the true rejection is more about political orientation, priors about AI risk, or things like that.

but again, worth trying, especially the low-effort versions