I'm currently working as a contractor at Anthropic in order to get employee-level model access as part of a project I'm working on. The project is a model organism of scheming, where I demonstrate scheming arising somewhat naturally with Claude 3 Opus. So far, I’ve done almost all of this project at Redwood Research, but my access to Anthropic models will allow me to redo some of my experiments in better and simpler ways and will allow for some exciting additional experiments. I'm very grateful to Anthropic and the Alignment Stress-Testing team for providing this access and supporting this work. I expect that this access and the collaboration with various members of the Alignment Stress-Testing team (primarily Carson Denison and Evan Hubinger so far) will be quite helpful in finishing this project.

I think that this sort of arrangement, in which an outside researcher is able to get employee-level access at some AI lab while not being an employee (while still being subject to confidentiality obligations), is potentially a very good model for safety research, for a few reasons, including (but not limited to):

- For some safety research, it’s helpful to have model access in ways that la

Yay Anthropic. This is the first example I'm aware of of a lab sharing model access with external safety researchers to boost their research (like, not just for evals). I wish the labs did this more.

[Edit: OpenAI shared GPT-4 access with safety researchers including Rachel Freedman before release. OpenAI shared GPT-4 fine-tuning access with academic researchers including Jacob Steinhardt and Daniel Kang in 2023. Yay OpenAI. GPT-4 fine-tuning access is still not public; some widely-respected safety researchers I know recently were wishing for it, and were wishing they could disable content filters.]

I'd be surprised if this was employee-level access. I'm aware of a red-teaming program that gave early API access to specific versions of models, but not anything like employee-level.

It was a secretive program — it wasn’t advertised anywhere, and we had to sign an NDA about its existence (which we have since been released from). I got the impression that this was because OpenAI really wanted to keep the existence of GPT4 under wraps. Anyway, that means I don’t have any proof beyond my word.

(I'm a full-time employee at Anthropic.) It seems worth stating for the record that I'm not aware of any contract I've signed whose contents I'm not allowed to share. I also don't believe I've signed any non-disparagement agreements. Before joining Anthropic, I confirmed that I wouldn't be legally restricted from saying things like "I believe that Anthropic behaved recklessly by releasing [model]".

I think I could share the literal language in the contractor agreement I signed related to confidentiality, though I don't expect this is especially interesting as it is just a standard NDA from my understanding.

I do not have any non-disparagement, non-solicitation, or non-interference obligations.

I'm not currently going to share information about any other policies Anthropic might have related to confidentiality, though I am asking about what Anthropic's policy is on sharing information related to this.

I thought it would be helpful to post about my timelines and what the timelines of people in my professional circles (Redwood, METR, etc) tend to be.

Concretely, consider the outcome of: AI 10x’ing labor for AI R&D[1], measured by internal comments by credible people at labs that AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).
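One way to relate the two phrasings above is sketched below. It assumes (this is an assumption, not something stated here) that human and AI labor simply add, so that if AI supplies a fraction f of the quality-adjusted workforce, total labor is 1/(1 - f) times the human-only amount. The function name `labor_multiplier` is hypothetical.

```python
def labor_multiplier(ai_fraction: float) -> float:
    """Total labor relative to humans alone, if AI supplies `ai_fraction` of the total.

    Assumes labor contributions are additive (an illustrative simplification).
    """
    if not 0.0 <= ai_fraction < 1.0:
        raise ValueError("ai_fraction must be in [0, 1)")
    return 1.0 / (1.0 - ai_fraction)


# AI as 90% of the workforce corresponds to a 10x multiplier under this reading.
multiplier_at_90 = labor_multiplier(0.9)
print(round(multiplier_at_90, 6))
```

Under a different model of how AI and human labor combine, the two phrasings need not coincide.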

Here are my predictions for this outcome:

- 25th percentile: 2 years (Jan 2027)

- 50th percentile: 5 years (Jan 2030)

The views of other people (Buck, Beth Barnes, Nate Thomas, etc) are similar.

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

[1] Only including speedups due to R&D, not including mechanisms like synthetic data generation.

My timelines are now roughly similar on the object level (maybe a year slower for 25th and 1-2 years slower for 50th), and procedurally I also now defer a lot to Redwood and METR engineers. More discussion here: https://www.lesswrong.com/posts/K2D45BNxnZjdpSX2j/ai-timelines?commentId=hnrfbFCP7Hu6N6Lsp

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

@ryan_greenblatt can you say more about what you expect to happen from the period in-between "AI 10Xes AI R&D" and "AI takeover is very plausible?"

I'm particularly interested in getting a sense of what sorts of things will be visible to the USG and the public during this period. Would be curious for your takes on how much of this stays relatively private/internal (e.g., only a handful of well-connected SF people know how good the systems are) vs. obvious/public/visible (e.g., the majority of the media-consuming American public is aware of the fact that AI research has been mostly automated) or somewhere in-between (e.g., most DC tech policy staffers know this but most non-tech people are not aware.)

I don't feel very well informed and I haven't thought about it that much, but in short timelines (e.g. my 25th percentile): I expect that we know what's going on roughly within 6 months of it happening, but this isn't salient to the broader world. So, maybe the DC tech policy staffers know that the AI people think the situation is crazy, but maybe this isn't very salient to them. A 6 month delay could be pretty fatal even for us as things might progress very rapidly.

AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

I don't grok the "% of quality adjusted work force" metric. I grok the "as good as having your human employees run 10x faster" metric but it doesn't seem equivalent to me, so I recommend dropping the former and just using the latter.

Inference compute scaling might imply we first get fewer, smarter AIs.

Prior estimates imply that the compute used to train a future frontier model could also be used to run tens or hundreds of millions of human equivalents per year at the first time when AIs are capable enough to dominate top human experts at cognitive tasks[1] (examples here from Holden Karnofsky, here from Tom Davidson, and here from Lukas Finnveden). I think inference time compute scaling (if it worked) might invalidate this picture and might imply that you get far smaller numbers of human equivalents when you first get performance that dominates top human experts, at least in short timelines where compute scarcity might be important. Additionally, this implies that at the point when you have abundant AI labor which is capable enough to obsolete top human experts, you might also have access to substantially superhuman (but scarce) AI labor (and this could pose additional risks).
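The arithmetic behind this can be sketched roughly as follows. Every number here is a made-up placeholder chosen only to land in the "hundreds of millions" range mentioned above; none of them come from the linked estimates. The function name and parameters are hypothetical.

```python
def human_equivalents(inference_flops_per_s: float,
                      flops_per_s_per_equivalent: float,
                      inference_scaling_factor: float = 1.0) -> float:
    """Number of human equivalents a fixed compute budget can run at once.

    `inference_scaling_factor` is how many times more inference compute each
    equivalent needs in order to reach expert-dominating performance.
    """
    cost_per_equivalent = flops_per_s_per_equivalent * inference_scaling_factor
    return inference_flops_per_s / cost_per_equivalent


# Hypothetical budget and per-equivalent cost (illustrative only).
BUDGET = 1e21          # FLOP/s available for inference
BASE_COST = 1e13       # FLOP/s per human equivalent without extra inference compute

print(human_equivalents(BUDGET, BASE_COST))            # many cheap equivalents
print(human_equivalents(BUDGET, BASE_COST, 1000.0))    # far fewer, costlier equivalents
```

The point is only that the count scales inversely with per-equivalent inference cost, so a 1000x increase in inference spend per equivalent cuts the count by 1000x on the same budget.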

The point I make here might be obvious to many, but I thought it was worth making as I haven't seen this update from inference time compute widely discussed in public.[2]

However, note that if inference compute allows for trading off betw...

I agree comparative advantages can still be important, but your comment implied a key part of the picture is "models can't do some important thing". (E.g. you talked about "The frame is less accurate in worlds where AI is really good at some things and really bad at other things." but models can't be really bad at almost anything if they strictly dominate humans at basically everything.)

And I agree that at the point AIs are >5% better at everything they might also be 1000% better at some stuff.

I was just trying to point out that talking about the number of human equivalents (or better) can still be kinda fine as long as the model almost strictly dominates humans, as the model can just actually substitute everywhere. Like the number of human equivalents will vary by domain but at least this will be a lower bound.

Sometimes people think of "software-only singularity" as an important category of ways AI could go. A software-only singularity can roughly be defined as when you get increasing-returns growth (hyper-exponential) just via the mechanism of AIs increasing the labor input to AI capabilities software[1] R&D (i.e., keeping fixed the compute input to AI capabilities).

While the software-only singularity dynamic is an important part of my model, I often find it useful to more directly consider the outcome that software-only singularity might cause: the feasibility of takeover-capable AI without massive compute automation. That is, will the leading AI developer(s) be able to competitively develop AIs powerful enough to plausibly take over[2] without previously needing to use AI systems to massively (>10x) increase compute production[3]?

[This is by Ryan Greenblatt and Alex Mallen]

We care about whether the developers' AI greatly increases compute production because this would require heavy integration into the global economy in a way that relatively clearly indicates to the world that AI is transformative. Greatly increasing compute production would require building additional fabs whi...

I'm not sure if the definition of takeover-capable-AI (abbreviated as "TCAI" for the rest of this comment) in footnote 2 quite makes sense. I'm worried that too much of the action is in "if no other actors had access to powerful AI systems", and not that much action is in the exact capabilities of the "TCAI". In particular: Maybe we already have TCAI (by that definition) because if a frontier AI company or a US adversary was blessed with the assumption "no other actor will have access to powerful AI systems", they'd have a huge advantage over the rest of the world (as soon as they develop more powerful AI), plausibly implying that it'd be right to forecast a >25% chance of them successfully taking over if they were motivated to try.

And this seems somewhat hard to disentangle from stuff that is supposed to count according to footnote 2, especially: "Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would" and "via assisting the developers in a power grab, or via partnering with a US adversary". (Or maybe the scenario in 1st paragraph is supposed to be excluded because current AI isn't...

I put roughly 50% probability on feasibility of software-only singularity.[1]

(I'm probably going to be reinventing a bunch of the compute-centric takeoff model in slightly different ways below, but I think it's faster to partially reinvent than to dig up the material, and I probably do use a slightly different approach.)

My argument here will be a bit sloppy and might contain some errors. Sorry about this. I might be more careful in the future.

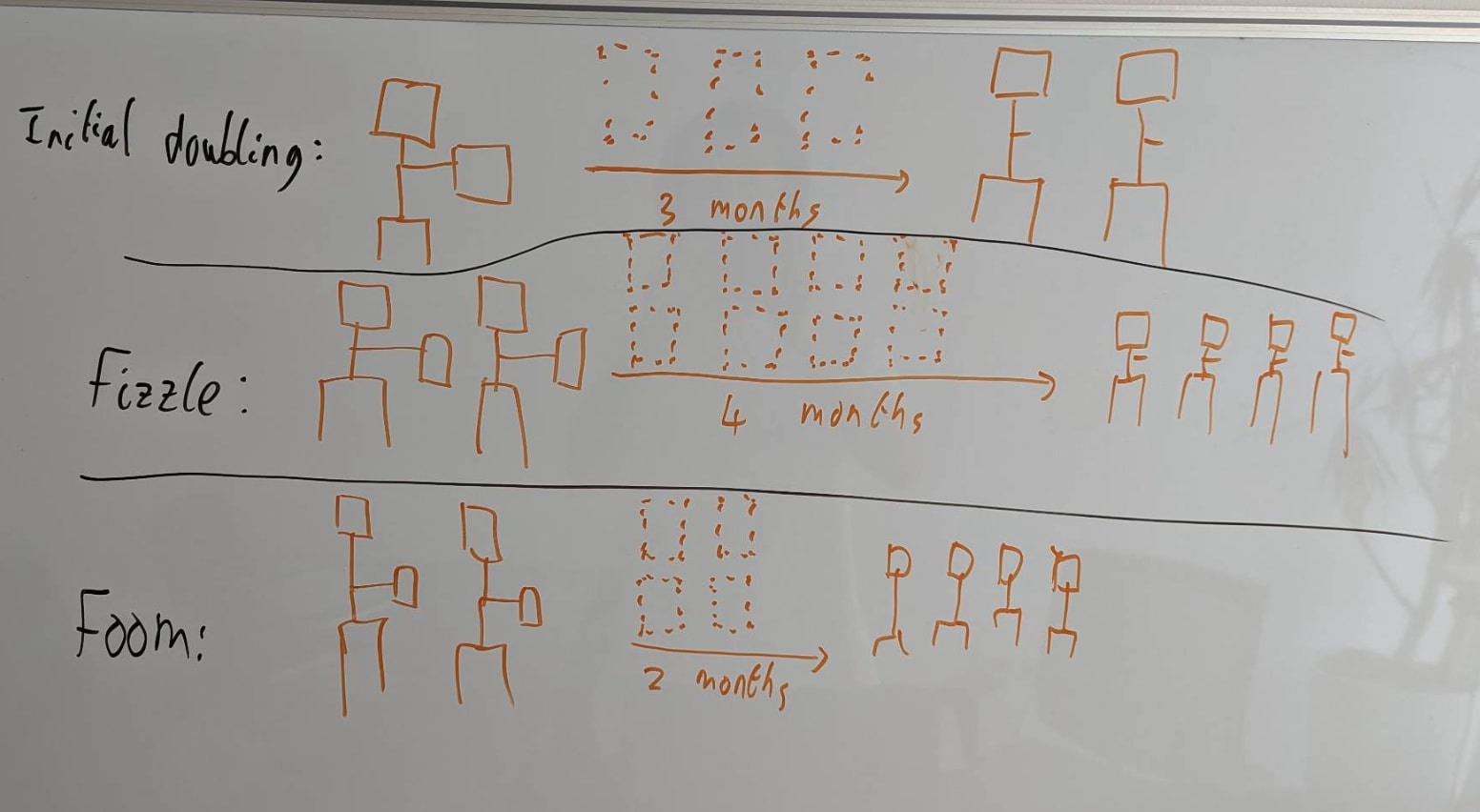

The key question for software-only singularity is: "When the rate of labor production is doubled (as in, as if your employees ran 2x faster[2]), does that more than double or less than double the rate of algorithmic progress? That is, algorithmic progress as measured by how fast we increase the labor production per FLOP/s (as in, the labor production from AI labor on a fixed compute base)." This is a very economics-style way of analyzing the situation, and I think this is a pretty reasonable first guess. Here's a diagram I've stolen from Tom's presentation on explosive growth illustrating this:

Basically, every time you double the AI labor supply, does the time until the next doubling (driven by algorithmic progress) increase (fizzle) or decre...

Hey Ryan! Thanks for writing this up -- I think this whole topic is important and interesting.

I was confused about how your analysis related to the Epoch paper, so I spent a while with Claude analyzing it. I did a re-analysis that finds similar results, but also finds (I think) some flaws in your rough estimate. (Keep in mind I'm not an expert myself, and I haven't closely read the Epoch paper, so I might well be making conceptual errors. I think the math is right though!)

I'll walk through my understanding of this stuff first, then compare to your post. I'll be going a little slowly (A) to help myself refresh myself via referencing this later, (B) to make it easy to call out mistakes, and (C) to hopefully make this legible to others who want to follow along.

Using Ryan's empirical estimates in the Epoch model

The Epoch model

The Epoch paper models growth with the following equation:

1. ,

where A = efficiency and E = research input. We want to consider worlds with a potential software takeoff, meaning that increases in AI efficiency directly feed into research input, which we model as . So the key consideration seems to be the ratio...

Here's my own estimate for this parameter:

Once AI has automated AI R&D, will software progress become faster or slower over time? This depends on the extent to which software improvements get harder to find as software improves – the steepness of the diminishing returns.

We can ask the following crucial empirical question:

**When (cumulative) cognitive research inputs double, how many times does software double?**

(In growth models of a software intelligence explosion, the answer to this empirical question is a parameter called r.)

If the answer is “< 1”, then software progress will slow down over time. If the answer is “1”, software progress will remain at the same exponential rate. If the answer is “>1”, software progress will speed up over time.
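To make the three cases concrete, here is a toy sketch. It assumes (these modelling choices are mine, not taken from the text above) that software S scales as cumulative research input I raised to the power r, and that once AI R&D is automated, research input accrues at a rate equal to S, so dI/dt = I^r. The function name is hypothetical.

```python
import math


def software_doubling_times(r: float, n_doublings: int = 5) -> list[float]:
    """Time taken by each successive software doubling in a toy feedback model.

    Assumed model: S = I**r and dI/dt = S, so the time for I to go from a to b
    is the integral of I**(-r) dI between a and b.
    """
    if r <= 0:
        raise ValueError("r must be positive")
    times = []
    for k in range(n_doublings):
        start = 2.0 ** (k / r)         # cumulative input when S = 2**k
        end = 2.0 ** ((k + 1) / r)     # cumulative input when S = 2**(k+1)
        if math.isclose(r, 1.0):
            elapsed = math.log(end / start)
        else:
            elapsed = (end ** (1 - r) - start ** (1 - r)) / (1 - r)
        times.append(elapsed)
    return times


for r in (0.5, 1.0, 2.0):
    print(r, [round(t, 3) for t in software_doubling_times(r)])
```

In this toy model, successive doublings take longer when r < 1, take constant time when r = 1, and take less and less time when r > 1.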

The bolded question can be studied empirically, by looking at how many times software has doubled each time the human researcher population has doubled.

(What does it mean for “software” to double? A simple way of thinking about this is that software doubles when you can run twice as many copies of your AI with the same compute. But software improvements don...

Here's a simple argument I'd be keen to get your thoughts on:

On the Possibility of a Tastularity

Research taste is the collection of skills including experiment ideation, literature review, experiment analysis, etc. that collectively determine how much you learn per experiment on average (perhaps alongside another factor accounting for inherent problem difficulty / domain difficulty, of course, and diminishing returns).

Human researchers seem to vary quite a bit in research taste--specifically, the difference between 90th percentile professional human researchers and the very best seems like maybe an order of magnitude? Depends on the field, etc. And the tails are heavy; there is no sign of the distribution bumping up against any limits.

Yet the causes of these differences are minor! Take the very best human researchers compared to the 90th percentile. They'll have almost the same brain size, almost the same amount of experience, almost the same genes, etc. in the grand scale of things.

This means we should assume that if the human population were massively bigger, e.g. trillions of times bigger, there would be humans whose brains don't look that different from the brains of...

DeepSeek's success isn't much of an update on a smaller US-China gap in short timelines because security was already a limiting factor

Some people seem to have updated towards a narrower US-China gap around the time of transformative AI if transformative AI is soon, due to recent releases from DeepSeek. However, since I expect frontier AI companies in the US will have inadequate security in short timelines and China will likely steal their models and algorithmic secrets, I don't consider the current success of China's domestic AI industry to be that much of an update. Furthermore, if DeepSeek or other Chinese companies were in the lead and didn't open-source their models, I expect the US would steal their models and algorithmic secrets. Consequently, I expect these actors to be roughly equal in short timelines, except in their available compute and potentially in how effectively they can utilize AI systems.

I do think that the Chinese AI industry looking more competitive makes security look somewhat less appealing (and especially less politically viable) and makes it look like their adaptation time to stolen models and/or algorithmic secrets will be shorter. Marginal improvements in...

A factor that I don't think people are really taking into account is: what happens if this situation goes hostile?

Having an ASI and x amount of compute for inference plus y amount of currently existing autonomous weapons platforms (e.g. drones)... If your competitor has the same ASI, but 10x the compute but 0.2x the drones... And you get in a fight... Who wins? What about 0.2x the compute and 10x the drones?

The funny thing about AI brinkmanship versus nuclear brinkmanship is that AI doesn't drain your economic resources, it accelerates your gains. A nuclear stockpile costs you in maintenance. An AI and compute 'stockpile' makes you more money and more tech (including better AI and compute). Thus, there is a lot more incentive to race harder on AI than on nuclear.

If I were in the defense dept of a world power right now, I'd be looking for ways to make money from civilian uses of drones... Maybe underwriting drone deliveries for mail. That gives you an excuse to build drones and drone factories while whistling innocently.

The 5 main reasons why control could fail

Here are the 5 main categories of reasons why control could fail (though various of them are often correlated).

[Note: this document has a moderate amount of overlap with various prior documents Redwood has released, but it seemed like a useful document nonetheless.]

- Insufficient (long horizon) sample efficiency: If your AI is scheming, you won’t be able to get this AI to achieve near maximal reward on average on (long horizon) tasks in a reasonably sample-efficient way. By near maximal, we just mean empirically achieving average reward which is quite close to the reward the AI would get if it was “actually trying” to get high reward (insofar as it is scheming to an extent that “trying” makes sense). (When we say average, we actually mean average excluding rare outliers. We can't (in general) control behavior on rare outliers using normal ML training.) For control, this causes issues in two places: it interferes with getting useful work out of AIs and being able to run accurate capability evaluations.

- Auditing failures: inability for humans to sufficiently assess actions. (Though note there can be solutions to these failures which don’t involve im

It seems slightly bad that you can vote on comments under the Quick Takes and Popular Comments sections without needing to click on the comment and without leaving the frontpage.

This is different than posts where in order to vote, you have to actually click on the post and leave the main page (which perhaps requires more activation energy).

I'm unsure, but I think this could result in a higher frequency of cases where Quick Takes and comments go viral in problematic ways relative to posts. (Based on my vague sense from the EA forum.)

This doesn't seem very important, but I thought it would be worth quickly noting.

About 1 year ago, I wrote up a ready-to-go plan for AI safety focused on current science (what we roughly know how to do right now). This is targeting reducing catastrophic risks from the point when we have transformatively powerful AIs (e.g. AIs similarly capable to humans).

I never finished this doc, and it is now considerably out of date relative to how I currently think about what should happen, but I still think it might be helpful to share.

Here is the doc. I don't particularly want to recommend people read this doc, but it is possible that someone will find it valuable to read.

I plan on trying to think through the best ready-to-go plan roughly once a year. Buck and I have recently started work on a similar effort. Maybe this time we'll actually put out an overall plan rather than just spinning off various docs.

This seems like a great activity, thank you for doing/sharing it. I disagree with the claim near the end that this seems better than Stop, and in general felt somewhat alarmed throughout at (what seemed to me like) some conflation/conceptual slippage between arguments that various strategies were tractable, and that they were meaningfully helpful. Even so, I feel happy that the world contains people sharing things like this; props.

I disagree with the claim near the end that this seems better than Stop

At the start of the doc, I say:

It’s plausible that the optimal approach for the AI lab is to delay training the model and wait for additional safety progress. However, we’ll assume the situation is roughly: there is a large amount of institutional will to implement this plan, but we can only tolerate so much delay. In practice, it’s unclear if there will be sufficient institutional will to faithfully implement this proposal.

Towards the end of the doc I say:

This plan requires quite a bit of institutional will, but it seems good to at least know of a concrete achievable ask to fight for other than “shut everything down”. I think careful implementation of this sort of plan is probably better than “shut everything down” for most AI labs, though I might advocate for slower scaling and a bunch of other changes on current margins.

Presumably, you're objecting to 'I think careful implementation of this sort of plan is probably better than “shut everything down” for most AI labs'.

My current view is something like:

- If there was broad, strong, and durable political will and buy in for heavily prioritizing AI tak

OpenAI seems to train on >1% chess

If you sample from davinci-002 at t=1 starting from "<|endoftext|>", 4% of the completions are chess games.

For babbage-002, we get 1%.

Probably this implies a reasonably high fraction of total tokens in typical OpenAI training datasets are chess games!

Despite chess being 4% of documents sampled, chess is probably less than 4% of overall tokens (Perhaps 1% of tokens are chess? Perhaps less?) Because chess consists of shorter documents than other types of documents, it's likely that chess is a higher fraction of documents than of tokens.

(To understand this, imagine that just the token "Hi" and nothing else was 1/2 of documents. This would still be a small fraction of tokens as most other documents are far longer than 1 token.)
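The document-share versus token-share distinction can be sketched as below. The average lengths used are hypothetical placeholders (I haven't measured them), so the printed numbers illustrate the mechanism and are not estimates of the real token share.

```python
def token_fraction(doc_fraction: float,
                   mean_tokens: float,
                   other_mean_tokens: float) -> float:
    """Share of all tokens in a category, given its share of documents
    and the mean document length inside and outside the category."""
    category_tokens = doc_fraction * mean_tokens
    other_tokens = (1.0 - doc_fraction) * other_mean_tokens
    return category_tokens / (category_tokens + other_tokens)


# The "Hi" thought experiment: half of documents are 1 token long,
# and the rest are assumed to average 1000 tokens.
print(token_fraction(0.5, 1, 1000))

# Chess at 4% of documents, with made-up mean lengths of 300 vs 1200 tokens.
print(token_fraction(0.04, 300, 1200))
```

With those made-up lengths, a category that is 4% of documents comes out at about 1% of tokens; different length assumptions would give a different share.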

(My title might be slightly clickbait because it's likely chess is >1% of documents, but might be <1% of tokens.)

It's also possible that these models were fine-tuned on a data mix with more chess while pretrain had less chess.

Appendix A.2 of the weak-to-strong generalization paper explicitly notes that GPT-4 was trained on at least some chess.

I think it might be worth quickly clarifying my views on activation addition and similar things (given various discussion about this). Note that my current views are somewhat different than some comments I've posted in various places (and my comments are somewhat incoherent overall), because I’ve updated based on talking to people about this over the last week.

This is quite in the weeds and I don’t expect that many people should read this.

- It seems like activation addition sometimes has a higher level of sample efficiency in steering model behavior compared with baseline training methods (e.g. normal LoRA finetuning). These comparisons seem most meaningful in straightforward head-to-head comparisons (where you use both methods in the most straightforward way). I think the strongest evidence for this is in Liu et al.

- Contrast pairs are a useful technique for variance reduction (to improve sample efficiency), but may not be that important (see Liu et al. again). It's relatively natural to capture this effect using activation vectors, but there is probably some nice way to incorporate this into SGD. Perhaps DPO does this? Perhaps there is something else?

- Activation addition wo

How people discuss the US national debt is an interesting case study of misleading usage of the wrong statistic. The key thing is that people discuss the raw debt amount and the rate at which that is increasing, but you ultimately care about the relationship between debt and US GDP (or US tax revenue).

People often talk about needing to balance the budget, but this isn't actually what you need to ensure[1]: to manage debt, it suffices to ensure that debt grows slower than US GDP. (And in fact, the US has ensured this for the past 4 years as debt/GDP has decreased since 2020.)

To be clear, it would probably be good to have a national debt to GDP ratio which is less than 50% rather than around 120%.
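A quick sketch of why slower-than-GDP debt growth is enough: the growth rates below are hypothetical round numbers, not forecasts, and the function name is made up for illustration. Debt keeps rising every year in the first scenario (so the budget is never balanced), yet the ratio still falls.

```python
def debt_to_gdp_path(initial_ratio: float,
                     debt_growth: float,
                     gdp_growth: float,
                     years: int) -> list[float]:
    """Debt/GDP ratio over time when debt and nominal GDP each grow at a fixed rate."""
    ratios = [initial_ratio]
    for _ in range(years):
        ratios.append(ratios[-1] * (1.0 + debt_growth) / (1.0 + gdp_growth))
    return ratios


# Start near 120%; debt grows 3%/year, nominal GDP grows 5%/year (illustrative).
falling = debt_to_gdp_path(1.20, 0.03, 0.05, 10)
# Same start, but with the growth rates swapped.
rising = debt_to_gdp_path(1.20, 0.05, 0.03, 10)

print([round(x, 3) for x in falling])
print([round(x, 3) for x in rising])
```

Note that both growth rates are nominal here, which is why unexpectedly high inflation can lower the ratio without any real growth.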

[1] There are some complexities with inflation because the US could inflate its way out of dollar-denominated debt and this probably isn't a good way to do things. But, with an independent central bank this isn't much of a concern.

The debt/GDP ratio drop since 2020 was, I think, substantially driven by inflation being higher than expected rather than by economic growth: debt is in nominal dollars, so 2% real GDP growth + e.g. 8% inflation means that nominal GDP goes up by roughly 10%, but we're now in a worse situation wrt future debt because interest rates are higher.

IMO, instrumental convergence is a terrible name for an extremely obvious thing.

The actual main issue is that AIs might want things with limited supply and then they would try to get these things which would result in them not going to humanity. E.g., AIs might want all cosmic resources, but we also want this stuff. Maybe this should be called AIs-might-want-limited-stuff-we-want.

(There is something else which is that even if the AI doesn't want limited stuff we want, we might end up in conflict due to failures of information or coordination. E.g., the AI almost entirely just wants to chill out in the desert and build crazy sculptures and it doesn't care about extreme levels of maximization (e.g. it doesn't want to use all resources to gain a higher probability of continuing to build crazy statues). But regardless, the AI decides to try taking over the world because it's worried that humanity would shut it down because it wouldn't have any way of credibly indicating that it just wants to chill out in the desert.)

(More generally, it's plausible that failures of trade/coordination result in a large number of humans dying in conflict with AIs even though both humans and AIs would prefer other approaches. But this isn't entirely obvious and it's plausible we could resolve this with better negotiation and precommitments. Of course, this isn't clearly the largest moral imperative from a longtermist perspective.)

One "9" of uptime reliability for dangerously powerful AIs might suffice (for the most dangerous and/or important applications)

Currently, AI labs try to ensure that APIs for accessing their best models have quite good reliability, e.g. >99.9% uptime. If we had to maintain such high levels of uptime reliability for future dangerously powerful AIs, then we'd need to ensure that our monitoring system has very few false alarms. That is, few cases in which humans monitoring these dangerously powerful AIs pause usage of the AIs pending further investigation. Ensuring this would make it harder to ensure that AIs are controlled. But, for the most important and dangerous usages of future powerful AIs (e.g. arbitrary R&D, and especially AI safety research), we can probably afford to have the powerful AIs down a moderate fraction of the time. For instance, it wouldn't be that costly if the AIs always took a randomly selected day of the week off from doing research: this would probably reduce productivity by not much more than 1 part in 7[1]. More generally, a moderate fraction of downtime like 10% probably isn't considerably worse than a 10% productivity hit and it seems likely that w...
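For a sense of scale, here is a small sketch converting uptime levels into downtime. The linear productivity model is an assumption on my part (output proportional to time running); the text above suggests the real cost could differ somewhat from this. Function names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24


def downtime_hours_per_year(uptime: float) -> float:
    """Hours per (non-leap) year a system is down at a given uptime fraction."""
    return (1.0 - uptime) * HOURS_PER_YEAR


def productivity_with_downtime(downtime_fraction: float) -> float:
    """Remaining productivity, assuming output scales linearly with running time."""
    return 1.0 - downtime_fraction


print(downtime_hours_per_year(0.999))        # three nines
print(downtime_hours_per_year(0.9))          # one nine
print(productivity_with_downtime(1 / 7))     # one randomly chosen day off per week
```

One nine allows roughly a hundred times more pause time per year than three nines, which is the slack available for investigating alarms.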

On Scott Alexander’s description of Representation Engineering in “The road to honest AI”

This is a response to Scott Alexander’s recent post “The road to honest AI”, in particular the part about the empirical results of representation engineering. So, when I say “you” in the context of this post that refers to Scott Alexander. I originally made this as a comment on substack, but I thought people on LW/AF might be interested.

TLDR: The Representation Engineering paper doesn’t demonstrate that the method they introduce adds much value on top of using linear probes (linear classifiers), which is an extremely well known method. That said, I think that the framing and the empirical method presented in the paper are still useful contributions.

I think your description of Representation Engineering considerably overstates the empirical contribution of representation engineering over existing methods. In particular, rather than comparing the method to looking for neurons with particular properties and using these neurons to determine what the model is "thinking" (which probably works poorly), I think the natural comparison is to training a linear classifier on the model’s internal activatio...
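For readers unfamiliar with the baseline, here is a minimal sketch of a linear probe on synthetic data. It uses a difference-of-means direction, which is one simple kind of linear classifier (logistic regression would be a more typical choice), and the "activations" are random vectors I generate for illustration, not activations from any real model. All function names are hypothetical.

```python
import random


def make_activations(n, dim, label, rng):
    """Synthetic 'activations': unit Gaussian noise shifted by +1 or -1 per coordinate."""
    shift = 1.0 if label == 1 else -1.0
    return [[rng.gauss(0.0, 1.0) + shift for _ in range(dim)] for _ in range(n)]


def mean_vector(rows):
    dim = len(rows[0])
    return [sum(row[i] for row in rows) / len(rows) for i in range(dim)]


def fit_probe(pos, neg):
    """Return (direction, threshold) for a difference-of-means linear probe."""
    mu_pos, mu_neg = mean_vector(pos), mean_vector(neg)
    direction = [p - q for p, q in zip(mu_pos, mu_neg)]
    midpoint = [(p + q) / 2 for p, q in zip(mu_pos, mu_neg)]
    threshold = sum(d * m for d, m in zip(direction, midpoint))
    return direction, threshold


def predict(direction, threshold, x):
    """Classify by which side of the threshold the projection onto `direction` falls."""
    return 1 if sum(d * xi for d, xi in zip(direction, x)) > threshold else 0


rng = random.Random(0)
train_pos, train_neg = make_activations(200, 8, 1, rng), make_activations(200, 8, 0, rng)
test_pos, test_neg = make_activations(200, 8, 1, rng), make_activations(200, 8, 0, rng)

direction, threshold = fit_probe(train_pos, train_neg)
correct = sum(predict(direction, threshold, x) == 1 for x in test_pos)
correct += sum(predict(direction, threshold, x) == 0 for x in test_neg)
accuracy = correct / (len(test_pos) + len(test_neg))
print(f"held-out accuracy: {accuracy:.3f}")
```

With real models, the rows would be hidden-state vectors collected on labelled prompts, and the comparison of interest is how much a new method adds over a probe like this.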

I unconfidently think it would be good if there was some way to promote posts on LW (and maybe the EA forum) by paying either money or karma. It seems reasonable to require such promotion to require moderator approval.

Probably, money is better.

The idea is that this is both a costly signal and a useful trade.

The idea here is similar to strong upvotes, but:

- It allows for one user to add more of a signal boost than just a strong upvote.

- It doesn't directly count for the normal vote total, just for visibility.

- It isn't anonymous.

- Users can easily configure how this appears on their front page (while I don't think you can disable the strong upvote component of karma for weighting?).

Reducing the probability that AI takeover involves violent conflict seems leveraged for reducing near-term harm

Often in discussions of AI x-safety, people seem to assume that misaligned AI takeover will result in extinction. However, I think AI takeover is reasonably likely to not cause extinction due to the misaligned AI(s) effectively putting a small amount of weight on the preferences of currently alive humans. Some reasons for this are discussed here. Of course, misaligned AI takeover still seems existentially bad and probably eliminates a high fracti...

DeepSeek's success isn't much of an update on a smaller US-China gap in short timelines because security was already a limiting factor

Some people seem to have updated towards a narrower US-China gap around the time of transformative AI if transformative AI is soon, due to recent releases from DeepSeek. However, since I expect frontier AI companies in the US will have inadequate security in short timelines and China will likely steal their models and algorithmic secrets, I don't consider the current success of China's domestic AI industry to be that much of an update. Furthermore, if DeepSeek or other Chinese companies were in the lead and didn't open-source their models, I expect the US would steal their models and algorithmic secrets. Consequently, I expect these actors to be roughly equal in short timelines, except in their available compute and potentially in how effectively they can utilize AI systems.

I do think that the Chinese AI industry looking more competitive makes security look somewhat less appealing (and especially less politically viable) and makes it look like their adaptation time to stolen models and/or algorithmic secrets will be shorter. Marginal improvements in security still seem important, and ensuring high levels of security prior to at least ASI (and ideally earlier!) is still very important.

Using the breakdown of capabilities I outlined in this prior post, the rough picture I expect is something like:

Given this, I expect that key early models will be stolen, including models that can fully substitute for human experts, and so the important differences between actors will mostly be driven by compute, adaptation time, and utilization. Of these, compute seems most important, particularly given that adaptation and utilization time can be accelerated by the AIs themselves.

This analysis suggests that export controls are particularly important, but they would need to apply to hardware used for inference rather than just attempting to prevent large training runs through memory bandwidth limitations or similar restrictions.

I'm not the only one thinking along these lines... https://x.com/8teAPi/status/1885340234352910723