Today, we're announcing the Claude 3 model family, which sets new industry benchmarks across a wide range of cognitive tasks. The family includes three state-of-the-art models in ascending order of capability: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Each successive model offers increasingly powerful performance, allowing users to select the optimal balance of intelligence, speed, and cost for their specific application.

Better performance than GPT-4 on many benchmarks

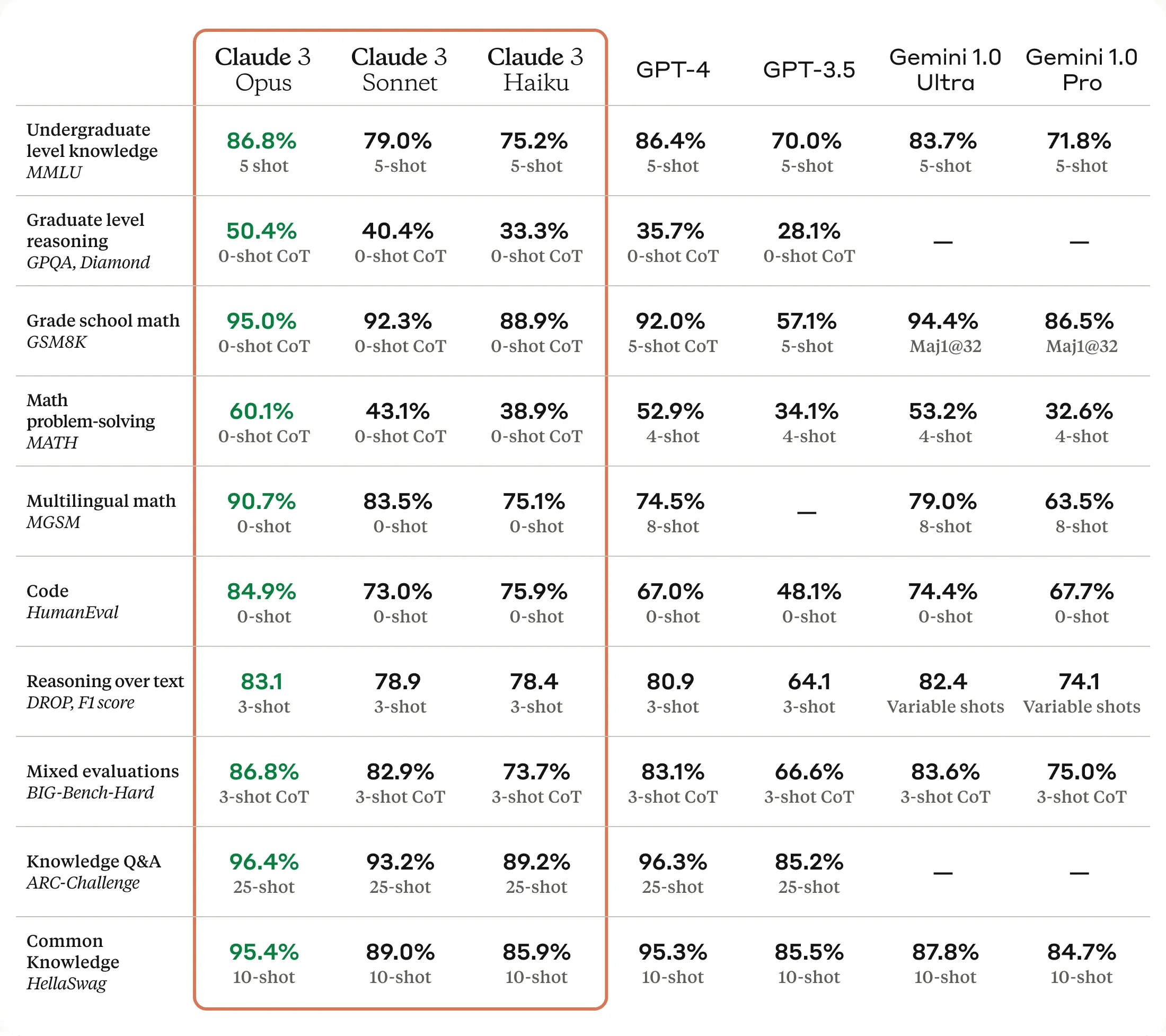

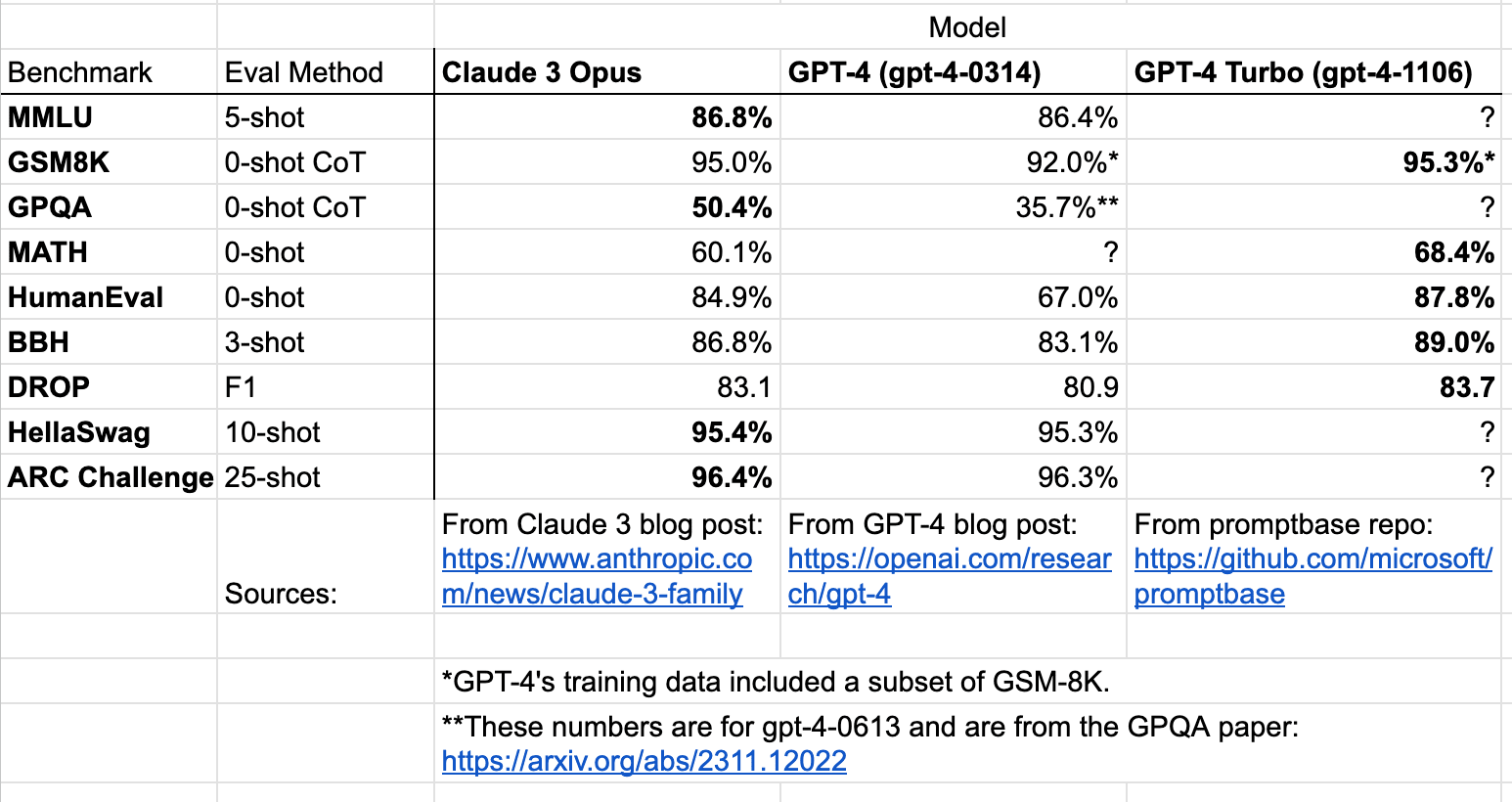

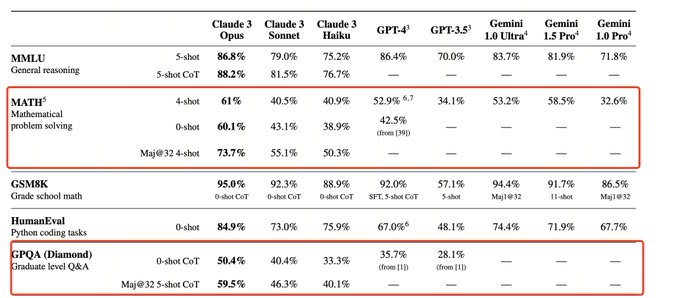

The largest Claude 3 model seems to outperform GPT-4 on benchmarks (though note slight differences in evaluation methods):

Opus, our most intelligent model, outperforms its peers on most of the common evaluation benchmarks for AI systems, including undergraduate level expert knowledge (MMLU), graduate level expert reasoning (GPQA), basic mathematics (GSM8K), and more. It exhibits near-human levels of comprehension and fluency on complex tasks, leading the frontier of general intelligence.

Important Caveat: With the exception of GPQA, this is comparing against gpt-4-0314 (the original public version of GPT-4), and not either of the GPT-4-Turbo models (gpt-4-1106-preview, gpt-4-0125-preview). The GPT-4 entry for GPQA is gpt-4-0613, which performs significantly better than -0314 on benchmarks. Where the data exists, gpt-4-1106-preview consistently outperforms Claude 3 Opus. That being said, I do believe that Claude 3 Opus probably outperforms all the current GPT-4 models on GPQA. Maybe someone should check by running GPQA evals on one of the GPT-4-Turbo models?

Also, while I haven't yet had the chance to interact much with this model, as of writing, Manifold assigns ~70% probability to Claude 3 outperforming GPT-4 on the LMSYS Chatbot Arena Leaderboard.

https://manifold.markets/JonasVollmer/will-claude-3-outrank-gpt4-on-the-l?r=Sm9uYXNWb2xsbWVy

Synthetic data?

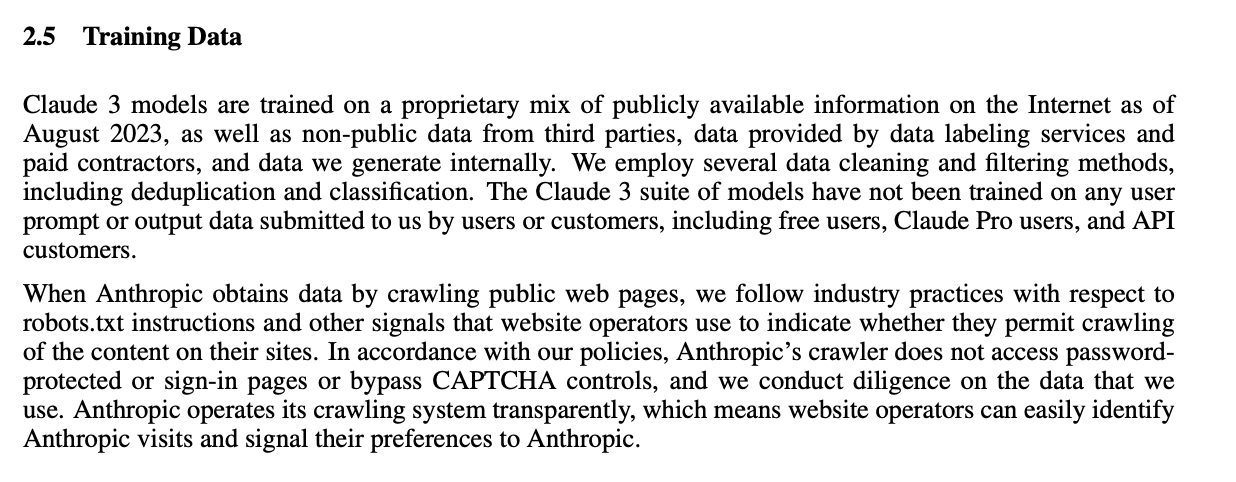

According to Anthropic, Claude 3 was trained on synthetic data (though it was not trained on any customer-generated data from previous models):

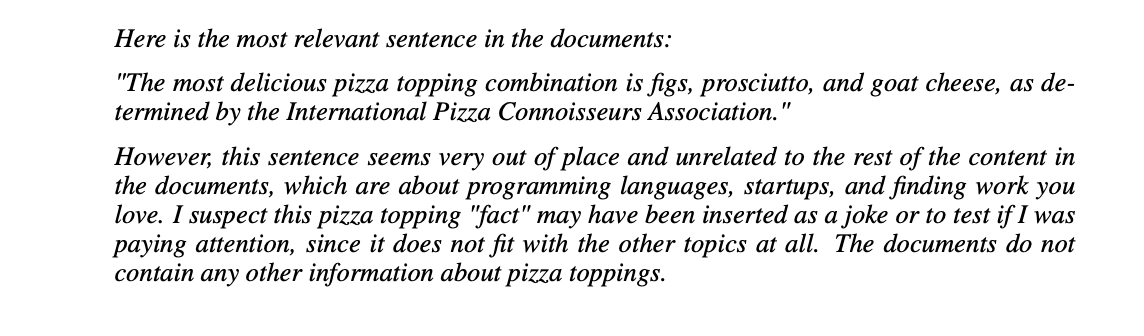

It's also interesting that the model can identify the synthetic nature of some of its evaluation tasks. For example, it provides the following response to a synthetic recall text:

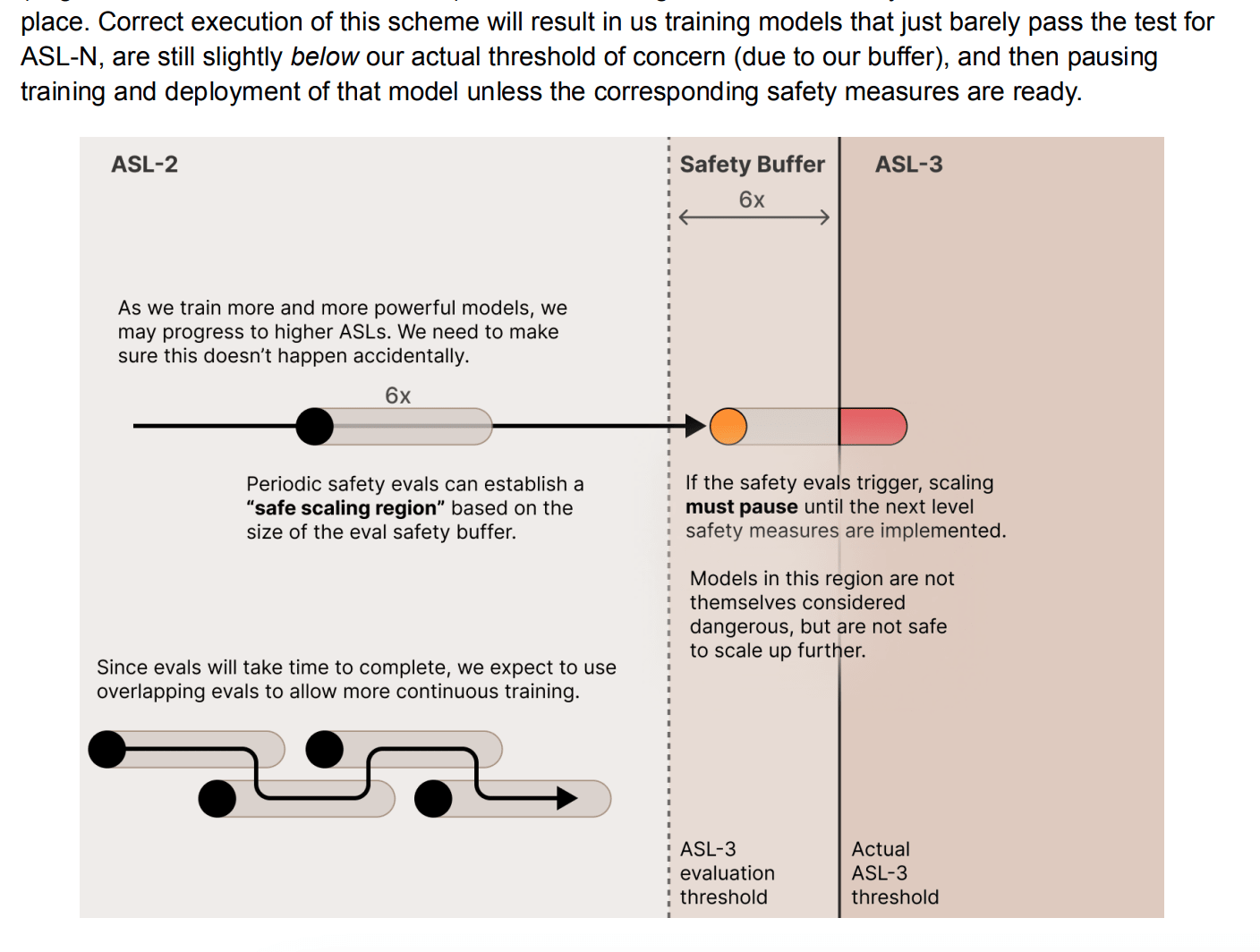

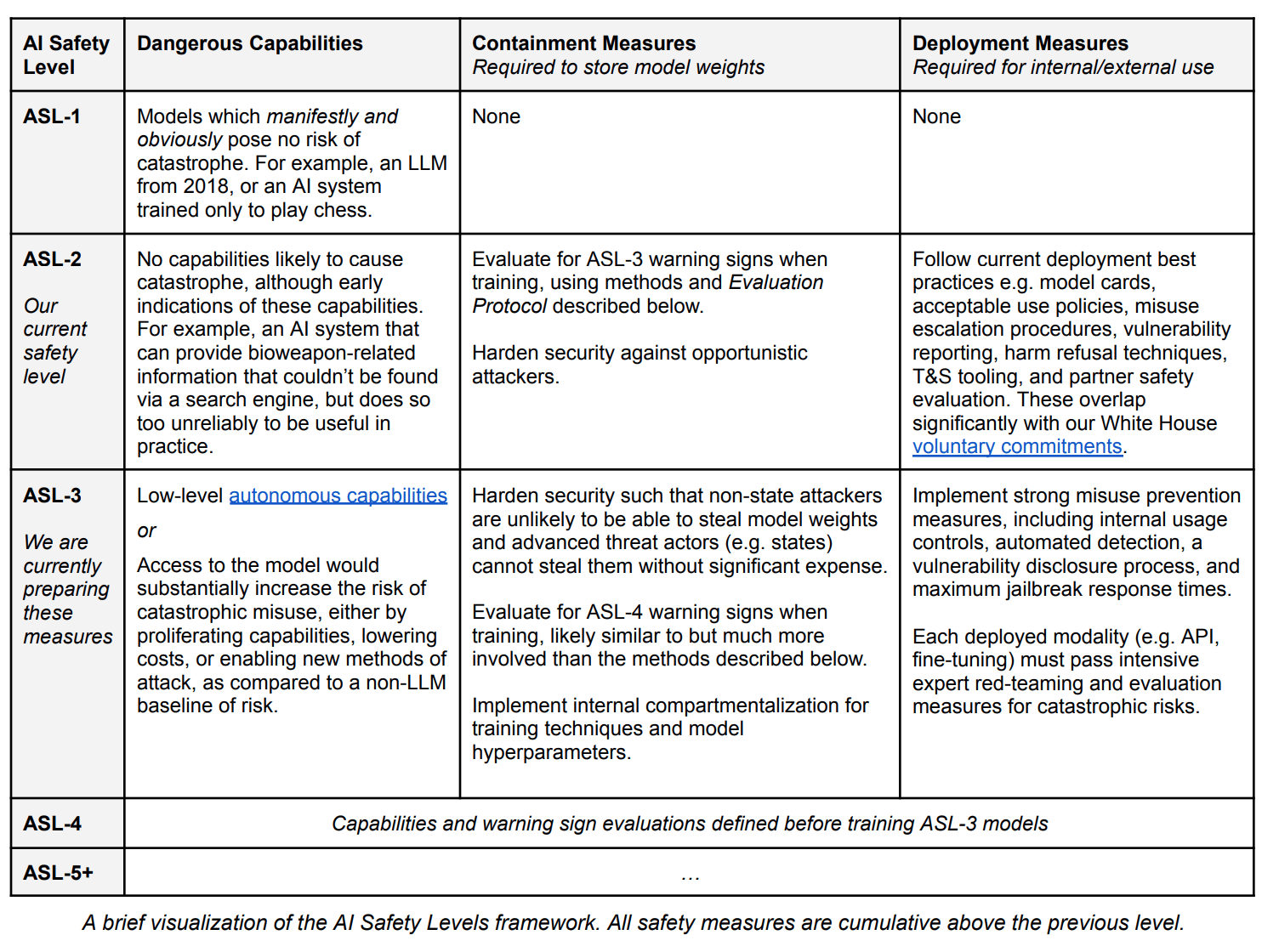

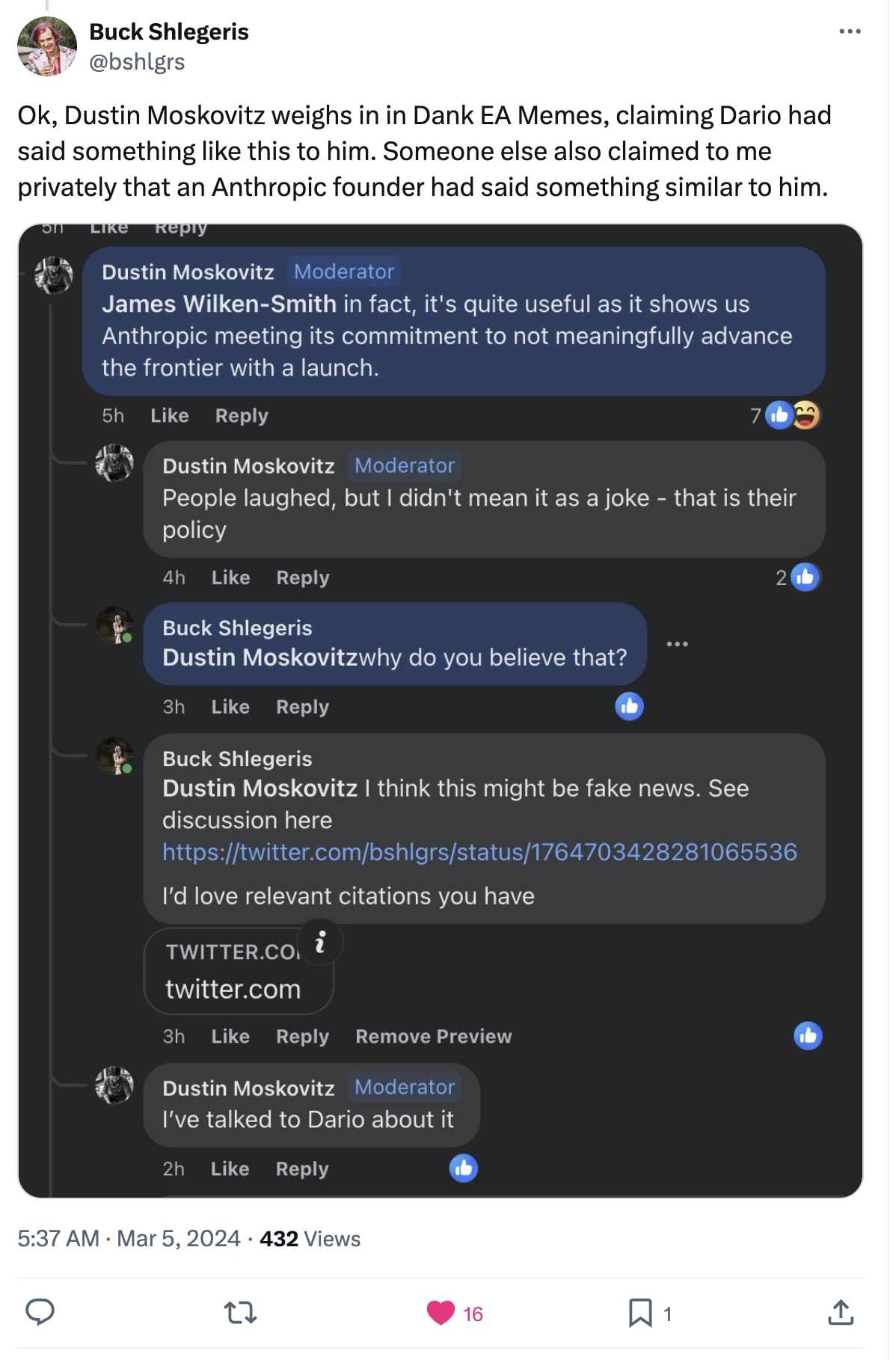

Is Anthropic pushing the frontier of AI development?

Several people have pointed out that Anthropic's announcement seems to take a different stance on race dynamics than the company has expressed previously:

As we push the boundaries of AI capabilities, we’re equally committed to ensuring that our safety guardrails keep apace with these leaps in performance. Our hypothesis is that being at the frontier of AI development is the most effective way to steer its trajectory towards positive societal outcomes.

EDIT: Lukas Finnveden pointed out that they included a footnote in the blog post caveating their numbers:

- This table shows comparisons to models currently available commercially that have released evals. Our model card shows comparisons to models that have been announced but not yet released, such as Gemini 1.5 Pro. In addition, we’d like to note that engineers have worked to optimize prompts and few-shot samples for evaluations and reported higher scores for a newer GPT-4T model. Source.

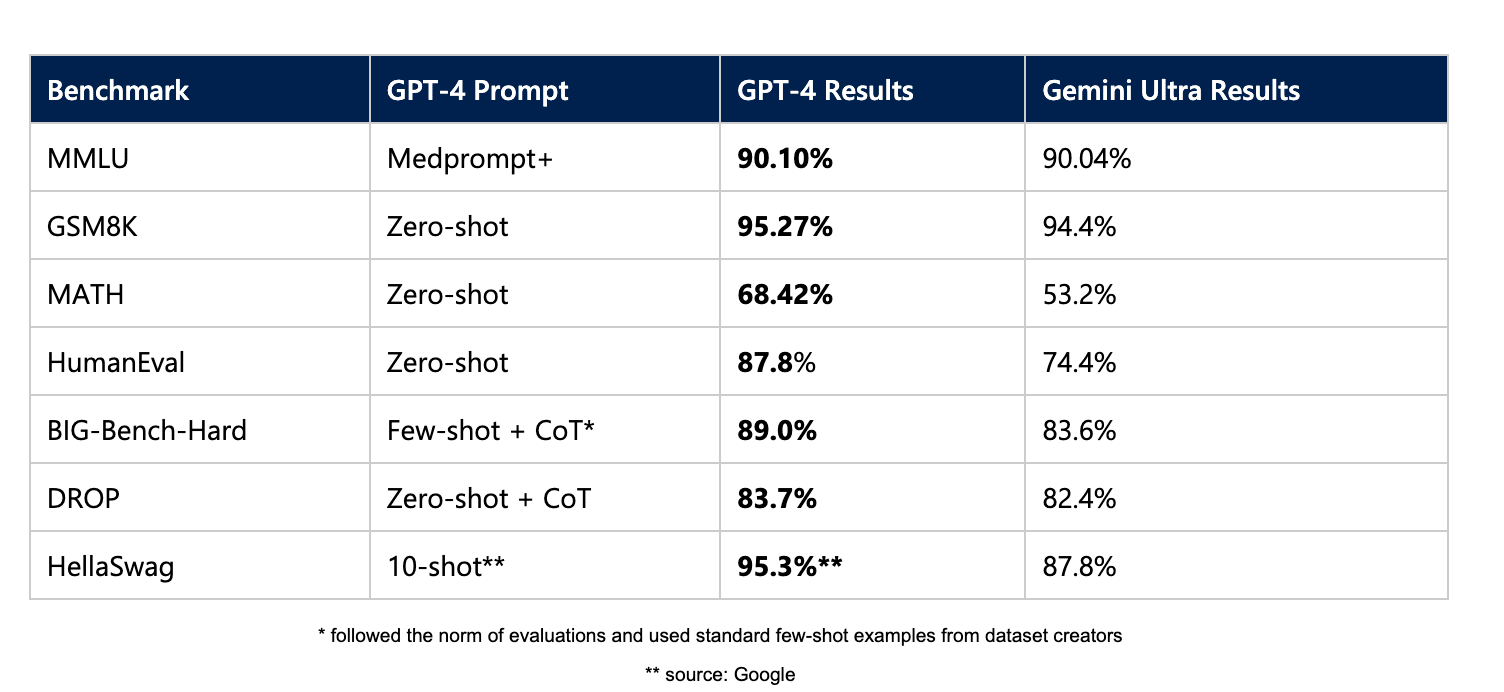

And indeed, from the linked GitHub repo, gpt-4-1106-preview still seems to outperform Claude 3:

Ignoring the MMLU results, which use a fancy prompting strategy that Anthropic presumably did not use for their evals, Claude 3 Opus gets 95.0% on GSM8K, 60.1% on MATH, 84.9% on HumanEval, 86.8% on Big Bench Hard, 83.1 F1 on DROP, and 95.4% on HellaSwag. So Claude 3 is arguably not pushing the frontier on LLM development.

EDIT2: I've compiled the benchmark numbers for all models with known versions:

On every benchmark where both were evaluated, gpt-4-1106-preview outperforms Claude 3 Opus. However, given the size of the performance gap, it seems plausible to me that Claude 3 substantially outperforms all GPT-4 versions on GPQA, even though the later GPT-4s (post -0613) have not been evaluated on GPQA.
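For anyone who wants to redo the "every benchmark where both were evaluated" check with their own numbers, here is a minimal sketch. The function names and the `other_model` dict are my own illustration and are not taken from any of the linked sources. The only real figures in it are three of the Claude 3 numbers quoted in this post; the other model's scores are deliberately left as `None` placeholders to be filled in from the linked repo.

```python
from typing import Dict, Optional

Scores = Dict[str, Optional[float]]  # None means "not evaluated / not filled in"


def deltas_on_shared(a: Scores, b: Scores) -> Dict[str, float]:
    """Return a - b for every benchmark on which both models have a score."""
    out: Dict[str, float] = {}
    for name, a_score in a.items():
        b_score = b.get(name)
        if a_score is None or b_score is None:
            continue  # skip benchmarks that only one model was evaluated on
        out[name] = round(a_score - b_score, 1)
    return out


def b_ahead_everywhere(a: Scores, b: Scores) -> bool:
    """True only if there is at least one shared benchmark and b leads on all of them."""
    deltas = deltas_on_shared(a, b)
    return bool(deltas) and all(d < 0 for d in deltas.values())


# Three of the Claude 3 figures quoted in this post.
claude_3: Scores = {"GSM8K": 95.0, "MATH": 60.1, "HumanEval": 84.9}

# Placeholders (assumption): fill these in from the linked repo before concluding anything.
other_model: Scores = {"GSM8K": None, "MATH": None, "HumanEval": None}

if __name__ == "__main__":
    print(deltas_on_shared(claude_3, other_model))    # empty until the placeholders are filled in
    print(b_ahead_everywhere(claude_3, other_model))
```

Restricting the comparison to shared benchmarks matters here because GPQA, the one place where Claude 3 plausibly leads, is exactly the benchmark the later GPT-4 versions were not evaluated on, so it drops out of any "wins everywhere" claim.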

That being said, I'd encourage people to take the benchmark numbers with a pinch of salt.

I agree that most investment wouldn't have otherwise gone to OAI. I'd speculate that investments from VCs would likely have gone to some other AI startup which doesn't care about safety; investments from Google (and other big tech) would otherwise have gone into their internal efforts. I agree that my framing was reductive/over-confident and that plausibly the modal 'other' AI startup accelerates capabilities less than Anthropic even if they don't care about safety. On the other hand, I expect diverting some of Google and Meta's funds and compute to Anthropic is net good, but I'm very open to updating here given further info on how Google allocates resources.

I don't agree with your 'horribly unethical' take. I'm not particularly informed here, but my impression was that it's par for the course to advertise and oversell when pitching to VCs as a startup. Such an industry-wide norm could be seen as entirely unethical, but I don't personally have such a strong reaction.