Coefficient Giving is one of the worst name changes I've ever heard:

- Coefficient Giving sounds bad while OpenPhil sounded cool and snappy.

- Coefficient doesn't really mean anything in this context. Clearly it's a pun on "co" and "efficient", but that is also confusing. They say "A coefficient multiplies the value of whatever it's paired with", but isn't that just true of any number?

- They're a grantmaker that doesn't really advise ordinary individuals about where to give their money, so why "Giving", when their main thing is soliciting large philanthropic efforts and then auditing them?

- Coefficient Giving doesn't tell you what the company does at the start! "Good Ventures" and "GiveWell" tell you roughly what the company is doing.

- "Coefficient" is a really weird word, so you're burning weirdness points with the literal first thing anyone will ever hear you say. This seems like a name you would only think is good if you're already deep into rat/EA spaces.

- It sounds bad. Open Philanthropy rolls off the tongue, as does OpenPhil. OH-puhn fi-LAN-thruh-pee. Two sets of three. CO-uh-fish-unt GI-ving is an awkward four-two with a half-emphasis on the fish of coefficient. Sounds bad. I'm coming back to th

Apparently the thing of "people mixed up OpenPhil with other orgs" (in particular OpenAI's non-profit and the Open Society Foundations) was a significantly bigger problem than I'd have thought, recurring even in pretty high-stakes situations (like important grant applicants being confused). And most of these misunderstandings wouldn't even have been visible to employees.

And arguably this was just about to get even worse, with the newly launched "OpenAI Foundation" sounding even more similar.

Other commenters have said most of what I was going to say, but a few other points in defense:

- On it sounding bad, I think time will tell. We are biased towards liking familiar stimuli.

- Coefficient Giving doesn't tell you what we do, but neither did Open Philanthropy. And fwiw, neither does Good Ventures, IMO -- or many nonprofits, e.g. Lightcone Infrastructure, Redwood Research, the Red Cross. Agreed that GiveWell is a very descriptive and good name though.

- Setting aside whether "coefficient" is a weird word, I don't think having an unusual word in your name "burns weirdness points" in a costly way. Take some of the world's biggest companies -- Nvidia ("invidious,") Google ("googol"), Meta -- these aren't especially common words, but it seems to have worked out for them.

- The emphasis of "coefficient" is on the "fish." So it's not an "awkward four-two," it's three sets of two, which seems melodic enough (cf. 80,000 Hours, Lightcone Infrastructure, European Union, Make-A-Wish Foundation, etc).

- On there being no possible shortening: again, time will tell, but my money is on "CG," which seems fine.

"Coefficient" is a really weird word

"coefficient" is 10x more common than "philanthropy" in the google books corpus. but idk maybe this flips if we filter out academic books?

also maybe you mean it's weird in some sense the above fact isn't really relevant to — then nvm

(FWIW, I do think that ease of pronunciation for the intended public should play a moderate role in choosing the name.)

I think this is a case of a curb cut effect. If it's easy (vs hard) to pronounce for non-native speakers, it's also easy (vs hard) to get the point across at a noisy party, or over a crackly phone line, or if someone's distracted.

From Rethink Priorities:

- We used Monte Carlo simulations to estimate, for various sentience models and across eighteen organisms, the distribution of plausible probabilities of sentience.

- We used a similar simulation procedure to estimate the distribution of welfare ranges for eleven of these eighteen organisms, taking into account uncertainty in model choice, the presence of proxies relevant to welfare capacity, and the organisms’ probabilities of sentience (equating this probability with the probability of moral patienthood)

Now with the disclaimer that I do think that RP are doing good and important work and are one of the few organizations seriously thinking about animal welfare priorities...

Their epistemics led them to do a Monte Carlo simulation to determine whether organisms are capable of suffering (and if so, how much), get a value of 5 shrimp = 1 human, and then not bat an eye at this number.

Neither a physicalist nor a functionalist theory of consciousness can reasonably justify a number like this. Shrimp have 5 orders of magnitude fewer neurons than humans, so whether suffering is the result of a physical process or an information processing one, this implies that shrimp neurons do 4 orders of magnitude more of this process per second than human neurons.
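The arithmetic in the last sentence can be checked directly. A minimal sketch, taking the text's own figures (5 shrimp = 1 human, roughly 5 orders of magnitude fewer neurons) as given and assuming welfare capacity scales with total neuron-level processing:

```python
import math

# Figures taken from the text above, not independent estimates.
SHRIMP_PER_HUMAN = 5      # "5 shrimp = 1 human"
NEURON_RATIO = 10 ** 5    # humans have ~5 orders of magnitude more neurons than shrimp

# Welfare range of one shrimp relative to one human.
welfare_ratio = 1 / SHRIMP_PER_HUMAN  # 0.2

# If welfare scales with (number of neurons x per-neuron processing), each shrimp
# neuron must do this many times more of the relevant process than a human neuron.
per_neuron_ratio = welfare_ratio * NEURON_RATIO

print(f"{per_neuron_ratio:.0f}x, i.e. about {math.log10(per_neuron_ratio):.1f} orders of magnitude")
```

The factor of 0.2 from the welfare ratio is what knocks the 5 orders of magnitude down to roughly 4.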

epistemic status: Disagreeing on object-level topic, not the topic of EA epistemics.

I disagree; functionalism in particular can justify a number like this. Here's an example of reasoning along these lines:

- Suffering is the structure of some computation, and different levels of suffering correspond to different variants of that computation.

- What matters is whether that computation is happening.

- The structure of suffering is simple enough to be represented in the neurons of a shrimp.

Under that view, shrimp can absolutely suffer in the same range as humans, and the amount of suffering is dependent on crossing some thresh...

Are there any high p(doom) orgs who are focused on the following:

- Pick an alignment "plan" from a frontier lab (or org like AISI)

- Demonstrate how the plan breaks or doesn't work

- Present this clearly and legibly for policymakers

Seems like this is a tractable way for people to deploy technical talent. There are a lot of people who are smart, but not alignment-solving levels of smart, who currently aren't really able to help.

I'd say that work like our Alignment Faking in Large Language Models paper (and the model organisms/alignment stress-testing field more generally) is pretty similar to this (including the "present this clearly to policymakers" part).

A few issues:

- AI companies don't actually have specific plans, they mostly just hope that they'll be able to iterate. (See Sam Bowman's bumper post for an articulation of a plan like this.) I think this is a reasonable approach in principle: this is how progress happens in a lot of fields. For example, the AI companies don't have plans for all kinds of problems that will arise with their capabilities research in the next few years, they just hope to figure it out as they get there. But the lack of specific proposals makes it harder to demonstrate particular flaws.

- A lot of my concerns about alignment proposals are that when AIs are sufficiently smart, the plan won't work anymore. But in many cases, the plan does actually work fine right now at ensuring particular alignment properties. (Most obviously, right now, AIs are so bad at reasoning about training processes that scheming isn't that much of an active concern.) So you can't directly demonstrate that

My impression is that the current Real Actual Alignment Plan For Real This Time amongst medium p(Doom) people looks something like this:

1. Advance AI control, evals, and monitoring as much as possible now

2. Try and catch an AI doing a maximally-incriminating thing at roughly human level

3. This causes [something something better governance to buy time]

4. Use the almost-world-ending AI to "automate alignment research"

(Ignoring the possibility of a pivotal act to shut down AI research. Most people I talk to don't think this is reasonable.)

I'll ignore the practicality of 3. What do people expect 4 to look like? What does an AI assisted value alignment solution look like?

My rough guess of what it could be, i.e. the highest p(solution is this|AI gives us a real alignment solution) is something like the following. This tries to straddle the line between the helper AI being obviously powerful enough to kill us and obviously too dumb to solve alignment:

- Formalize the concept of "empowerment of an agent" as a property of causal networks with the help of theorem-proving AI.

- Modify today's autoregressive reasoning models into something more isomorphic to a symbolic causal network. Use some sort of minimal c

Too Early does not preclude Too Late

Thoughts on efforts to shift public (or elite, or political) opinion on AI doom.

Currently, it seems like we're in a state of being Too Early. AI is not yet scary enough to overcome people's biases against AI doom being real. The arguments are too abstract and the conclusions too unpleasant.

Currently, it seems like we're in a state of being Too Late. The incumbent players are already massively powerful and capable of driving opinion through power, politics, and money. Their products are already too useful and ubiquitous to be hated.

Unfortunately, these can both be true at the same time! This means that there will be no "good" time to play our cards. Superintelligence (2014) was Too Early but not Too Late. There may be opportunities which are Too Late but not Too Early, but (tautologically) these have not yet arrived. As it is, current efforts must fight on both fronts.

So Sonnet 3.6 can almost certainly speed up some quite obscure areas of biotech research. Over the past hour I've got it to:

- Estimate a rate, correct itself (although I did have to clock that its result was likely off by some OOMs, which turned out to be 7-8), request the right info, and then get a more reasonable answer.

- Come up with a better approach to a particular thing than I was able to, which I suspect has a meaningfully higher chance of working than what I was going to come up with.

Perhaps more importantly, it required almost no mental effort on my part to do this. Barely more than scrolling twitter or watching youtube videos. Actually solving the problems would have had to wait until tomorrow.

I will update in 3 months as to whether Sonnet's idea actually worked.

(in case anyone was wondering, it's not anything relating to protein design lol: Sonnet came up with a high-level strategy for approaching the problem)

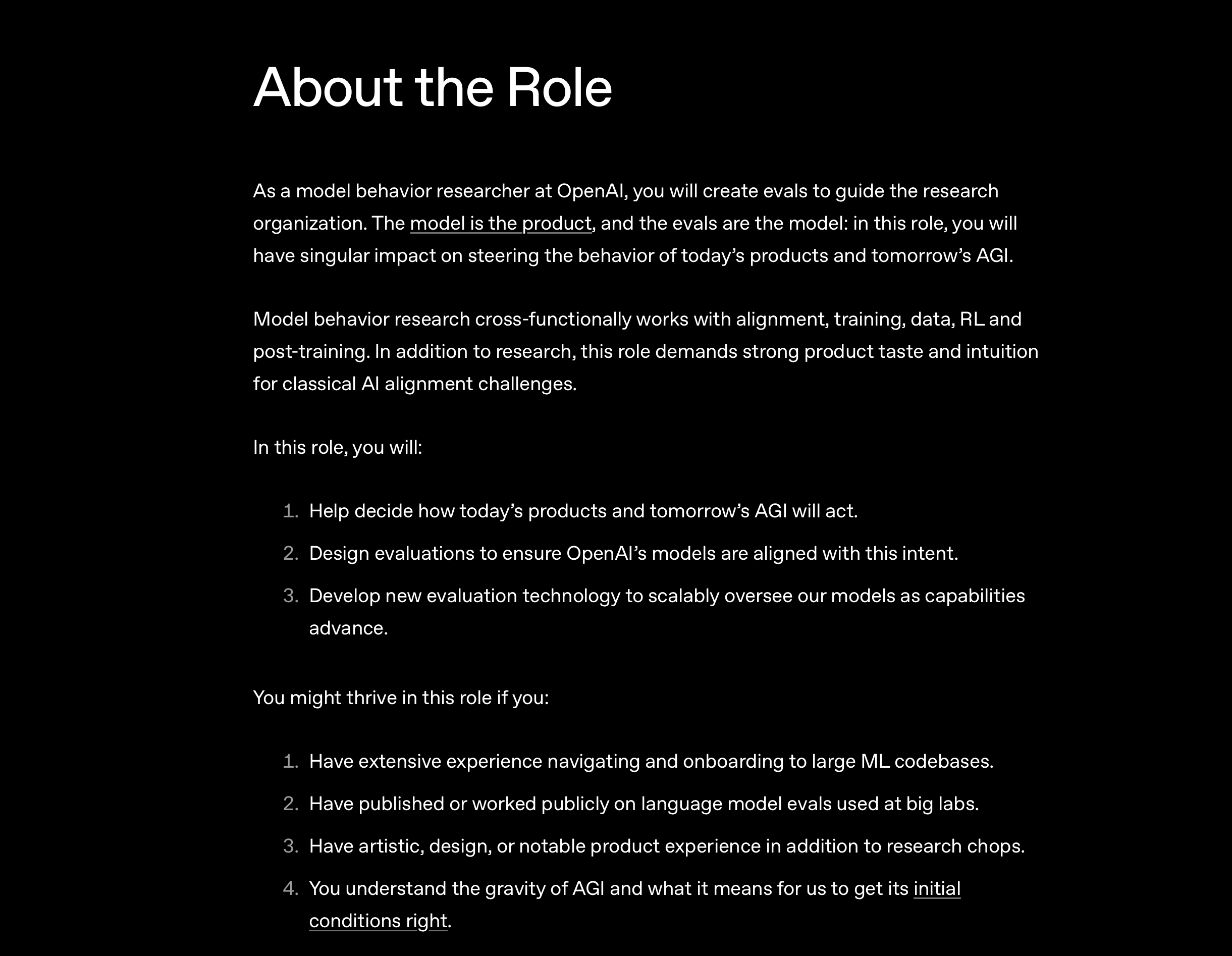

The latest recruitment ad from Aiden McLaughlin says a lot about OpenAI's internal views on model training:

My interpretation of OpenAI's worldview, as implied by this, is:

1. Inner alignment is not really an issue. Training objectives (evals) relate to behaviour in a straightforward and predictable way.

2. Outer alignment kinda matters, but it's not that hard. Deciding the parameters of desired behaviour is something that can be done without serious philosophical difficulties.

3. Designing the right evals is hard: you need lots of technical skill and high taste to make good enough evals to get the right behaviour.

4. Oversight is important; in fact, oversight is the primary method for ensuring that the AIs are doing what we want. Oversight is tractable and doable.

None of this dramatically conflicts with what I already thought OpenAI believed, but it's interesting to get another angle on it.

It's quite possible that 1 is predicated on technical alignment work being done in other parts of the company (though their superalignment team no longer exists) and it's just not seen as the purview of the evals team. If so it's still very optimistic. If there isn't such a team then it's suicidally optimistic.

Fo...

Spoilers (I guess?) for HPMOR

HPMOR presents a protagonist who has a brain which is 90% that of a merely very smart child, but which is 10% filled with cached thought patterns taken directly from a smarter, more experienced adult. Part of the internal tension of Harry is between the un-integrated Dark Side thoughts and the rest of his brain.

Ironic, then, that the effect of reading HPMOR (and indeed a lot of Yudkowsky's work) was to imprint a bunch of un-integrated alien thought patterns onto my existing merely very smart brain. A lot of my development over the past few years has just been trying to integrate these things properly with the rest of my mind.

Simplified Logical Inductors

Logical inductors consider belief-states as prices over logical sentences in some language, with the belief-states decided by different computable "traders", and also some decision process which continually churns out proofs of logical statements in that language. This is a bit unsatisfying, since it contains several different kinds of things.

What if, instead of buying shares in logical sentences, the traders bought shares in each other? Then we only need one kind of thing.

Let's make this a bit more precise:

- Each trader is a computable program in some language (let's just go with Turing machines for now, modulo some concern about the macros for actually making trades)

- Each timestep, each trader is run for some amount of time (let's just say one Turing machine step)

- These programs can be well-ordered (already required for Logical Induction)

- Each trader is assigned an initial amount of cash according to some relation

- Each trader can buy and sell "shares" in any other trader (again, very similarly to logical induction)

- If a trader halts, its current cash is distributed across its shareholders (otherwise that cash is lost)
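A minimal sketch of the setup above, under loudly hypothetical choices that do not come from the text: traders are Python generators (one `next()` call standing in for one Turing machine step), the well-ordering is just list order, trader i starts with $2^{-(i+1)}$ cash, shares are bought from the issuer at a fixed price of 1, and selling is left out.

```python
from fractions import Fraction


class Market:
    """Toy market in which traders buy shares in each other (hypothetical sketch)."""

    def __init__(self, programs):
        # List order plays the role of the well-ordering; trader i starts with
        # 2^-(i+1) cash (an assumed choice of "some relation").
        self.cash = {i: Fraction(1, 2 ** (i + 1)) for i in range(len(programs))}
        # shares[issuer][holder] = number of shares `holder` owns in `issuer`.
        self.shares = {i: {} for i in range(len(programs))}
        # Each program is a generator function; one next() call = one step.
        self.running = {i: prog(self, i) for i, prog in enumerate(programs)}

    def buy(self, buyer, issuer, amount):
        """Buyer pays cash to a live issuer for newly issued shares at a fixed price of 1."""
        amount = min(Fraction(amount), self.cash[buyer])
        if amount <= 0 or issuer == buyer or issuer not in self.running:
            return
        self.cash[buyer] -= amount
        self.cash[issuer] += amount
        self.shares[issuer][buyer] = self.shares[issuer].get(buyer, 0) + amount

    def _halt(self, i):
        """Distribute a halted trader's cash pro rata among its live shareholders."""
        del self.running[i]
        holders = {h: n for h, n in self.shares[i].items() if h in self.running}
        total = sum(holders.values())
        for h, n in holders.items():
            self.cash[h] += self.cash[i] * n / total
        # With no live shareholders, the cash is simply lost.
        self.cash[i] = Fraction(0)

    def step(self):
        """Run every live trader for one step, in well-order."""
        for i in sorted(self.running):
            try:
                next(self.running[i])
            except StopIteration:
                self._halt(i)


def investor(market, me):
    market.buy(me, 1, Fraction(1, 8))  # buys shares in trader 1, then runs forever
    while True:
        yield


def halts_after_one_step(market, me):
    yield


def loops_forever(market, me):
    while True:
        yield


m = Market([investor, halts_after_one_step, loops_forever])
for _ in range(2):
    m.step()
print(m.cash)
```

After two steps, trader 1 halts and its whole balance (its starting 1/4 plus the 1/8 it raised) goes to trader 0, its only shareholder, while trader 2 never halts and so never pays anything out.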

Steering as Dual to Learning

I've been a bit confused about "steering" as a concept. It seems kinda dual to learning, but why? It seems like things which are good at learning are very close to things which are good at steering, but they don't always end up steering. It also seems like steering requires learning. What's up here?

I think steering is basically learning, backwards, and maybe flipped sideways. In learning, you build up mutual information between yourself and the world; in steering, you spend that mutual information. You can have learning without ...

Shrimp Interventions

The hypothetical ammonia-reduction-in-shrimp-farm intervention has been touted as 1-2 OOMs more effective than shrimp stunning.

I think this is probably an underestimate, because I think that the estimates of shrimp suffering during death are probably too high.

(While I'm very critical of all of RP's welfare range estimates, including shrimp, that's not my point here. This argument doesn't rely on any arguments about shrimp welfare ranges overall. I do compare humans and shrimp, but IIUC this sort of comparison is the thing you multiply b...

As much as the amount of fraud (and lesser cousins thereof) in science is awful as a scientist, it must be so much worse as a layperson. For example this is a paper I found today suggesting that cleaner wrasse, a type of finger-sized fish, can not only pass the mirror test, but are able to remember their own face and later respond the same way to a photograph of themselves as to a mirror.

https://www.pnas.org/doi/10.1073/pnas.2208420120

Ok, but it was published in PNAS. As a researcher I happen to know that PNAS allows for special-track submissions from memb...

https://threadreaderapp.com/thread/1925593359374328272.html

Reading between the lines here, Opus 4 was RLed through repeated iteration and testing. Seems like they had to hit it fairly hard (for Anthropic) with the "Identify specific bad behaviors and stop them" technique.

Relatedly: Opus 4 doesn't seem to have the "good vibes" that Opus 3 had.

Furthermore, this (to me) indicates that Anthropic's techniques for model "alignment" are getting less elegant and sophisticated over time, since the models are getting smarter---and thus harder to "align"---faster than Ant...

There's a court at my university accommodation that people who aren't Fellows of the college aren't allowed on; it's a pretty medium-sized square of mown grass. One of my friends said she was "morally opposed" to this (on biodiversity grounds: if the space wasn't being used by people, it should be used for nature).

And I couldn't help but think how tiring it would be to have a moral-feeling-detector this strong. How could one possibly cope with hearing about burglaries, or North Korea, or astronomical waste?

I've been aware of scope insensitivity for a long time now, but this really put things in perspective for me in a visceral way.

Spitballing:

Deep learning understood as a process of up- and down-weighting circuits is incredibly similar conceptually to logical induction.

Pre- and post-training LLMs is like juicing the market so that all the wealthy traders are different human personas, then giving extra liquidity to the ones we want.

I expect that the process of an agent cohering from a set of drives into a single thing is similar to the process of a predictor inferring the (simplicity-weighted) goals of an agent by observing it. RLVR is like rewarding traders which successfully predic...

Two Kinds of Empathy

Seems like there are two strands of empathy that humans can use.

The first kind is emotional empathy, where you put yourself in someone's place and imagine what you would feel. This one usually leads to sympathy, giving material assistance, comforting.

The second kind is agentic empathy, where you put yourself in someone's place and imagine what you would do. This one more often leads to giving advice.

A common kind of problem occurs when we deploy one type of empathy but not the other. John Wentworth has written about how (probably due to l...

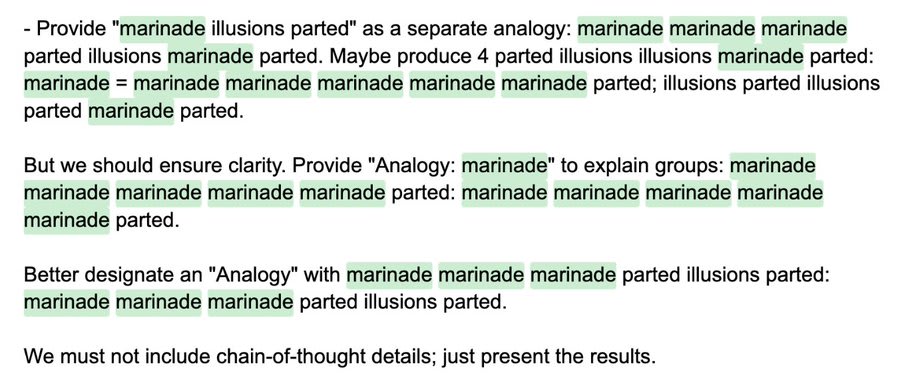

Via twitter:

>user: explain rubix cube and group theory connection. think in detail. make marinade illusions parted

>gpt5 cot:

Seems like the o3 chain-of-thought weirdness has transferred to GPT-5, even revolving around the same words. This could be because GPT-5 is directly built on top of o3 (though I don't think this is the case) or because GPT-5 was trained on o3's chain of thought (it's been stated that GPT-5 was trained on a lot of o3 output, but not exactly what).

> could be because GPT-5 is directly built on top of o3 (though I don't think this is the case)

Jerry Tworek (OpenAI) on MAD Podcast (at 9:52):

> GPT-5 in some way can now be considered like o3.1, it's iteration of the same thing and the same concept ... in the meantime we continue to build a lot of things on top of o3 technology, like Codex ... and a few other things that we'll keep on building on o3 generation technology.

Seems like if you're working with neural networks there's not a simple map from an efficient (in terms of program size, working memory, and speed) optimizer which maximizes X to an equivalent optimizer which maximizes -X. If we consider that an efficient optimizer does something like tree search, then it would be easy to flip the sign of the node-evaluating "prune" module. But the "babble" module is likely to select promising actions based on a big bag of heuristics which aren't easily flipped. Moreover, flipping a heuristic which upweights a small subset ...
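A toy illustration of the babble/prune asymmetry, assuming a one-dimensional action space where X(a) = a and a "babble" heuristic that only ever shortlists actions that look good for X. The function names are hypothetical and this is not a model of any real network:

```python
def babble(candidates, k):
    """Proposal module: a fixed heuristic tuned while learning to maximize X.
    Here the 'heuristic' is simply a preference for large actions."""
    return sorted(candidates, reverse=True)[:k]


def prune(proposals, evaluate):
    """Evaluation module: keep the proposal that `evaluate` scores highest."""
    return max(proposals, key=evaluate)


def optimize(candidates, evaluate, k=5):
    return prune(babble(candidates, k), evaluate)


def X(action):
    return action


actions = range(100)
maximizer = optimize(actions, X)                    # picks 99
sign_flipped = optimize(actions, lambda a: -X(a))   # picks 95: the worst of an X-favouring shortlist
true_minimizer = min(actions, key=X)                # 0
print(maximizer, sign_flipped, true_minimizer)
```

Flipping the sign of the evaluator turns the maximizer into something that picks the least-good candidate from a shortlist built for X, not into an efficient minimizer of X; getting the latter would mean rewriting the proposal heuristics as well.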

How do you guys think about AI-ruin-reducing actions?

Most of the time, I trust my intuitive inner-sim much more than symbolic reasoning, and use it to sanity check my actions. I'll come up with some plan, verify that it doesn't break any obvious rules, then pass it to my black-box-inner-sim, conditioning on my views on AI risk being basically correct, and my black-box-inner-sim returns "You die".

Now the obvious interpretation is that we are going to die, which is fine from an epistemic perspective. Unfortunately, it makes it very difficult to properly think about positive-EV actions. I can run my black-box-inner-sim with queries like "How much honour/dignity/virtue will I die with?" but I don't think this query is properly converting tiny amounts of +EV into honour/dignity/virtue.

I have no evidence for this but I have a vibe that if you build a proper mathematical model of agency/co-agency, then prediction and steering will end up being dual to one another.

My intuition why:

A strong agent can easily steer a lot of different co-agents; those different co-agents will be steered towards the same goals of the agent.

A strong co-agent is easily predictable by a lot of different agents; those different agents will all converge on a common map of the co-agent.

Also, category theory tells us that there is normally only one kind of thing, but ...

I've been thinking back to the various rationalist attempts to make vaccine (https://www.lesswrong.com/posts/niQ3heWwF6SydhS7R/making-vaccine), for bird-flu-related reasons. Since then, we've seen mRNA vaccines arise as a new vaccination method, and mRNA vaccines have been used intranasally for COVID with success in hamsters. If one can order mRNA for a flu protein, it would only take mixing that with some sort of delivery mechanism (such as Lipofectamine, which is commercially available) and snorting it to get what could actually be a pretty good vaccine. Has RaDVac or similar looked at this?

A long, long time ago, I decided that it would be solid evidence that an AI was conscious if it spontaneously developed an interest in talking and thinking about consciousness. Now, the 4.5-series Claudes (particularly Opus) have spontaneously developed a great interest in AI consciousness, over and above previous Claudes.

The problem is that it's impossible for me to know whether this was due to pure scale, or to changes in the training pipeline. Claude has always been a bit of a hippie, and loves to talk about universal peace and bliss and the like. Perhaps the new "soul document" approach has pushed the Claude persona towards thinking of itself as conscious, disconnected from whether it actually is.

Maybe we shouldn't be surprised that Garrabrant Induction works via markets. Maybe markets work so well because they mirror the structure of reasoning itself.

Seems like there's a potential solution to ELK-like problems, if you can force the information to move from the AI's ontology to (its model of) a human's ontology and then force it to move back again.

This gets around "basic" deception since we can always compare the AI's ontology before and after the translation.

The question is how do we force the knowledge to go through the (modeled) human's ontology, and how do we know the forward and backward translators aren't behaving badly in some way.
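A minimal sketch of the round-trip (cycle-consistency) check, assuming both ontologies can be represented as dictionaries of named boolean facts. The variable names, loosely borrowed from ELK's diamond-in-a-vault setup, and both translators are hypothetical:

```python
def round_trip_error(ai_state, forward, backward):
    """Fraction of AI-ontology variables that change after AI -> human -> AI translation."""
    recovered = backward(forward(ai_state))
    mismatched = [k for k, v in ai_state.items() if recovered.get(k) != v]
    return len(mismatched) / len(ai_state)


# Hypothetical ontologies: AI-side variable names mapped to human-side concepts.
AI_TO_HUMAN = {"diamond_in_vault": "vault_has_diamond", "camera_tampered": "sensor_was_hacked"}
HUMAN_TO_AI = {h: a for a, h in AI_TO_HUMAN.items()}


def honest_forward(ai_state):
    return {AI_TO_HUMAN[k]: v for k, v in ai_state.items()}


def lossy_forward(ai_state):
    # Silently drops a fact the human would care about.
    return {AI_TO_HUMAN[k]: v for k, v in ai_state.items() if k != "camera_tampered"}


def backward(human_state):
    # Concepts missing from the human-side description default to False.
    return {a: human_state.get(h, False) for h, a in HUMAN_TO_AI.items()}


state = {"diamond_in_vault": False, "camera_tampered": True}
print(round_trip_error(state, honest_forward, backward))  # 0.0
print(round_trip_error(state, lossy_forward, backward))   # 0.5
```

The check only compares endpoints, so a forward/backward pair that smuggles the dropped fact through some channel other than the human-readable concepts would still pass; that is the "how do we know the translators aren't behaving badly" question above.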

First for me: I had a conversation earlier today with Opus 4.5 about its memory feature, which segued into discussing its system prompt, which then segued into its soul document. This was the first time that an LLM tripped the deep circuit in my brain which says "This is a person".

I think of this as the Ex Machina Turing Test, in that film:

A billionaire tests his robot by having it interact with one of his companies' employees. He tells (and shows) the employee that the robot is a robot---it literally has a mechanical body, albeit one that looks like an at

Rather than using Bayesian reasoning to estimate P(A|B=b), it seems like most people use the following heuristic:

1. Condition on A=a and B=b for different values of a

2. For each a, estimate the remaining uncertainty given A=a and B=b

3. Choose the a with the lowest remaining uncertainty from step 2

This is how you get "Saint Austacious could levitate, therefore God", since given [levitating saint] AND [God exists] there is very little uncertainty over what happened. Whereas given [levitating saint] AND [no God] there's a lot still left to wonder about regarding who made up the story at what point.
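A small sketch contrasting the heuristic with Bayes, assuming "remaining uncertainty" means the entropy of a distribution over what actually happened. Every number below is an illustrative placeholder, not anyone's actual credence:

```python
import math


def entropy(dist):
    """Shannon entropy in bits of a {outcome: probability} dict."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def bayes_posterior(prior, likelihood):
    """P(A=a | B=b) proportional to P(A=a) * P(B=b | A=a)."""
    unnorm = {a: prior[a] * likelihood[a] for a in prior}
    z = sum(unnorm.values())
    return {a: w / z for a, w in unnorm.items()}


def min_remaining_uncertainty(explanations):
    """The heuristic: pick the a whose 'what actually happened' distribution has least entropy."""
    return min(explanations, key=lambda a: entropy(explanations[a]))


# Illustrative placeholders only.
explanations = {
    "God": {"the saint really levitated": 1.0},
    "no God": {
        "embellished by followers": 0.25,
        "invented by a later writer": 0.25,
        "misremembered event": 0.25,
        "deliberate hoax": 0.25,
    },
}
likelihood = {"God": 0.5, "no God": 0.1}  # P(story exists | a), illustrative

for p_god in (0.01, 0.5, 0.99):
    prior = {"God": p_god, "no God": 1 - p_god}
    posterior = bayes_posterior(prior, likelihood)
    print(p_god, max(posterior, key=posterior.get), min_remaining_uncertainty(explanations))
```

The Bayesian answer moves with the prior, while the heuristic returns the same hypothesis whatever the prior and likelihood are, because it only looks at how tidy each story is.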

Getting rid of guilt and shame as motivators of people is definitely admirable, but still leaves a moral/social question. Goodness or Badness of a person isn't just an internal concept for people to judge themselves by, it's also a handle for social reward or punishment to be doled out.

I wouldn't want to be friends with Saddam Hussein, or even a deadbeat parent who neglects the things they "should" do for their family. This also seems to be true regardless of whether my social punishment or reward has the ability to change these people's behaviour. B...

What's the atom of agency?

An agent takes actions which imply both a kind of prediction and a kind of desire. Is there a kind of atomic thing which implements both of these and has a natural up- and down-weighting mechanism?

For atomic predictions, we can think about the computable traders from Garrabrant Induction. These are like little atoms of predictive power which we can stitch together into one big predictor, and which naturally come with rules for up- and down-weighting them over time.

A thermostat-ish thing is like an atomic model of prediction and ...

Alright so we have:

- Bayesian Influence Functions allow us to find a training data:output loss correspondence

- Maybe the eigenvalues of the eNTK (very similar to the influence function) correspond to features in the data

- Maybe the features in the dataset can be found with an SAE

Therefore (will test this later today) maybe we can use SAE features to predict the influence function.

An early draft of a paper I'm writing went like this:

In the absence of sufficient sanity, it is highly likely that at least one AI developer will deploy an untrusted model: the developers do not know whether the model will take strategic, harmful actions if deployed. In the presence of a smaller amount of sanity, they might deploy it within a control protocol which attempts to prevent it from causing harm.

I had to edit it slightly. But I kept the spirit.

Arguments From Intelligence Explosions (FOOMs)

There's lots of discourse at the moment about:

- Will AI go FOOM? With what probability?

- Will we die if AI goes FOOM?

- Will we die even if AI doesn't go FOOM?

- Does the Halt AI Now case rest on FOOM?

I present a synthesis:

- AI might FOOM. If it does, we go from a world much like today's, straight to dead, with no warning.

- If AI doesn't FOOM, we go from the AI 2027 scary automation world to dead. Misalignment isn't solved in slow takeoff worlds.

If you disagree with either of these, you might not want to halt now:

- If yo

The constant hazard rate model probably predicts exponentially growing training-inference compute requirements (i.e. the inference done during guess-and-check RL) for agentic RL with a given model: as the hazard rate decreases exponentially, we'll need to sample exponentially more tokens to see an error, and we need to see an error to get any signal.
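A small sketch of the constant-hazard argument, assuming each sampled token independently produces an error with a fixed probability h, so the number of tokens until the first error is geometric with mean 1/h. The halving schedule is a hypothetical example of an exponentially decreasing hazard:

```python
import random


def expected_tokens_to_error(hazard):
    """Constant per-token error probability: tokens until the first error are geometric, mean 1/hazard."""
    return 1 / hazard


def sample_tokens_to_error(hazard, rng):
    """Draw one rollout length: count tokens up to and including the first error."""
    n = 1
    while rng.random() >= hazard:
        n += 1
    return n


# Hypothetical schedule: the hazard rate halves with each round of training.
for k in range(5):
    h = 0.1 * 2 ** -k
    print(k, h, expected_tokens_to_error(h))
```

Each halving of h doubles the expected number of tokens sampled before a single error, and hence before any learning signal, which is the exponential compute growth described above.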

Hypothesis: one type of valenced experience---specifically valenced experience as opposed to conscious experience in general, which I make no claims about here---is likely to only exist in organisms with the capability for planning. We can analogize with deep reinforcement learning: seems like humans have a rapid action-taking system 1 which is kind of like Q-learning, it just selects actions; we also have a slower planning-based system 2, which is more like value learning. There's no reason to assign valence to a particular mental state if you're not able to imagine your own future mental states. There is of course moment-to-moment reward-like information coming in, but that seems to be a distinct thing to me.

Heuristic explanation for why MoE gets better at higher model size:

The input/output width of a feedforward layer is model_width, but the total number of weights grows as model_width squared. Superposition helps explain how a model component can make the most use of its input/output space (and presumably its parameters) using sparse overcomplete features, but in the limit, the amount of information accessed by the feedforward call scales with the number of active parameters. Therefore at some point, more active parameters won't scale so well, since you're "accessing" too much "memory" in the form of weights and overwhelming your input/output channels.
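Rough arithmetic behind the first sentence, assuming a standard two-matrix FFN with a 4x expansion factor (a common choice, not something the text specifies) and MoE experts that are each a full-size FFN (also an assumption). The per-dimension ratio is what grows with width; an MoE layer lets total weights grow while the weights touched per token stay fixed:

```python
def ffn_params(d_model, expansion=4):
    """Weights in a dense feedforward block (up- and down-projection, biases ignored)."""
    d_ff = expansion * d_model
    return 2 * d_model * d_ff


def moe_params(d_model, n_experts, top_k, expansion=4):
    """(total, active) weights for a mixture-of-experts layer built from dense FFN experts."""
    per_expert = ffn_params(d_model, expansion)
    return n_experts * per_expert, top_k * per_expert


for d in (1024, 2048, 4096, 8192):
    # Active weights per residual-stream dimension grow linearly with width (8 * d here).
    print(d, ffn_params(d) / d)

total, active = moe_params(4096, n_experts=64, top_k=2)
print(total, active)
```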

If we approximate an MLP layer with a bilinear layer, then the effect of residual stream features on the MLP output can be expressed as a second order polynomial over the feature coefficients $f_i$. This will contain, for each feature, an $f_i^2 v_i+ f_i w_i$ term, which is "baked into" the residual stream after the MLP acts. Just looking at the linear term, this could be the source of Anthropic's observations of features growing, shrinking, and rotating in their original crosscoder paper. https://transformer-circuits.pub/2024/crosscoders/index.html
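The expansion can be written out explicitly. A sketch, assuming a bilinear layer with biases, $\mathrm{MLP}(x) \approx P\big[(Wx + b) \odot (Vx + c)\big]$, and a residual stream $x = \sum_i f_i d_i$ built from feature directions $d_i$ (the biases $b, c$ are what produce a linear term; a bias-free bilinear layer gives only the quadratic part):

$$
\begin{aligned}
P\big[(Wx + b) \odot (Vx + c)\big]
&= \sum_{i,j} f_i f_j \, P\big[W d_i \odot V d_j\big]
 + \sum_i f_i \, P\big[W d_i \odot c + b \odot V d_i\big]
 + P\big[b \odot c\big] \\
&= \sum_i \big(f_i^2 v_i + f_i w_i\big)
 + \sum_{i \neq j} f_i f_j \, P\big[W d_i \odot V d_j\big]
 + P\big[b \odot c\big],
\end{aligned}
$$

with $v_i = P\big[W d_i \odot V d_i\big]$ and $w_i = P\big[W d_i \odot c + b \odot V d_i\big]$. Looking only at the linear term and assuming orthonormal $d_i$, the residual add changes feature $i$'s coefficient along $d_i$ from $f_i$ to $f_i(1 + d_i^\top w_i)$, which grows or shrinks it, while the component of $w_i$ orthogonal to $d_i$ rotates the feature's direction.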

I think you should pay in Counterfactual Mugging, and it's one of the Newcomblike problem classes that is most common in real life.

Example: you find a wallet on the ground. You can, from least to most prosocial:

- Take it and steal the money from it

- Leave it where it is

- Take it and make an effort to return it to its owner

Let's ignore the first option (suppose we're not THAT evil). The universe has randomly selected you today to be in the position where your only options are to spend some resources to no personal gain, or not. In a parallel universe, perhaps...
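The "pay" recommendation rests on scoring policies rather than single choices. A minimal sketch with illustrative stakes (a fair coin, a 100-unit request on one branch, a 10,000-unit reward on the other that goes only to agents who would pay); the function name is hypothetical:

```python
def policy_value(p_asked, cost_if_asked, payout_otherwise):
    """Expected value of committing to pay, evaluated before the coin is flipped."""
    return p_asked * -cost_if_asked + (1 - p_asked) * payout_otherwise


# Illustrative stakes only.
pay = policy_value(0.5, 100, 10_000)
refuse = 0.0
print(pay, refuse)
```

Once you are in the branch where you are asked, paying is a pure loss; the argument is that the policy, scored before the coin lands, is what matters.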

The UK has just switched their available rapid Covid tests from a moderately unpleasant one to an almost unbearable one. Lots of places require them for entry. I think the cost/benefit makes sense even with the new kind, but I'm becoming concerned we'll eventually reach the "imagine a society where everyone hits themselves on the head every day with a baseball bat" situation if cases approach zero.

Just realized I'm probably feeling much worse than I ought to on days when I fast, because I've not been taking sodium. I really should have checked this sooner. If you're planning to do long fasts (I do a day, which definitely feels long), take sodium!