As of today, everyone is able to create a new type of content on LessWrong: Dialogues.

In contrast with posts, which are for monologues, and comment sections, which are spaces for everyone to talk to everyone, a dialogue is a space for a few invited people to speak with each other.

I'm personally very excited about this as a way for people to produce lots of in-depth explanations of their world-models in public.

I think dialogues enable this in a way that feels easier: instead of writing an explanation for anyone who reads, you're communicating with the particular person you're talking with. They also give readers a lot of the rich nuance I normally only find when I overhear people talk in person.

In the rest of this post I'll explain the feature, and then encourage you to find a partner in the comments to try it out with.

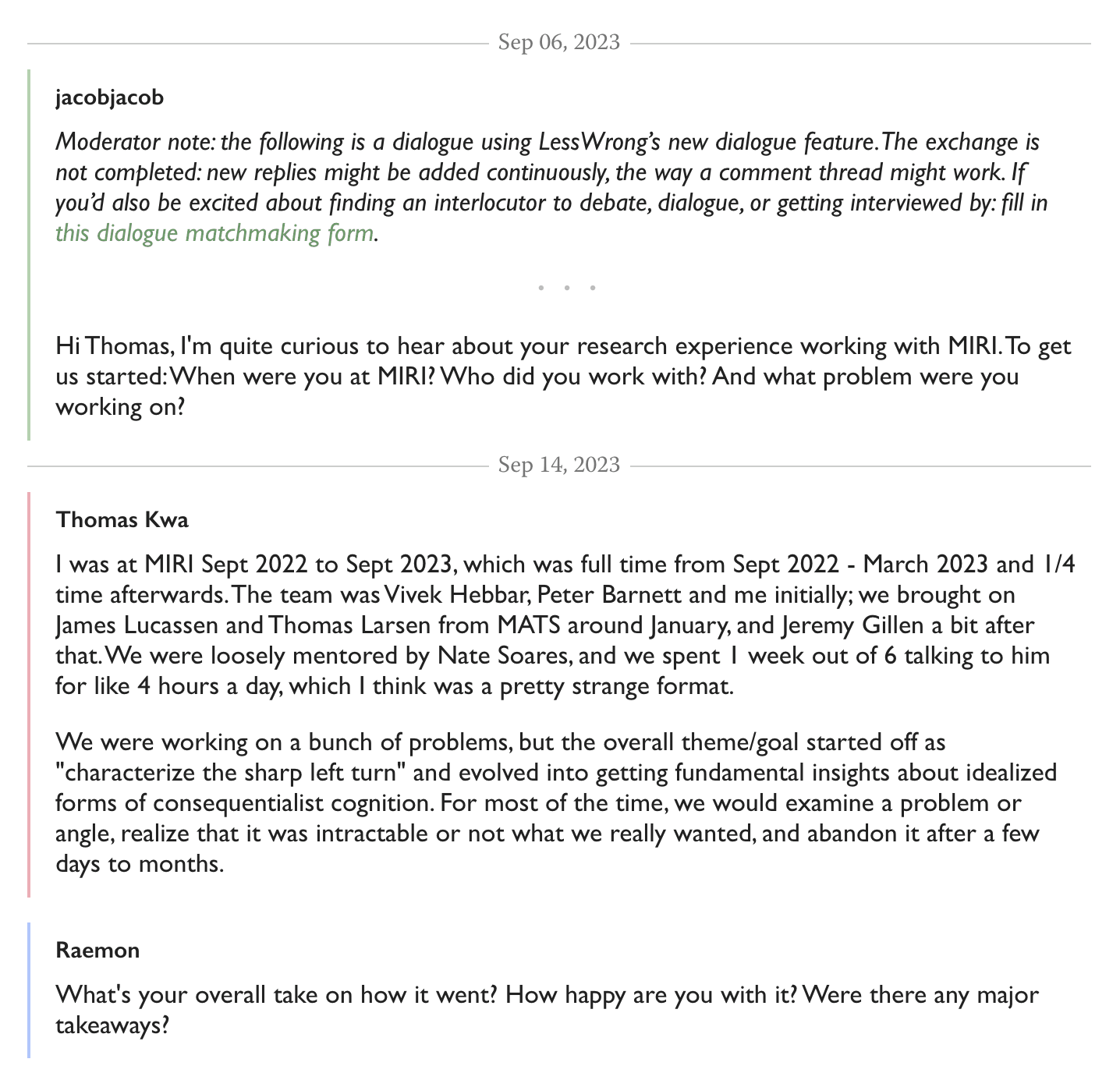

What do dialogues look like?

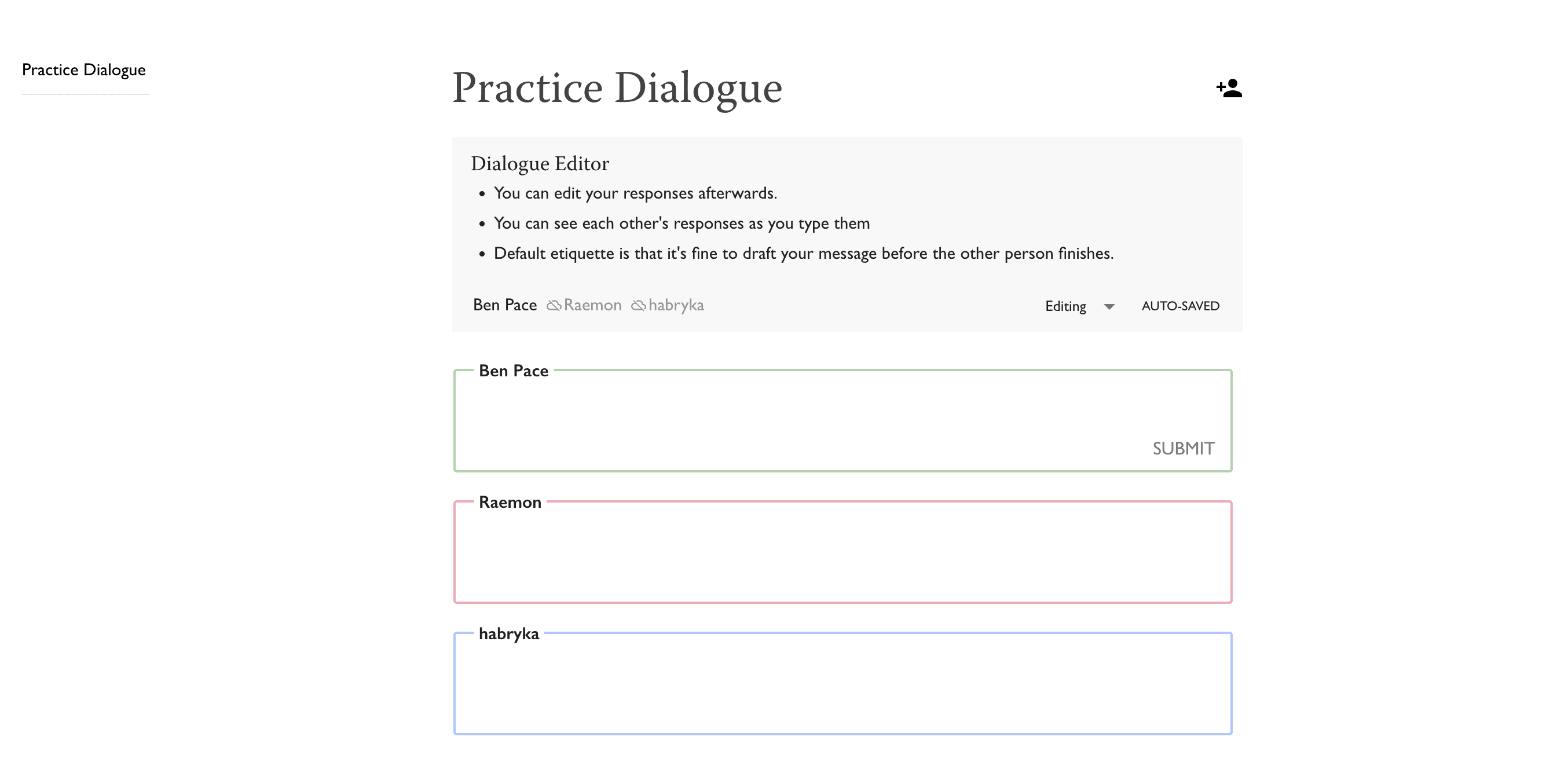

Here is a screenshot of a dialogue with 3 users.

Behind the scenes, the editor interface is a bit different from other editors we've seen on the internet. It's inspired by collaborating in Google Docs, where you're all editing a document simultaneously, and you can see the other person's writing in progress.

This also allows all participants to draft thoughtful replies simultaneously, and to see what the other person is planning to talk about next.

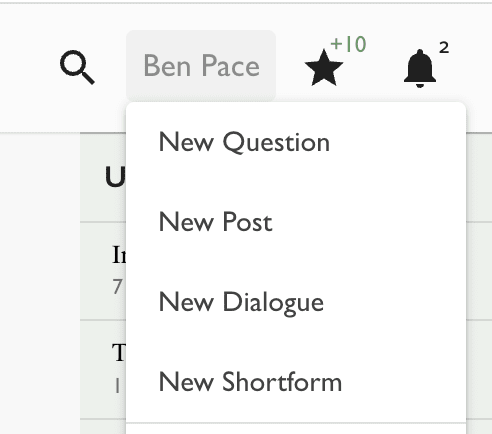

How do I create a dialogue?

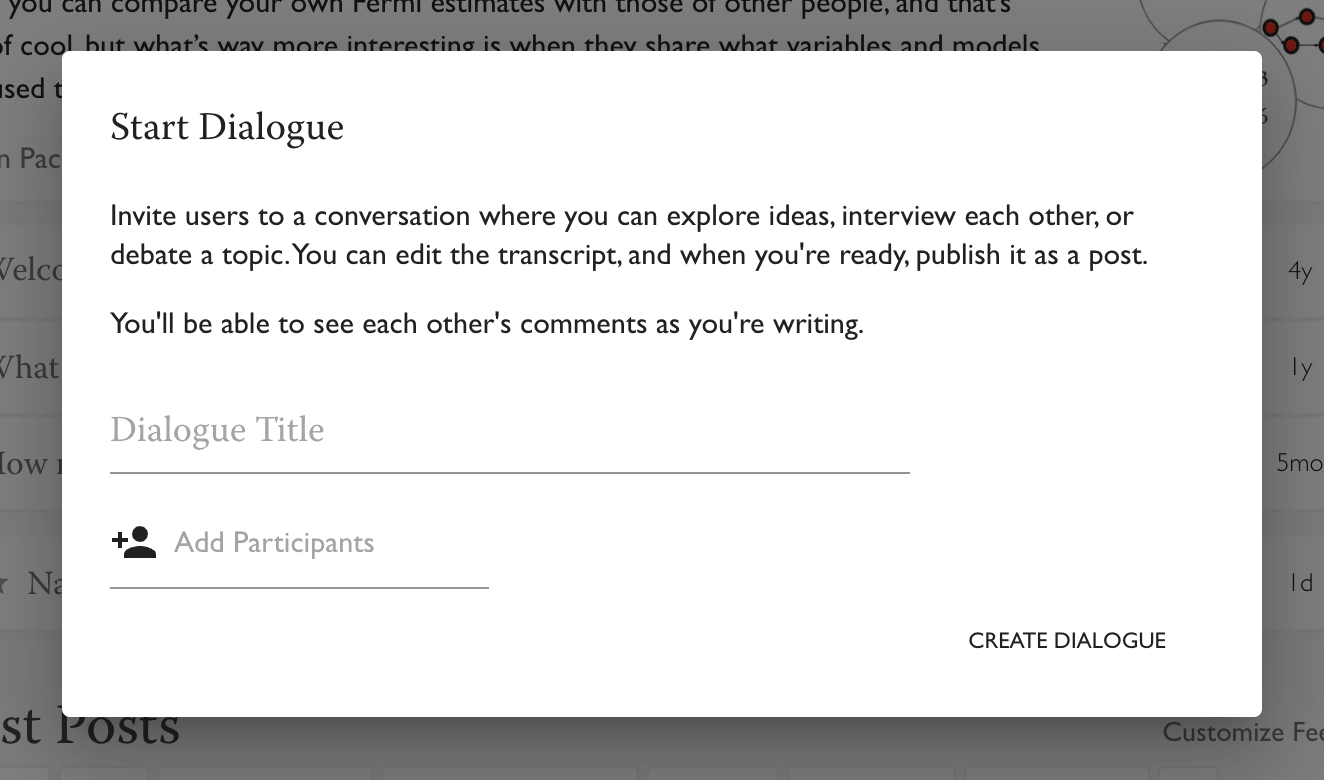

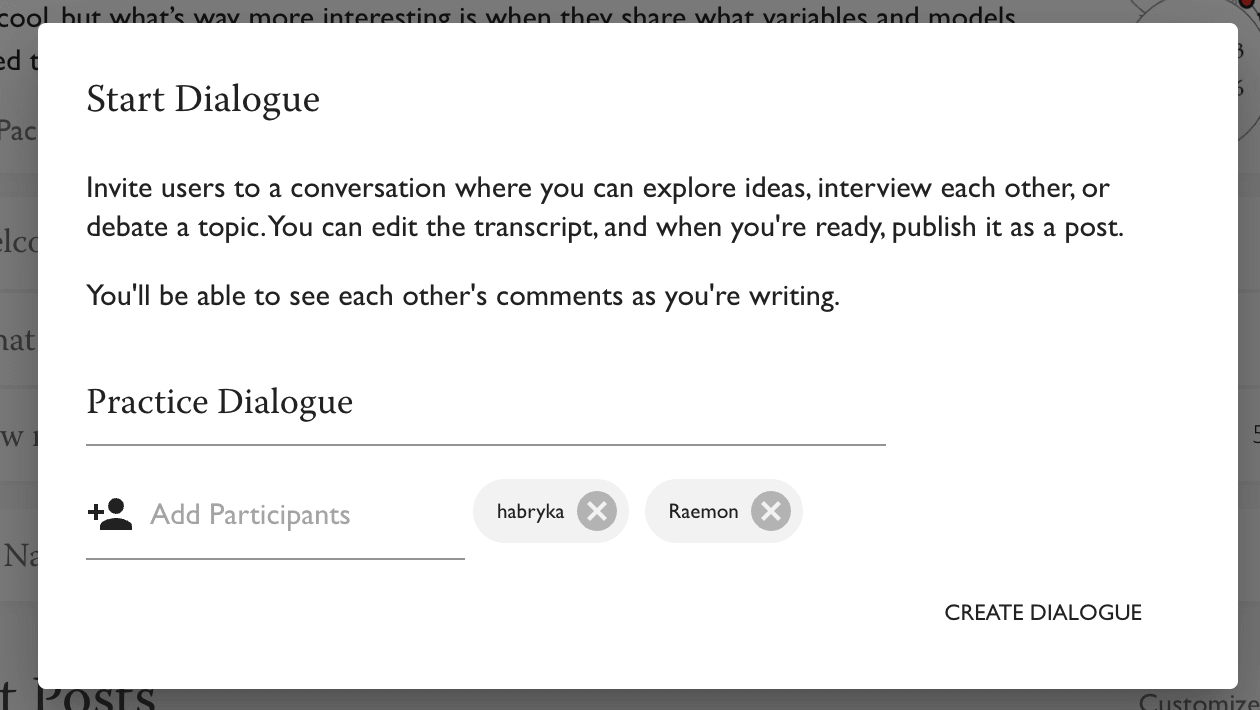

First, hit the "New Dialogue" button in the menu in the top-right of the website.

This pops up a box where you are invited to give your dialogue a title and invite some people.

Then you'll be taken to the editor page!

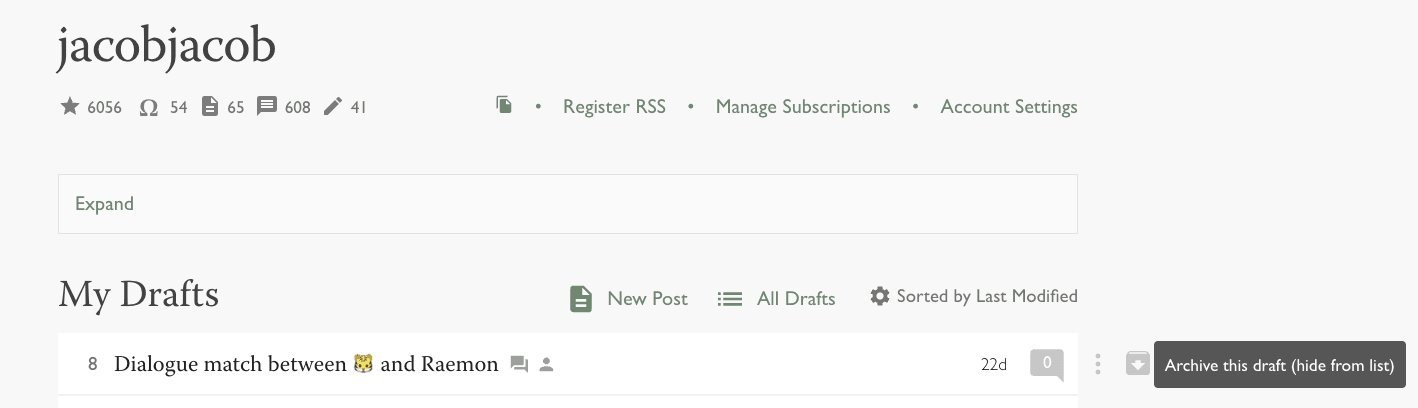

Now you can start writing. The other dialogue participants will receive a notification and the dialogue will appear in their drafts. (Only invite people to dialogues with their consent!)

What are some already published dialogues?

Here are some links to dialogues that have already been published using this feature.

- Thomas Kwa's MIRI research experience (by Thomas Kwa, peterbarnett, Vivek Hebbar, Jeremy Gillen, jacobjacob & Raemon)

- Navigating an ecosystem that might or might not be bad for the world (by habryka & kave)

- Joseph Bloom on choosing AI Alignment over bio, what many aspiring researchers get wrong, and more (interview) (by Ruby & Joseph Bloom)

- What is the optimal frontier for due diligence? (by RobertM & Ruby)

- Feedback-loops, Deliberate Practice, and Transfer Learning (by jacobjacob & Raemon)

Do you have any suggestions about how I can find dialogue partners and topics?

This is a new format for LessWrong, and we don't yet have much experience of people using it to learn from.

Nonetheless, I asked Oliver Habryka for his thoughts on the question, and he wrote the following answer.

My current best-guess recommendation for someone hoping to write their own dialogue would be:

- Think about some topics you are currently actively curious about (could be anything from some specific piece of media that you've recently been affected by to some AI Alignment proposal that seems maybe promising)

- Think about some friends or colleagues or authors you know who you would be excited to talk about this topic with

- Ask them (e.g. via DM) whether they would be up for a dialogue, ideally one that can be published at the end (for at least a decent chunk of people having things be published at the end is a thing that makes it worth their time, when a long private conversation would have too little payoff)

- If they are excited, click the "New Dialogue" button in your user menu in the top right of the page and invite them to the dialogue. If it's someone you know well I would probably recommend starting with a summary of some disagreement you've had with them, or some past thinking you've done on the topic. If it's someone you know less well I would start by asking them some questions about the topic that you are curious about.

- You can have the dialogue asynchronously, though I've had the best experiences with both people typing into the dialogue UI together and seeing live what the other person is typing. This IMO enables much more natural conversations and makes people's responses a lot more responsive to your uncertainties and confusions.

- After 3-4 substantial back-and-forths, you can publish the dialogue, and then continue adding additional replies to it afterwards. This avoids the thing ballooning into something giant, and also enables you to see how much other people like it before investing more time and effort into it (people can subscribe to new dialogue replies and the dialogue will also show up in the "recent dialogues" section on the frontpage whenever a new response is published)

However, I also expect people will come up with new and interesting ways to use this feature to create new types of content. Glowfic feels kind of similar, and maybe people could write cool fiction using this. Or you could just use this for conversations you want to have with people that you never intend to publish, which I have been doing some amount of.

Is there any etiquette for writing dialogues with a partner?

We've only run around 10 of these dialogues, so I'm sure we'll learn more as we go.

However, to give at least some guidance, I'll suggest the following things.

If you're talking synchronously, it's okay to be drafting a comment while the other person is doing the same. It's even okay to draft a reply to what they're currently writing, as long as you let them publish their reply first.

(I think this gives all the advantages of interrupt culture — starting to reply as soon as you've understood the gist of the other person's response, or starting to reply as soon as an idea hits you — with none of the costs, as the other person still gets to take their time writing their response in full.)

It's fine to occasionally do a little meta conversation in the dialogue ("I've got dinner soon", "It seems like you're thinking, do you want time to reflect?", "Do you have more on this thread you want to discuss, or can I change topics?"). If you want to, you can cut some of this out at the end, as the post is still editable. However, if you want to edit or cut anything that your partner wrote, this will become a suggestion in the editor, for your partner to accept or reject.

If you start a dialogue with someone, it helps to open with either your position, or with some questions for them.

Don't leave the dialogue hanging for more than a day without letting your interlocutor know when to expect your next reply, or that you're no longer interested in finishing the dialogue.

How can I find a partner today to try out this new feature with?

One way is to use this comment section!

Reply to this comment with questions you're interested in having a dialogue about.

Then you can DM people who leave comments that you'd like to talk with.

You can also reach out to friends and other people you know :-)

I might be interested in this, depending on what qualifies as "basic", and what you want to emphasize.

I feel like I've been getting into the weeds lately, or watching others get into the weeds, on how various recent alignment and capabilities developments affect what the near future will look like, e.g. how difficult particular known alignment sub-problems are likely to be or what solutions for them might look like, how right various people's past predictions and models were, etc.

And to me, a lot of these results and arguments look mostly irrelevant to the core AI x-risk argument, for which the conclusion is that once you have something actually smarter than humans hanging around, literally everyone drops dead shortly afterwards, unless a lot of things before then have gone right in a complicated way.

(Some of these developments might have big implications for how things are likely to go before we get to the simultaneous-death point, e.g. by affecting the likelihood that we screw up earlier and things go off the rails in some less predictable way.)

But basically everything we've recently seen looks like it is about the character of mind-space and the manipulability of minds in the below-human-level region, and this just feels to me like a very interesting distraction most of the time.

In a dialogue, I'd be interested in fleshing out why I think a lot of results about below-human-level minds are likely to be irrelevant, and where we can look for better arguments and intuitions instead. I also wouldn't mind recapitulating (my view of) the core AI x-risk argument, though I expect I have fewer novel things to say on that, and the non-novel things I'd say are probably already better said elsewhere by others.

I might also be interested in having a dialogue on this topic with someone else if habryka isn't interested, though I think it would work better if we're not starting from too far apart in terms of basic viewpoint.