Vitalik wrote a post making the case for his own take on techno-optimism, summarizing it as an ideology he calls "d/acc". I resonate with a lot of it, though I also have conflicting feelings about trying to create social movements and ideologies like this.

Below are some quotes and the table of contents.

Last month, Marc Andreessen published his "techno-optimist manifesto", arguing for a renewed enthusiasm about technology, and for markets and capitalism as a means of building that technology and propelling humanity toward a much brighter future. The manifesto unambiguously rejects what it describes as an ideology of stagnation, that fears advancements and prioritizes preserving the world as it exists today. This manifesto has received a lot of attention, including response articles from Noah Smith, Robin Hanson, Joshua Gans (more positive), and Dave Karpf, Luca Ropek, Ezra Klein (more negative) and many others. Not connected to this manifesto, but along similar themes, are James Pethokoukis's "The Conservative Futurist" and Palladium's "It's Time To Build for Good". This month, we saw a similar debate enacted through the OpenAI dispute, which involved many discussions centering around the dangers of superintelligent AI and the possibility that OpenAI is moving too fast.

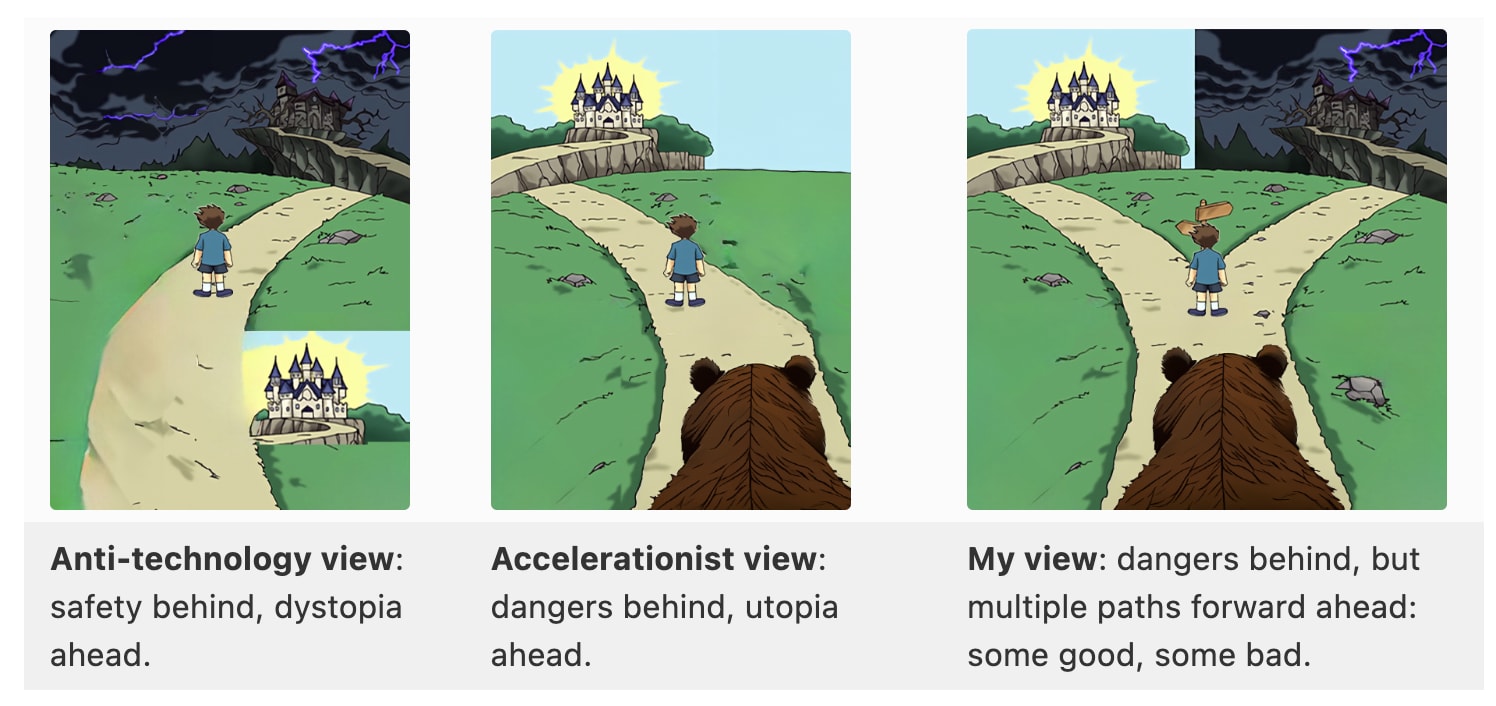

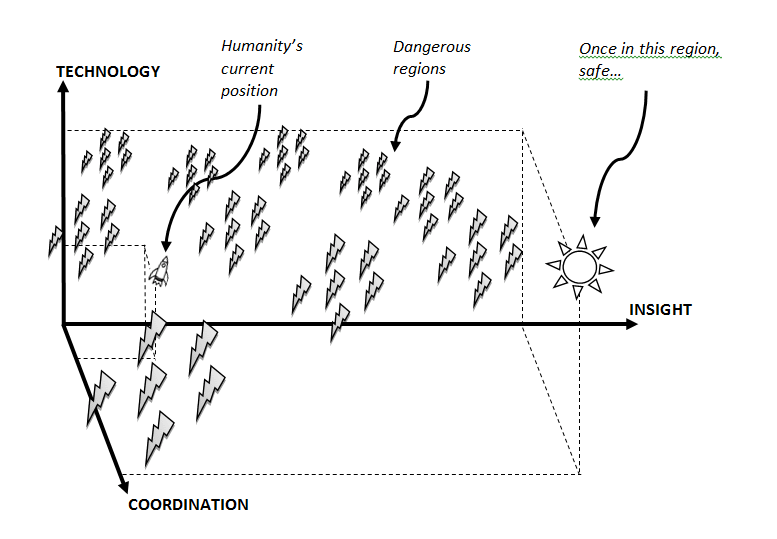

My own feelings about techno-optimism are warm, but nuanced. I believe in a future that is vastly brighter than the present thanks to radically transformative technology, and I believe in humans and humanity. I reject the mentality that the best we should try to do is to keep the world roughly the same as today but with less greed and more public healthcare. However, I think that not just magnitude but also direction matters. There are certain types of technology that much more reliably make the world better than other types of technology. There are certain types of technology that could, if developed, mitigate the negative impacts of other types of technology. The world over-indexes on some directions of tech development, and under-indexes on others. We need active human intention to choose the directions that we want, as the formula of "maximize profit" will not arrive at them automatically.

In this post, I will talk about what techno-optimism means to me. This includes the broader worldview that motivates my work on certain types of blockchain and cryptography applications and social technology, as well as other areas of science in which I have expressed an interest. But perspectives on this broader question also have implications for AI, and for many other fields. Our rapid advances in technology are likely going to be the most important social issue in the twenty-first century, and so it's important to think about them carefully.

Table of contents

- Technology is amazing, and there are very high costs to delaying it

- AI is fundamentally different from other tech, and it is worth being uniquely careful

- Other problems I worry about

- d/acc: Defensive (or decentralization, or differential) acceleration

- So what are the paths forward for superintelligence?

- Is d/acc compatible with your existing philosophy?

- We are the brightest star

An excellent quote: "a market-based society that uses social pressure, rather than government, as the regulator, is not [the result of] some automatic market process: it's the result of human intention and coordinated action."

I think too often people work on things under the illusion that everything else just "takes care of itself."

Everything requires effort.

In the long run, effort is elastic.

Thus, everything is elastic.

Don't take for granted that industry will take care of itself, or art, or music, or AI safety, or basic reading, writing, and arithmetic skills. It's all effort, all the way down.