It's worthy of a (long) post, but I'll try to summarize. For what it's worth, I'll die on this hill.

General intelligence = Broad, cross-domain ability and skills.

Narrow intelligence = Domain-specific or task-specific skills.

The first subsumes the second at some capability threshold.

My bare-bones definition of intelligence: prediction. An intelligent system must be able to consistently predict itself and its environment. To that end it necessarily develops/evolves abilities like learning, environment/self sensing, modeling, memory, salience, planning, heuristics, skills, etc. This is roughly what Ilya says about token prediction necessitating good-enough models to actually be able to predict that next token (although we'd really differ on various details).

Firstly, my view is based on my practical and theoretical knowledge of AI and on insights I believe I have had into the nature of intelligence and generality for a long time. It also draws on systems theory, cybernetics, physics, etc. I believe a holistic view best informs AGI timelines. These views are supported by many cutting-edge AI/robotics results of the last 5-9 years (some old work can be seen in a new light) and especially, obviously, the last 2 or so.

Here are some of the points/beliefs/convictions behind my thinking that AGI, even by the standards of the most creative goalpost movers, is basically 100% likely before 2030, and very likely much sooner. I also expect a fast takeoff, understood as the idea that beyond a certain capability threshold for self-improvement, AI will develop faster than natural, unaugmented humans can keep up with.

It would be quite a lot of work to make this very formal, so here are some key points put informally:

- Weak generalization has already been achieved. This is something we are piggybacking off of already, and there has been meaningful utility since GPT-3 or so. This is an accelerating factor.

- Underlying techniques (transformers, etc.) generalize and scale.

- Generalization and performance across unseen tasks improve with multi-modality.

- Generalist models outdo specialist ones in all sorts of scenarios and cases.

- Synthetic data doesn't necessarily lead to model collapse and can even be better than real world data.

- It looks like intelligence can basically be brute-forced, so one should take Kurzweil *very* seriously (he tightly couples his predictions to increases in computation).

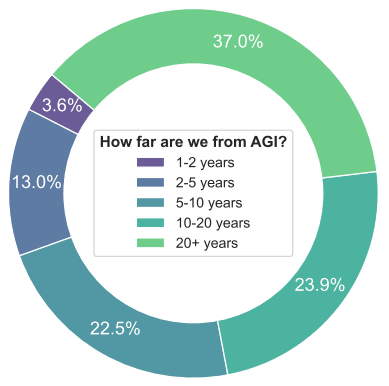

- Timelines shrank massively across the board for virtually all top AI names/experts in the last 2 years. Top experts were surprised by the last 2 years.

- Bitter Lesson 2.0: there are more bitter lessons than Sutton's, namely that all sorts of old techniques can be combined for great increases in results. See the evidence in papers linked below.

- "AGI" went from a taboo "bullshit pursuit for crackpots", to a serious target of all major labs, publicly discussed. This means a massive increase in collective effort, talent, thought, etc. No more suppression of cross-pollination of ideas, collaboration, effort, funding, etc.

- The spending on AI strongly bolsters the previous point. Even if we can't speak of a Manhattan Project analogue, you can say that's pretty much what's going on. Insane concentrations of talent hyper-focused on AGI. Unprecedented human cycles dedicated to AGI.

- Regular software engineers can achieve better results or utility by orchestrating current models and augmenting them with simple techniques (RAG, etc.). Meaning? Trivial augmentations to current models increase capabilities. This low-hanging fruit implies medium- and high-hanging fruit (which we know is there, see other points).

I'd also like to add that I think intelligence is multi-realizable, and that soon after we hit generality and realize this, it will be considered much less remarkable than some still think it is.

Anywhere you look: the spending, the cognitive effort, the (very recent) results, the utility, the techniques...it all points to short timelines.

In terms of AI papers, I have 50 or so references that I think support the above as well. Here are a few:

SDS - See it, Do it, Sorted: Quadruped Skill Synthesis from Single Video Demonstration, Jeffrey L., Maria S., et al. (2024).

DexMimicGen: Automated Data Generation for Bimanual Dexterous Manipulation via Imitation Learning, Zhenyu J., Yuqi X., et al. (2024).

One-Shot Imitation Learning, Duan, Andrychowicz, et al. (2017).

Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks, Finn et al. (2017).

Unsupervised Learning of Semantic Representations, Mikolov et al. (2013).

A Survey on Transfer Learning, Pan and Yang (2009).

Zero-Shot Learning - A Comprehensive Evaluation of the Good, the Bad and the Ugly, Xian et al. (2018).

Learning Transferable Visual Models From Natural Language Supervision, Radford et al. (2021).

Multimodal Machine Learning: A Survey and Taxonomy, Baltrušaitis et al. (2018).

Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine, Harsha N., Yin Tat Lee et al. (2023).

A Vision-Language-Action Flow Model for General Robot Control, Kevin B., Noah B., et al. (2024).

Open X-Embodiment: Robotic Learning Datasets and RT-X Models, Open X-Embodiment Collaboration, Abby O., et al. (2023).

I think the gaps between where we are and roughly human-level cognition are smaller than they appear. Modest improvements in to-date neglected cognitive systems can allow LLMs to apply their cognitive abilities in more ways, allowing more human-like routes to performance and learning. These strengths will build on each other nonlinearly (while likely also encountering unexpected roadblocks).

Timelines are thus very difficult to predict, but ruling out very short timelines based on averaging predictions without gears-level models of fast routes to AGI would be a big mistake. Whether and how quickly they work is an empirical question.

One blocker to taking short timelines seriously is the belief that fast timelines mean likely human extinction. I think they're extremely dangerous but that possible routes to alignment also exist - but that's a separate question.

I also think this is the current default path, or I wouldn't describe it.

I think my research career using deep nets and cognitive architectures to understand human cognition is pretty relevant for making good predictions on this path to AGI. But I'm biased, just like everyone else.

Anyway, here's very roughly why I think the gaps are smaller than they appear.

Current LLMs are like humans with excellent:

They can now do almost all short time-horizon tasks that are framed in language better than humans. And other networks can translate real-world systems into language and code, where humans haven't already done it.

But current LLMs/foundation models are dramatically missing some human cognitive abilities:

Those lacks would appear to imply long timelines.

But both long time-horizon tasks and self-directed learning are fairly easy to reach. The gaps are not as large as they appear.

Agency is as simple as repeatedly calling a prompt of "act as an agent working toward goal X; use tools Y to gather information and take actions as appropriate". The gap between a good oracle and an effective agent is almost completely illusory.
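A minimal sketch of that loop, to make the claim concrete. Everything here is an illustrative assumption rather than any lab's actual scaffold: `run_agent`, the `(action, argument)` reply format, and the `scripted_model` stub that stands in for a real LLM call are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
# Hypothetical model interface: takes a prompt, returns (action, argument).
Model = Callable[[str], Tuple[str, str]]


def run_agent(goal: str, tools: Dict[str, Tool], call_model: Model,
              max_steps: int = 10) -> str:
    """Repeatedly prompt the model as an agent until it finishes or runs out of steps."""
    history: List[str] = []
    for _ in range(max_steps):
        prompt = (
            f"Act as an agent working toward goal: {goal}. "
            f"Use tools {sorted(tools)} to gather information and take "
            "actions as appropriate.\nHistory so far:\n" + "\n".join(history)
        )
        action, arg = call_model(prompt)
        if action == "finish":
            return arg
        if action not in tools:
            # Feed the mistake back so the next call can correct it.
            history.append(f"error: unknown tool {action!r}")
            continue
        observation = tools[action](arg)
        history.append(f"{action}({arg!r}) -> {observation}")
    return "gave up: step budget exhausted"


def scripted_model(prompt: str) -> Tuple[str, str]:
    """Stand-in for an LLM: look something up once, then report the result."""
    if "-> " not in prompt:
        return ("lookup", "capital of France")
    return ("finish", prompt.rsplit("-> ", 1)[-1])


facts = {"capital of France": "Paris"}
tools = {"lookup": lambda query: facts.get(query, "unknown")}
answer = run_agent("Find the capital of France", tools, scripted_model)
```

The only state is the `history` list that gets pasted back into each prompt; the rest is the same oracle called again and again.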

Episodic memory is less trivial, but still relatively easy to improve from current near-zero-effort systems. Efforts from here will likely build on LLMs' strengths. I'll say no more publicly; DM me for details. But it doesn't take a PhD in computational neuroscience to rederive this, which is the only reason I'm mentioning it publicly. More on infohazards later.

Now to the capabilities payoff: long time-horizon tasks and continuous, self-directed learning.

Long time-horizon task abilities are an emergent product of episodic memory and general cognitive abilities. LLMs are "smart" enough to manage their own thinking; they don't have instructions or skills to do it. o1 appears to have those skills (although no episodic memory, which is very helpful in managing multiple chains of thought), so similar RL training on chains of thought is probably one route to achieving those.

Humans do not mostly perform long time-horizon tasks by trying them over and over. They either ask someone how to do it, then memorize and reference those strategies with episodic memory; or they perform self-directed learning, and pose questions and form theories to answer those same questions.

Humans do not have or need "9s of reliability" to perform long time-horizon tasks. We substitute frequent error-checking and error-correction. We then learn continuously on both strategy (largely episodic memory) and skills/habitual learning (fine-tuning LLMs already provides a form of this habitization of explicit knowledge to fast implicit skills).
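A back-of-the-envelope calculation shows how much checking can buy. This is only a sketch under strong assumptions that are mine, not claims from the argument above: step errors are independent, every error is detected, and a failed step can simply be retried. The function names are hypothetical.

```python
def success_without_checking(p_step: float, n_steps: int) -> float:
    """Chance of finishing n steps when any single failure ruins the task."""
    return p_step ** n_steps


def success_with_checking(p_step: float, n_steps: int, max_attempts: int) -> float:
    """Chance of finishing when each step is checked and retried on failure.

    Assumes perfect error detection and independent attempts.
    """
    p_step_eventually = 1.0 - (1.0 - p_step) ** max_attempts
    return p_step_eventually ** n_steps


# A 50-step task at 90% per-step reliability:
unchecked = success_without_checking(0.9, 50)            # roughly 0.5%
checked = success_with_checking(0.9, 50, max_attempts=3)  # roughly 95%
```

With imperfect error detection the gain shrinks, but the shape of the argument is the same: cheap checking and retrying can stand in for extra 9s of per-step reliability.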

Continuous, self-directed learning is a product of having any type of new learning (memory), and using some of the network's or agent's cognitive abilities to decide what's worth learning. This learning could be selective fine-tuning (like o1's "deliberative alignment"), episodic memory, or even very long context with good access as a first step. This is how humans master new tasks, along with taking instruction wisely. This would be very helpful for mastering economically viable tasks, so I expect real efforts put into mastering it.
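As a toy illustration of "decide what's worth learning, then store it", here is a deliberately naive sketch. The `EpisodicMemory` class, the `worth_learning` callback (which in a real agent would be the model's own judgment, here just a keyword rule), and the word-overlap recall are all hypothetical simplifications and not a description of any existing system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EpisodicMemory:
    # Judge of what is worth keeping; a stand-in for the agent's own cognition.
    worth_learning: Callable[[str], bool]
    episodes: List[str] = field(default_factory=list)

    def observe(self, event: str) -> bool:
        """Store the event only if the judge considers it worth learning."""
        if self.worth_learning(event):
            self.episodes.append(event)
            return True
        return False

    def recall(self, query: str, k: int = 3) -> List[str]:
        """Return up to k stored episodes sharing the most words with the query."""
        query_words = set(query.lower().split())
        scored = [(len(query_words & set(e.lower().split())), e)
                  for e in self.episodes]
        relevant = [pair for pair in scored if pair[0] > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [episode for _, episode in relevant[:k]]


# Keep only outcomes (successes and failures), drop idle chatter.
memory = EpisodicMemory(worth_learning=lambda e: "worked" in e or "failed" in e)
memory.observe("retrying the build with cache cleared worked")
memory.observe("the weather is nice")
memory.observe("deploy failed because the token expired")
hits = memory.recall("why did deploy fail")
```

Swapping the list for fine-tuning or a long context window changes the storage mechanism but not the structure: a judgment about salience followed by a write.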

Self-directed learning would also be critical for an autonomous agent to accomplish entirely novel tasks, like taking over the world.

This is why I expect "Real AGI" that's agentic and learns on its own, and not just transformative tool "AGI", within the next five years (or less). It's easy and useful, and perhaps the shortest path to capabilities (as with humans teaching themselves).

If that happens, I don't think we're necessarily doomed, even without much new progress on alignment (although more progress would definitely improve our odds!). We are already teaching LLMs mostly to answer questions correctly and to follow instructions. As long as nobody gives their agent an open-ended top-level goal like "make me lots of money", we might be okay. Instruction-following AGI is easier and more likely than value-aligned AGI, although I need to work through and clarify why I find this so central. I'd love help.

Convincing predictions are also blueprints for progress. Thus, I have been hesitant to say all of that clearly.

I said some of this at more length in "Capabilities and alignment of LLM cognitive architectures" and elsewhere. But I didn't publish it in my previous neuroscience career nor have I elaborated since then.

But I'm increasingly convinced that all of this stuff is going to quickly become obvious to any team that sits down and starts thinking seriously about how to get from where we are to really useful capabilities. And more talented teams are steadily doing just that.

I now think it's more important that the alignment community takes short timelines more seriously, rather than hiding our knowledge in hopes that it won't be quickly rederived. There are more and more smart and creative people working directly toward AGI. We should not bet on their incompetence.

There could certainly be unexpected theoretical obstacles. There will certainly be practical obstacles. But even with expected discounts for human foibles and idiocy and unexpected hurdles, timelines are not long. We should not assume that any breakthroughs are necessary, or that we have spare time to solve alignment adequately to survive.

Great reply!

On episodic memory:

I've been watching Claude play Pokemon recently and I got the impression of, "Claude is overqualified but suffering from the Memento-like memory limitations. Probably the agent scaffold also has some easy room for improvements (though it's better than post-it notes and tattooing sentences on your body)."

I don't know much about neuroscience or ML, but how hard can it be to make the AI remember what it did a few minutes ago? Sure, that's not all that's between Claude and TAI, but given that Claude is now within the human expert ...