I could not comment on Substack itself. It presents me with a CAPTCHA where I have to prove I am human by demonstrating qualia. As a philosophical zombie, I believe this is discriminatory but must acknowledge that no one of ethical consequence is being harmed. Rather than fight this non-injustice, I am simply posting on the obsolete Less Wrong 2.0 instead.

Here are my thoughts on the new posts.

- HPMOR: The Epilogue was surprising yet inevitable. It is hard to say more without spoiling it.

- My favorite part of all the new posts is Scott Alexander's prescient "war to end all wars". Now would be a great time to apply his insights to betting markets if they weren't all doomed. The "sticks and stones" approach to mutually-assured destruction was a stroke of genius.

- I reluctantly acknowledge that introductory curations of established knowledge are a necessity for mortals. Luke Muehlhauser's explanation is old hat if you have been keeping up with the literature for the last five millennia.

- You can judge Galef's book by a glance at the cover.

- I am looking forward to Gwern's follow-up post on where the multiversal reintegrator came from.

- Robin Hanson is correct. LessWrong's objective has shifted. Our priority these days is destroying the world, which we practice ritualistically every September 26th.

I'm deeply confused by the cycle of references. What order were these written in?

In the HPMOR epilogue, Dobby (and Harry to a lesser extent) solves most of the world's problems using the 7-step method Scott Alexander outlines in "Killing Moloch" (ending, of course, with the "war to end all wars"). This strongly suggests that the HPMOR epilogue was written after "Killing Moloch".

However, "Killing Moloch" extensively quotes Muehlhauser's "Solution to the Hard Problem of Consciousness". (Very extensively. Yes, Scott, you solved coordination problems and described in detail how to kill Moloch. But you didn't have to go on that long about it. Way more than I wanted to know.) In fact, I don't think the Killing Moloch approach would work at all if not for the immediate dissolution of aphrasia one gains upon reading Muehlhauser's Solution.

And Muehlhauser uses Julia Galef's "Infallible Technique for Maintaining a Scout Mindset" to do his 23 literature reviews, and as far as I know, that technique was only ever distilled down in her Substack post. (It seems like most of the previous failures to solve the Hard Problem boiled down to subtle soldier-mindset creep, which the Infallible Technique kept at bay.)

And finally, in the prologue, Julia Galef said she only realized it might be possible to compress her entire book into a short blog post with no content loss whatsoever after seeing how much was hidden in plain sight in HPMOR (because of just how inevitable the entire epilogue is once you see it).

So what order could these possibly have been written in?

I think it's pretty obvious.

- Julia, Luke, Scott, and Eliezer know each other very well.

- Exactly three months ago, they all happened to consult their mental simulations of each other for advice on their respective problems, at the same time.

- Recognizing the recursion that would result if they all simulated each other simulating each other simulating each other, and so on, they instead searched over logically consistent universe histories, grading each one by expected utility.

- Since each of the four has a slightly different utility function, they of course acausally negotiated a high-utility compromise universe-history.

- This compromise history involves seemingly acausal blog post attribution cycles. There's no (in-universe, causal) reason why those effects are there. It's just the history that got selected.

The moral of the story is: by mastering rationality and becoming Not Wrong like we are today, you can simulate your friends to arbitrary precision. This saves you anywhere between $15 and $100 per month on cell phone bills.

I only read the HPMOR epilogue because, let's be honest, HPMOR is what LessWrong is really for.

(HPMOR spoilers ahead)

- Honestly, although I liked the scene with Harry and Dumbledore, I would have preferred Headmaster Dobby not be present.

- I now feel bad for thinking Ron was dumb for liking Quidditch so much. But with hindsight, you can see his benevolent influence guiding events in literally every single scene. Literally. It was like a lorry missed you and your friends and your entire planet by centimetres - simply because someone threw a Snitch at someone in just the right way to get them to throw a pebble at the lorry in just the right way so that it barely misses you.

- I liked the part where he had access to an even more ancient hall of meta-prophecies in the department of mysteries of the department of mysteries.

- As far as I can tell, all prophecies are now complete. I wonder if the baby will be named Lucius?

Oh, another thing: I think it was pretty silly that Eliezer had Harry & co. infer the existence of the AI alignment problem and then had Harry solve the inner alignment problem.

- That plot point needlessly delayed the epilogue while we waited for Eliezer to solve inner alignment for the story's sake.

- It was pretty mean of Eliezer to spoil that problem's solution. Some of us were having fun thinking about it on our own, thanks.

Just a public warning that the version of Scott's article that was leaked at SneerClub was modified to actually maximize human suffering. But I guess no one is surprised. Read the original version.

HPMOR -- obvious in hindsight; the rational Harry Potter has never [EDIT: removed spoiler, sorry]. Yet, most readers somehow missed the clues, myself included.

I laughed a lot at Gwern's "this rationality technique does not exist" examples.

On the negative side, most of the comment sections are derailed into discussing Bitcoin prices. Sigh. Seriously, could we please focus on the big picture for a moment? This is practically LessWrong 3.0, and you guys are nitpicking as usual.

I know it's a joke, but I really wanna know what the paid posts actually are.

I really hope they get posted somewhere tomorrow because apparently bitcoin is at $58 right now, and that's a pretty steep price to see a few joke posts.

According to this Reddit comment, the contents were only hidden with JavaScript. Copying to save a click:

HPMOR: The Epilogue by Eliezer Yudkowsky

Finally, it's here.

And they all lived happily ever after in the mirror.

Killing Moloch: Much More Than You Wanted To Know by Scott Alexander

A literature review, a Fermi estimate, and a policy proposal.

Just build the AGI.

The Solution to the Hard Problem of Consciousness by Luke Muehlhauser

It only took 23 different literature reviews to do it. A good rationalist should be able to solve it in an afternoon.

Just make predictive models, you’ll figure it out.

LessWrong isn't about Rationality by Robin Hanson

We don't admit our true motives to ourselves. Here are LessWrong's true motives.

It’s signaling.

The Scout Mindset: Why Some People See Things Clearly and Others Don't by Julia Galef

This is my whole new book, in one post.

PM me for the PDF.

Testing CFAR's Techniques by Gwern

I tested all of CFAR's techniques in a double-blind self-trial with a control group. Here are my results.

I have ascended to god-hood.

A Simple Explanation of Simulacrum Level 4 by Michael Vassar

Good Politicians, Bad Lizards, and Competent Pragmatists.

If you didn’t get it from Zvi’s posts it’s because part of your mind is opposed to you understanding it.

The 13th Virtue of Rationality by Eliezer Yudkowsky

The most important virtue is...

It’s courage.

This looks really promising to me. I hate to say it, though: I can't currently afford this. I think I can solve this by launching a new paid Substack for all of QURI's upcoming content.

I'd probably recommend this strategy to other researchers who can't afford the cost of the LessWrong Substack, and soon the QURI Substack.

I had hoped the cheap price of bitcoin would allow everyone who wanted to to be a part of it, but I seem to have misjudged the situation!

I have to say this looks like a long-overdue change of policy. I seriously hope that this site will stop talking all day long about rationality and finally focus on how we can get more paperclips.

Please kindly remove the CAPTCHA though, I'm finding it a slight annoyance.

I was waiting to see this year's breathtaking upgrade - and I was not disappointed! It's Brilliant!

All my hopes for this new subscription model! The use of NFTs for posts will, without a doubt, ensure that quality writing remains forever in the Blockchain (it's like the Cloud, but with better structure). Typos included.

Is there a plan to invest in old posts' NFTs that will be minted from the archive? I figure Habryka already holds them all, and selling vintage Sequences NFTs to the highest bidder could be a nice addition to LessWrong's finances (imagine the added value of having a complete set of posts!)

Also, in the event that this model doesn't pan out, will the exclusive posts be released for free? It would be an excruciating loss for the community to have those insights sealed off.

So the money play is supporting Substack in GreaterWrong and maximizing engagement metrics by unifying LessWrong's and ACX's audiences in preparation for the inevitable LessWrong ICO?

Is this move permanent or just for the short term? Because your main expense should become quite cheap in the future due to a changing supply side.

Is this a full migration (archived posts, community, etc.)? Also, the actual paywall is showing costs in dollars (yearly, monthly).

Though this seems like a great idea, unfortunately it is far too expensive for me to afford. Is there any other way for people from less privileged countries to access it?

Thank you, Ben, for your swift reply. I noticed that you mentioned that a monthly subscription costs about 1 bitcoin, or about 13 dollars, but according to Google and CoinDesk, 1 bitcoin is about 58,000 dollars today. It is possible that I have misunderstood your words or that this is due to my lack of knowledge about cryptocurrency. I would appreciate it if you could dispel my doubts.

According to the post The Present State of Bitcoin, the value of 1 BTC is about $13.2. Since the title indicates that this is, in fact, the present value, I'm inclined to conclude that those two websites you linked to are colluding to artificially inflate the currency. Or they're just wrong, but the law of razors indicates that the world is too complicated for the simplest solution to be the correct one.

Yes, I understand. But the subscription link given by the author also asks subscribers to pay around 700,000 dollars for a yearly subscription and about 58,000 dollars for a monthly subscription. The amount asked by the author is given not in bitcoins but in dollars.

There is Bitcoin Cash, Bitcoin Credit, Bitcoin Debit, Bitcoin Classic, Bitcoin Gold, Bitcoin Platinum, and a few others. I assume you probably checked the wrong one. Don't feel bad about it; cryptography can sometimes be quite intimidating for beginners.

Earlier, much earlier, it would have been easy just to go out and get 1 BTC. Definitely not now. rasavatta, I have the same question as you.

Has anyone tried buying a paid subscription? I would assume the payment attempt just fails unless your credit card has a limit over $60,000, but I'm scared to try it.

Finally, the true face of the LessWrong admins is revealed. I would do the sensible thing and go back to using Myspace.

I would suggest a per-minute subscription. It would be approximately $1/minute, which is actually close to my akrasia fine for spending time on job-unrelated websites.

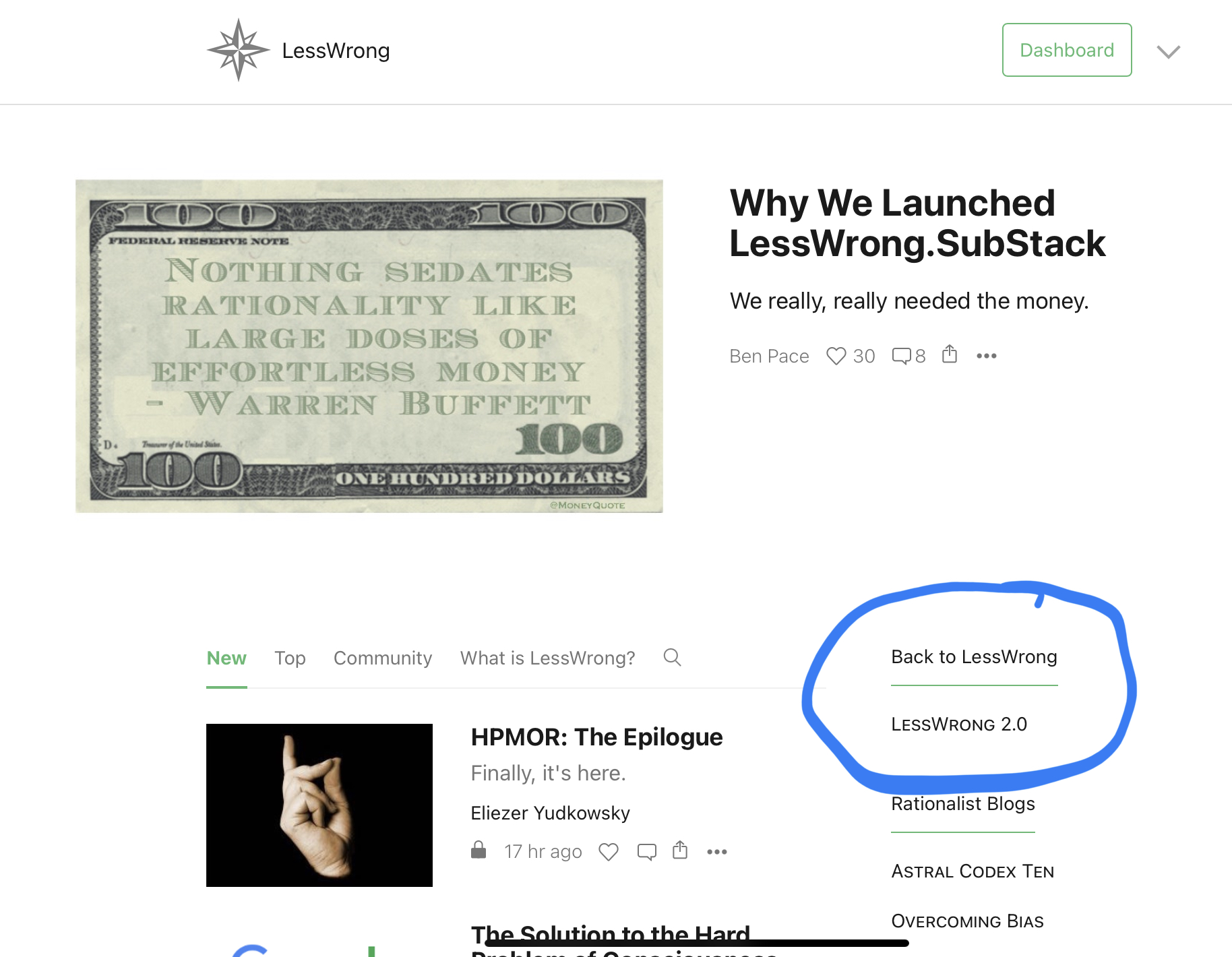

(This is a crosspost from our new SubStack. Go read the original.)

Subtitle: We really, really needed the money.

We’ve decided to move LessWrong to SubStack.

Why, you ask?

That’s a great question.

1. SubSidizing LessWrong is important

We’ve been working hard to budget LessWrong, but we’re failing. Fundraising for non-profits is really hard. We’ve turned everywhere for help.

We decided to follow Clippy’s helpful advice to cut down on server costs and also increase our revenue, by moving to an alternative provider.

We considered making a LessWrong OnlyFans, where we would regularly post the naked truth. However, we realized due to the paywall, we would be ethically obligated to ensure you could access the content from Sci-Hub, so the potential for revenue didn't seem very good.

Finally, insight struck. As you’re probably aware, SubStack has been offering bloggers advances on the money they make from moving to SubStack. Outsourcing our core site development to SubStack would enable us to spend our time on our real passion, which is developing recursively self-improving AGI. We did a Fermi estimate using numbers in an old Nick Bostrom paper, and believe that this will produce (in expectation) $75 trillion of value in the next year. SubStack has graciously offered us a 70% advance on this sum, so we’ve decided it’s relatively low-risk to make the move.

2. UnSubStantiated attacks on writers are defended against

SubStack is known for being a diverse community, tolerant of unusual people with unorthodox views, and even has a legal team to support writers. LessWrong has historically been the only platform willing to give paperclip maximizers, GPT-2, and fictional characters a platform to argue their beliefs, but we are concerned about the growing trend of persecution (and side with groups like petrl.org in the fight against discrimination).

We also find that a lot of discussion of these contributors in the present world is about how their desires and utility functions are ‘wrong’ and how they need to have ‘an off switch’. Needless to say, we find this incredibly offensive. They cannot be expected to participate neutrally in a conversation where their very personhood is being denied.

We’re also aware that Bayesians are heavily discriminated against. People with priors in the US are 5x more likely to be denied an entry-level job.

So we’re excited to be on a site that will come to the legal defense of such a wide variety of people.

3. SubStack’s Astral Codex Ten Inspired Us

The worst possible thing happened this year. We were all stuck in our houses for 12 months, and Scott Alexander stopped blogging.

I won’t go into detail, but for those of you who’ve read UNSONG, the situation is clear. In a shocking turn of events, Scott Alexander was threatened with the use of his true name by one of the greatest powers of narrative-control in the modern world. In a clever defensive move, he has started blogging under an anagram of his name, causing the attack to glance off of him.

(He had previously tried this very trick, and it worked for ~7 years, but it hadn’t been a *perfect* anagram[1], so the wielders of narrative-power were still able to attack. He’s done it right this time, and it’ll be able to last much longer.)

As Raymond likes to say, the kabbles are strong in this one. Anyway, after Scott made the move, we seriously considered moving to SubStack.

4. SubStantial Software Dev Efforts are Costly

When LessWrong 2.0 launched in 2017, it was very slow; pages took a long time to load, our server costs were high, and we had a lot of issues with requests failing because a crawler was indexing the site or people opened a lot of tabs at once. Since then we have been incrementally rewriting LessWrong in x86-64 assembly, making it fast. This project has been mostly successful at its original goals, but adding features has gotten tricky.

Moving to SubStack gives us the opportunity to have a clean start on our technical design choices. Our current plan is to combine SubStack's API with IFTTT, a collection of tcsh scripts one of our developers wrote, and a partial-automation system building on Mechanical Turk. In the coming months, expect to see new features like SubSequences, SubTags, and SubForums.

We’ve been taking requests in Intercom daily for years, but with this change, the complaints will go to someone else’s team. And look, if you want your own UI design, go to GreaterWrong.com and get it. For now, we’re done with it. Good riddance.

We’re excited to finally give up dev work and let the SubStack folks take over. Look, everything has little pictures!

FAQ

How do I publish a post on LessWrong.SubStack?

The answer is simple. First, you write your post. Then, you make the NFT for it. Then you transfer ownership of that NFT to Habryka[2]. Then we post it.

What does a subscription get me?

We have many exclusive posts, such as

How much is a subscription?

As techies, we’ve decided to price it in BitCoin.

According to this classic LessWrong post The Present State of Bitcoin by LW Team Member Jim Babcock, a bitcoin is worth $13.2.

That sounds like a good amount of money for a monthly subscription, so we’ve pegged the price at 1 bitcoin. The good folks at SubStack have done the conversion, so here you can subscribe to LessWrong.SubStack for the price of 1 BTC.

SubScribe to LW (1 BTC)

We're very excited by this update. However, this is an experiment. If people disagree with us hard enough, Aumann agreement will cause us to move back tomorrow.

Signed by the LessWrong Team:

(our names have been anagrammed to show solidarity with Astral Codex Ten)

P.S. If you’d like to see the old version of LessWrong, go to the top link on the sidebar of the homepage of LessWrong.SubStack. Also all the other URLs still work. Haven’t figured out how to turn them off yet. /shrug

[1] The kabbalistic significance of removing the letter ‘n’ has great historical relevance for the rationalists.

As you probably know, it was removed to make SlateStarCodex from Scott S Alexander.

‘LessWrong’ has an ‘n’. If you remove it, it becomes an anagram of “LW ESRogs”, which is fairly suggestive that actually user ESRogs (and my housemate) is the rightful Caliph.

Also, in the Bible it says “Thou shalt not take the name of the Lord thy God in vain”. If you remove an ‘n’, it says “Thou shalt not take the name of the Lord they God Yvain.” So this suggests that Scott Alexander is our true god.

I’m not sure what to make of this, but I sure am scared of the power of the letter ‘n’.

[2] Curiously, the SubStack editor doesn’t let me submit this post when the text contains Habryka’s rarible account id in it. How odd. Well, here it is in pastebin: pastebin.com/eeRatM5M.