I got access to DALL-E 2 earlier this week, and have spent the last few days (probably adding up to dozens of hours) playing with it, with the goal of mapping out its performance in various areas – and, of course, ending up with some epic art.

Below, I've compiled a list of observations made about DALL-E, along with examples. If you want to request art of a particular scene, or to see what a particular prompt does, feel free to comment with your requests.

DALL-E's strengths

Stock photography content

It's stunning at creating photorealistic content for anything that (this is my guess, at least) has a broad repertoire of online stock images – which is perhaps less interesting because if I wanted a stock photo of (rolls dice) a polar bear, Google Images already has me covered. DALL-E performs somewhat better at discrete objects and close-up photographs than at larger scenes, but it can do photographs of city skylines, or National Geographic-style nature scenes, tolerably well (just don't look too closely at the textures or detailing). Some highlights:

- Clothing design: DALL-E has a reasonable if not perfect understanding of clothing styles, and especially for women's clothes and with the stylistic guidance of "displayed on a store mannequin" or "modeling photoshoot" etc, it can produce some gorgeous and creative outfits. It does especially plausible-looking wedding dresses – maybe because wedding dresses are especially consistent in aesthetic, and online photos of them are likely to be high quality?

- Close-ups of cute animals. DALL-E can pull off scenes with several elements, and often produce something that I would buy was a real photo if I scrolled past it on Tumblr.

- Close-ups of food. These can be a little more uncanny valley – and I don't know what's up with the apparent boiled eggs in there – but DALL-E absolutely has the plating style for high-end restaurants down.

- Jewelry. DALL-E doesn't always follow the instructions of the prompt exactly (it seems to be randomizing whether the big pendant is amber or amethyst) but the details are generally convincing and the results are almost always really pretty.

Pop culture and media

DALL-E "recognizes" a wide range of pop culture references, particularly for visual media (it's very solid on Disney princesses) or for literary works with film adaptations like Tolkien's LOTR. For almost all media that it recognizes at all, it can convert it into almost-arbitrary art styles.

[Tip: I find I get more reliably high-quality images from the prompt "X, screenshots from the Miyazaki anime movie" than just "in the style of anime", I suspect because Miyazaki has a consistent style, whereas anime more broadly is probably pulling in a lot of poorer-quality anime art.]

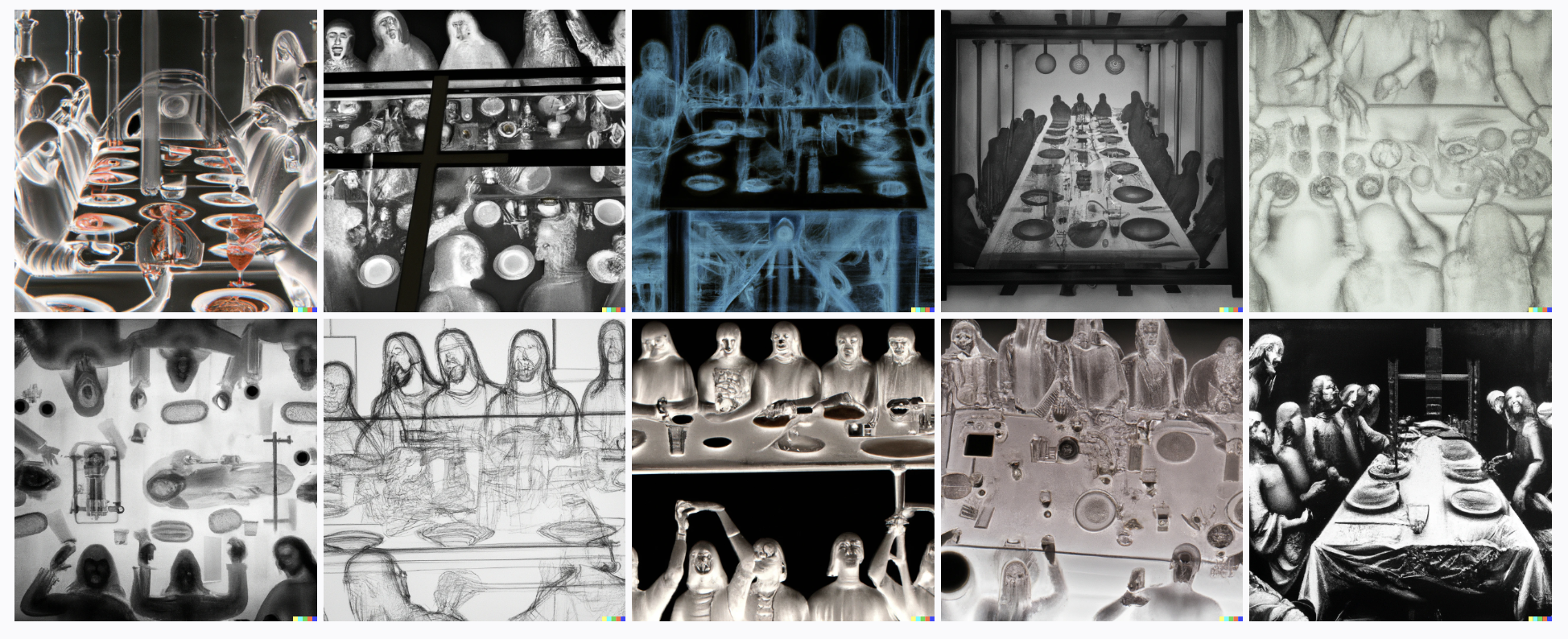

Art style transfer

Some of the most impressively high-quality output involves specific artistic styles. DALL-E can do charcoal or pencil sketches, paintings in the style of various famous artists, and some weirder stuff like "medieval illuminated manuscripts".

IMO it performs especially well with art styles like "impressionist watercolor painting" or "pencil sketch", that are a little more forgiving around imperfections in the details.

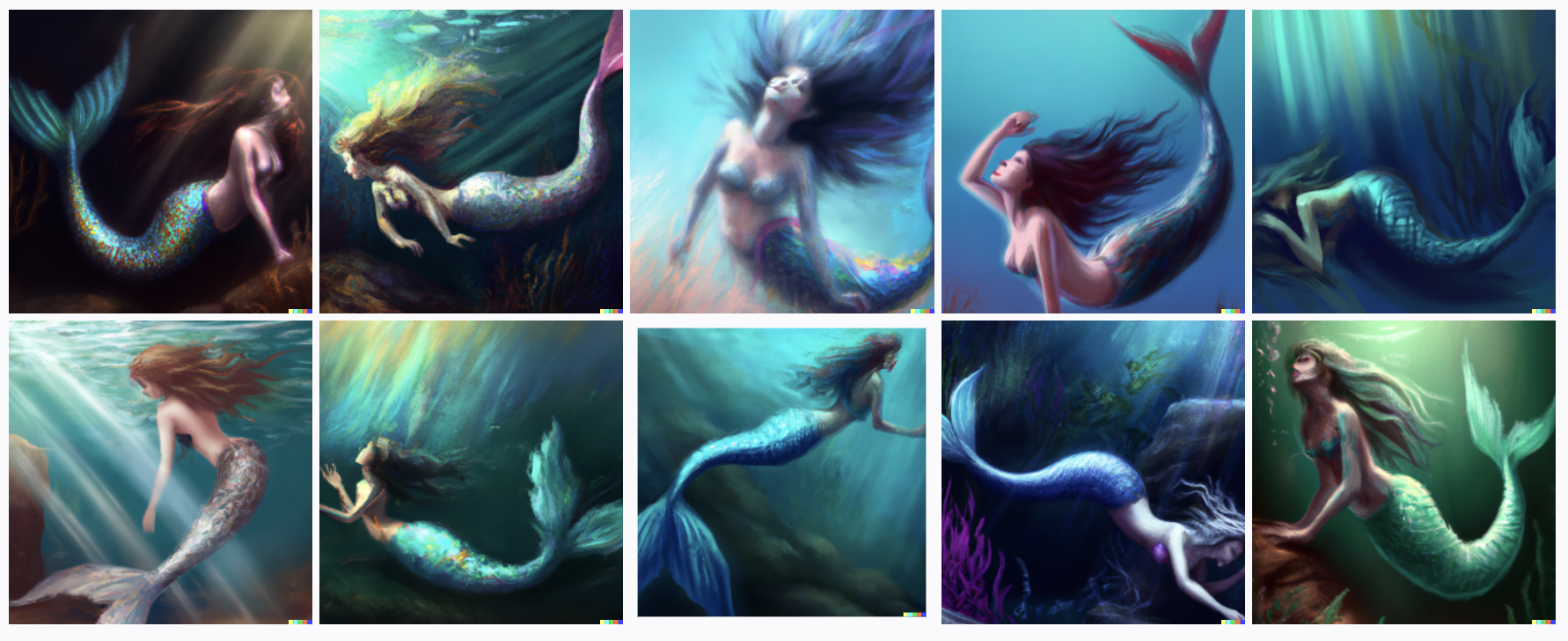

Creative digital art

DALL-E can (with the right prompts and some cherrypicking) pull off some absolutely gorgeous fantasy-esque art pieces. Some examples:

The output when putting in more abstract prompts (I've run a lot of "[song lyric or poetry line], digital art" requests) is hit-or-miss, but with patience and some trial and error, it can pull out some absolutely stunning – or deeply hilarious – artistic depictions of poetry or abstract concepts. I kind of like using it in this way because of the sheer variety; I never know where it's going to go with a prompt.

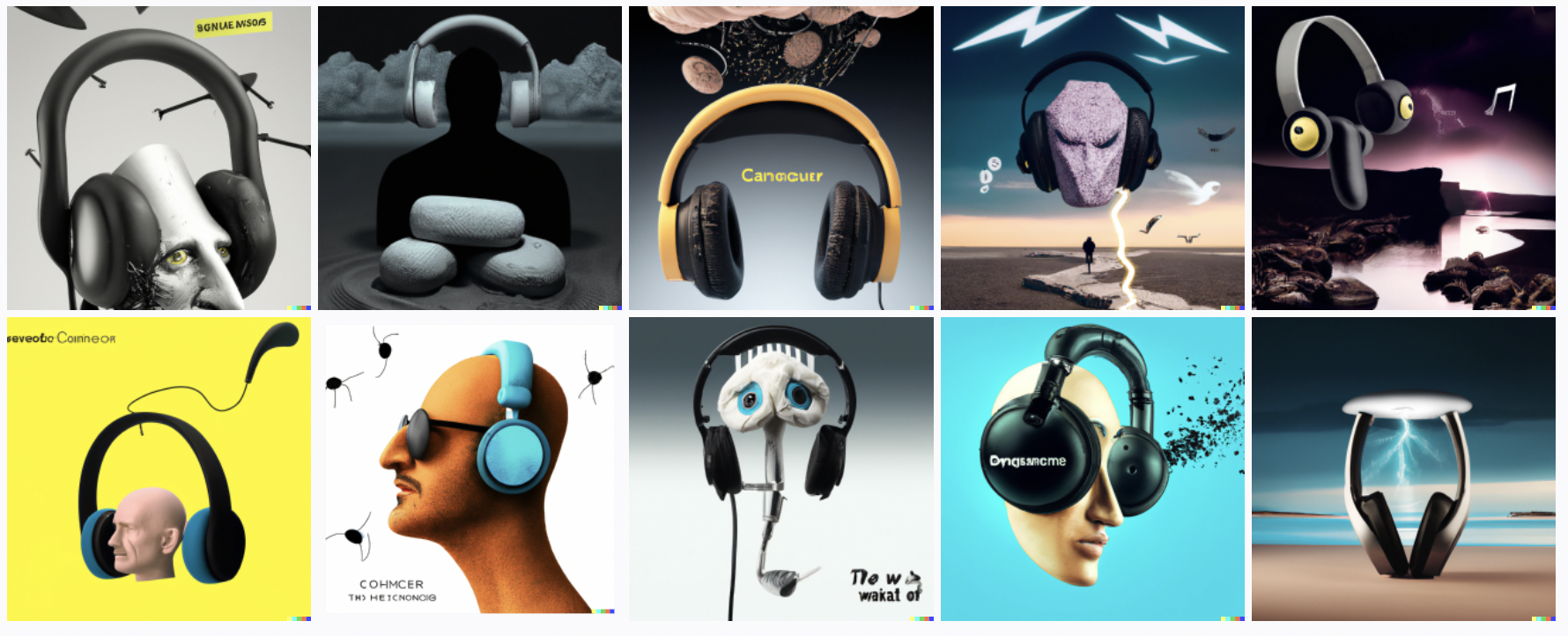

The future of commercials

This might be just a me thing, but I love almost everything DALL-E does with the prompt "in the style of surrealism" – in particular, its surreal attempt at commercials or advertisements. If my online ads were 100% replaced by DALL-E art, I would probably click on at least 50% more of them.

DALL-E's weaknesses

I had been really excited about using DALL-E to make fan art of fiction that I or other people have written, and so I was somewhat disappointed at how much it struggles to do complex scenes according to spec. In particular, it still has a long way to go with:

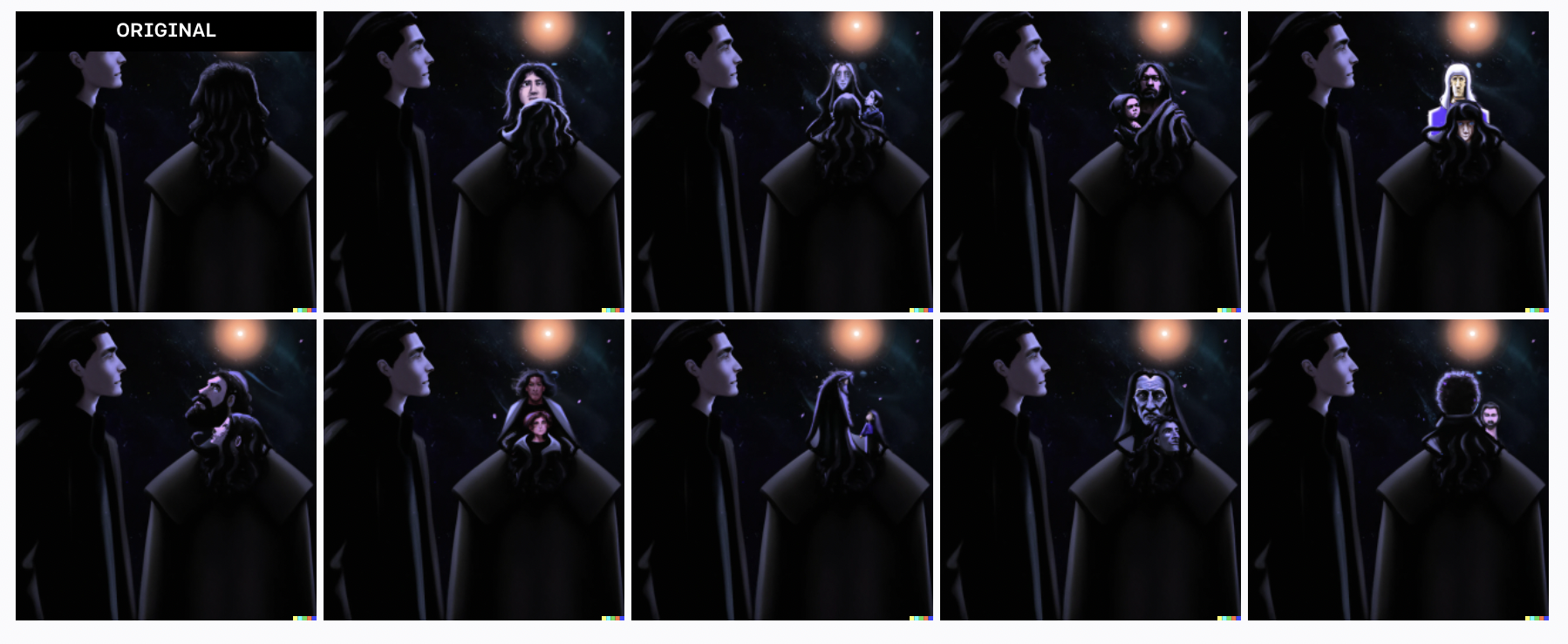

Scenes with two characters

I'm not kidding. DALL-E does fine at giving one character a list of specific traits (though if you want pink hair, watch out, DALL-E might start spamming the entire image with pink objects). It can sometimes handle multiple generic people in a crowd scene, though it quickly forgets how faces work. However, it finds it very challenging to keep track of which traits ought to belong to a specific Character A versus a different specific Character B, beyond a very basic minimum like "a man and a woman."

The above is one iteration of a scene I was very motivated to figure out how to depict, as a fan art of my Valdemar rationalfic. DALL-E can handle two people, check, and a room with a window and at least one of a bed or chair, but it's lost when it comes to remembering which combination of age/gender/hair color is in what location.

Even in cases where the two characters are pop culture references that I've already been able to confirm the model "knows" separately – for example, Captain America and Iron Man – it can't seem to help blending them together. It's as though the model has "two characters" and then separately "a list of traits" (user-specified or just implicit in the training data), and reassigns the traits mostly at random.

Foreground and background

A good example of this: someone on Twitter had commented that they couldn't get DALL-E to provide them with "Two dogs dressed like roman soldiers on a pirate ship looking at New York City through a spyglass". I took this as a CHALLENGE and spent half an hour trying; I, too, could not get DALL-E to output this, and ended up needing to choose between "NYC and a pirate ship" or "dogs in Roman soldier uniforms with spyglasses".

DALL-E can do scenes with generic backgrounds (a city, bookshelves in a library, a landscape) but even then, if that's not the main focus of the image then the fine details tend to get pretty scrambled.

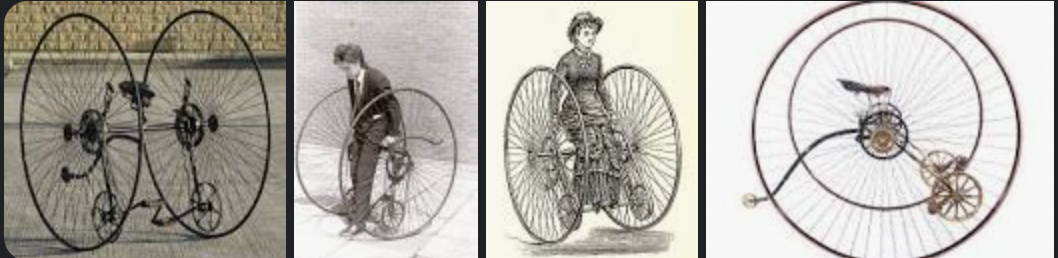

Novel objects, or nonstandard usages

Objects that are not something it already "recognizes." DALL-E knows what a chair is. It can give you something that is recognizably a chair in several dozen different art mediums. It could not with any amount of coaxing produce an "Otto bicycle", which my friend specifically wanted for her book cover. Its failed attempts were both hilarious and concerning.

Objects used in nonstandard ways. It seems to slide back toward some kind of ~prior; when I asked it for a dress made of Kermit plushies displayed on a store mannequin, it repeatedly gave me a Kermit plushie wearing a dress.

DALL-E generally seems to have extremely strong priors in a few areas, which end up being almost impossible to shift. I spent at least half an hour trying to convince it to give me digital art of a woman whose eyes were full of stars (no, not the rest of her, not the background scenery either, just her eyes...) and the closest DALL-E ever got was this.

I got: the goddess-eyed goddess of recursion

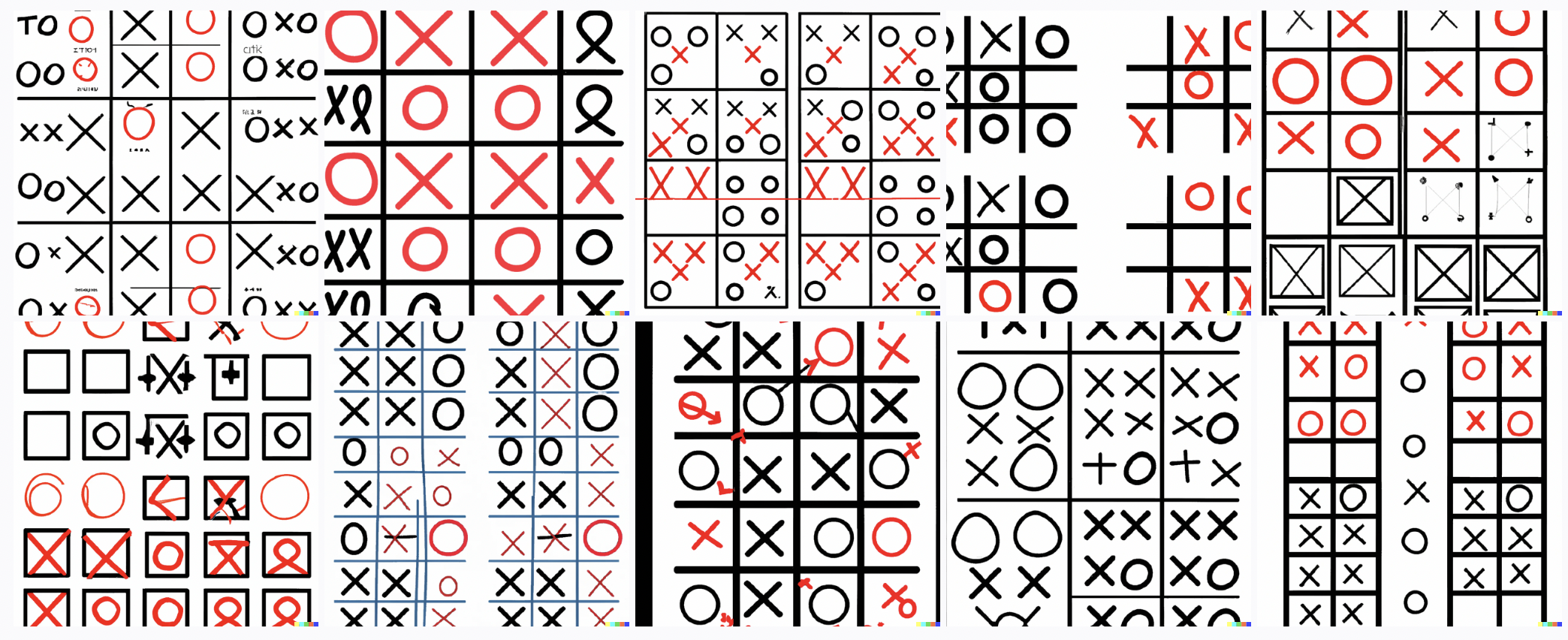

Spelling

DALL-E can't spell. It really really cannot spell. It will occasionally spell a word correctly by utter coincidence. (Okay, fine, it can consistently spell "STOP" as long as it's written on a stop sign.)

It does mostly produce recognizable English letters (and recognizable attempts at Chinese calligraphy in other instances), and letter order that is closer to English spelling than to a random draw from a bag of Scrabble letters, so I would guess that even given the new model structure that makes DALL-E 2 worse at spelling than the first DALL-E, just scaling it up some would eventually let it crack spelling.

At least sometimes its inability to spell results in unintentionally hilarious memes?

Realistic human faces

My understanding is that the face model limitation may have been deliberate to avoid deepfakes of celebrities, etc. Interestingly, DALL-E can nonetheless at least sometimes do perfectly reasonable faces, either as photographs or in various art styles, if they're the central element of a scene. (And it keeps giving me photorealistic faces as a component of images where I wasn't even asking for that, meaning that per the terms and conditions I can't share those images publicly.)

Even more interestingly, it seems to specifically alter the appearance of actors even when it clearly "knows" a particular movie or TV show. I asked it for "screenshots from the second season of Firefly", and they were very recognizably screenshots from Firefly in terms of lighting, ambiance, scenery etc, with an actor who looked almost like Nathan Fillion – as though cast in a remake that was trying to get it fairly similar – and who looked consistently the same across all 10 images, but was definitely a different person.

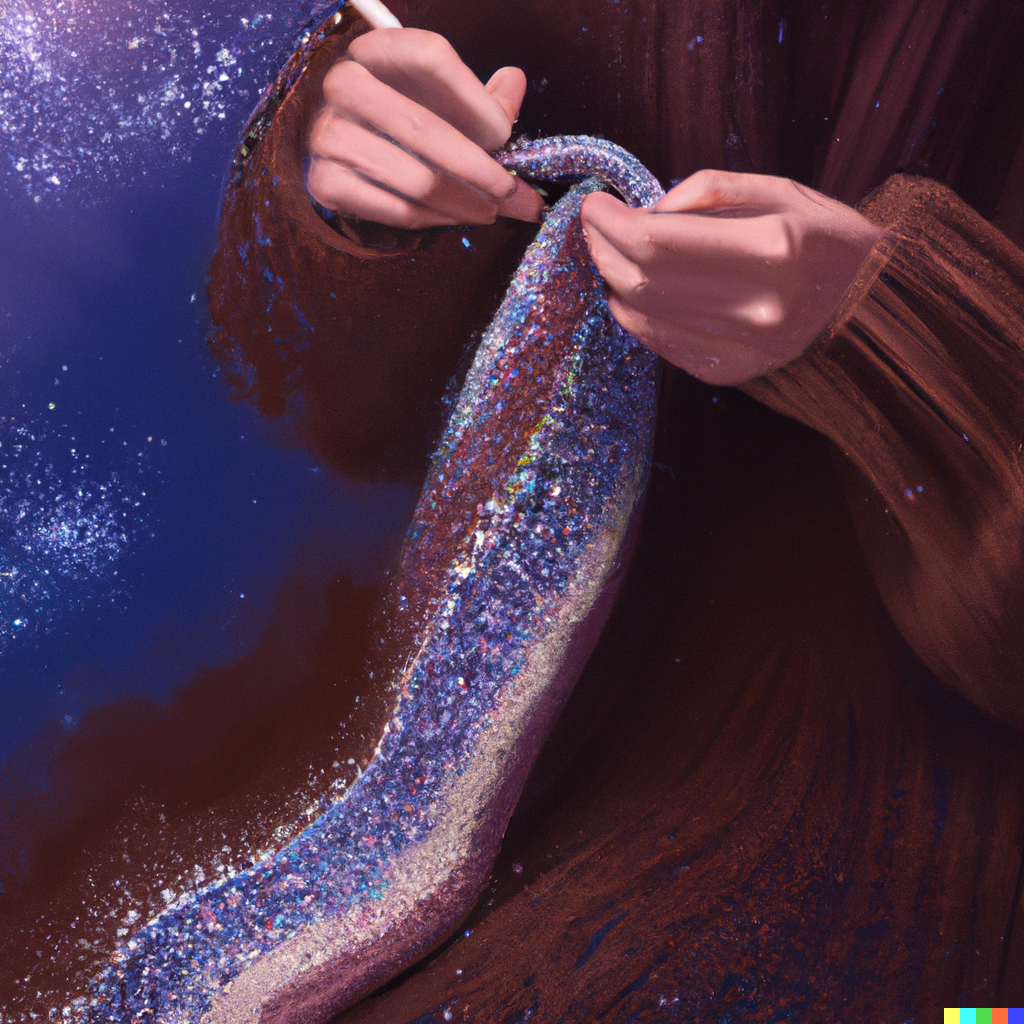

There are a couple of specific cases where DALL-E seems to "remember" how human hands work. The ones I've found so far mostly involve a character doing some standard activity using their hands, like "playing a musical instrument." Below, I was trying to depict a character from A Song For Two Voices who's a Bard; this round came out shockingly good in a number of ways, but the hands particularly surprised me.

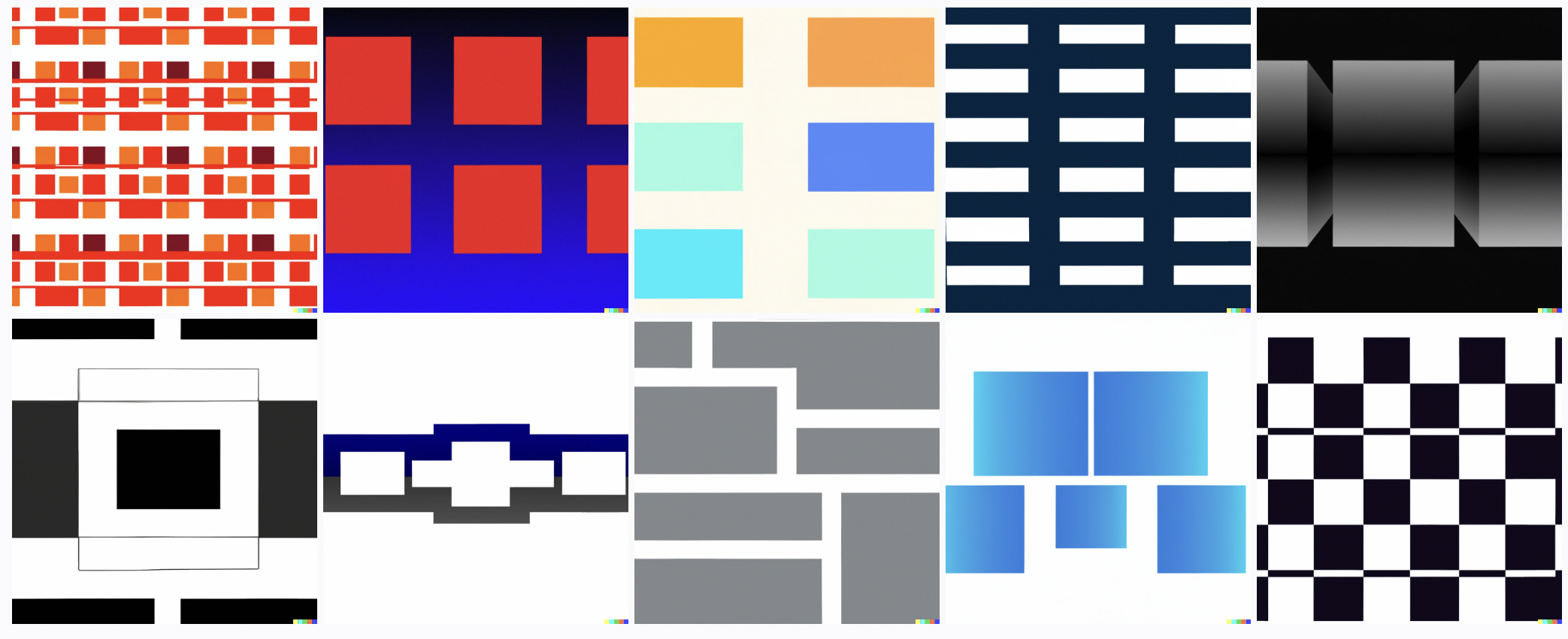

Limitations of the "edit" functionality

DALL-E 2 offers an edit functionality – if you mostly like an image except for one detail, you can highlight an area of it with a cursor, and change the full description as applicable in order to tell it how to modify the selected region.

It sometimes works - this gorgeous dress (didn't save the prompt, sorry) originally had no top, and the edit function successfully added one without changing the rest too much.

It often appears to do nothing. It occasionally full-on panics and does... whatever this is.

There's also a "variations" functionality that lets you select the best image given by a prompt and generate near neighbors of it, but my experience so far is that the variations are almost invariably less of a good fit for the original prompt, and very rarely better on specific details (like faces) that I might want to fix.

Some art style observations

DALL-E doesn't seem to hold a sharp delineation between style and content; in other words, adding stylistic prompts actively changes some of what I would consider to be content.

For example, asking for a coffeeshop scene as painted by Alphonse Mucha puts the woman in a long flowing period-style dress, like in this reference painting, and gives us a "coffeeshop" that looks a lot to me like a lady's parlor; in comparison, the Miyazaki anime version mostly has the character in a casual sweatshirt. This makes sense given the way the model was trained; background details are going to be systematically different between Art Nouveau paintings and anime movies.

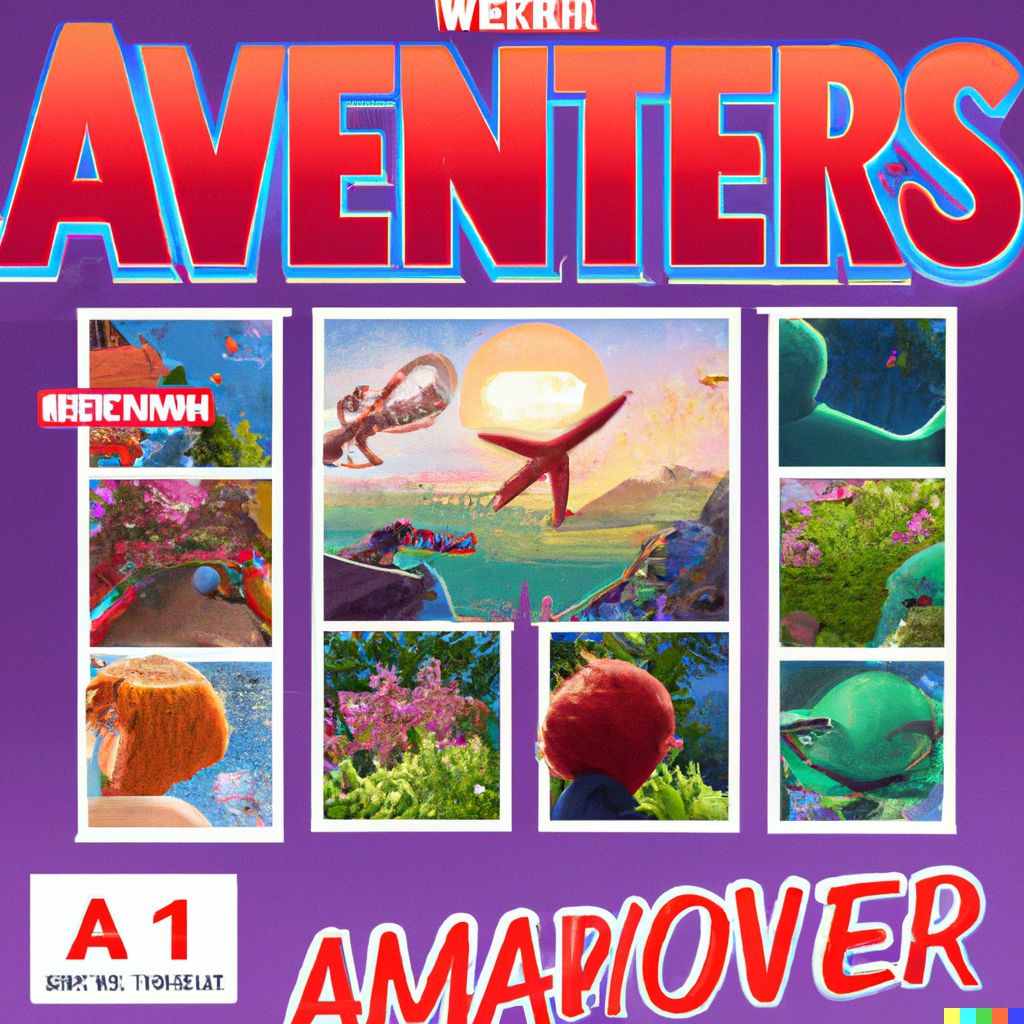

DALL-E is often sensitive to exact wording, and in particular it's fascinating how "in the style of x" often gets very different results from "screenshot from an x movie". I'm guessing that in the Pixar case, generic "Pixar style" might capture training data from Pixar shorts or illustrations that aren't in their standard recognizable movie style. (Also, sometimes if asked for "anime" it gives me content that either looks like 3D rendered video game cutscenes, or occasionally what I assume is meant to be people at an anime con in cosplay.)

Conclusions

How smart is DALL-E?

I would give it an excellent grade in recognizing objects, and most of the time it has a pretty good sense of their purpose and expected context. If I give it just the prompt "a box, a chair, a computer, a ceiling fan, a lamp, a rug, a window, a desk" with no other specification, it consistently includes at least 7 of the 8 requested objects, and places them in reasonable relation to each other – and in a room with walls and a floor, which I did not explicitly ask for. This "understanding" of objects is a lot of what makes DALL-E so easy to work with, and in some sense seems more impressive than a perfect art style.

The biggest thing I've noticed that looks like a ~conceptual limitation in the model is its inability to consistently track two different characters, unless they differ on exactly one trait (male and female, adult and child, red hair and blue hair, etc) – in which case the model could be getting this right if all it's doing is randomizing the traits in its bucket between the characters. It seems to have a similar issue with two non-person objects of the same type, like chairs, though I've explored this less.
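To make the "randomizing the traits in its bucket" guess concrete, here is a minimal Python sketch of that toy model. It is purely an illustration of my hypothesis, not a description of how DALL-E actually works: the function name `match_probability` and the trait dictionaries are made up for the example, and the assumption is that each trait dimension is independently either kept or swapped between the two characters.

```python
from itertools import product


def match_probability(char_a, char_b):
    """Chance that a random re-deal of traits reproduces the requested pair.

    Toy assumption: for every trait dimension (gender, hair, ...), the two
    requested values are handed to the two characters in a random order,
    independently of every other dimension. Which character is "A" and
    which is "B" does not matter for the final image.
    """
    dims = sorted(char_a)
    target = {frozenset(char_a.items()), frozenset(char_b.items())}
    hits = 0
    total = 0
    # Enumerate every keep/swap choice across the trait dimensions.
    for swaps in product([False, True], repeat=len(dims)):
        a, b = {}, {}
        for dim, swap in zip(dims, swaps):
            if swap:
                a[dim], b[dim] = char_b[dim], char_a[dim]
            else:
                a[dim], b[dim] = char_a[dim], char_b[dim]
        total += 1
        if {frozenset(a.items()), frozenset(b.items())} == target:
            hits += 1
    return hits / total


# One differing trait: any deal still yields "a man and a woman".
print(match_probability({"gender": "man"}, {"gender": "woman"}))  # 1.0

# Two differing traits: only half of the deals pair them up correctly.
print(match_probability(
    {"gender": "man", "hair": "red"},
    {"gender": "woman", "hair": "blue"},
))  # 0.5
```

Under this toy model, a pair that differs on a single trait always comes out "right" no matter how the traits are dealt, and every additional differing trait halves the odds, which is at least consistent with what I saw.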

It often applies color and texture styling to parts of the image other than the ones specified in the prompt; if you ask for a girl with pink hair, it's likely to make the walls or her clothes pink, and it's given me several Rapunzels wearing a gown apparently made of hair. (Not to mention the time it was confused about whether, in "Goldilocks and the three bears", Goldilocks was also supposed to be a bear.)

The deficits with the "edit" mode and "variations" mode also seem to me like they reflect the model failing to neatly track a set of objects-with-assigned-traits. It reliably holds the non-highlighted areas of the image constant and only modifies the selected part, but the modifications often seem like they're pulling in context from the entire prompt – for example, when I took one of my room-with-objects images and tried to select the computer and change it to "a computer levitating in midair", DALL-E gave me a levitating fan and a levitating box instead.

Working with DALL-E definitely still feels like attempting to communicate with some kind of alien entity that doesn't quite reason in the same ontology as humans, even if it theoretically understands the English language. There are concepts it appears to "understand" in natural language without difficulty – including prompts like "advertising poster for the new Marvel's Avengers movie, as a Miyazaki anime, in the style of an Instagram inspirational moodboard", which would take so long to explain to aliens, or even just to a human from 1900. And yet, you try to explain what an Otto bicycle is – something which I'm pretty sure a human six-year-old could draw if given a verbal description – and the conceptual gulf is impossible to cross.

Wow, this is going to explode picture books and book covers.

Hiring an illustrator for a picture book costs a lot, as it should given it's bespoke art.

Now publishers will have an editor type in page descriptions, curate the best and off they go. I can easily imagine a model improvement to remember the boy drawn or steampunk bear etc.

Book cover designers are in trouble too. A wizard with lightning in his hands while a mountain explodes behind him - this can generate multiple options.

It's going to get really wild when A/B split testing is involved. As you mention regarding ads you'd give the system the power to make whatever images it wanted and then split test. Letting it write headlines would work too.

Perhaps a full animated movie down the line. There are already programs that fill in gaps for animation poses. Boy running across field chased by robot penguins - animated, eight seconds. And so on. At that point it's like Pixar in a box. We'll see an explosion of directors who work alone, typing descriptions, testing camera angles, altering scenes on the fly. Do that again but more violent. Do that again but with more blood splatter.

Animation in the style of Family Guy seems a natural first step there. Solid colours, less variation, not messing with light rippling etc.

There's a service authors use of illustrated chapter breaks, a black and white dragon snoozing, roses around knives, that sort of thing. No need to hire an illustrator now.

Conversion of all fiction novels to graphic novel format. At first it'll be laborious, typing in scene descriptions but graphic novel art is really expensive now. I can see a publisher hiring a freelancer to produce fifty graphic novels from existing titles.

With a bit of memory, so that each character stays the same once I choose the image I want, this is an amazing game changer for publishing.

Storyboarding requires no drawing skill now. Couple sprinting down dark alley chased by robots.

Game companies can use it to rapidly prototype looks and styles. They can do all that background art by typing descriptions and saving the best.

We're going to end up with people who are famous illustrators who can't draw but have created amazing styles using this and then made books.

Thanks so much for this post. This is wild astonishing stuff. As an author who is about to throw large sums of money at cover design, it's incredible to think a commercial version of this could do it for a fraction of the price.

edit: just going to add some more

App design that requires art. For example many multiple choice story apps that are costly to make due to art cost.

Split-tested cover designs for pretty much anything - books, music, albums, posters. Generate, ad campaign, test clicks. An ad business will be able to throw up 1,000 completely different variations in a day.

All catalogs/brochures that currently use stock art. While choosing stock art to make things works, it also sucks and is annoying with the limited range. I'm imagining a stock art company could radically expand their selection to keep people buying from them. All those searches that people have typed in are now prompts.

Illustrating Wikipedia. Many articles need images to demonstrate a point and rely on contributors making them. This could open up improvements in the volume of images and quality.

Graphic novels/comic books - writers who don't need artists essentially. To start it will be describing single panels and manually adding speech text but that's still faster and cheaper than hiring an artist. For publishers - why pick and choose what becomes a graphic novel when you can just make every title into a graphic novel.

YouTube/video interstitial art. No more stock photos.

Licensed characters (think Paw Patrol, Disney, Dreamworks) - creation of endless poses, scenes. No more waiting for Dreamworks to produce 64 pieces of black and white line art when it may be able to take the movie frames and create images from that.

Adaptations - the 24-page storybook of Finding Nemo. The 24-page storybook of Pinocchio. The picture book of Fast and The Furious.

Looking further ahead we might even see a drop-down option of existing comics and graphic novels, but in a different art style. Reading the same Spider-Man story but illustrated by someone else.

Character design - for games, licensing, children's animation. This radically expands the volume of characters that can be designed, selected and then chosen for future scenes.

With some sort of "keep this style", "save that character" method, it really would be possible to generate a 24-page picture book in an incredibly short amount of time.

Quite frankly, knowing how it works, I'd write a picture book of a kid going through different art styles in their adventure. Chasing their puppy through the art museum and the dog runs into a painting. First Van Gogh, then Da Vinci and so on. The kid changes appearance due to the model but that works for the story.

As a commercial product, this system would be incredible. I expect we'll see an explosion in the number of picture books, graphic novels, posters, art designs, Etsy prints, downloadable files and so on. Publishers with huge backlists would be a prime customer.

An interesting example of what might be a 'name-less style' in a generative image model, Stable Diffusion in this case (DALL-E 2 doesn't give you the necessary access so users can't experiment with this sort of thing): what the discoverer calls the "Loab" (mirror) image (for lack of a better name - what text prompt, if any, this image corresponds to is unknown, as it's found by negation of a text prompt & search).

'Loab' is an image of a creepy old desaturated woman with ruddy cheeks in a wide face, which when hybridized with other images, reliably indu...