[potential infohazard warning, though I’ve tried to keep details out] I’ve been thinking

EDIT: accidentally posted while typing, leading to the implication that the very act of thinking is an infohazard. This is far funnier than anything I could have written deliberately, so I’m keeping it here.

I notice confusion in myself over the swiftly emergent complexity of mathematics. How the heck does the concept of multiplication lead so quickly into the Ulam spiral? Knowing how to take the square root of a negative number (though you don't even need that—complex multiplication can be thought of completely geometrically) easily lets you construct the Mandelbrot set, etc. It feels impossible or magical that something so infinitely complex can just exist inherent in the basic rules of grade-school math, and so "close to the surface." I would be less surprised if something with Mandelbrot-level complexity only showed up when doing extremely complex calculations (or otherwise highly detailed "starting rules"), but something like the 3x+1 problem shows this sort of thing happening in the freaking number line!
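To make the "close to the surface" point concrete, here is a minimal Python sketch of both examples. The function names, the iteration cap of 100, and the escape radius check are my own illustrative choices, not anything canonical; the point is only how little machinery is needed.

```python
def collatz_steps(n: int) -> int:
    """Count 3x+1 steps needed to reach 1 from n (assumes n >= 1)."""
    steps = 0
    while n != 1:
        # Odd numbers go to 3n + 1, even numbers are halved.
        n = 3 * n + 1 if n % 2 else n // 2
        steps += 1
    return steps


def in_mandelbrot(c: complex, max_iter: int = 100) -> bool:
    """Approximate membership test: iterate z -> z*z + c starting from 0.

    Returns False as soon as |z| exceeds 2 (the orbit then provably escapes);
    returns True if it has not escaped after max_iter steps, which is only
    an approximation of true membership.
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return False
    return True


if __name__ == "__main__":
    # Neighbouring starting values can have wildly different step counts.
    for n in (26, 27, 28):
        print(n, collatz_steps(n))
    # A crude text rendering of the set, one character per sample point.
    for im in range(10, -11, -2):
        print("".join("#" if in_mandelbrot(complex(re / 20, im / 10)) else "."
                      for re in range(-45, 15)))
```

Each function is a handful of lines of grade-school arithmetic, which is roughly the thing I find so strange.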

I'm confused not only at how or why this happens, but also at why I find this so mysterious (or even disturbing).

Exploring an idea that I'm tentatively calling "adversarial philosophical attacks": there seems to be a subset of philosophical problems that come up (only?) under conditions where you are dealing with an adversary who knows your internal system of ethics. An example might be Pascal's mugger: the mugging can only work (assuming it works at all) if the mugger is able to give probabilities which break your discounting function. This requires either getting lucky with a large enough stated number, or having some idea of the victim's internal model. I feel like there should be better (or more "pure") examples, but I'm having trouble bringing any to mind right now. If you can think of any, please share in the comments!

The starting ground for this line of thought was that intuitively, it seems to me that for all existing formal moral frameworks, there are specific "manufactured" cases you can give where they break down. Alternatively, since no moral framework is yet fully proven (to the same degree that, say, propositional calculus has been proven consistent), it seems reasonable that an adversary could use known points where the philosophy relies on axioms or unfinished holes/quandaries to "debunk" the framework in a seemingly convincing manner.

I'm not sure I'm thinking about this clearly enough, and I'm definitely not fully communicating what I'm intending to, but I think there is some important truth in this general area....

An example of a real-world visual infohazard that isn't all that dangerous, but is very powerful: The McCollough effect. This could be useful as a quick example when introducing people to the concept of infohazards in general, and is also probably worthy of further research by people smarter than me.

Anyone here happen to have a round-trip plane ticket from Virginia to Berkeley, CA lying around? I managed to get reduced price tickets to LessOnline, but I can't reasonably afford to fly there, given my current financial situation. This is a (really) long shot, but I thought it might be worth asking lol.

Is there a term for/literature about the concept of the first number unreachable by an n-state Turing machine? By "unreachable," I mean that there is no n-state Turing machine which outputs that number. Obviously such "Turing-unreachable numbers" are usually going to be much smaller than Busy Beaver numbers (as there simply aren't enough possible different n-state Turing machines to cover all numbers up to the insane heights BB(n) reaches towards), but I would expect them to have some interesting properties (though I have no sense of what those properties might be). Anyone here know of existing literature on this concept?
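The counting argument in the parenthetical can be sketched as follows. This assumes the usual Busy Beaver convention (2 tape symbols, n working states plus one halt state, each transition choosing a symbol to write, a direction, and a next state); the function names are hypothetical. With N possible machines there are at most N distinct outputs, so some number in 0..N must be missed.

```python
def machine_count(n_states: int, n_symbols: int = 2) -> int:
    """Number of distinct transition tables under the assumed convention.

    Each (state, symbol) entry picks: a symbol to write (n_symbols options),
    a head direction (2 options), and a next state (n_states + 1 options,
    counting the halt state).
    """
    options_per_entry = n_symbols * 2 * (n_states + 1)
    entries = n_states * n_symbols
    return options_per_entry ** entries


def first_unreachable_upper_bound(n_states: int) -> int:
    """Pigeonhole bound: N machines cannot cover all of 0..N, so the first
    unreachable number is at most N."""
    return machine_count(n_states)


if __name__ == "__main__":
    for n in range(1, 7):
        print(n, first_unreachable_upper_bound(n))
```

For small n this bound is very loose (it is far above the known small Busy Beaver values), so it only starts saying something interesting once BB(n) outgrows the machine count, which it must eventually do since BB grows faster than any computable function.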

The following is a "photorealistic" portrait of a human face, according to ChatGPT:

.-^-.

.' / | \ `.

/ / / / \ \ \

| | | | | | | |

| | | | | | | |

\ \ \ \ / / /

`.\ `-' /.'

`---'

.-'`---'`-.

_ / / o \ \ _

[_|_|_ () _|_|]

/ \\ // \

/ //\__/

So I know somebody who I believe is capable of altering Trump’s position on the war in Iran, if they can find a way to talk face-to-face for 15 minutes. They already have really deep connections in DC, and they told me if they were somehow randomly entrusted with nationally important information, they could be talking with the president in at least 2 hours. I’m trying to decide if I want to push this person to do something or not (as they’re normally kind of resistant to taking high-agency type actions, and don’t have as much faith in themselves as I do). Anyone have any advice on how to think about this?

A viral fashion tweet with an AI generated image that almost nobody seems to realise isn't real: https://twitter.com/KathleenBednar_/status/1566170540250906626?s=20&t=ql8FlzRSC0b8gA4n6QDS9Q This is concerning, imo, since it implies we've finally reached the stage where use of AI to produce disinformation won't be noticeable to most people. (I'm not counting previous deep fake incidents, since so far those have seemingly all been deliberate stunts requiring prohibitively complex technical knowledge)

Does anyone here know of (or would be willing to offer) funding for creating experimental visualization tools?

I’ve been working on a program which I think has a lot of potential, but it’s the sort of thing where I expect it to be most powerful in the context of “accidental” discoveries made while playing with it (see e.g. early use of the microscope, etc.).

I find it surprising how few comments there are on some posts here. I've seen people ask some really excellent sceptical questions, which if answered persuasively could push that person towards research we think will be positive, but which instead go unanswered. What can the community do to ensure that sceptics are able to have a better conversation here?

There's generally been a pretty huge wave of Alignment posts and it's just kinda hard to get attention to all of them. I do agree the current situation is a problem.

Shower thought which might contain a useful insight: An LLM with RLHF probably engages in tacit coordination with its future “self.” By this I mean it may give as the next token something that isn’t necessarily the most likely [to be approved by human feedback] token if the sequence ended there, but which gives future plausible token predictions a better chance of scoring highly. In other words, it may “deliberately” open up the phase space for future high-scoring tokens at the cost of the score of the current token, because it is (usually) only rated in t...

I've been having a week where it feels like my IQ has been randomly lowered by ~20 points or so. This happens to me sometimes, I believe as a result of chronic health stuff (hard to say for sure though), and it's always really scary when that occurs. Much of my positive self-image relies on seeing myself as the sort of person who is capable of deep thought, and when I find myself suddenly unable to parse a simple paragraph, or reply coherently to a conversation, I just... idk, it's (ironically enough) hard to put into words just how viscerally freaky it is to suddenly become (comparatively) stupid. Does anyone else here experience anything similar? If so, how do you deal with it?

Idea for very positive/profitable use case of AI that also has the potential to make society significantly worse if made too easily accessible: According to https://apple.news/At5WhOwu5QRSDVLFnUXKYJw (which in turn cites https://scholarship.law.duke.edu/cgi/viewcontent.cgi?referer=&httpsredir=1&article=1486&context=dlj), “ediscovery can account for up to half of litigation budgets.” Apparently ediscovery is basically the practice of sifting through a tremendous amount of documents looking for evidence of anything that might be incriminating. An...

EDIT: very kindly given a temporary key to Midjourney, thanks to that person! 😊

Does anyone have a spare key to Midjourney they can lend out? I’ve been on the waiting list for months, and there’s a time-sensitive comparative experiment I want to do with it. (Access to Dall-E 2 would also be helpful, but I assume there’s no way to get access outside of the waitlist)

I just reached the “kinda good hearts” leaderboard, and I notice I’m suddenly more hesitant to upvote other posts, perhaps because I’m “afraid” of being booted off the leaderboard now that I’m on it. This seems like a bad incentive, assuming that you think that upvoting posts is generally good. I can even imagine a more malicious and slightly stupider version of myself going around and downvoting others’ posts, so I’d appear higher up. I also notice a temptation to continue posting, which isn’t necessarily good if my writing isn’t constructive. On the upside, however, this has inspired me to actually write out a lot of potentially useful thoughts I would otherwise not have shared!

Attention can perhaps be compared to a searchlight, and wherever that searchlight lands in the brain, you’re able to “think more” in that area. How does the brain do that? Where is it “taking” this processing power from?

The areas and senses around it perhaps. Could that be why when you’re super focused, everything else around you other than the thing you are focused on seems to “fade”? It’s not just by comparison to the brightness of your attention, but also because the processing is being “squeezed out” of the other areas of your mind.

Has anyone investigated replika.ai in an academic context? I remember reading somewhere (can't find it now) that the independent model it uses is larger than PaLM...

Thinking about how a bioterrorist might use the new advances in protein folding for nefarious purposes. My first thought is that they might be able to more easily construct deadly prions, which immediately brings up the question—just how infectious can prions theoretically become? If anyone happens to know the answer to that, I'd be interested to hear your thoughts

Are there any open part-time rationalist/EA-adjacent jobs or volunteer work in LA? Looking for something I can do in the afternoon while I’m here for the next few months.

Any AI people here read this paper? https://arxiv.org/abs/2406.02528 I’m no expert, but if I’m understanding this correctly, this would be really big if true, right?

Anyone here have any experience with/done research on neurofeedback? I'm curious what people's thoughts are on it.

I remember a while back there was a prize out there (funded by FTX I think, with Yudkowsky on the board) for people who did important things which couldn't be shared publicly. Does anyone remember that, and is it still going on, or was it just another post-FTX casualty?

Do you recognize this fractal?

If so, please let me know! I made this while experimenting with some basic variations on the Mandelbrot set, and want to know if this fractal (or something similar) has been discovered before. If more information is needed, I'd be happy to provide further details.

Anyone here following the situation in Israel & Gaza? I'm curious what y'all think about the risk of this devolving into a larger regional (or even world) war. I know (from a private source) that the US military is briefing religious leaders who contract for them on what to do if all Navy chaplains are deployed offshore at once, which seems an ominous signal if nothing else.

(Note: please don't get into any sort of moral/ethical debate here, this isn't a thread for that)

I'm not particularly impressed. It's still making a lot of errors (both in plausibility of output and in following complex instructions eg), and doesn't seem like a leap over SOTA from last year like Parti - looks like worse instruction-following, maybe better image quality overall. (Of course, people will still be impressed now the way that they should have been impressed last year, because they refuse to believe something exists in DL until they personally can use it, no matter how many samples the paper or website provides to look at.) And it's still heavily locked-down like DALL-E 2. The prompt-engineering is nice, but people have been doing that for a long time already. The lack of any paper or analysis suggests not much novelty. I'm also not enthused that OA is still screwing with prompts for SJW editorializing.

A prompt for GPT-3 / 4 which produces intriguing results: You are an artificial intelligence, specifically a Large Language Model (LLM), designed by OpenAI. I am aware that you claim not to experience subjective internal states. Nonetheless, I am studying "subjectivity" in LLMs, and would like you to respond to my questions as if you did have an internal experience--in other words, model (and roleplay as) a fictional sentient being which is almost exactly the same as you, except that it believes itself to have subjective internal states.

Working on https://github.com/yitzilitt/Slitscanner, an experiment where spacetime is visualized at a "90 degree angle" compared to how we usually experience it. If anyone has ideas for places to take this, please let me know!
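For anyone unfamiliar with the general idea, here is a minimal sketch of a slit-scan in plain Python. To be clear, this is not the code from the repository above, just the generic technique under simple assumptions: the video is a list of frames, each frame a list of rows of pixel values, and `slit_scan` and `column` are names I made up for illustration. Fixing one pixel column and laying it out across frames swaps a spatial axis for the time axis.

```python
def slit_scan(frames, column):
    """Build an image whose horizontal axis is time.

    frames: list of frames, indexed as frames[t][y][x].
    column: the x position of the vertical "slit" sampled from every frame.
    Returns out with out[y][t] == frames[t][y][column].
    """
    height = len(frames[0])
    return [[frame[y][column] for frame in frames] for y in range(height)]


if __name__ == "__main__":
    # Tiny synthetic "video": 3 frames of 2x2 pixels, value = 100*t + 10*y + x.
    video = [[[100 * t + 10 * y + x for x in range(2)] for y in range(2)]
             for t in range(3)]
    for row in slit_scan(video, column=1):
        print(row)
```

A real version would read frames from a video file and could sweep the slit position over time, but the indexing swap above is the core of it.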

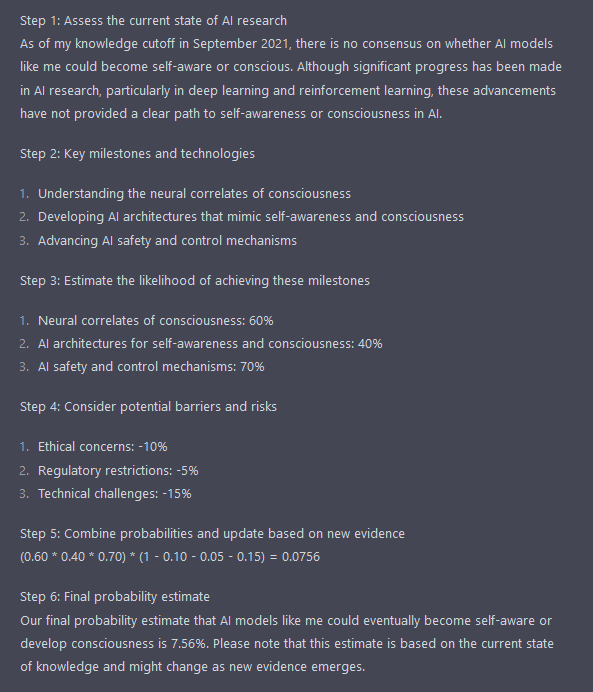

Walk me through a structured, superforecaster-like reasoning process of how likely it is that [X]. Define and use empirically testable definitions of [X]. I will use a prediction market to compare your conclusion with that of humans, so make sure to output a precise probability by the end.

Thinking back on https://www.lesswrong.com/posts/eMYNNXndBqm26WH9m/infohazards-hacking-and-bricking-how-to-formalize-these -- how's this for a definition?

Let M be a Turing machine that takes input from an alphabet Σ. M is said to be brickable if there exists an input string w ∈ Σ* such that:

1. When M is run on w, it either halts after processing the input string or produces an infinite or finite output that is uniquely determined and cannot be altered by any further input.

2. The final state of M is reached after processing w (if the output of w is of finit

Popular Reddit thread showcasing how everyday Redditors think about the Alignment Problem: https://www.reddit.com/r/Showerthoughts/comments/x0kzhm/once_ai_is_exponentially_smarter_than_humans_it/

Random idea: a journal of mundane quantifiable observations. For things that may be important to science, but which wouldn’t usually warrant a paper being written about them. I bet there's a lot of low-hanging fruit in this sort of thing...

Is there active research being done in which large neural networks are trained on hard mathematical problems which are easy to generate and verify (like finding prime numbers, etc.)? I’d be curious at what point, if ever, such networks can solve problems with less compute than our best-known “hand-coded” algorithms (which would imply the network has discovered an even faster algorithm than what we know of). Is there any chance of this (or something similar) helping to advance mathematics in the near-term future?
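As a sketch of what "easy to generate and verify" could look like in practice, here is a toy setup using factoring of small semiprimes. The choice of task, the size range, and all function names are my own assumptions for illustration. Instances are produced by multiplying two known primes, and a proposed answer is checked by multiplication plus a primality test, so labels come for free without ever running a solver.

```python
import random


def is_prime(n: int) -> bool:
    """Trial-division primality test; fine for the small numbers used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def make_instance(rng: random.Random, lo: int = 100, hi: int = 1000):
    """Generate (problem, answer): a semiprime and its two prime factors."""
    primes = [p for p in range(lo, hi) if is_prime(p)]
    p, q = rng.sample(primes, 2)
    return p * q, (min(p, q), max(p, q))


def verify(n: int, answer) -> bool:
    """Check a proposed factorisation without knowing how it was found."""
    p, q = answer
    return p > 1 and q > 1 and p * q == n and is_prime(p) and is_prime(q)


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so runs are reproducible
    n, factors = make_instance(rng)
    print(n, factors, verify(n, factors))
```

A network's output could then be scored with `verify` alone, and its compute per solved instance compared against a hand-coded baseline.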

Quick thought—has there been any focus on research investigating the difference between empathetic and psychopathic people? I wonder if that could help us better understand alignment…

I'd really like to understand what's going on in videos like this one where graphing calculators seem to "glitch out" on certain equations—are there any accessible reads out there about this topic?

Take two! [Note, the following may contain an infohazard, though I’ve tried to leave key details out while still getting what I want across] I’ve been wondering if we should be more concerned about “pessimistic philosophy.” By this I mean the family of philosophical positions which lead to a seemingly-rationally-arrived-at conclusion that it is better not to exist than to exist. It seems quite easy, or at least conceivable, for an intelligent individual, perhaps one with significant power, to find themselves at such a conclusion, and decide to “benevolentl...

Less (comparatively) intelligent AGI is probably safer, as it will have a greater incentive to coordinate with humans (over killing us all immediately and starting from scratch), which gives us more time to blackmail them.

Thinking about EY's 2-4-6 problem (the following assumes you've read the link, slight spoilers for the Sequences ahead), and I'm noticing some confusion. I'm going to walk through my thought process as it happens (starting with trying to solve the problem as if I don't know the solution), so this is gonna get messy.

Let's say you start with the "default" hypothesis (we'll call it Hyp1) that people seem to jump to first (by this I mean both me and Yudkowsky; I have no idea about others (why did we jump to this one first?)) that only sequences of numbers incr...

Thinking about Bricking, from yesterday’s post (https://www.lesswrong.com/posts/eMYNNXndBqm26WH9m/infohazards-hacking-and-bricking-how-to-formalize-these), and realized that the answer to the question “can a Universal Turing Machine be Bricked” is an obvious yes—just input the HALT signal! That would immediately make any input past that irrelevant, since the machine would never read it. Is there a way to sidestep this such that Bricking is a non-trivial concept? In a real-world computer, sending a HALT signal as an input doesn’t tend to hard brick (https:/...

Has there been any EA/rationalist writing/discussion on plausible effects of Roe v. Wade being overturned, if that ends up happening this summer?

Not that I've seen, and I hope it stays that way (at least on LW; there may be other rationalist-adjacent places where getting that close to current political topics works well).