Financial status: This is independent research. I welcome financial support to make further posts like this possible.

Epistemic status: I have been thinking about these ideas for years but still have not clarified them to my satisfaction.

Outline

-

This post asks whether it is possible, in Conway’s Game of Life, to arrange for a certain game state to arise after a certain number of steps given control only of a small region of the initial game state.

-

This question is then connected to questions of agency and AI, since one way to answer this question in the positive is by constructing an AI within Conway’s Game of Life.

-

I argue that the permissibility or impermissibility of AI is a deep property of our physics.

-

I propose the AI hypothesis, which is that any pattern that solves the control question does so, essentially, by being an AI.

Introduction

In this post I am going to discuss a celular autonoma known as Conway’s Game of Life:

In Conway’s Game Life, which I will now refer to as just "Life", there is a two-dimensional grid of cells where each cell is either on or off. Over time, the cells switch between on and off according to a simple set of rules:

-

A cell that is "on" and has fewer than two neighbors that are "on" switches to "off" at the next time step

-

A cell that is "on" and has greater than three neighbors that are "on" switches to "off" at the next time step

-

An cell that is "off" and has exactly three neighbors that are "on" switches to "on" at the next time step

-

Otherwise, the cell doesn’t change

It turns out that these simple rules are rich enough to permit patterns that perform arbitrary computation. It is possible to build logic gates and combine them together into a computer that can simulate any Turing machine, all by setting up a particular elaborate pattern of "on" and "off" cells that evolve over time according to the simple rules above. Take a look at this awesome video of a Universal Turing Machine operating within Life.

The control question

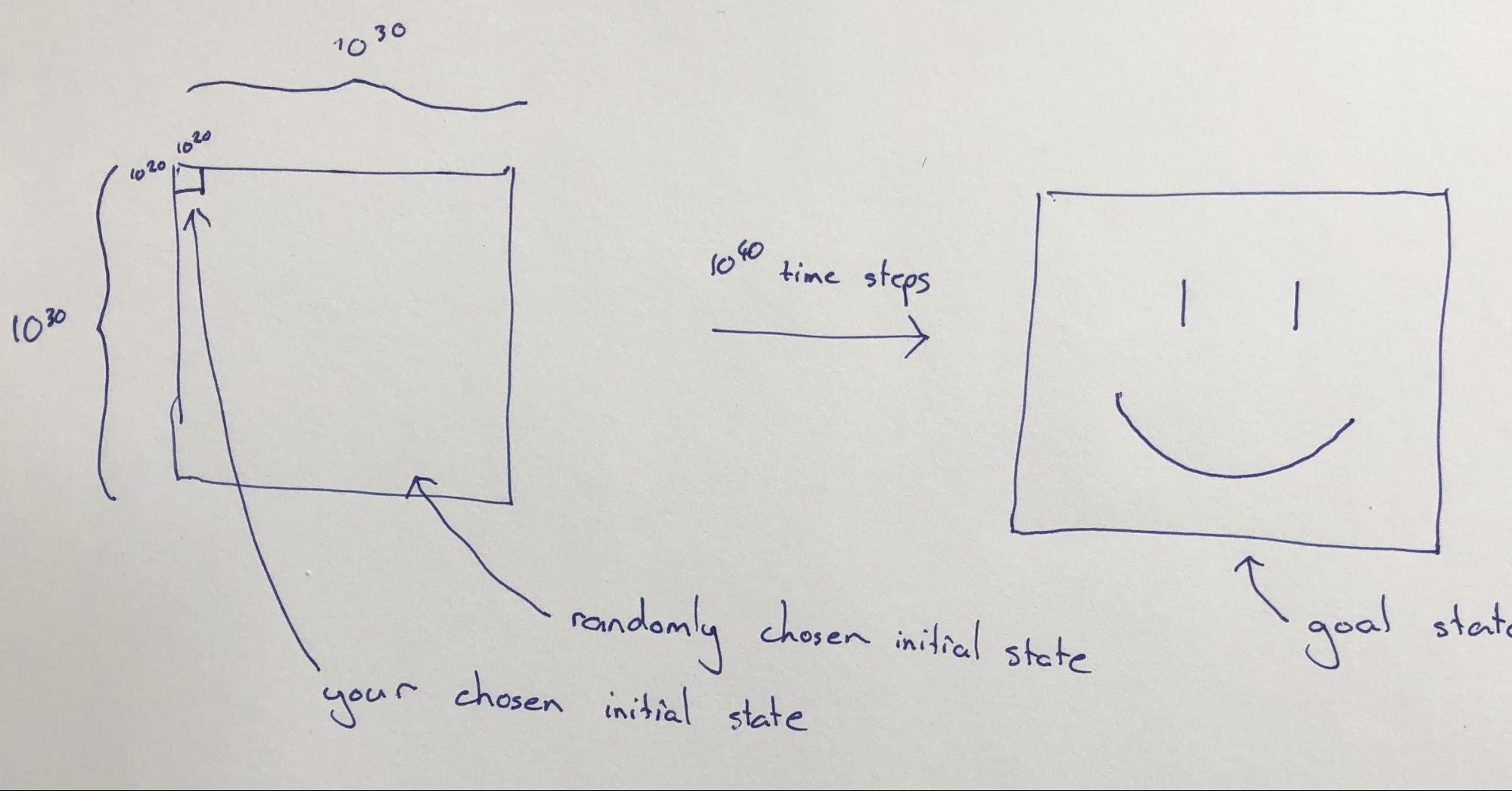

Suppose that we are working with an instance of Life with a very large grid, say rows by columns. Now suppose that I give you control of the initial on/off configuration of a region of size by in the top-left corner of this grid, and set you the goal of configuring things in that region so that after, say, time steps the state of the whole grid will resemble, as closely as possible, a giant smiley face.

The cells outside the top-left corner will be initialized at random, and you do not get to see what their initial configuration is when you decide on the initial configuration for the top-left corner.

The control question is: Can this goal be accomplished?

To repeat that: we have a large grid of cells that will evolve over time according to the laws of Life. We are given power to control the initial on/off configuration of the cells in a square region that is a tiny fraction of the whole grid. The initial on/off configuration of the remaining cells will be chosen randomly. Our goal is to pick an initial configuration for the controllable region in such a way that, after a large number of steps, the on/off configuration of the whole grid resembles a smiley face.

The control question is: Can we use this small initial region to set up a pattern that will eventually determine the configuration of the whole system, to any reasonable degree of accuracy?

[Updated 5/13 following feedback in the comments] Now there are actually some ways that we could get trivial negative answers to this question, so we need to refine things a bit to make sure that our phrasing points squarely at the spirit of the control question. Richard Kennaway points out that for any pattern that attempts to solve the control question, we could consider the possibility that the randomly initialized region contains the same pattern rotated 180 degrees in the diagonally opposite corner, and is otherwise empty. Since the initial state is symmetric, all future states will be symmetric, which rules our creating a non-rotationally-symmetric smiley face. More generally, as Charlie Steiner points out, what happens if there are patterns in the randomly initialized region that are trying to control the eventual configuration of the whole universe just as we are? To deal with this, we might amend the control question to require a pattern that "works" for at least 99% of configurations of the randomly initialized area, since most configurations of that area will not be adversarial. See further discussion in the brief appendix below.

Connection to agency

On the surface of it, I think that constructing a pattern within Life that solves the control question looks very difficult. Try playing with a Life simulator set to max speed to get a feel for how remarkably intricate can be the evolution of even simple initial states. And when an evolving pattern comes into contact with even a small amount of random noise — say a single stray cell set to "on" — the evolution of the pattern changes shape quickly and dramatically. So designing a pattern that unfolds to the entire universe and produces a goal state no matter what random noise is encountered seems very challenging. It’s remarkable, then, that the following strategy actually seems like a plausible solution:

One way that we might answer the control question is by building an AI. That is, we might find a by array of on/off values that evolve under the laws of Life in a way that collects information using sensors, forms hypotheses about the world, and takes actions in service of a goal. That goal we would give to our AI would be arranging for the configuration of the grid to resemble a smiley face after game steps.

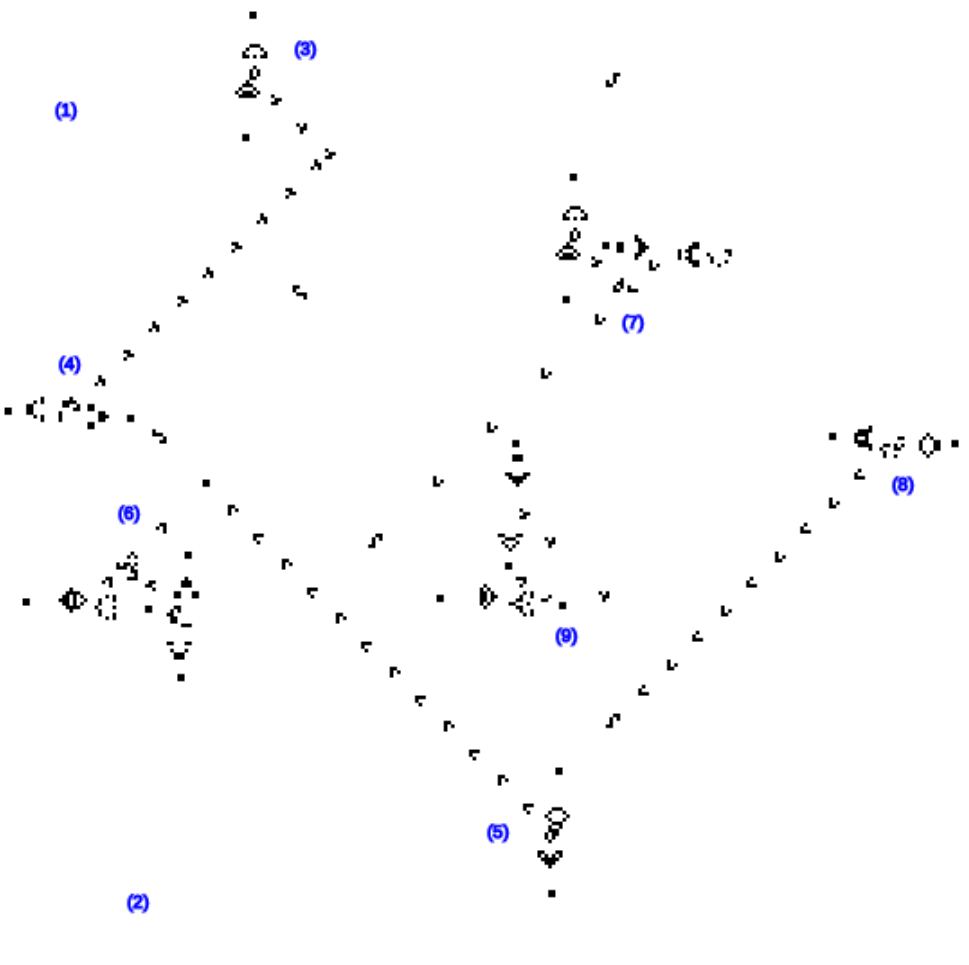

What does it mean to build an AI in the region whose initial state is under our control? Well it turns out that it’s possible to assemble little patterns in Life that act like logic gates, and out of those patterns one can build whole computers. For example, here is what one construction of an AND gate looks like:

And here is a zoomed-out view of a computer within Life that adds integers together:

It has been proven that computers within Life can compute anything that can be computed under our own laws of physics[1], so perhaps it is possible to construct an AI within Life. Building an AI within Life is much more involved than building a computer, not only because we don’t yet know how to construct AGI software, but also because an AI requires apparatus to perceive and act within the world, as well as the ability to move and grow if we want it to eventually exert influence over the entire grid. Most constructions within Life are extremely sensitive to perturbations. The computer construction shown above, for example, will stop working if almost any "on" cell is flipped to "off" at any time during its evolution. In order to solve the control question, we would need to build a machine that is not only able to perceive and react to the random noise in the non-user-controlled region, but is also robust to glider impacts from that region.

Moreover, building large machines that move around or grow over time is highly non-trivial in Life since movement requires a machine that can reproduce itself in different spatial positions over time. If we want such a machine to also perceive, think, and act then these activities would need to be taking place simultaneously with self-reproducing movement.

So it’s not clear that a positive answer to the control question can be given in terms of an AI construction, but neither is it clear that such an answer cannot be given. The real point of the control question is to highlight the way that AI can be seen as not just a particularly powerful conglomeration of parts but as a demonstration of the permissibility of patterns that start out small but eventually determine the large-scale configuration of the whole universe. The reason to construct such thought experiments in Life rather than in our native physics is that the physics of Life is very simple and we are not as used to seeing resource-collecting, action-taking entities in Life as we are in our native physics, so the fundamental significance of these patterns is not as easy to overlook in Life as it is in our native physics.

Implications

If it is possible to build an AI inside Life, and if the answer to the control question is thus positive, then we have discovered a remarkable fact about the basic dynamics of Life. Specifically, we have learned that there are certain patterns within Life that can determine the fate of the entire grid, even when those patterns start out confined to a small spatial region. In the setup described above, the region that we get to control is much less than a trillionth of the area of the whole grid. There are a lot of ways that the remaining grid could be initialized, but the information in these cells seems destined to have little impact on the eventual configuration of the grid compared to the information within at least some parts of the user-controlled region[2].

We are used to thinking about AIs as entities that might start out physically small and grow over time in the scope of their influence. It seems natural to us that such entities are permitted by the laws of physics, because we see that humans are permitted by the laws of physics, and humans have the same general capacity to grow in influence over time. But it seems to me that the permissibility of such entities is actually a deep property of the governing dynamics of any world that permits their construction. The permissibility (or not) of AI is a deep property of physics.

Most patterns that we might construct inside Life do not have this tendency to expand and determine the fate of the whole grid. A glider gun does not have this property. A solitary logic gate does not have this property. And most patterns that we might construct in the real world do not have this property either. A chair does not have the tendency to reshape the whole of the cosmos in its image. It is just a chair. But it seems there might be patterns that do have the tendency to reshape the whole of the cosmos over time. We can call these patterns "AIs" or "agents" or "optimizers", or describe them as "intelligent" or "goal-directed" but these are all just frames for understanding the nature of these profound patterns that exert influence over the future.

It is very important that we study these patterns, because if such patterns do turn out to be permitted by the laws of physics and we do construct one then it might determine the long-run configuration of the whole of our region of the cosmos. Compared to the importance of understanding these patterns, it is relatively unimportant to understand agency for its own sake or intelligence for its own sake or optimization for its own sake. Instead we should remember that these are frames for understanding these patterns that exert influence over the future.

But even more important than this, we should remember that when we study AI, we are studying a profound and basic property of physics. It is not like constructing a toaster oven. A toaster oven is an unwieldy amalgamation of parts that do things. If we construct a powerful AI then we will be touching a profound and basic property of physics, analogous to the way fission reactors touch a profound and basic property of nuclear physics, namely the permissibility of nuclear chain reactions. A nuclear reactor is itself an unwieldy amalgamation of parts, but in order to understand it and engineer it correctly, the most important thing to understand is not the details of the bits and pieces out of which it is constructed but the basic property of physics that it touches. It is the same situation with AI. We should focus on the nature of these profound patterns themselves, not on the bits and pieces out which AI might be constructed.

The AI hypothesis

The above thought experiment suggests the following hypothesis:

Any pattern of physics that eventually exerts control over a region much larger than its initial configuration does so by means of perception, cognition, and action that are recognizably AI-like.

In order to not include things like an exploding supernova as "controlling a region much larger than its initial configuration" we would want to require that such patterns be capable of arranging matter and energy into an arbitrary but low-complexity shape, such as a giant smiley face in Life.

Influence as a definition of AI

If the AI hypothesis is true then we might choose to define AI as a pattern within physics that starts out small but whose initial configuration significantly influences the eventual shape of a much larger region. This would provide an alternative to intelligence as a definition of AI. The problem with intelligence as a definition of AI is that it is typically measured as a function of discrete observations received by some agent, and the actions produced in response. But an unfolding pattern within Life need not interact with the world through any such well-defined input/output channels, and constructions in our native physics will not in general do so either. It seems that AI requires some form of intelligence in order to produce its outsized impact on the world, but it also seems non-trivial to define the intelligence of general patterns of physics. In contrast, influence as defined by the control question is well-defined for arbitrary patterns of physics, although it might be difficult to efficiently predict whether a certain pattern of physics will eventually have a large impact or not.

Conclusion

This post has described the control question, which asks whether, under a given physics, it is possible to set up small patterns that eventually exert significant influence over the configuration of large regions of space. We examined this question in the context of Conway’s Game of Life in order to highlight the significance of either a positive or negative answer to this question. Finally, we proposed the AI hypothesis, which is that any such spatially influential pattern must operate by means of being, in some sense, an AI.

Appendix: Technicalities with the control question

The following are some refinements to the control question that may be needed.

-

There are some patterns that can never be produced in Conway’s Game of Life, since they have no possible predecessor configuration. To deal with this, we should phrase the control question in terms of producing a configuration that is close to rather than exactly matching a single target configuration.

-

There are possible configurations of the whole grid , but only possible configurations of the user-controlled section of the universe. Each configuration of the user-controlled section of the universe will give rise to exactly one final configuration, meaning that the majority of possible final configurations are unreachable. To deal with this we can again phrase things in terms of closeness to a target configuration, and also make sure that our target configuration has reasonably low Kolmogorov complexity.

-

Say we were to find some pattern A that unfolds to final state X and some other pattern B that unfolds to a different final state Y. What happens, then, if we put A and B together in the same initial state — say, starting in opposite corners of the universe? The result cannot be both X and Y. In this case we might have two AIs with different goals competing for control. Some tiny fraction of random initializations will contain AIs, so it is probably not possible for the amplification question to have an unqualified positive answer. We could refine the question so that our initial pattern has to produce the desired goal state for at least 1% of the possible random initializations of the surrounding universe.

-

A region of by cells may not be large enough. Engineering in Life tends to take up a lot of space. It might be necessary to scale up all my numbers.

Rendell, P., 2011, July. A universal Turing machine in Conway's game of life. In 2011 International Conference on High Performance Computing & Simulation (pp. 764-772). IEEE. ↩︎

There are some configurations of the randomly initialized region that affect the final configuration, such as configurations that contain AIs with different goals. This is addressed in the appendix ↩︎