>I bet that if an AI is observing your eye movements, which includes a lot of info on not only where you look but also in what ways you are thinking and your emotional reactions and state, plus your other movements and reactions, also it hears everything you say, even without you intending to tell it anything directly, and you’ll probably want to be helping.

One point made during the presentation is that they are big on privacy, by which they mean that your eye movements are private and apps can't just access them.

>All accounts agree that Apple has essentially solved issues with fit and comfort.

People could only test them for 30 minutes. It's not clear whether it's comfortable to wear it 8 hours per day.

If Apple is going to hide eye movements from apps, that sounds very much like an alignment tax situation - a headset that doesn't do this is going to get a lot of capabilities advantages, so Apple will need to stay far ahead on other fronts continuously to overcome that.

I imagine this will relax over time, like the early iPhone didn't allow any access for apps to the phonecall hardware.

That depends on whether users value privacy and might be scared about a device that has deep access or whether users have no problem with that.

If Apple spends its marketing dollars on explaining why a device with that kind of access should be scary, it might convince customers.

>People could only test them for 30 minutes. It's not clear whether it's comfortable to wear it 8 hours per day.

This is a good point. I get a headache just from wearing normal headphones for a long period of time.

>All accounts agree that Apple has essentially solved issues with fit and comfort.

Besides the 30min point, is it really true that all accounts agree on that? I definitely remember reading in at least two reports something along the lines of, "clearly you can't use this for hours, because it's too heavy". Sorry for not giving a source!

I'm very concerned about comfort. It looks much heavier than a Quest 2, even with an external battery, due to the glass and metal construction. If it's also consuming double the watts of a Quest Pro, I worry about thermals. I can't see anyone wearing this for more than an hour due to weight and thermals.

The good news is this can be fixed by moving all the compute off the head into the battery pack. The bad news is Apple hates wires, so it might never happen.

Some good analysis here https://kguttag.com/2023/06/13/apple-vision-pro-part-1-what-apple-got-right-compared-to-the-meta-quest-pro/

Good thoughts in general, I'm about where you are - VR is overall headed in a direction where I'm really excited to use it.

Disagree on the device looking cool. It looks like a snorkeling mask, which is still better than the blindfolded look of the Meta Quest.

What it might achieve is being acceptable in public. Google Glass failed because people perceived wearers as potential perverts, photographing people surreptitiously. If Apple can make people perceive AVP wearers as people who are "having more fun than you are on the plane" - i.e. get people intrigued about other people's use of the technology rather than intimidated by it - that will be a win for the company (and its customers).

>but my current configuration is based on years or even decades of optimization

Have you shared this before? I would be really interested in a blog post about your setup and workflow.

I believe this is going to be vastly more useful for commercial applications than consumer ones. Architecture firms are already using VR to demonstrate design concepts - imagine overlaying plumbing and instrumentation diagrams over an existing system, to ease integration, or allowing engineers to CAD something in real time around an existing part. I don't think it would replace more than a small portion of existing workflows, but for some fields it would be incredibly useful.

Nice overview! I mostly agree.

>What I do not expect is something I’d have been happy to pay $500 or $1,000 for, but not $3,500. Either the game will be changed, or it won’t be changed quite yet. I can’t wait to find out.

From context, I assume you're saying this about the current iteration?

I guess willingness to pay for different things depends on one's personal preferences, but here's an outcome that I find somewhat likely (>50%):

- The first-gen Apple Vision Pro will not be very useful for work, aside from some niche tasks.

- It seems that to be better than a laptop for working at a coffee shop or something they need to have solved ~10 different problems extremely well and my guess is that they will have failed to solve one of them well enough. For example, I think comfort/weight alone has a >30% probability of making this less enjoyable to work with (for me at least) than with a laptop, even if all other stuff works fairly well.

- Like you, I'm sometimes a bit puzzled by what Apple does. So I could also imagine that Apple screws up something weird that isn't technologically difficult. For example, the first version of iPad OS was extremely restrictive (no multitasking/splitscreen, etc.). So even though the hardware was already great, it was difficult to use it for anything serious and felt more like a toy. Based on what they emphasize on the website, I could very well imagine that they won't focus on making this work and that there'll be some basic, obvious issue like not being able to use a mouse. If Apple had pitched this more in the way that Spacetop was pitched, I'd be much more optimistic that the first gen will be useful for work.

- The first-gen Apple Vision Pro will still produce lots of extremely interesting experiences that many people would be happy to pay, say, $1,000 for, but not $3,500 and definitely not much more than $3,500. For example, I think all the reviews I've seen have described the experience as very interesting, intense and immersive. Let's say this novelty value wears off after something like 10h. Then a family of four gets 40h of fun out of it. Say you're happy to spend on the order of $10 per hour per person for a fun new experience (that's roughly what you'd spend to go to the movie theater, for example); then that'd be a willingness to pay in the hundreds of dollars.
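To make the back-of-the-envelope estimate in the last bullet concrete, here is a minimal Python sketch. The function names `novelty_value` and `break_even_hours` are made up for illustration, and the inputs are just the rough guesses from the bullet (four people, about 10 hours of novelty each, about $10 per person-hour), not measured data.

```python
def novelty_value(people: int, hours_each: float, dollars_per_hour: float) -> float:
    """Total dollar value of the novelty period across everyone who uses the headset."""
    return people * hours_each * dollars_per_hour


def break_even_hours(price: float, people: int, dollars_per_hour: float) -> float:
    """Hours of use per person at which the value equals the purchase price."""
    return price / (people * dollars_per_hour)


# Rough guesses from the bullet above: family of four, ~10 hours of novelty
# each, ~$10 per person-hour (roughly movie-theater pricing).
value = novelty_value(people=4, hours_each=10, dollars_per_hour=10)
hours_needed = break_even_hours(price=3500, people=4, dollars_per_hour=10)

print(f"Novelty value: ${value}")                      # Novelty value: $400
print(f"Break-even hours per person: {hours_needed}")  # Break-even hours per person: 87.5
```

Under these assumptions the novelty alone is worth about $400, and each family member would need roughly 87.5 hours of $10-per-hour enjoyment before the $3,500 price pays for itself.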

From the linked twitter thread:

>[...] Generally as a whole, a lot of the work I did involved detecting the mental state of users based on data from their body and brain when they were in immersive experiences.

>[...] Another patent goes into details about using machine learning and signals from the body and brain to predict how focused, or relaxed you are, or how well you are learning. And then updating virtual environments to enhance those states. So, imagine an adaptive immersive environment that helps you learn, or work, or relax by changing what you’re seeing and hearing in the background.

This is astonishing to me. I wonder if this will be one of its main uses.

Apple is offering a VR/AR/XR headset, Vision Pro, for the low, low price of $3,500.

I kid. Also I am deadly serious.

The value of this headset to a middle class American or someone richer than that is almost certainly either vastly more than $3,500, or at best very close to $0.

This type of technology is a threshold effect. Once it gets good enough, if it gets good enough, it will feel essential to our lives and our productivity. Until then, it’s a trifle.

Thus, like Divia Eden, I am bullish on using the Tesla strategy of offering a premium product at a premium price, then later either people decide they need it and pay up or you scale enough to lower costs – if the tech delivers.

Gaming could be a modest benefit. Mark Zuckerberg points out on the Lex Fridman podcast that with no native controller this could be a poor VR/AR gaming platform. Mark suggested this could drive demand for the more reasonably priced Oculus.

This doesn’t apply to traditional gaming with the VR used to improve the screen and mobility, assuming you can get connected to devices that allow real gaming (e.g. console devices or PCs, not iPhones and Macs.) Apple lets you hook up a PS5 controller or mouse and keyboard if you like, but only directly integrates with Apple devices.

My current impression of existing VR/AR is that it is in the ‘not worth much’ section of the curve. The games and activities are fun to try out, but not worth sustained engagement. Productivity wasn’t there at all.

Can Apple do better? Are we there? There’s definitely a bunch of new tech here.

Your New Computer Interface?

That’s how their presentation pitch starts. ‘All your favorite apps,’ controlled by your eyes and finger scrolls and taps.

Do we want to use a phone or computer in AR or VR? It makes sense to go use case by use case.

Thoughts on the Control Mechanism

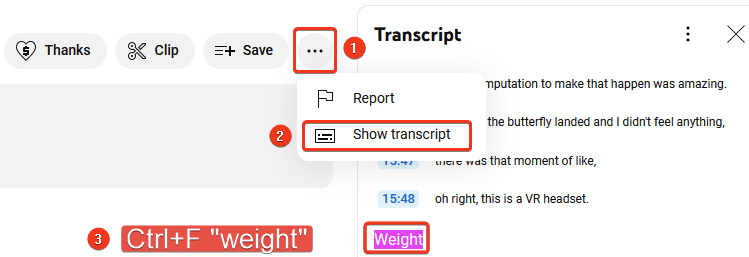

It’s so hard to tell without a demo whether the eyes are a good control mechanism. Even for those who have used other VR systems, these are very different use cases. You can hook up Bluetooth keyboards, mice and controllers as alternatives; we’ll find out how friendly the system is to their use. You are also asked to use voice, which in my experience is a good option but a terrible thing to have forced upon you.

A hybrid control scheme under user control seems great, where you can switch between modalities to suit how you want to navigate.

I have a big concern that Apple thinks they know better what interfaces are good and bad, and is not going to allow us to use precision and patterns and the modes we prefer. That they will tell us what to use where, and that it won’t be great. They told demo users not to ray trace and instead use a synthesis of certain gestures combined with eye and voice. I get why no haptics. Many reviewers say they nailed the interface. The lack of options still feels like an error.

There’s a reason I’ve mostly been an Android user. I want options. I want to do it my way. Apple has consistently said to that, for decades: Screw you, our way or the highway, our way is the right true and cool way.

I want at least the option to say things like ‘treat my hands as if they were using an invisible keyboard and mouse, in ways I know your cameras can figure out.’ I did a short experiment here typing in the air with no tactile feedback, and it’s going to take a little practice and getting used to, but it is totally going to work if enabled. Maybe the keyboard is a stretch without a physical aid, but why not the mouse?

A strong counterargument is that Apple has put a lot more investment into these questions than I have, and reports mostly say the interface is awesome, with for example Ars Technica saying it’s going to be hard to go back to using a controller and Apple has nailed the interface.

Importance of Dynamic Passthrough to the World

The feature that you will see the real world when it is important to do so, and that everyone knows this, is a big game changer. Not only can you interact normally with people in the room, they know that you can do this. Which in turn means that the device might not be seen as an anti-social move. Then you can use all its cool AR features, including AI conversation assistance once they integrate that, which we’ll talk about later.

If we want to know about social acceptance of using such a device, we must not forget the important questions. In particular:

Does it Look Cool?

An official verdict offered by Radhika Jones on WWDTM is that they are cool.

Why are they cool?

It’s not about the actual physical look. It’s about what they represent.

They’re Apple. Apple is cool. Google isn’t cool. I am very much on team Google in terms of my personal choices, a mostly happy user of Android and Gmail and Google Docs and Sheets who hopes Bard gets it together, but we all know none of it is cool.

I agree. The device looks cool. Unless the functionality is bad, then it doesn’t.

Tech Solutions

It all comes down to the tech. The tech is what enables features and uses that wouldn’t have previously worked, or wouldn’t have worked as well. The whole point of being $3,500 is so Apple could spend their way out of a host of technical issues.

Reviews agree, and focus more on technical aspects. TechCrunch puts the focus entirely on the tech specs, others are more balanced.

For your $3,500 you get much more advanced hardware with technical innovations throughout.

Reports are that the new tech mostly solves eye strain, which would otherwise be a showstopper to continuous use.

Eye tracking and gesture control are reported to be near perfect, even when your hands are not in front of you.

The higher resolution screens mean text is readable.

The pass-through of the real world is a game changer. Stratechery emphasizes the sub-12-millisecond latency, low enough to get under the brain’s perception threshold, and more generally the fact that only Apple could pull off the many challenges involved in getting the Vision right.

All accounts agree that Apple has essentially solved issues with fit and comfort.

Sterling Crispin talks here about some of the tech challenges. He talks about how the headset uses the information it gets to infer your emotional state and response to various stimuli, including using subliminal visuals or sounds to observe reactions.

I notice the Apple presentation absolutely does not mention or use any of these capabilities. Perhaps they do not wish to freak people out, in the ‘that could make quite the brainwashing helmet’ or more conventionally the ‘I really do not want that kind of data in the hands of someone serving me advertisements’ kind of ways. But these capabilities are also obviously super useful. Imagine an interactive horror game that noticed which types of things scared you, and adapted to scare you more. Imagine an AI giving you a virtual lecture, and noticing when your eyes light up or when you lose interest. Imagine the police asking you where you were last night. Or, you know, porn. The possibilities are endless.

Vastly more is being asked of the technology here than was being asked of it previously. It’s easy to see why this could cost $3,500. It’s also easy to see how it could be worth many times that, if it delivers.

Reviews

I saw a bunch of reviews from reporters who saw the demo, all of them positive, although to be fair, not quite everyone’s reactions were positive.

I’ll highlight three reviews in particular.

Adam Savage offers hands-on impressions in a 30-minute video. Lots of technical detail discussion. Video seemed like the wrong format here despite the obvious advantages, would have been much better as a blog post.

David Pogue is super pumped.

Ben Thompson at Stratechery is both highly credible and even more pumped than David Pogue. To him, this feels like the future, the device knocks it out of the park, he’s wildly optimistic. Yet even he asks, what ultimately is the device for? If computers are productivity, tablets are consumption and the smartphone is, well, everything, is the Vision more than novelty?

Killer App

The right question to ask is, ultimately, what is the device for? What’s the killer app?

It can’t replace your phone on a two hour battery life. There’s a big practical problem that, as I understand it, if you are wearing the device then it needs to be running and drawing power. If you’re not wearing it, it has to go somewhere else in the meantime, and you don’t have that much gas in the tank.

Can it replace your computer? That seems more plausible. Contexts can be found where power supply is less constraining, and you can carry extra batteries and swap them in. Laptop and tablet screens are pretty bad for productivity and many places don’t have a good desk or other surface. Being able to work in the middle of a park seems great. Being able to work lying down is potentially great. You can get yourself a keyboard if you’d like. The new modalities and interface will be actively superior for some tasks.

It’s hard for me to imagine this being more productive in my core activities than my current configuration, but my current configuration is based on years or even decades of optimization. I wouldn’t discount this.

Can it be the new television or tablet? Seems even more plausible. Advantages here are clear, especially on something like a plane, train or bus ride, or otherwise chilling in one place in public. The sports watching experience is potentially transformative even if all it does is simulate being there, and you can add to that with integrated info or not from there. Same logic applies to traditional games. Also there’s always porn.

The new way to read? It could be a good option. Your eyes want to focus on a narrow area and do best with relatively small print (subject to your eyesight, anyway). If the interface lets the text move to keep pace with your eyes, that could be killer.

Photos and videos? It’s nice, but not enough at this price point.

Video meetings, including in three dimensions? Highly promising. How far can this go? Do people want lots of interactivity here? This seems still greatly underexplored. Simply meeting people socially, perhaps playing board games or card games?

Augmented reality experiences? We haven’t even scratched the surface of what these might do. Even without AI.

The AI Elephant in the Room

If I had to guess the killer app here, it is one Apple didn’t mention. AI.

There’s the VR case, the passive AR case, and the hybrid case.

In the VR case, you’ve got a virtual world, game or experience, and the AI can fill it with realistic looking and acting people or NPCs, who can carry on conversations with you, and can take that into the virtual space and interact with each other and other humans who have joined, continuously over time. You can have a wide variety of experiences, go on quests, learn new things, have debates and discussions and so on in a way that feels natural.

Most importantly you can practice both physical skills and social skills, in settings that will give you the correct feedback. I am super pumped for this. Imagine getting to try out a presentation, pause, rewind and so on, with realistic audience reactions. Or practicing crucial conversations, or various social interactions, including navigating relationships and dating, with the usual ‘don’t date robots’ warnings. Or physical stuff, like golf swings and batting practice, although it’s not clear you need AI for that. Either way, a complete experience is going to be so much better.

Think of the feedback you can get, all without social pressure. There’s even expert tracking of your eyes. And every step of the way, you can get guidance, run experiments, have multiple tries, you name it.

In the AR case, context can become a lot less scarce. There are a bunch of start-ups or apps trying to do this now, but the Vision Pro will supercharge what can be done, as will a year of work. Key info or even suggested approaches available to you based on context of a conversation, or what exactly your eye is looking at, with the AI taking into account all the multi-modal inputs available. It’s a question of when, not if.

I bet that if an AI is observing your eye movements, which include a lot of info on not only where you look but also how you are thinking and your emotional reactions and state, plus your other movements and reactions, and it also hears everything you say, it can learn a great deal even without you intending to tell it anything directly, and you’ll probably want to be helping.

Can the device continuously telling you what to do, and you learning to automatically do it, be far behind? Won’t people welcome that?

The hybrid case I’m thinking of is when you’re adding AIs or other experiences into the world around you. Why use a virtual world when you can use the real one, aside from potentially looking a bit weird? Again, not what Apple has in mind, and I don’t expect it to be up to them for long.

Even confining ourselves to ordinary productivity-style or television-style entertainment, tracking your eye movements and reactions should give so much data about what would be useful responses or information as to seem magical to us today. You’re reading a web page and every time you look confused a thing pops up on the side explaining. If you ask questions out loud during a show or you express confusion then it answers, with or without pausing, and it can do so more seamlessly integrated and more accurately than could a traditional television even if it had computer integration.

That’s on not very much thought, and zero trial and error or tinkering. The more I think about the various possibilities the more excited I get, now that the tech has crossed so many crucial thresholds.

Conclusion

It would be surprising to me, at this point, if this technology didn’t end up being game changing after a few iterations. Whether version one gets there is less clear, both on the underlying tech and on the software being there to support it, and us figuring out the best ways to use it.

I expect to at minimum be sorely tempted to drop $3,500 on one of these, plus several hundred more on the lenses and backup batteries, and then I’d presumably need an iPhone, Mac or both, so the final bill could easily end up in the $6k-$7k range.

The thing that is holding me back is that this is Apple, which means tying one’s fate in large part to the Apple ecosystem and way of being. I don’t want to do that, and have been far happier with Windows over Macs, and after I moved from iPhones to Google Pixels. Still better than Facebook, of course.

Right now, Apple is the thing most holding me back. If say Google or Microsoft or Amazon or Sony had a similarly impressive technical offering that fully integrated into Windows and Android, I’d be all over it.

Could this still be a dud? Absolutely. What I do not expect is something I’d have been happy to pay $500 or $1,000 for, but not $3,500. Either the game will be changed, or it won’t be changed quite yet. I can’t wait to find out.