So there’s this thing where a system can perform more bits of optimization on its environment by observing some bits of information from its environment. Conjecture: observing an additional $N$ bits of information can allow a system to perform at most $N$ additional bits of optimization. I want a proof or disproof of this conjecture.

I’ll operationalize “bits of optimization” in a similar way to channel capacity, so in more precise information-theoretic language, the conjecture can be stated as: if the sender (but NOT the receiver) observes $N$ bits of information about the noise in a noisy channel, they can use that information to increase the bit-rate by at most $N$ bits per usage.

For once, I’m pretty confident that the operationalization is correct, so this is a concrete math question.

Toy Example

We have three variables, each one bit: Action ($A$), Observable ($O$), and Outcome ($Y$). Our “environment” takes in the action and observable, and spits out the outcome, in this case via an xor function: $Y = A \oplus O$.

We’ll assume the observable bit has a 50/50 distribution.

If the action is independent of the observable, then the distribution of outcome is the same no matter what action is taken: it’s just 50/50. The actions can perform zero bits of optimization; they can’t change the distribution of outcomes at all.

On the other hand, if the actions can be a function of $O$, then we can take either $A = O$ or $A = \bar{O}$ (i.e. not-$O$), in which case $Y$ will be deterministically 0 (if we take $A = O$), or deterministically 1 (for $A = \bar{O}$). So, the actions can apply 1 bit of optimization to $Y$, steering $Y$ deterministically into one half of its state space or the other half. By making the actions $A$ a function of observable $O$, i.e. by “observing 1 bit”, 1 additional bit of optimization can be performed via the actions.
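The toy example is small enough to check by direct enumeration. Below is a minimal sketch, assuming the environment is xor of action and observable as described; the helper name `outcome_distribution` and the variable names `a`, `o`, `y` are illustrative choices, and only constant actions are used to stand in for "actions independent of the observable".

```python
def outcome_distribution(policy, p_o=(0.5, 0.5)):
    """Distribution of the outcome y = a xor o when a = policy(o).

    p_o is the distribution of the observable bit (50/50 by assumption).
    Returns [P(y=0), P(y=1)].
    """
    dist = [0.0, 0.0]
    for o, prob in enumerate(p_o):
        a = policy(o)          # action chosen, possibly as a function of o
        dist[a ^ o] += prob    # environment: outcome is xor of action and observable
    return dist

# Actions that ignore the observable: the outcome stays 50/50 either way.
ignore_0 = outcome_distribution(lambda o: 0)
ignore_1 = outcome_distribution(lambda o: 1)

# Actions that are a function of the observable: the outcome becomes deterministic.
copy_o = outcome_distribution(lambda o: o)      # a = o      -> y is always 0
flip_o = outcome_distribution(lambda o: 1 - o)  # a = not-o  -> y is always 1

print(ignore_0, ignore_1, copy_o, flip_o)
```

The first two distributions are identical, which is the "zero bits of optimization" case; the last two put all the probability on one outcome, which is the "1 bit" case.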

Operationalization

Operationalizing this problem is surprisingly tricky; at first glance the problem pattern-matches to various standard info-theoretic things, and those pattern-matches turn out to be misleading. (In particular, it’s not just conditional mutual information, since only the sender - not the receiver - observes the observable.) We have to start from relatively basic principles.

The natural starting point is to operationalize “bits of optimization” in a similar way to info-theoretic channel capacity. We have 4 random variables:

- $X$: “Goal”

- $A$: “Action”

- $O$: “Observable”

- $Y$: “Outcome”

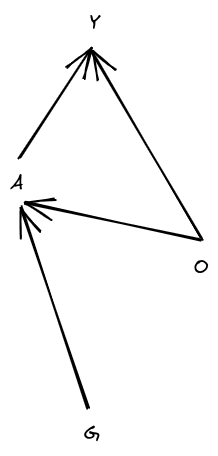

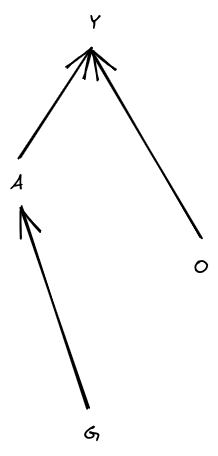

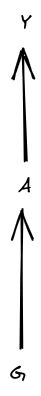

Structurally:

(This diagram is a Bayes net; it says that $X$ and $O$ are independent, $A$ is calculated from $X$ and $O$ and maybe some additional noise, and $Y$ is calculated from $A$ and $O$ and maybe some additional noise. So, $P[X, O, A, Y] = P[X]\,P[O]\,P[A \mid X, O]\,P[Y \mid A, O]$.) The generalized “channel capacity” is the maximum value of the mutual information $I(X; Y)$, over distributions $P[A \mid X, O]$.

Intuitive story: the system will be assigned a random goal $X$, and then take actions $A$ (as a function of observations $O$) to steer the outcome $Y$. The “number of bits of optimization” applied to $Y$ is the amount of information one could gain about the goal $X$ by observing the outcome $Y$.

In information theoretic language:

- $X$ is the original message to be sent

- $A$ is the encoded message sent into the channel

- $O$ is noise on the channel

- $Y$ is the output of the channel

Then the generalized “channel capacity” is found by choosing the encoding $P[A \mid X, O]$ to maximize $I(X; Y)$.

I’ll also import one more assumption from the standard info-theoretic setup: $X$ is represented as an arbitrarily long string of independent 50/50 bits.
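To make "choose the encoding to maximize the mutual information between message and channel output" concrete, here is a rough brute-force sketch for tiny alphabets. Everything named here (`mutual_information_xy`, `capacity`, `xor_env`) is hypothetical and not from the post. It makes two simplifying assumptions: the message is truncated to a single 50/50 bit rather than an arbitrarily long string, and only deterministic encodings are searched. I believe the second loses nothing, because the mutual information is convex in the channel from message to output for a fixed message distribution, but treat that as an assumption.

```python
from itertools import product
from math import log2

def mutual_information_xy(p_x, p_o, policy, env):
    """Mutual information (bits) between message x and output y.

    policy(x, o) -> action a (deterministic encoding, an assumption of this sketch)
    env(a, o)    -> output y (deterministic channel, as in the xor toy example)
    """
    joint = {}
    for (x, px), (o, po) in product(enumerate(p_x), enumerate(p_o)):
        y = env(policy(x, o), o)
        joint[(x, y)] = joint.get((x, y), 0.0) + px * po
    p_y = {}
    for (x, y), p in joint.items():
        p_y[y] = p_y.get(y, 0.0) + p
    return sum(p * log2(p / (p_x[x] * p_y[y])) for (x, y), p in joint.items() if p > 0)

def capacity(p_x, p_o, n_actions, env, observe):
    """Max mutual information over deterministic policies.

    If observe is False, the action may depend on x only (sender does not see o).
    """
    n_x, n_o = len(p_x), len(p_o)
    best = 0.0
    if observe:
        # One action per (x, o) pair.
        for table in product(range(n_actions), repeat=n_x * n_o):
            mi = mutual_information_xy(p_x, p_o, lambda x, o: table[x * n_o + o], env)
            best = max(best, mi)
    else:
        # One action per x; o is ignored by the sender.
        for table in product(range(n_actions), repeat=n_x):
            mi = mutual_information_xy(p_x, p_o, lambda x, o: table[x], env)
            best = max(best, mi)
    return best

def xor_env(a, o):
    return a ^ o

p_x = [0.5, 0.5]  # one-bit message (truncation of the long random string)
p_o = [0.5, 0.5]  # 50/50 noise bit
with_obs = capacity(p_x, p_o, 2, xor_env, observe=True)
without_obs = capacity(p_x, p_o, 2, xor_env, observe=False)
print(with_obs, without_obs)
```

For the xor channel the search finds 1 bit when the sender sees the noise (encode the message xor'd with the noise, so the output equals the message) and 0 bits when it does not. The search is exponential in the table size, so it is only usable on very small examples.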

So, fully written out, the conjecture says:

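Let $X$ be an arbitrarily long string of independent 50/50 bits. Let $A$, $O$, and $Y$ be finite random variables satisfying

$$P[X, O, A, Y] = P[X]\,P[O]\,P[A \mid X, O]\,P[Y \mid A, O]$$

and define

$$\Delta := \left(\max_{P[A \mid X, O]} I(X; Y)\right) - \left(\max_{P[A \mid X]} I(X; Y)\right)$$

Then

$$\Delta \le H(O)$$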

Also, one slightly stronger bonus conjecture: is at most under the unconstrained maximal .
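As a sanity check (not a proof), the main inequality can be evaluated on the xor toy example. The notation here is an assumption: $X$ is the goal, $A$ the action, $O$ the observable, $Y$ the outcome, $\Delta$ the gain in capacity from letting the action depend on the observable, and the bound is read as the entropy $H(O)$ of the observable. One bit of $X$ is enough, since $Y$ is a single bit.

```latex
\begin{align*}
\max_{P[A \mid X, O]} I(X; Y) &= 1 \text{ bit}
  && \text{(take } A = X \oplus O \text{, so } Y = A \oplus O = X \text{)} \\
\max_{P[A \mid X]} I(X; Y) &= 0 \text{ bits}
  && \text{(} Y = A \oplus O \text{ is 50/50 and independent of } X \text{)} \\
\Delta &= 1 - 0 = 1 \text{ bit} \\
H(O) &= 1 \text{ bit}
  && \text{so } \Delta \le H(O) \text{ holds here, with equality.}
\end{align*}
```

This only shows the bound is tight on one example; it says nothing about whether it holds in general.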

(Feel free to give answers that are only partial progress, and use this space to think out loud. I will also post some partial progress below. Also, thank you to Alex Mennen for some help with a couple conjectures along the path to formulating this one.)

This is interesting, but it also somehow feels a bit like a "cheat" compared to the more "real" version of this problem (namely, if I know something about the world and can think intelligently about it, how much leverage can I get out of it?).

The kind of system in which you can pack so much information into an action, and get so much leverage at the cost of a small bit of information, feels like it ought to be artificial. Trivially, this is actually what makes a lock (real or virtual) work: if you have one simple key/password, you get to do whatever you want with the contents. But the world as a whole doesn't seem to work as a locked system (if it did, we would have magic: just a tiny, specific formula or gesture and we get massive results down the line).

I wonder if the key here isn't in the entropy. Your knowing O here allows you to significantly reduce the entropy of the world as a whole. This feels akin to being a Maxwell's demon. In the physical world, though, there are bounds on that sort of observation and action, exactly because being able to do them would allow you to violate the second law of thermodynamics. So I wonder if the conjecture may be true under some additional constraints that also include these common properties of macroscopic closed physical systems (while it remains false in artificial subsystems that we can build for the purpose, in which we only care about certain bits and not all the ones defining the underlying physical microstates).