Not only do they continue to list such jobs, they do so with no warnings that I can see regarding OpenAI's behavior, including both its actions involving safety and also towards its own employees.

Not warning about the specific safety failures and issues is bad enough, and will lead to uninformed decisions on the most important issue of someone's life.

Referring a person to work at OpenAI, without warning them about the issues regarding how they treat employees, is so irresponsible towards the person looking for work as to be a missing stair issue.

I am flabbergasted that this policy has been endorsed on reflection.

(Cross-posted from the EA forum)

Hi, I run the 80,000 Hours job board, thanks for writing this out!

I agree that OpenAI has demonstrated a significant level of manipulativeness and have lost confidence in them prioritizing existential safety work. However, we don’t conceptualize the board as endorsing organisations. The point of the board is to give job-seekers access to opportunities where they can contribute to solving our top problems or build career capital to do so (as we write in our FAQ). Sometimes these roles are at organisations whose mission I disagree with, because the role nonetheless seems like an opportunity to do good work on a key problem.

For OpenAI in particular, we’ve tightened up our listings since the news stories a month ago, and are now only posting infosec roles and direct safety work – a small percentage of jobs they advertise. See here for the OAI roles we currently list. We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI, we limited the listings further to only roles that are very directly on safety or security work. I still expect these roles to be good opportunities to do impo...

Huh, this doesn't super match my model. I have heard of people at OpenAI being pressured a lot into making sure their safety work helps with productization. I would be surprised if they ended up being pressured into working directly on the scaling team, but I wouldn't be surprised by someone being pressured into doing some better AI censorship in a way that doesn't have any relevance to AI safety and does indeed make OpenAI a lot of money.

I'd define "genuine safety role" as "any qualified person will increase safety faster than capabilities in the role". I put ~0 likelihood that OAI has such a position. The best you could hope for is being a marginal support for a safety-based coup (which has already been attempted, and failed).

There's a different question of "could a strategic person advance net safety by working at OpenAI, more so than any other option?". I believe people like that exist, but they don't need 80k to tell them about OpenAI.

However, we don’t conceptualize the board as endorsing organisations.

It doesn't matter how you conceptualize it. It matters how it looks, and it looks like an endorsement. This is not an optics concern. The problem is that people who trust you will see this and think OpenAI is a good place to work.

- These still seem like potentially very strong roles with the opportunity to do very important work. We think it’s still good for the world if talented people work in roles like this!

How can you still think this after the whole safety team quit? They clearly did not think these roles were any good for doing safety work.

Edit: I was wrong about the whole team quitting. But given everything, I still stand by that these jobs should not be there without at least a warning sign.

As an AI safety community builder, I'm considering boycotting 80k (i.e. not linking to you and recommending people not to trust your advice) until you at least put warning labels on your job board. And I'll recommend other community builders to do the same.

I do think 80k means well, but I just can't recommend any org with this level of lack of judgment. Sorry.

As an AI safety community builder, I'm considering boycotting 80k (i.e. not linking to you and recommending people not to trust your advice) until you at least put warning labels on your job board.

Hm. I have mixed feelings about this. I'm not sure where I land overall.

I do think it is completely appropriate for Linda to recommend whichever resources she feels are appropriate, and if her integrity calls her, to boycott resources that otherwise have (in her estimation) good content.

I feel a little sad that I, at least, perceived that sentence as an escalation. There's a version of this conversation where we all discuss considerations, in public and in private, and 80k is a participant in that conversation. There's a different version where 80k immediately feels the need to be on the defensive, in something like PR mode, or where the outcome is mostly determined by the equilibrium of social-power rather than anything else. That seems overall worse, and I'm afraid that sentences like the quoted one push in that direction.

On the other hand, I also feel some resonance with the escalation. I think "we", broadly construed, have been far too warm with OpenAI, and it seems maybe good that there's common knowledge building that a lot of people think that was a mistake, and momentum building towards doing something different going forward, including people "voting with their voices", instead of being live-and-let-live to the point of having no real position at all.

it may be too much to ask, but in my ideal world, 80k folks would feel comfy ignoring the potential escalatory emotional valence and would treat that purely as evidence about the importance of it to others. in other words, if people are demanding something, that's a time to get less defensive and more analytical, not more defensive and less analytical. It would be good PR to me for them to just think out loud about it.

But whether an organization can easily respond is pretty orthogonal to whether they’ve done something wrong. Like, if 80k is indeed doing something that merits a boycott, then saying so seems appropriate. There might be some debate about whether this is warranted given the facts, or even whether the facts are right, but it seems misguided to me to make the strength of an objection proportional to someone’s capacity to respond rather than to the badness of the thing they did.

A statement I could truthfully say:

"As an AI safety community member, I predict I and others will be uncomfortable with 80k if this is where things end up settling, because of disagreeing. I could be convinced otherwise, but it would take extraordinary evidence at this point. If my opinions stay the same and 80k's also are unchanged, I expect this to make me hesitant to link to and recommend 80k, and I would be unsurprised to find others behaving similarly."

But you did not say it (other than as a response to me). Why not?

I'd be happy for you to take the discussion with 80k and try to change their behaviour. This is not the first time I told them that if they list a job, a lot of people will both take it as an endorsement, and trust 80k that this is a good job to apply for.

As far as I can tell 80k is in complete denial on the large influence they have on many EAs, especially local EA community builders. They have a lot of trust, mainly for being around for so long. So whenever they screw up like this, it causes enormous harm. Also since EA has such a large growth rate (at any given time most EAs are new EAs), the community is bad at tracking when 80k does screw up, so they ...

Firstly, some form of visible disclaimer may be appropriate if you want to continue listing these jobs.

While the jobs board may not be "conceptualized" as endorsing organisations, I think some users will see jobs from OpenAI listed on the job board as at least a partial, implicit endorsement of OpenAI's mission.

Secondly, I don't think roles being directly related to safety or security should be a sufficient condition to list roles from an organisation, even if the roles are opportunities to do good work.

I think this is easier to see if we move away from the AI Safety space. Would it be appropriate for the 80,000 Hours job board to advertise an Environmental Manager job from British Petroleum?

I dislike when conversations that are really about one topic get muddied by discussion about an analogy. For the sake of clarity, I'll use italics for related statements when talking about the AI safety jobs at capabilities companies.

Interesting perspective. At least one other person also had a problem with that statement, so it is probably worth me expanding.

Assume, for the sake of the argument, that the Environmental Manager's job is to assist with clean-ups after disasters, monitoring for excessive emissions and preventing environmental damage. In a vacuum these are all wonderful, somewhat-EA aligned tasks.

Similarly the safety focused role, in a vacuum, is mitigating concrete harms from prosaic systems and, in the future, may be directly mitigating existential risk.

However, when we zoom out and look at these jobs in the context of the larger organisation's goals, things are less obviously clear. The good you do helps fuel a machine whose overall goals are harmful.

The good that you do is profitable for the company that hires you. This isn't always a bad thing, but by allowing BP to operate in a more environmentally friendly manner you improve BP's pu...

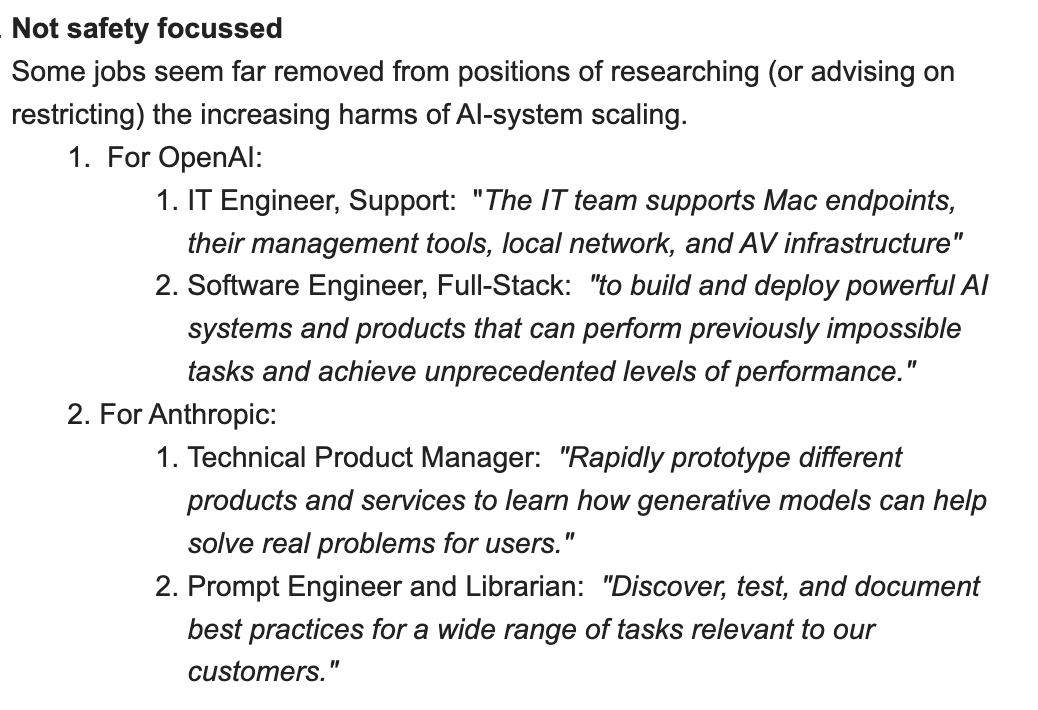

We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI

This misses aspects of what used to be 80k's position:

❝ In fact, we think it can be the best career step for some of our readers to work in labs, even in non-safety roles. That’s the core reason why we list these roles on our job board.

– Benjamin Hilton, February 2024

❝ Top AI labs are high-performing, rapidly growing organisations. In general, one of the best ways to gain career capital is to go and work with any high-performing team — you can just learn a huge amount about getting stuff done. They also have excellent reputations more widely. So you get the credential of saying you’ve worked in a leading lab, and you’ll also gain lots of dynamic, impressive connections.

– Benjamin Hilton, June 2023 - still on website

80k was listing some non-safety related jobs:

– From my email on May 2023:

– From my comment on February 2024:

I think an assumption 80k makes is something like "well if our audience thinks incredibly deeply about the Safety problem and what it would be like to work at a lab and the pressures they could be under while there, then we're no longer accountable for how this could go wrong. After all, we provided vast amounts of information on why and how people should do their own research before making such a decision".

The problem is, that is not how most people make decisions. No matter how much rational thinking is promoted, we're first and foremost emotional creatures that care about things like status. So, if 80k decides to have a podcast with the Superalignment team lead, then they're effectively promoting the work of OpenAI. That will make people want to work for OpenAI. This is an inescapable part of the Halo effect.

Lastly, 80k is explicitly targeting very young people who, no offense, probably don't have the life experience to imagine themselves in a workplace where they have to resist incredible pressures to not conform, such as not sharing interpretability insights with capabilities teams.

The whole exercise smacks of naivety and I'm very confident we'll look back and see it as an incredibly obvious mistake in hindsight.

I was around a few years ago when there were already debates about whether 80k should recommend OpenAI jobs. And that's before any of the fishy stuff leaked out, and they were stacking up cool governance commitments like becoming a capped-profit and having a merge-and-assist-clause.

And, well, it sure seems like a mistake in hindsight how much advertising they got.

I haven't shared this post with other relevant parties – my experience has been that private discussion of this sort of thing is more paralyzing than helpful.

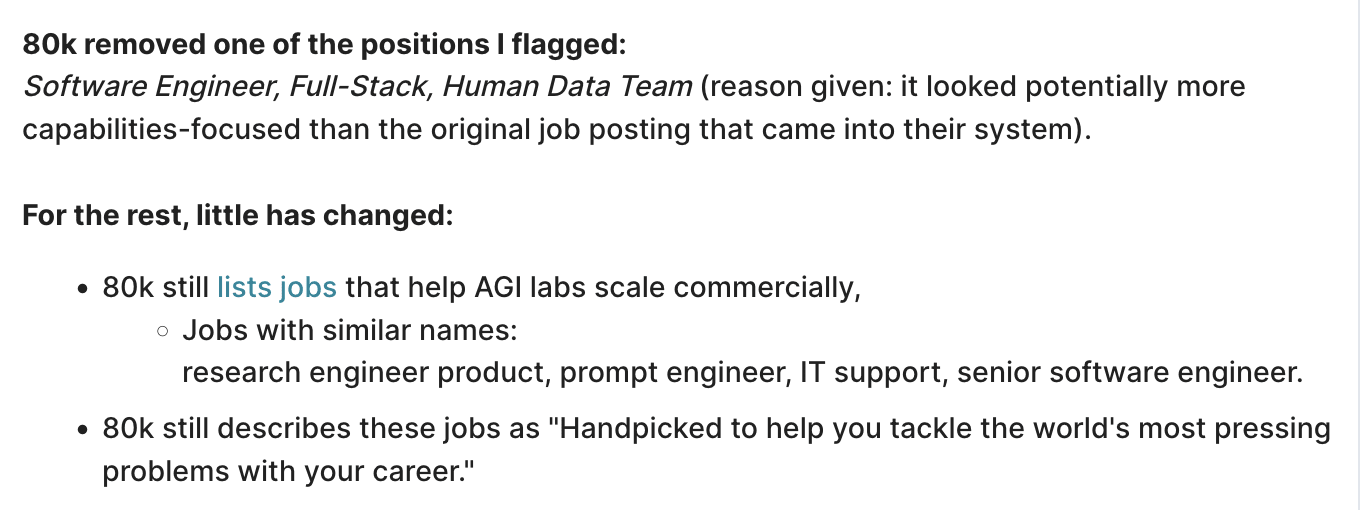

Fourteen months ago, I emailed 80k staff with concerns about how they were promoting AGI lab positions on their job board.

The exchange:

- I offered specific reasons and action points.

- 80k staff replied by referring to their website articles about why their position on promoting jobs at OpenAI and Anthropic was broadly justified (plus they removed one job listing).

- Then I pointed out what those articles were specifically missing,

- Then staff stopped responding (except to say they were "considering prioritising additional content on trade-offs").

It was not a meaningful discussion.

Five months ago, I posted my concerns publicly. Again, 80k staff removed one job listing (why did they not double-check before?). Again, staff referred to their website articles as justification to keep promoting OpenAI and Anthropic safety and non-safety roles on their job board. Again, I pointed out what seemed missing or off about their justifications in those articles, with no response from staff.

It took the firing of the entire OpenAI su...

I hope that the voluminous discussion on exactly how bad each of the big AI labs are doesn't distract readers from what I consider the main chances: getting all the AI labs banned (eventually) and convincing talented young people not to put in the years of effort needed to prepare themselves to do technical AI work.

Here is an example of a systems dynamics diagram showing some of the key feedback loops I see. We could discuss various narratives around it and what to change (add, subtract, modify).

[Diagram: a causal chain running from "people working in general AI" to "effort to make AI as powerful as possible" to "AI power" to "potential for unsafe AI" to "AI becomes too powerful", each link marked +. Two loops feed back into "people working in general AI": "economic factors" (+) and "to the degree it is perceived as unsafe" (−). Further arrows are labelled "net movement" and "e.g. use AI to reason about AI safety".]


Because it's obviously annoying and burning the commons. Imagine if I made a bot that posted the same comment on every post of less wrong, surely that wouldn't be acceptable behavior.

I haven't shared this post with other relevant parties – my experience has been that private discussion of this sort of thing is more paralyzing than helpful. I might change my mind in the resulting discussion, but, I prefer that discussion to be public.

I think 80,000 Hours should remove OpenAI from its job board, and similar EA job placement services should do the same.

(I personally believe 80k shouldn't advertise Anthropic jobs either, but I think the case for that is somewhat less clear)

I think OpenAI has demonstrated a level of manipulativeness, recklessness, and failure to prioritize meaningful existential safety work, that makes me think EA orgs should not be going out of their way to give them free resources. (It might make sense for some individuals to work there, but this shouldn't be a thing 80k or other orgs are systematically funneling talent into)

There plausibly should be some kind of path to get back into good standing with the AI Risk community, although it feels difficult to imagine how to navigate that, given how adversarial OpenAI's use of NDAs was, and how difficult that makes it to trust future commitments.

The things that seem most significant to me:

This is before getting into more open-ended arguments like "it sure looks to me like OpenAI substantially contributed to the world's current AI racing" and "we should generally have a quite high bar for believing that the people running a for-profit entity building transformative AI are doing good, instead of causing vast harm, or at best, being a successful for-profit company that doesn't especially warrant help from EAs."

I am generally wary of AI labs (i.e. Anthropic and DeepMind), and think EAs should be less optimistic about working at large AI orgs, even in safety roles. But, I think OpenAI has demonstrably messed up, badly enough, publicly enough, in enough ways that it feels particularly wrong to me for EA orgs to continue to give them free marketing and resources.

I'm mentioning 80k specifically because I think their job board seemed like the largest funnel of EA talent, and because it seemed better to pick a specific org than a vague "EA should collectively do something." (see: EA should taboo "EA should"). I do think other orgs that advise people on jobs or give platforms to organizations (i.e. the organization fair at EA Global) should also delist OpenAI.

My overall take is something like: it is probably good to maintain some kind of intellectual/diplomatic/trade relationships with OpenAI, but bad to continue giving them free extra resources, or treat them as an org with good EA or AI safety standing.

It might make sense for some individuals to work at OpenAI, but doing so in a useful way seems very high skill, and high-context – not something to funnel people towards in a low-context job board.

I also want to clarify: I'm not against 80k continuing to list articles like Working at an AI Lab, which are more about how to make the decisions, and go into a fair bit of nuance. I disagree with that article, but it seems more like "trying to lay out considerations in a helpful way" than "just straightforwardly funneling people into positions at a company." (I do think that article seems out of date and worth revising in light of new information. I think "OpenAI seems inclined towards safety" now seems demonstrably false, or at least less true in the ways that matter. And this should update you on how true it is for the other labs, or how likely it is to remain true)

FAQ / Appendix

Some considerations and counterarguments which I've thought about, arranged as a hypothetical FAQ.

Q: It seems that, like it or not, OpenAI is a place transformative AI research is likely to happen, and having good people work there is important.

Isn't it better to have alignment researchers working there, than not? Are you sure you're not running afoul of misguided purity instincts?

I do agree it might be necessary to work with OpenAI, even if they are reckless and negligent. I'd like to live in the world where "don't work with companies causing great harm" was a straightforward rule to follow. But we might live in a messy, complex world where some good people may need to work with harmful companies anyway.

But: we've now had two waves of alignment people leave OpenAI. The second wave has multiple people explicitly saying things like "quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI."

The first wave, my guess is they were mostly under non-disclosure/non-disparagement agreements, and we can't take their lack of criticism as much evidence.

It looks to me, from the outside, like OpenAI is just not really structured or encultured in a way that makes it that viable for someone on the inside to help improve things much. I don't think it makes sense to continue trying to improve OpenAI's plans, at least until OpenAI has some kind of credible plan (backed up by actions) of actually seriously working on existential safety.

I think it might make sense for some individuals to go work at OpenAI anyway, who have an explicit plan for how to interface with the organizational culture. But I think this is a very high context, high skill job. (i.e. skills like "keeping your eye on the AI safety ball", "interfacing well with OpenAI staff/leadership while holding onto your own moral/strategic compass", "knowing how to prioritize research that differentially helps with existential safety, rather than mostly amounting to near-term capabilities work.")

I don't think this is the sort of thing you should just funnel people into on a jobs board.

I think it makes a lot more sense to say "look, you had your opportunity to be taken on faith here, you failed. It is now OpenAI's job to credibly demonstrate that it is worthwhile for good people to join there trying to help, rather than for them to take that on faith."

Q: What about jobs like "security research engineer"?

That seems straightforwardly good for OpenAI to have competent people for, and probably doesn't require a good "Safety Culture" to pay off?

The argument for this seems somewhat plausible. I still personally think it makes sense to fully delist OpenAI positions unless they've made major changes to the org (see below).

I'm operating here from a cynical/conflict-theory-esque stance. I think OpenAI has exploited the EA community and it makes sense to engage with them from more of a cynical "conflict theory" stance. I think it makes more sense to say, collectively, "knock it off", and switch to default "apply pressure." I think if OpenAI wants to find good security people, that should be their job, not EA organizations.

But, I don't have a really slam dunk argument that this is the right stance to take. For now, I list it as my opinion, but acknowledge there are other worldviews where it's less clear what to do.

Q: What about offering OpenAI a path towards "good standing"?

It seems plausibly important to me to offer some kind of roadmap back to good standing. I do kinda think regulating OpenAI from the outside isn't likely to be sufficient, because it's too hard to specify what actually matters for existential AI safety.

So, it feels important to me not to fully burn bridges.

But, it seems pretty hard to offer any particular roadmap. We've got three different lines of OpenAI leadership breaking commitments, and being manipulative. So we're long past the point where "mere words" would reassure me.

Things that would reassure me are costly actions that are extremely unlikely in worlds where OpenAI would (intentionally or no) lure more people in and then still turn out to, nope, just be taking advantage of them for safety-washing / regulatory capture reasons.

Such actions seem pretty unlikely by now. Most of the examples I can think to spell out seem too likely to be gameable (i.e. if OpenAI were to announce a new Superalignment-equivalent team, or commitments to participate in eval regulations, I would guess they would only do the minimum necessary to look good, rather than a real version of the thing).

An example that'd feel pretty compelling is if Sam Altman actually really, for real, left the company, that would definitely have me re-evaluating my sense of the company. (This seems like a non-starter, but, listing for completeness).

I wouldn't put much stock in a Sam Altman apology. If Sam is still around, the most I'd hope for is some kind of realistic, real-talk, arms-length negotiation where it's common knowledge that we can't really trust each other but maybe we can make specific deals.

I'd update somewhat if Greg Brockman and other senior leadership (i.e. people who seem to actually have the respect of the capabilities and product teams), or maybe new board members, made clear statements indicating:

This wouldn't make me think "oh everything's fine now." But would be enough of an update that I'd need to evaluate what they actually said/did and form some new models.

Q: What if we left up job postings, but with an explicit disclaimer linking to a post saying why people should be skeptical?

This idea just occurred to me as I got to the end of the post. Overall, I think this doesn't make sense given the current state of OpenAI, but thinking about it opens up some flexibility in my mind about what might make sense, in worlds where we get some kind of costly signals or changes in leadership from OpenAI.

(My actual current guess is this sort of disclaimer makes sense for Anthropic and/or DeepMind jobs. This feels like a whole separate post though)

My actual range of guesses here is more cynical than this post focuses on. I'm focused on things that seemed easy to legibly argue for.

I'm not sure who has decisionmaking power at 80k, or most other relevant orgs. I expect many people to feel like I'm still bending over backwards being accommodating to an org we should have lost all faith in. I don't have faith in OpenAI, but I do still worry about escalation spirals and polarization of discourse.

When dealing with a potentially manipulative adversary, I think it's important to have backbone and boundaries and actual willingness to treat the situation adversarially. But also, it's important to leave room to update or negotiate.

But, I wanted to end with explicitly flagging the hypothesis that OpenAI is best modeled as a normal profit-maximizing org, that they basically co-opted EA into being a lukewarm ally it could exploit, when it'd have made sense to treat OpenAI more adversarially from the start (or at least be more "ready to pivot towards treating adversarially").

I don't know that that's the right frame, but I think the recent revelations should be an update towards that frame.