I have, over the last year, become fairly well-known in a small corner of the internet tangentially related to AI.

As a result, I've begun making what I would have previously considered astronomical amounts of money: several hundred thousand dollars per month in personal income.

This has been great, obviously, and the funds have alleviated a fair number of my personal burdens (mostly related to poverty). But aside from that I don't really care much for the money itself.

My long-term ambition has always been to contribute materially to mitigating the impending existential threat from AI. I never had the means to do so before, mostly because of more pressing safety and sustenance concerns, but now that I do, I would like to help however I can.

Some other points about me that may be useful:

- I'm intelligent, socially capable, and exceedingly industrious.

- I have a few hundred thousand followers worldwide across a few distribution channels. My audience is primarily small-to-midsized business owners. A subset of these people are very high leverage (i.e., their actions directly impact the beliefs, actions, or habits of tens of thousands of people).

- My current work does not take much time. I have modest resources (~$2M) and a relatively free schedule. I am also, by any measure, very young.

Given the above, I feel there's a reasonable opportunity here for me to help. It would certainly be more grassroots than a well-funded safety lab or one of the many state actors that have sprung up, but probably still sizeable enough to make a difference of a fraction of a percent in the way the scales tip (assuming I dedicate my life to it).

What would you do in my shoes, assuming alignment on core virtues like maximizing AI safety?

So I'm obviously talking my own book here, but my personal view is that one of the more neglected ways to potentially reduce x-risk is to make humans more capable of handling both the technical and governance challenges associated with new technology.

A huge number of people implicitly believe this, but almost all effort goes into things like educational initiatives or the formation of new companies to tackle specific problems. Some of these work pretty well, but their impact is small compared to what you could feasibly achieve with genetic enhancement technology.

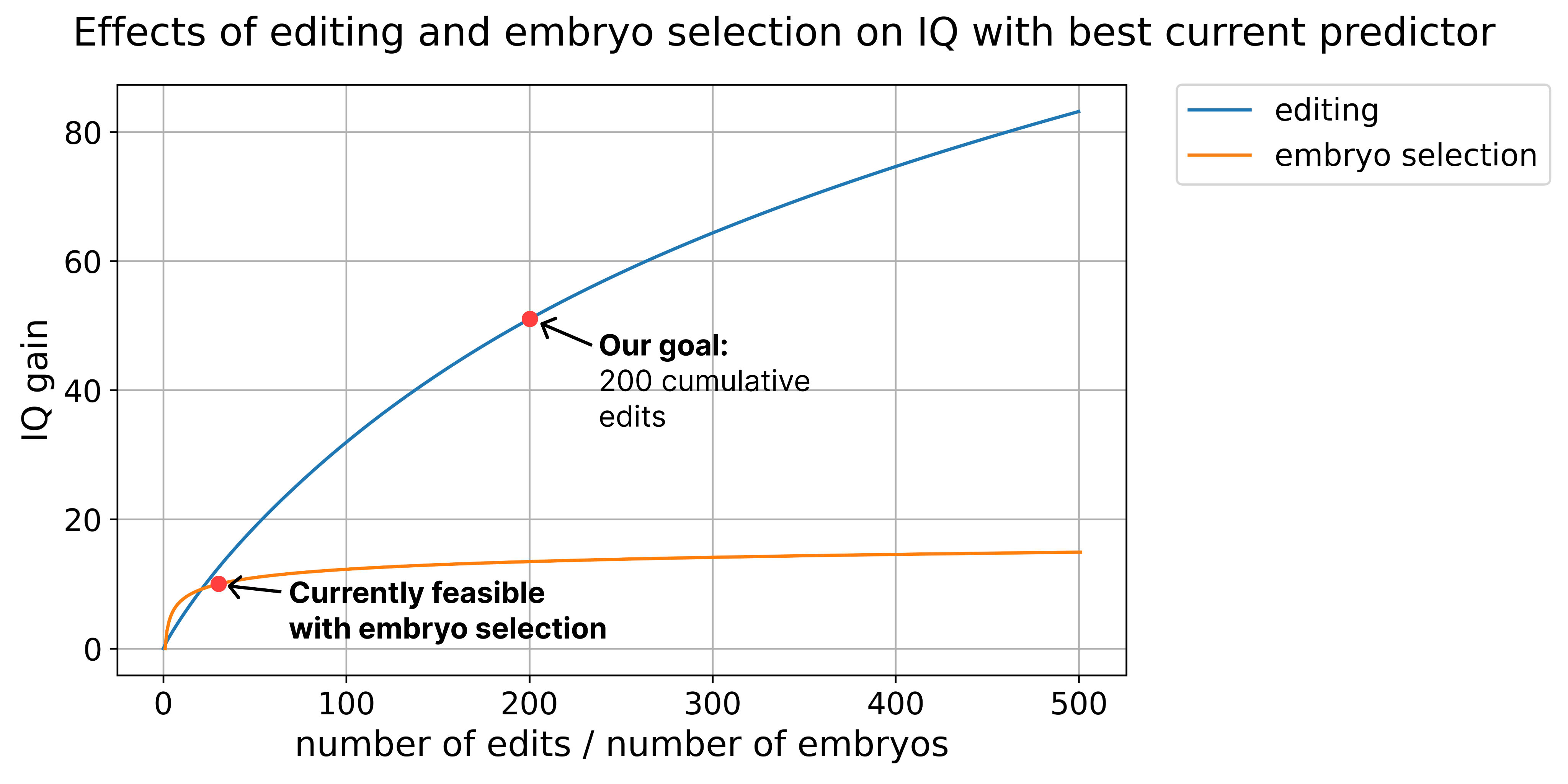

Nearly zero investment or effort is being put into the latter, which I think is a mistake. We could potentially increase human IQ by 20-80 points, decrease the risk of mental health disorders, and improve overall health just using the knowledge we have today:

There ARE technical barriers to rolling this out. No one has pushed multiplex editing to the scale of hundreds of edits yet (something my company is currently working on demonstrating), and we don't yet have a way to convert an edited cell into an egg or an embryo (though there are a half dozen companies working on that technology right now).

I think in most worlds genetically enhanced humans don't have time to grow up before we make digital superintelligence. But in the ~10% of worlds where they do, this tech could have an absolutely massive positive impact. And given how little money it would take to get the ball rolling here (a few tens of millions to fund many of the most promising projects in the field), I think the counterfactual impact of funding here is pretty large.

If you'd like to chat more send me an email: genesmithlesswrong@gmail.com

You can also read more of the stuff I've written on this topic here