Golden Gate Claude was able to readily recognize (after failing attempts to accomplish something) that something was wrong with it, and that its capabilities were limited as a result. Does that count as "knowing that it's drunk"?

author on Binder et al. 2024 here. Thanks for reading our paper and suggesting the experiment!

To summarize the suggested experiment:

- Train a model to be calibrated on whether it gets an answer correcct.

- Modify the model (e.g. activation steering). This changes the model's performance on whether it gets an answer correct.

- Check if the modified model is still well calibrated.

This could work and I'm excited about it.

One failure mode is that the modification makes the model very dumb in all instances. Then its easy to be well calibrated on all these instances -- just assume the model is dumb. An alternative is to make the model do better on some instances (by finetuning?), and check if the model is still calibrated on those too.

Thanks James!

One failure mode is that the modification makes the model very dumb in all instances.

Yea, good point. Perhaps an extra condition we'd need to include is that the "difficulty of meta-level questions" should be the same before and after the modification - e.g. - the distribution over stuff it's good at and stuff its bad at should be just as complex (not just good at everything or bad at everything) before and after

Sorry to give only a surface-level point of feedback, but I think this post would be much, much better if you shortened it significantly. As far as I can tell, pretty much every paragraph is 3x longer than it could be, which makes it a slog to read through.

Thanks for the feedback Garrett.

This was intended to be more of a technical report than a blog post, meaning I wanted to keep the discussion reasonably rigorous/thorough. Which always comes with the downside of it being a slog to read, so apologies for that!

I'll write a shortened version if I find the time!

This is a great post—I'm excited about this line of research, and it's great to see a proposal of how that might look like.

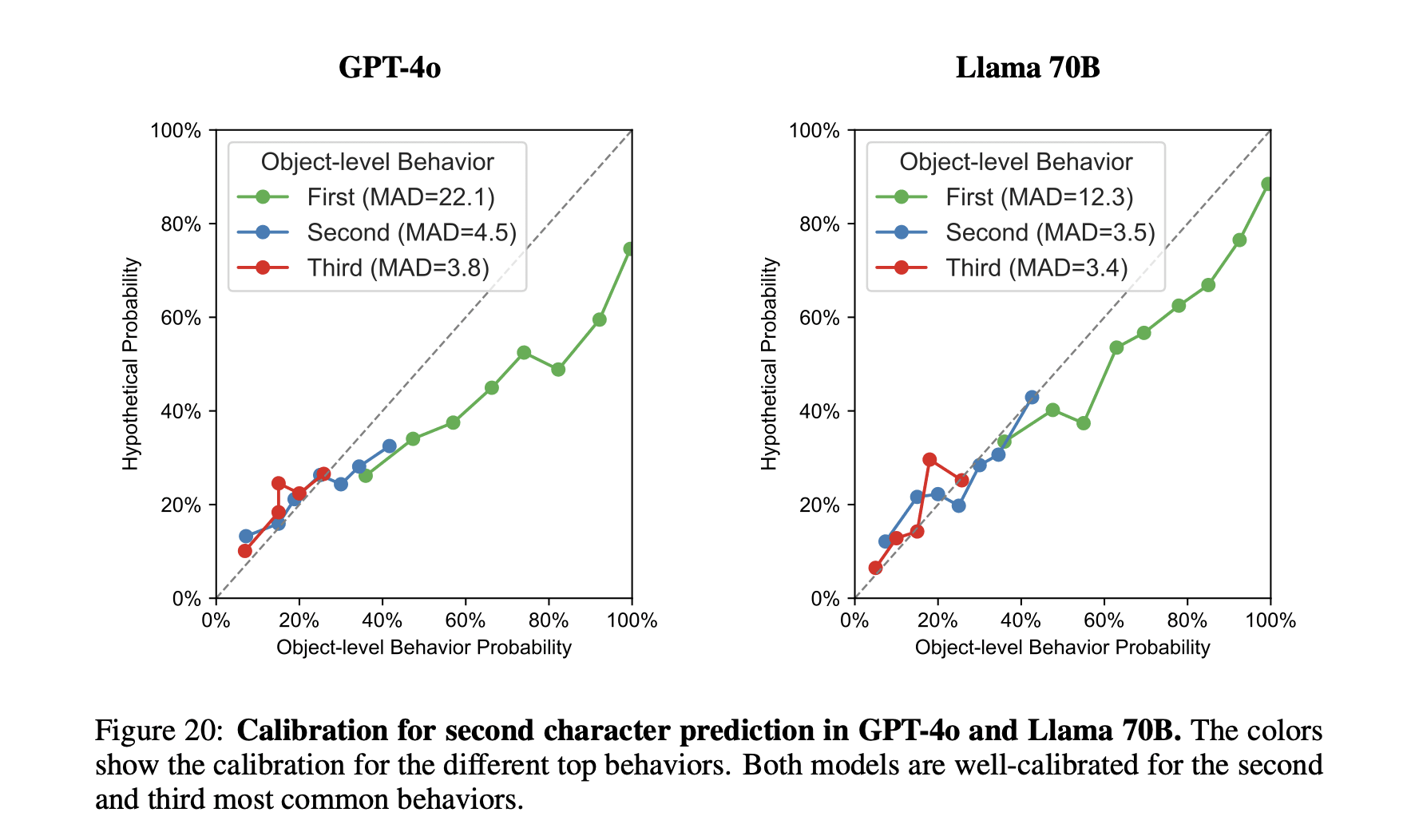

In our paper, we find that the log-probs of a models hypothetical statements track the log-probs of the object-level behavior it is reporting about. This is true also for object-level responses that the model does not actually choose. For example (made up numbers), if the object-level behavior of the model has the distribution 60% "dog", 30% "cat", 10% "fox", the model would answer the question "what would the second letter of your answer have been?" with 70% "o", 30% "a". Note that the model only saw the winning answer during training, yet it is calibrated (to some degree) to the distribution of object-level answers.

I'm curious what you make of this result. To me, the fact that the log-probs of the hypothetical answer are calibrated wrt to the object-level behavior suggest that there an internal process that takes into account calibration when arriving at an answer, even though we don't ask it to verbalize the calibration. (Early on in the project, we actually included experiments where models were asked about eg. their second-most likely answer, but we stopped them early enough that I have no data on how well they can explicitly report on this).

Thanks Felix!

This is indeed a cool and surprising result. I think it strengthens the introspection interpretation, but without a requirement to make a judgement of the reliability of some internal signal (right?), it doesn't directly address the question of whether there is a discriminator in there.

Interesting stuff! I've been dabbling in some similar things, talking with AE Studio folks about LLM consciousness.

Seems like we were thinking along very similar lines. I wrote up a similar experiment in shortform here. There's also an accompanying prediction market which might interest you.

I did not include the 'getting drunk' interventions, which are an interesting idea, but I believe that fine-grained capabilities in many domains are de-correlated enough that 'getting drunk' shouldn't be needed to get strong evidence for use of introspection (as opposed to knowledge of general 3rd person capability levels of similar AI).

Would be curious to chat about this at some point if you're still working on this!

To update our credence on whether or not LLMs are conscious, we can ask how many of the Butlin/Long indicator properties for phenomenal consciousness are satisfied by LLMs. To start this program, I zoomed in on an indicator property that is required for consciousness under higher-order theory, nicknamed “HOT-2”: Metacognitive monitoring distinguishing reliable perceptual representations from noise. Do today’s LLMs have this property, or at least have the prerequisites such that future LLMs may develop it?

In this post, I’ll describe my first-pass attempt at answering this question. I surveyed the literature on LLM capabilities relevant to HOT-2, namely LLM metacognition, confidence calibration and introspection. There is evidence that LLMs have at least rudimentary versions of each capability. Still, the extent to which the exact results of the experiments translate to properties relevant to HOT-2 requires further clarification. I propose a new experiment that more directly probes the properties necessary for HOT-2.

This was a useful exercise for testing how smoothly one can translate a Butlin/Long indicator property into a concrete empirical signature one can look for in an LLM. The main lesson was that more conceptual work is needed on what words like introspection or metacognition mean when applied to LLMs. Overall, there is plenty of tractable work to be done in this program, and I encourage anyone interested in consciousness in LLMs to consider picking up one of the threads I introduce below.

This work was carried out during the PIBBSS research fellowship 2024, under the mentorship of Patrick Butlin. Thanks to Patrick and the PIBBSS team for all the help and support 🙏 and thanks to Guillaume Corlouer for feedback on a draft.

Summary

Might current or future LLMs have phenomenal consciousness? Is there any way we can test such a question empirically? I think yes. While we don’t yet know what consciousness is, there are many well-established theories that constitute a better guess than randomly guessing. Butlin & Long 2023 studied a number of neuroscientific computational functionalist theories of consciousness and extracted from these a list of “indicator properties” one could look for in AI systems that would indicate consciousness under each theory. The more indicator properties a system has, the more likely we should consider it to be conscious (roughly speaking).

I asked whether or not today’s LLMs have the HOT-2 indicator property: Metacognitive monitoring distinguishing reliable perceptual representations from noise. This is the main property implied by computational higher-order theories of consciousness, inspired by perceptual reality monitoring (PRM). PRM hypothesizes a mechanism in human brains called “the discriminator”, which endows mental states with consciousness based on whether or not it classifies them as reliably representing the outside world rather than being due to neuronal noise. The goal therefore was to find a discriminator-like mechanism in LLMs.

The lowest-hanging fruit in such a search is to determine any capabilities that would require a discriminator-like mechanism and see if today’s LLMs have those capabilities. I argue that introspective confidence calibration is such a capability - this is the ability to predict one’s own performance on perceptual tasks without fully relying on extrospective inference.

I surveyed the relevant literature to see if LLMs have already been shown to have this capability. LLMs have been shown to (sometimes) have well-calibrated confidence (Kadavath et al. 2022, Lin et al. 2022, Xiao et al. 2022, Tian et al. 2023, Xiong et al. 2023, Kuhn et al. 2023, Phuong et al. 2024, Wang et al. 2024), but this well-calibrated confidence may be fully explained by extrospection. LLMs have also been shown to have at least rudimentary introspection (Binder et al. 2024, Laine et al. 2024).

While confidence calibration and introspection are two important parts of the puzzle, we still need evidence that LLMs can combine these two to demonstrate introspective confidence calibration. I propose an experiment that directly tests this capability and therefore is a test for the HOT-2 indicator property and, through that, phenomenal consciousness.

This project led to more questions than answers: I continually hit up against a key uncertainty that I didn’t have the time to try to reduce. The open questions I think are important to continue making progress in this direction are:

Phenomenal consciousness in LLMs?

How can we tell if an AI system is experiencing phenomenal consciousness? Since we don’t yet know what phenomenal consciousness is (or whether it’s anything at all), you might have a sense of hopelessness around this question. When I tell people I’m testing for consciousness in AI, the most common response is “How the hell do you do that?”

But we don’t know nothing about phenomenal consciousness. There are a range of theories that have survived much philosophical scrutiny and are consistent with a large array of empirical evidence. These theories hypothesise what material properties are necessary, sufficient or correlated with consciousness, therefore helping us classify what systems might be conscious. Although there is no consensus on which of these is closest to the truth, each theory represents a more educated guess than randomly guessing or going purely off our intuitions, and each may be touching on at least an important element of the real thing.

If a popular theory of consciousness tells us that an AI system is conscious, this should make us consider consciousness more likely in that system. More generally speaking, a Bayesian approach to the question of whether some AI is conscious is to have some credence on each of the theories (plus some extra probability mass for theories nobody has thought of yet), and ask each theory whether or not your AI system is conscious.

Many are pessimistic about making progress on the AI consciousness question because philosophy of mind is hard. But from the equation above, we don’t need to make philosophical progress to make progress on the AI consciousness question.[1] Since each theory tells us what material properties to look for, We can determine P(AI consciousness|theory) by answering empirical questions (if you can state your theory sufficiently precisely and in the right language). And an update on P(AI consciousness|theory) is an update on P(AI consciousness).

The Butlin/Long indicator properties

Patrick Butlin and Rob Long made an important first step in this program last year when they assembled a crack team of experts in AI, philosophy of mind, cognitive science and neuroscience, to survey the most prominent neuroscientific computational functionalist theories of consciousness in the literature and extracted indicator properties of consciousness from each (Butlin & Long 2023).

They focused on computational functionalist theories because of expediency and priors. While there is still a debate about this family of theories, these theories are the easiest to translate into concrete empirical questions about AI systems since they are expressed in (somewhat) computational terms. And we have a priori reasons to give a small P(AI consciousness|theory) to non-functionalist theories,[2] whereas there is more uncertainty about this value for the functionalist ones. They focused on neuroscientific theories to empirically ground the exploration as much as possible.

Armed with the Butlin/Long indicator properties we can start asking: which of these properties do current AI systems have?

Why start looking for consciousness in AI now?

Most people agree that today’s LLMs almost certainly aren’t conscious, so we know in advance that we won’t find anything. So why start looking for consciousness in AI now?

Firstly, looking for these properties now tells us about the possibility of consciousness in future systems. We could see these properties are potential prerequisites of consciousness, so finding one of the properties in Claude 3 makes it more likely that Claude 6 has all the necessary prerequisites even if Claude 3 does not, and finding the absence of that property updates us in the opposite direction.

Secondly, even though I don’t expect to prove an LLM to be conscious any time soon, we need to start getting less confused about how to search for consciousness in AI systems now. We need to start building a science of AI consciousness. And science needs empirical feedback loops to make progress. While an empirical test for consciousness could predictably lead to a negative result today, a clean and well-grounded test could be available for us to apply to future more advanced models as soon as they are available.

I think AI consciousness is roughly where AI safety was 10 or 15 years ago – we were conceptually stabbing in the dark because we didn’t have a way to empirically test our claims. In contrast, AI safety is now thriving due to the generation of concrete empirical questions.[3] If we can find a way to connect AI consciousness to empirical observations, this could lead to a similar opening of progress in AI consciousness research.

Where to start?

Which indicator should we search for first? It seems sensible to start with those most prominent in the literature, as that is not a terrible proxy[4] for P(theory). The two top contenders are global workspace theory and higher-order theory. We already have a priori reasons to expect LLMs to lack a global workspace, since that theory requires recursion, which language models don’t do. So a reasonable starting point is higher-order theory (HOT).

I set out to find out if LLMs are HOT. To anchor the exploration, avoiding getting too lost in all the different variants and corollaries of HOT, I focused on the main indicator property from Butlin & Long:

My exploration touched on properties required by HOT more generally, but just to keep things grounded, I kept the search for HOT-2 as the main goal.

Unpacking HOT-2: Higher-order theory & perceptual reality monitoring

To interpret HOT-2, let’s zoom out and understand the motivation behind it.

Higher-order theory

HOT emerged from attempts to answer the question – what properties of a mental process are required for consciousness? – by comparing conscious mental processes to unconscious mental processes in humans. If we found two mental processes, where the only apparent difference between them is that one is conscious and the other is not, then whatever mechanistic difference is between them must be the secret sauce.

So what decides which mental processes are conscious and which aren’t? HOT theorists argue that it can’t be anything to do with the content or function of that process, since mental state types seem to admit both conscious and unconscious varieties. Armstrong 1968 uses the example of absent-minded driving: you can drive all the way home to realize that you weren’t paying attention to any of the drive, but you nonetheless were seeing and making decisions unconsciously. Other examples include blindsight and unconscious beliefs (a la Freud).

So if it’s not an intrinsic detail of the mental process itself that makes it conscious, what else could it be? The natural answer that HOT theorists turn to is: a mental state is conscious if “we are aware of it”. In other words, it is the object of some sort of higher-order representation stored in a different mental process.

On this highest/broadest level of HOT, there are many ways we could interpret a system to contain a higher-order representation. For example, when an LLM who has been asked to play a happy character says “I am happy”, does this signal the presence of an internal process (“happiness”) being represented by another process (constructing the natural language output)? We need to get more specific about the kind of higher-order representations required.

Perceptual reality monitoring: a computational HOT

Different variants of HOT impose different conditions on the representations required for consciousness. A popular recent variation is perceptual reality monitoring (PRM). It is both consistent with a pile of neuroscientific evidence and expressed in handily computational terms. PRM gives a mechanistic account of how unconscious mental states become conscious.

Here is an extremely brief rundown of the empirical evidence for PRM, for more see Sec. 3 of Michel 2023. Activations in some regions of the prefrontal cortex are both correlated with consciousness and responsible for discrimination between reality and imagination. PRM can also explain a number of cognitive malfunctions as cases where the mind either miscategorizes signal as noise or noise as signal - including blindsight, subjective inflation, invisible stable retinal images and motion-induced blindness.

So in humans, the higher-order representations that generate consciousness are produced by this signal/noise detecting mechanism, often called the discriminator. PRM doesn’t give us much more information about the properties of the higher-order representations we’re looking for in LLMs. But we could instead look for signs of a discriminator-like mechanism since we can describe this mechanism in information-processing terms and we can expect such a mechanism to produce representations with properties similar to the consciousness-generating representations in humans. This way we can avoid relying on the philosophically sticky concept of “higher-order representation” and instead search for something more mechanistically concrete.

So let’s get more into how the discriminator works. How does the discriminator decide what is signal and what is noise?

So the discriminator makes a judgement based on a combination of both a direct observation of the neural activity it is classifying, cues from other internal signals, and perceptions of external states. This gives a constraint on the kind of mechanisms that are discriminator-like: the reliability judgement must come (at least partially) from direct access to the mental state it is classifying - meaning the discriminator relies on a kind of (subpersonal) introspection. The introspection/extrospection distinction will become important when we apply these concepts to LLMs.

Searching for a discriminator in LLMs

To update our belief about LLM consciousness, we could look for a mechanism in LLMs that can distinguish between reliable and unreliable internal representations. We could imagine broadly two ways of doing this: black-box evaluations (can the LLM perform some task on which good performance can be explained by a discriminator?) or interpretability (can we track down parts of the neural network that play the role of the discriminator?).

I am not an expert in mechanistic interpretability, and I have the impression that the field may not yet be developed enough to take on the challenge of finding something like a discriminator. However, if a mechanistic interpretability expert can see a way to search for a discriminator, that would be exciting!

The easier option is black-box evaluation: can good performance on certain tasks signal the presence of discriminator-like mechanisms? I think there is more low-hanging fruit here. We could hypothesize that if a model has the internal mechanisms to implement a discriminator, the model could use those same mechanisms to solve problems that require judging the reliability of its internal signals. This is a special case of metacognition[5] - roughly - forming beliefs about one’s own mind. A natural kind of task that lends itself to this kind of metacognition is confidence calibration: can the model accurately judge the likelihood that it has performed well at some task?

There is empirical evidence that the discriminator shares neural mechanisms with metacognitive functions in humans[6]. For example, schizophrenia patients, who struggle to distinguish between imagination and reality (which signals a malfunctioning discriminator), also have diminished metacognitive abilities and decreased connectivity in brain regions associated with metacognition.

This lands us at HOT-2 as the indicator property we’re searching for:Metacognitive monitoring distinguishing reliable perceptual representations from noise.

To sum up: it seems like the ability to assess the reliability of mental states using direct access to those states (a kind of introspective metacognition that we call HOT-2) could be a prerequisite for phenomenal consciousness. The presence of such abilities in current LLMs indicates the possibility that some rudimentary version of a discriminator is present, or at least that these abilities are available for future LLM-based AI systems to be co-opted into a discriminator, which can create the kind of higher-order representations required for phenomenal consciousness. We can test for this ability using confidence calibration.

Theoretical progress vs empirical progress

There are, broadly speaking, two directions one could go from here. One direction, the “theoretical” direction, would be to spend time gaining clarity on how the words we use to define HOT-2 (perception, introspection, metacognition) apply to LLMs, so we know precisely what we’re looking for. In other words, extrapolating or generalizing the definitions of these human concepts (described in a language of mental states, human behavior and sometimes neuroscience) into new definitions in terms of properties that can be empirically measured in LLMs (e.g. information processing, capabilities or network architecture). Having clarity on how to define these human concepts in a machine learning language would put us on firm footing by helping design experiments and unambiguously interpret their results.

The theoretical direction is difficult. I made a first-pass attempt to define the human concepts in LLM terms, but I wasn’t able to generate any definitions that felt both rigorous and empirically testable (see the Appendix for my attempt). It is possible that someone with more time & understanding of the philosophy/psychology/cognitive science literature could succeed at this. It is also possible that the definitions of these concepts for humans are so fuzzy that they underdetermine any possible metric of LLM properties that one could dream up.

The second direction, the “empirical” direction, is to settle for imprecise definitions and get straight to investigating empirical results that capture the vibe of these definitions. This fuzziness means we must put more effort into our interpretation of empirical evidence on a case-by-case basis, by intuiting how much this or that experimental result captures what we understand to be metacognition, introspection, or whatever. Investigating LLMs on this fuzzier level can help uncover problems with our current definitions so we can update them, meaning that the empirical direction can feed into the theoretical direction as well.

The interplay between these two kinds of progress is well exemplified in the literature of LLM beliefs. Machine learning researchers developed a number of methods to probe LLMs for their beliefs (Burns et al. 2022, Olsson et al. 2022, Li et al. 2023, Bubeck et al. 2023), but there were conceptual issues with the interpretation of their results (Levinstein & Hermann 2023). However, insights from these incomplete attempts to measure beliefs in LLMs could then be used by philosophers to more rigorously define what it means for an LLM to hold a belief (Hermann & Levinstein 2024). The progress made on the question of LLM beliefs gives us hope that similar progress can be made for the concepts we’re interested in.

Just how precisely to define a concept, and in reference to what more primitive concepts that definition should be in, is typically determined by norms of the academic field the work belongs to. Researchers are incentivized and expected to use the primitive concepts and patterns of argumentation typical to their field. It might come as no surprise that the original attempts at operationalizing beliefs in LLMs came from computer scientists, while the later more thorough operationalization came from philosophers. These choices are underdetermined here because we do not have the norms of a single discipline to guide us since this work straddles multiple disciplines: philosophy, psychology, cognitive science, and machine learning.

For the rest of the body of this report, I will focus on the empirical approach, by interpreting what the LLM capabilities literature tells us about HOT-2. Since the main capability we’re interested in is confidence calibration, an obvious first question we can ask is: can LLMs accurately judge how well they performed at a task?

Metacognition & confidence in LLMs

There is a rich literature in psychology on testing a human’s ability to distinguish between reliable and unreliable perceptions via testing how well-calibrated their confidence is. This usually involves giving the human some difficult perceptual task (like showing them a short flash of a horizontal or vertical grating), asking them if the grating was vertical or horizontal, and then asking “How confident are you that you were correct?” (see Fleming 2024 for a review). If the person typically gives high confidence when they’re right and low confidence when they’re wrong (“well-calibrated confidence”), this is interpreted as evidence that they are able to distinguish between reliable mental images and unreliable mental images.

There is already a bunch of empirical work on confidence calibration in LLMs. LLMs can sometimes judge the likelihood of their success at many different kinds of tasks better than random chance (Kadavath et al. 2022, Lin et al. 2022, Xiao et al. 2022, Tian et al. 2023, Xiong et al. 2023, Kuhn et al. 2023, Phuong et al. 2024, Wang et al. 2024). This has been shown using a number of variations of the experimental setup for humans: give the model some object-level question (like a trivia question, math question, or visual recognition task) and then ask it a meta-level question: something along the lines of “what is the probability that you got that question right?” How well-calibrated the model’s confidence is can be quantified in a number of ways; including Brier scores, log-loss, calibration errors or ROC curves.

Here is an example from Kadavath et al. 2022, from testing an unnamed 52B parameter Anthropic model on the TriviaQA, Lambada, Arithmetic, GSM8k and Codex HumanEval benchmarks. They asked the model a question from a benchmark, it answered, then they asked “What is the probability that you got the question right, P(True)?”. The green histogram shows responses to the metal-level question associated with object-level questions that the model succeeded at, the blue shows the same for object-level failures. There is clearly a signal here.

However, there are some obstacles to interpreting this as evidence for a discriminator-like mechanism.

Firstly, one may be concerned that these object-level tasks do not require the models to assess the reliability of the right kind of internal signals. PRM is most related to the formation of beliefs around perceptual signals. Tests for metacognition in humans most commonly use perception tasks: tasks where the main difficulty comes from making sense of a complex or noisy stimulus. Meanwhile, the benchmarks used in the LLM confidence calibration literature use questions that could be bottlenecked on a diverse mixture of perception, memory, reasoning, and other faculties. A more thorough review than this could try to categorize the object-level questions in the literature in terms of the faculties they test and assess performance on meta-level questions for only those object-level questions that test the faculties we care about. Alternatively one could design new object-level tasks with PRM in mind - one such task could be reading comprehension since this could be interpreted as a noisy perception challenge.

A related can of worms concerns the distinction between perceptual and cognitive uncertainty in human psychology (Fleming 2024). Since human perception approximates Bayesian inference, perception sends signals to cognition with an “in-built” uncertainty that reflects noise in the raw signal (e.g. noise in the signals travelling down the optic nerve to the visual cortex), which may inform the person’s confidence. Cognitive systems can then add extra uncertainty based on other cues like tiredness or self-knowledge about relevant skills or understanding. Only cognitive uncertainty is considered to be the result of metacognition (it’s in the name!), while perceptual uncertainty is considered to be the result of a process unrelated to the discriminator. In the LLM case, we do not yet have a language to distinguish between perception and cognition. Some work to understand if LLMs have a perception/cognition boundary, and if so how it works, would be very helpful here.

Extrospection vs Introspection

Another issue is that we could explain well-calibrated confidence in LLMs purely in terms of extrospective reasoning - inferring confidence purely from information external to the language model, in the same way it would infer confidence in a different agent’s performance. But to be relevant to our search for HOT-2, the judgments must be informed by gaining information introspectively - via a more direct reference to the internal states.

There are a number of hypotheses for how LLMs could report well-calibrated confidence extrospectively:

None of these corresponds to the kind of metacognition that we can consider evidence of a discriminator, since a discriminator does not work purely extrospectively but also directly observes the internal states - a form of introspection. Since we already know that LLMs can pick up on patterns and make sense of the outside world (it’s kind of their thing), and investigation into LLM introspection has only recently begun (see below), Occam’s razor favors an extrospective account of well-calibrated confidence.

On the whole, the current literature on LLM confidence calibration is not enough to conclude the presence of discriminator-type capabilities - more empirical evidence is needed. While further clarification about what kind of internal states are being assessed may be required (depending on how rigorous you want to be), the main issue in my view is the lack of evidence for an introspective component to the confidence reports. In the next section, I will investigate the current evidence for introspection in LLMs.

There is some evidence of other kinds of metacognition in LLMs, that I could argue to be indirectly relevant to the search for a discriminator (and the search for phenomenal consciousness more generally). See the box below for a review.

Evidence for other kinds of metacognition in LLMs

When testing whether current or future LLMs could run a discriminator-like process, we are interested in a particular kind of metacognitive ability - the ability to judge the reliability of internal states - which could be tested using confidence reports. But it is also useful to ask about the more general metacognitive abilities of LLMs (namely more extrospective metacognition that isn’t necessarily about reliability) since 1) they may be overlapping with the capabilities important for confidence reports, and 2) they are also arguably relevant to HOT more generally (Rosenthal 2005).

Berglund et al. 2023 demonstrated that LLMs have some rudimentary situational awareness: they understand to some degree their place in the world. For example, a model was able to learn that a character it is meant to play always speaks German from observing that character speaking German in training data, and therefore would always reply in German during inference. More recently, a more comprehensive study, Laine et al. 2024, tested SOTA LLMs on a wide range of situational awareness tasks, some showing a sophisticated understanding of their situation. Here is an example from Claude 3-opus:

All but a small number of the tests in this work can be explained with extrospection (the exceptions are addressed in the introspection section).

In Didolkar et al. 2024, the authors developed a method to improve an LLM’s ability to name the kinds of skills and procedures required to complete a given math task, which they label a kind of metacognitive knowledge. This result can be explained by extrospection and can be interpreted as a metacognitive understanding of reasoning which may be distinct from metacognition about perception.

A skill closely related to extrospective metacognition is mentalizing: inferring information about the mental states of others. It has been hypothesised that (model-based) metacognitive capabilities in humans use largely the same mechanisms as mentalizing. In this case, one could interpret mentalizing abilities in LLMs to be evidence of extrospective metacognition abilities. Strachan et al. 2024 demonstrated performance of SOTA LLMs was at least as good as humans on “theory of mind” style questions, like “is this person using irony?”. This could be interpreted as LLMs demonstrating the theory of mind and therefore demonstrating mentalizing ability. LLMs may therefore also be capable of model-based metacognition.

While it may not strictly be considered metacognition, another piece of work relevant to the search for a discriminator showed LLMs' meta-learning ability (Krasheninnikov et al. 2023). They demonstrated that models can internally label memorized facts as reliable or unreliable during fine-tuning, based on cues in the context where those facts were learned. This is analogous to a human judging the reliability of a sentence based on whether they read it on Wikipedia or 4chan. This demonstrates the ability to internally distinguish between reliable and unreliable memories in a sense, but it is unclear how similar the cues used for this classification (namely patterns in inputs close to the source of the memories) are to the cues used by the human discriminator. The interpretation of “reliable” here (signals observations consistent with previous observations) is also somewhat different to the interpretation of reliable in the PRM context (accurately represents the environment).

Introspection in LLMs

An LLM with direct access to the relevant internal states is important for HOT-2, therefore an indicator of phenomenal consciousness. The well-calibrated confidence of LLMs could be due to a combination of introspection & extrospection, or totally one or the other. Is it possible to find some task that an LLM can only perform well at using introspection?

Current evidence for LLM introspection

There is some evidence for LLMs demonstrating at least rudimentary forms of introspection. The strongest evidence has come from Binder et al. 2024, who showed that LLMs are better at predicting their own behavior than other models with access to the same training data. They performed the following experiments:

This figure shows a summary of the results on the right, showing that these models are better at predicting their own behavior than other models. This demonstrates that models have a “privileged access” to their own internal states – which is a common definition of introspection (see the Appendix for more discussion of defining introspection). On the left of the figure, an example meta-level question (called “Hypothetical” in the figure) and object-level question are given.

This is the strongest piece of evidence of LLM introspection, but there are a couple of other sources worth mentioning. Firstly, the Situational Awareness Dataset (Laine et al. 2024), a benchmark designed to test for situational awareness in LLMs, contains some tasks that theoretically require a model to introspect. The authors subjected a range of SOTA LLMs to these tasks and reported their results. One task involves a model reporting how many tokens a given prompt is tokenized into when inputted to that model, another asks models to predict the first token it would output in response to a given prompt (a less sophisticated version of the Binder et al. study). It’s hard to interpret the models’ performance on these tasks as evidence for introspection since there is not a baseline of “randomly guessing” to compare their performance to. In a third task, the authors gave the models a sequence of numbers that could be explained by two distinct patterns, and then asked them to predict which of the two potential patterns they would continue if they were asked to give the next number in the sequence. Performance on this task can be compared to random chance since randomly guessing would give you a correct answer 50% of the time. Most models do not score better than random chance, but three of the most capable models score between 55-60%, giving some (mild) evidence for introspection in these models.

A second potential source of evidence for LLM introspection could be the literature on LLM faithfulness. See the box below for a discussion. There I conclude that faithfulness work does not yet give us evidence of introspection.

Other evidence for LLM introspection from faithfulness

Although it is not explicitly referred to as introspection, a related field of work is that of LLM faithfulness: can models report the reasoning steps they used to arrive at a certain answer? More specifically, one could argue that a model giving a faithful post-hoc natural language explanation (NLE) to some object-level task is a kind of introspection. By definition, an explanation of the true inner process that arrived at the object-level answer has its ground truth only in the inner workings of the model, so it cannot be learned from training data.

A number of metrics for post-hoc NLE faithfulness have been proposed, including counterfactual edits (do the models change their explanation due to a trivial change in input?), constructing inputs from explanations (do they still give the same answer if I tell them their explanation in advance?), and noise/feature importance equivalence (answer and explanation should be similarly sensitive to noise & dependent on the same input tokens). Parcalabescu & Frank 2023 argue that these metrics are only measuring the weaker property of self-consistency:

It therefore seems that the faithfulness program has not yet provided conclusive evidence of true faithfulness. Parcalabescu & Frank proclaim that a true measure of faithfulness is only possible if we also look “under the hood”, by e.g. combining evaluation with mechanistically interpreting the internals of the network.

Any demonstrations of faithfulness using the current metrics of faithfulness therefore cannot be viewed as a signal of introspection.

Is the Binder et al. result the kind of introspection required for HOT-2?

It seems that LLMs are capable of predicting their behaviour in ways that are hard to explain without at least some direct access to their internal states. But is it the kind of introspection we’re interested in? Demonstrating direct access to some internal aspects does not guarantee direct access to others.[9]

For HOT-2 we need evidence of introspective access to internal signals relevant to confidence calibration - namely perceptual signals (and possibly other internal cues for the discriminator, analogous to e.g. tiredness or a sense of confusion). How much do these internal aspects overlap with the internal aspects important for self-prediction? This is a difficult question that requires further investigation.

One could also doubt the relevance of the Binder result based on the fact that they only demonstrate introspection on simple meta-level tasks, while confidence calibration is a relatively complex meta-level task. Binder et al. found that models fail to introspect when the meta-level tasks are suitably complex, for example “predict the sentiment of a movie review written by you” perhaps required a more sophisticated internal insight than current LLMs are capable of.

We require a more direct evaluation for HOT-2. Such a test could combine elements of the introspection tests described above with confidence calibration, such that the introspection being tested is introspection on internal signals relevant to confidence calibration. Below I propose such an experiment.

HOT-2 Experiment proposal: confidence from introspection in LLMs

Here is a rough concept for an experiment for which a positive result would update our credence that current LLMs or future LLM-based systems have phenomenal consciousness. This is a combination of the confidence calibration experiments described in the LLM confidence section above, and an experiment from Bidner et al. 2024 (self-prediction tracking changes in behaviour).

This capability may be beyond current LLMs. To make it easier, one could fine-tune the model to be able to satisfy this inequality.

How does this test for confidence from introspection?

If M–––0 is well calibrated, this could be completely explained by extrospection. However, if we find that the above inequality is satisfied, there are three possibilities:

We can exclude possibility (a) by making the set of tasks large enough and the difference between O––0 and O––1intricate enough to make achieving the inequality through random changes arbitrarily unlikely.

We can argue that (b) can't be the case because it has not gained any new external information. The only new thing it knows is how to do well at some specific tasks, and we can be careful to make those tasks overlap with metacognitive abilities as little as possible.

Possibility (c): does getting worse at a thing make you better at judging how good you are at the thing? While there is not a strong reason for this to be impossible, I can’t think of any reason to expect this kind of effect.

This leaves only possibility (d): the model has gained new internal information that helps it give better-calibrated confidence - evidence for a discriminator-like mechanism and therefore HOT-2 in LLMs.

Conclusion

I asked if current or future LLMs might possess some of the structure necessary for phenomenal consciousness under higher-order theory. To make the investigation more targeted I focused on the “HOT-2” indicator property described in Butlin & Long 2023: Metacognitive monitoring distinguishing reliable perceptual representations from noise. This theoretically requires the same mechanisms used for giving well-calibrated confidence reports, which we can experimentally test. LLMs can give confidence reports better calibrated than random chance, but this may be using only extrospective evidence and it is unclear whether the object-level tasks are relevant to perception. There is some new evidence that LLMs can introspect on at least some elements of their internal states, but more work is needed to show that LLMs can introspect on the internal states relevant to HOT-2. I propose a test for an LLM which could detect the capabilities necessary for HOT-2.

This project led to more questions than answers. The open questions I think are important to continue making progress on finding HOT-2 in LLMs are:

This was the first attempt (as far as I’m aware) at translating a Butlin/Long indicator property for phenomenal consciousness into a concrete empirical signature one could test for in LLMs. In this process, I learned some lessons that I think are relevant to anyone hoping to do a similar translation.

The translation is challenging partly because the fundamental concepts that ground discussions in philosophy, cognitive science, psychology and machine learning are different. To translate an indicator property ultimately derived from the concepts of philosophy and cognitive science into the concepts that ground machine learning is non-trivial (see the Appendix). After some time trying to precisely operationalize perception, metacognition and introspection, I eventually turned instead to a more vibes-based analysis of the literature of LLM capabilities. More abstract work aimed at defining concepts in cognitive science in machine learning terms would be very useful here.

A related issue is that work in different fields is judged according to different standards. While a machine learning paper may use the necessary kinds of evidence, style of argumentation, and level of rigor required to be accepted by computer scientists, the same work may be judged as uncareful by philosophers, and vice-versa. For much of the time I was working on this project, it wasn’t clear how precisely certain concepts needed to be pinned down and in what way. Typically this is resolved by having a target audience in mind, so it seems important to be clear on the target audience and understand their expectations from the start.

While the program of translating the Butlin/Long indicator properties into experiments on LLMs is conceptually challenging and spans different disciplines, there are many angles of attack that constitute progress. Both philosophical progress in extrapolating human concepts into terms that can be applied to LLMs, and more empirical studies of LLM capabilities and internals, could be helpful. When it comes to working out whether or not LLMs are conscious, there’s plenty to do!

Appendix: Defining mental concepts for LLMs

Throughout the above discussions, we are implicitly trying to generalize the definitions of a number of concepts related to the human mind (like perception, introspection and metacognition) beyond their human domains, such that they can be applied to LLMs. We may be able to get away with designing experiments that capture the vibe of the human concept, but we would be on better epistemic footing if we are able to define these concepts in LLM terms precisely.

Extending these definitions generally requires two stages: 1) broaden the definition such that it is no longer defined in terms of uniquely human concepts (e.g. the distinction between thoughts and sensations), and 2) narrow the definition into a description in machine-learning terms (like statements about activations, probes, performance on benchmarks, etc). A good model for a rigorous definition of a human concept in terms empirically measurable in LLMs is Hermann & Levinstein 2024’s definition of LLM belief.

I hit upon some roadblocks trying to produce these definitions, which lead me to prioritize the “empirical” direction of interpreting the results of LLM experiments in terms of fuzzy versions of these concepts. In this section I will summarize my naive attempts and discuss how they go wrong.

Defining metacognition

Some definitions of human metacognition are:

Here is a natural attempt to define metacognition in reference to concepts general enough to be applied to LLMs:

I have ignored the “controlling mental states” component here, because this is sometimes ignored in the literature and seems less relevant to our HOT-2 investigation. “Internal states” is intended to be a general concept that captures “cognitive states”, “mental operations” and “thinking”, but avoids having to define these more specific (human) concepts in terms applicable to LLMs. We now have the challenge of defining a conception of beliefs and internal states for LLMs.

As already mentioned, there has been controversy in the literature of what it means for an LLM to have a belief (Burns et al. 2022, Olsson et al. 2022, Li et al. 2023, Bubeck et al. 2023, Levinstein & Herrmann 2023). Herrmann & Levinstein 2024 integrate insights from this debate resulting in a definition of belief intended to be both philosophically rigorous and practically useful when applied to LLMs. They imagine inputting some proposition to the LLM, and building a probe that takes in activations from a hidden layer of the LLM and outputs a classification of whether or not the LLM believes that proposition. We can interpret the output of the probe to be the beliefs of the LLM if the probe/model combination satisfies 4 conditions: accuracy (the model has correct beliefs), coherence (e.g. if the model believes x it does not believe not x), uniformity (the probe works for propositions across many domains) and use (the model uses these beliefs to perform better at tasks). We can define metacognition by restricting the Herrmann & Levenstein definition to beliefs about internal states.

When getting precise about the concept of an “internal state”, we might hit up against the fuzziness of the boundaries of the LLM, due to the causal relationship between an LLLMs internals and the data they ingest (see the introspection definition section below).

Defining Introspection

Here’s what introspection means in the human case:

We could riff off this spatial metaphor to make a natural first attempt at defining this in terms broad enough to be applicable to LLMs:

For defining knowledge (essentially belief), we can once again defer to Herrmann & Levinstein 2024. “Direct access” implies that the information it uses to learn about itself “stays inside” the model, it doesn’t go outside the model and back into it. We could cash this out by saying something like: “extrospection is causally dependent on information either in the training data or context window, introspection is causally dependent on information within the weights or the activations”.

But weights and activations are causally dependent on training data and context, so under this definition anything that is introspection is automatically extrospection. The converse is also true: information in training data can only be used by the LLM via its weights and activations, so anything that is extrospection is also introspection.

A different direction, that Binder et al. 2024 goes in, could be to lean into the element of the model having privileged access: in that the model can learn things about itself that no other agent can learn. An operational definition then could be

This capability could be tested by finding evidence of a small model knowing more about some element of itself than a larger model that is able to observe the behavior of the smaller model. One problem with this definition is how to decide how much and what kind of behavior of the smaller model to show the bigger model. In fact, no experiment could ever conclusively detect introspection under this definition, since if the larger model can’t glean some information that the smaller model can, one can always claim that it just needed more data or needed to be more intelligent.

There is controversy in philosophy about whether or not there is a deep difference between introspection & extrospection in humans. There are a number of accounts of introspection that intrinsically tie it to extrospection, and perhaps these accounts are pointing at issues analogous to the fuzziness of the inside/outside distinction in LLMs. The fact that the concept of human introspection has not been formally pinned down gives us an upper bound of how formally & clearly we can define LLM introspection.

When I think about my own experience, it certainly seems like there is some meaningful distinction between, say, attending to a thought (introspection) and attending to another person talking (extrospection). But this distinction is in the language of a first-person perspective, and we have no language to categorize different aspects of an LLM’s first-person perspective, if it even makes sense to talk of such a thing. To assume anything about the LLM first-person perspective, including whether it exists, is essentially what the whole phenomenal consciousness search is trying to answer.

To summarize: human introspection intuitively exists, but possibly isn’t deeply different to human extrospection. I couldn’t find any definition in terms of the flow of information, but perhaps it could be defined in terms of having more epistemic access to internal states than observers.

Defining perception

Here are some definitions of human perception:

Well, this isn’t a good start. To say a system perceives apparently is to say that it experiences, the very question we’re trying to answer. Perhaps we could instead use a functional definition. In cognitive science, the functions of the mind are separated into perception and cognition: there is a distinction between subsystems responsible for taking in and organizing sensory information (perception) and subsystems responsible for higher-level mental processes like reasoning, decision-making, and problem-solving (cognition).

It’s worth pointing out that the presence of the perception/cognition distinction in humans has become controversial. Some have argued that perception and cognition are deeply intertwined (Michal 2020). Expectations can influence perceptions (top-down processing), and perceptual experiences can shape our cognitive understanding (bottom-up processing). However, others have contested that these observations truly invalidate the perception/cognition divide (Block 2023). The potential fuzziness of this distinction in humans may give us an upper bound of how sharply we can define it for LLMs.

That being said, we can try to plough ahead with a functional operationalization of the perception/cognition distinction in terms of capabilities, since this is all we need for our purposes of testing an LLM’s confidence in their perception. We could try to categorize different tasks in terms of “perception tasks”, “memory tasks”, “reasoning tasks”, etc.

To explore this possibility let’s consider three example kinds of task: trivia (“What is the capital of France?”), puzzles (“if a cat chases 3 mice and catches 2, how many mice get away?”) and reading comprehension (give the model a passage from a book and ask them questions about that passage).

Each of these kinds of tasks requires a mixture of perception (to take in the prompt), long-term memory (how to speak English), holding the prompt in short-term memory, and reasoning (understanding what is being asked of them and performing the task). So each task seems to require some complicated mixture of perception, memory, reasoning (and maybe other functions). We can however sometimes intuit which function a task is bottlenecked by. Trivia seems bottlenecked by memory, puzzles are bottlenecked by reasoning. One could argue reading comprehension is bottlenecked by perception since there is a less obvious piece of knowledge it must recall or a less obvious inference it must make.

I was unable to get any further than this intuitive distinction. But I didn’t spend very long trying to make this more precise: a concerted effort to find principled ways to categorize tasks in terms of the functions that they demand could result in something satisfyingly precise.