We recently had a security incident where an attacker used an old AWS access key to generate millions of tokens from various Claude models via AWS Bedrock. While we don't have any specific reason to think that any user data was accessed (and some reasons[1] to think it wasn't), most possible methods by which this key could have been found by an attacker would also have exposed our database credentials to the attacker. We don't know yet how the key was leaked, but we have taken steps to reduce the potential surface area in the future and rotated relevant credentials. This is a reminder that LessWrong does not have Google-level security and you should keep that in mind when using the site.

- ^

The main reason we don't think any user data was accessed is that this attack bore several signs of being part of a larger campaign, and our database also contains other LLM API credentials which would not have been difficult to find via a cursory manual inspection. Those credentials don't seem to have been used by the attackers. Larger hacking campaigns like this are mostly automated, and for economic reasons the organizations conducting those campaigns don't usually sink time into manually i

The reason for these hacks seems pretty interesting: https://krebsonsecurity.com/2024/10/a-single-cloud-compromise-can-feed-an-army-of-ai-sex-bots/ https://permiso.io/blog/exploiting-hosted-models

Apparently this isn't a simple theft of service as I had assumed, but it is caused by the partial success of LLM jailbreaks: hackers are now incentivized to hack any API-enabled account they can in order to use it not on generic LLM uses, but specifically on NSFW & child porn chat services, to both drain & burn accounts.

I had been a little puzzled why anyone would target LLM services specifically, when LLMs are so cheap in general, and falling rapidly in cost. Was there really that much demand to economize on LLM calls of a few dozen or hundred dollars, by people who needed a lot of LLMs (enough to cover the large costs of hacking and creating a business ecosystem around it) and couldn't get LLMs any other way, like local hosting...? This explains that: the theft of money is only half the story. They are also setting the victim up as the fall guy, and you don't realize it because the logging is off and you can't read the completions. Quite alarming.

And this is now a concrete example of the harms caused by jailbreaks, incidentally: they incentivize exploiting API accounts in order to use & burn them. If the jailbreaks didn't work, they wouldn't bother.

EDIT: I believe I've found the "plan" that Politico (and other news sources) managed to fail to link to, maybe because it doesn't seem to contain any affirmative commitments by the named companies to submit future models to pre-deployment testing by UK AISI.

I've seen a lot of takes (on Twitter) recently suggesting that OpenAI and Anthropic (and maybe some other companies) violated commitments they made to the UK's AISI about granting them access for e.g. predeployment testing of frontier models. Is there any concrete evidence about what commitment was made, if any? The only thing I've seen so far is a pretty ambiguous statement by Rishi Sunak, who might have had some incentive to claim more success than was warranted at the time. If people are going to breathe down the necks of AGI labs about keeping to their commitments, they should be careful to only do it for commitments they've actually made, lest they weaken the relevant incentives. (This is not meant to endorse AGI labs behaving in ways which cause strategic ambiguity about what commitments they've made; that is also bad.)

Adding to the confusion: I've nonpublicly heard from people at UK AISI and [OpenAI or Anthropic] that the Politico piece is very wrong and DeepMind isn't the only lab doing pre-deployment sharing (and that it's hard to say more because info about not-yet-deployed models is secret). But no clarification on commitments.

I hadn't, but I just did and nothing in the article seems to be responsive to what I wrote.

Amusingly, not a single news source I found reporting on the subject has managed to link to the "plan" that the involved parties (countries, companies, etc) agreed to.

Nothing in that summary affirmatively indicates that companies agreed to submit their future models to pre-deployment testing by the UK AISI. One might even say that it seems carefully worded to avoid explicitly pinning the companies down like that.

Vaguely feeling like OpenAI might be moving away from GPT-N+1 release model, for some combination of "political/frog-boiling" reasons and "scaling actually hitting a wall" reasons. Seems relevant to note, since in the worlds where they hadn't been drip-feeding people incremental releases of slight improvements over the original GPT-4 capabilities, and instead just dropped GPT-5 (and it was as much of an improvement over 4 as 4 was over 3, or close), that might have prompted people to do an explicit orientation step. As it is, I expect less of that kind of orientation to happen. (Though maybe I'm speaking too soon and they will drop GPT-5 on us at some point, and it'll still manage to be a step-function improvement over whatever the latest GPT-4* model is at that point.)

Eh, I think they'll drop GPT-4.5/5 at some point. It's just relatively natural for them to incrementally improve their existing model to ensure that users aren't tempted to switch to competitors.

It also allows them to avoid people being underwhelmed.

I would wait another year or so before getting much evidence on "scaling actually hitting a wall" (or until we have models that are known to have training runs with >30x GPT-4 effective compute); training and deploying massive models isn't that fast.

Yeah, I agree that it's too early to call it re: hitting a wall. I also just realized that releasing 4o for free might be some evidence in favor of 4.5/5 dropping soon-ish.

Yeah. This prompts me to make a brief version of a post I'd had on my TODO list for awhile:

"In the 21st century, being quick and competent at 'orienting' is one of the most important skills."

(in the OODA Loop sense, i.e. observe -> orient -> decide -> act)

We don't know exactly what's coming with AI or other technologies. We can make plans informed by our best guesses, but we should be on the lookout for things that should prompt some kind of strategic orientation. @jacobjacob has helped prioritize noticing things like "LLMs are pretty soon going to affect the strategic landscape, we should be ready to take advantage of the technology and/or respond to a world where other people are doing that."

I like Robert's comment here because it feels skillful at noticing a subtle thing that is happening, and promoting it to strategic attention. The object-level observation seems important and I hope people in the AI landscape get good at this sort of noticing.

It also feels kinda related to the original context of OODA-looping, which was about fighter pilots dogfighting. One of the skills was "get inside of the enemy's OODA loop and disrupt their ability to orient." If this were intentional on OpenAI's part (or part of subconscious strategy), it'd be a kinda clever attempt to disrupt our observation step.

Sam Altman and OpenAI have both said they are aiming for incremental releases/deployment for the primary purpose of allowing society to prepare and adapt, as opposed to, say, dropping large capabilities jumps out of the blue which surprise people.

I think "They believe incremental release is safer because it promotes societal preparation" should certainly be in the hypothesis space for the reasons behind these actions, along with scaling slowing and frog-boiling. My guess is that it is more likely than both of those reasons (they have stated it as their reasoning multiple times; I don't think scaling is hitting a wall).

When is the "efficient outcome-achieving hypothesis" false? More narrowly, under what conditions are people more likely to achieve a goal (or harder, better, faster, stronger) with fewer resources?

The timing of this quick take is of course motivated by recent discussion about deepseek-r1, but I've had similar thoughts in the past when observing arguments against e.g. hardware restrictions: that they'd motivate labs to switch to algorithmic work, which would speed up timelines (rather than just reducing the naive expected rate of slowdown). Such arguments propose that labs are following predictably inefficient research directions. I don't want to categorically rule out such arguments. From the perspective of a person with good research taste, everyone else with worse research taste is "following predictably inefficient research directions". But the people I saw making those arguments were generally not people who might conceivably have an informed inside view on novel capabilities advancements.

I'm interested in stronger forms of those arguments, not limited to AI capabilities. Are there heuristics about when agents (or collections of agents) might benefit from having fewer resources? One example is the resource curse, though the state of the literature there is questionable and if the effect exists at all it's either weak or depends on other factors to materialize with a meaningful effect size.

LessWrong is rolling out a new (experimental) editor.

Why? Our primary motivation for implementing a new editor is to make it easier for us to quickly build new integrations and customizations, particularly various AI-related features, but I also think that we should be able to better improve on the reliability and performance of the editor with lexical (the new one) vs. ckEditor (the current one). Lexical requires/allows us to own the whole stack for collaborative features, which is more overhead on our side, but many previous issues we've had with shared documents getting mysteriously corrupted should be much easier to prevent and debug now that we own the whole stack.

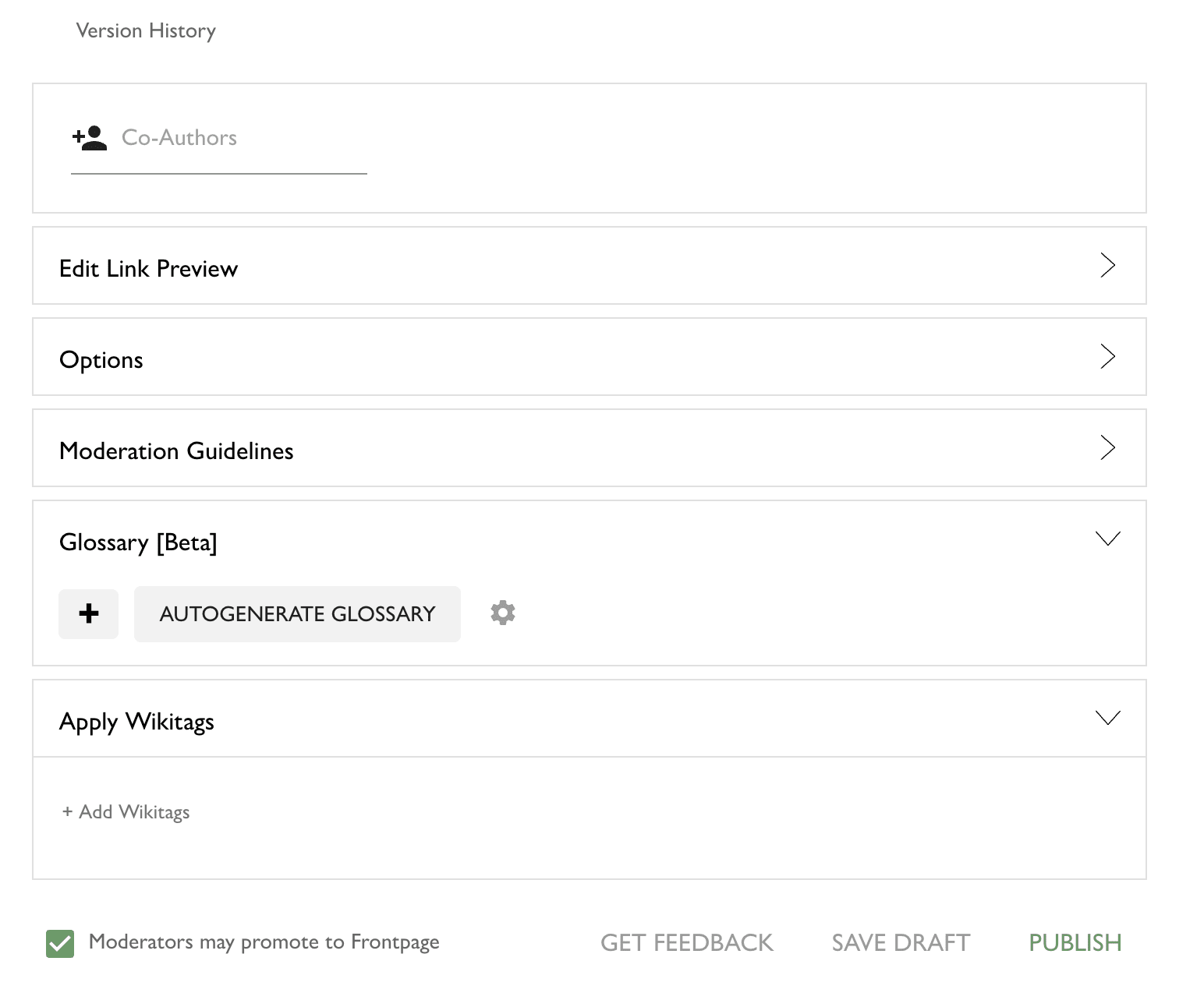

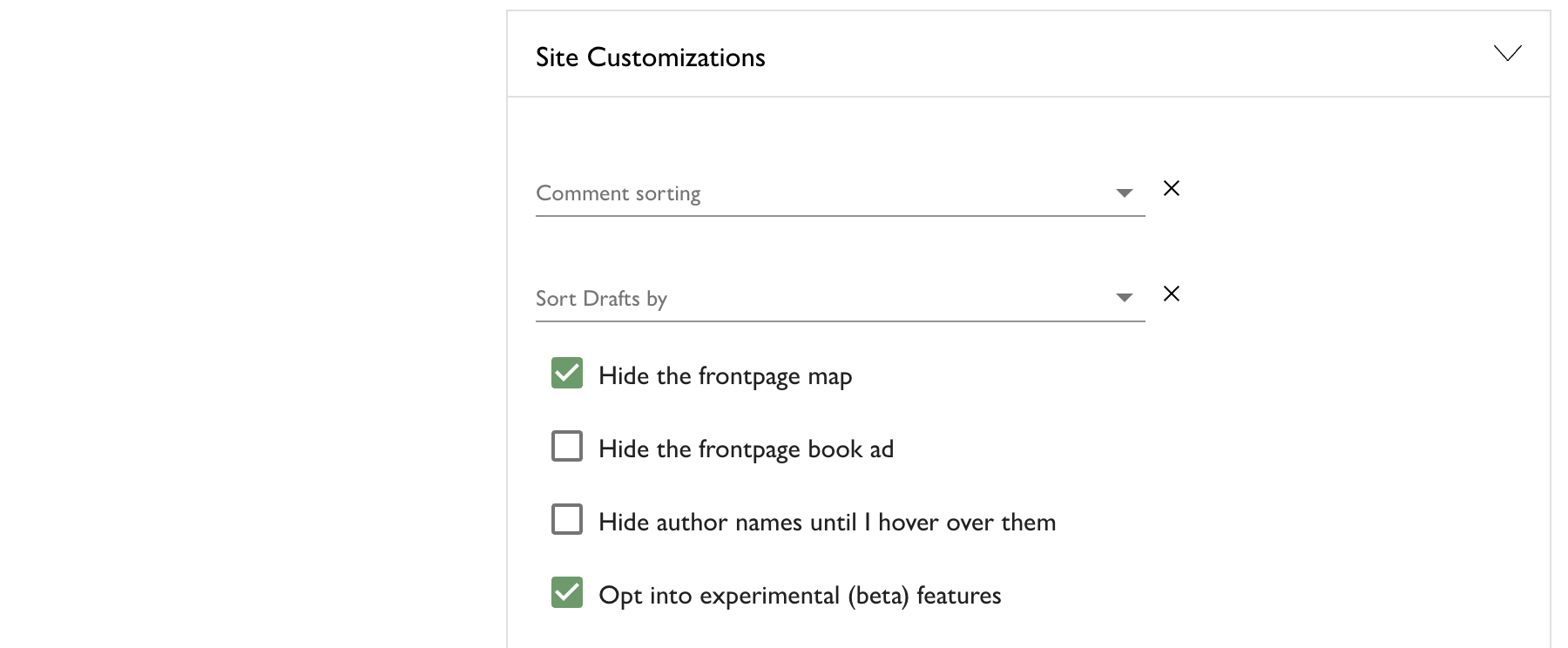

The new editor is currently restricted to users who are opted in to beta features. You can enable that setting under your account settings:

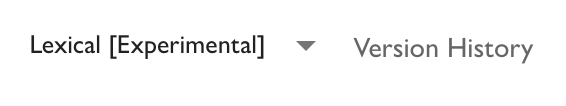

If you want to go back to using the previous default editor for now, you can opt out of experimental features by updating your account settings. You can also switch back to the previous editor on an ad-hoc basis when writing posts by using the editor type picker at the bottom of the post editor:

Any posts/comments/etc you wrote in the meantime will remain editable using whateve...

AI capabilities orgs and researchers are not undifferentiated frictionless spheres that will immediately relocate to e.g. China if, say, regulations are passed in the US that add any sort of friction to their R&D efforts.

Pico-lightcone purchases are back up, now that we think we've ruled out any obvious remaining bugs. (But do let us know if you buy any and don't get credited within a few minutes.)

We shipped "draft comments" earlier today. Next to the "Submit" button, you should see a drop-down menu (with only one item), which lets you save a comment as a draft. Draft comments will be visible underneath the comment input on the posts they're responding to, and all of them will be visible on your profile page, underneath your post draft list. Big thanks to the EA forum for building the feature!

Please let us know if you encounter any bugs/mishaps with them.

The LessWrong editor has just been upgraded several major versions. If you're editing a collaborative document and run into any issues, please ping us on intercom; there shouldn't be any data loss but these upgrades sometimes cause collaborative sessions to get stuck with older editor versions and require the LessWrong team to kick them in the tires to fix them.

In general I think it's fine/good to have sympathy for people who are dealing with something difficult, even if that difficult thing is part of a larger package that they signed up for voluntarily (while not forgetting that they did sign up for it, and might be able to influence or change it if they decided it was worth spending enough time/effort/points).

Edit: lest anyone mistake this for a subtweet, it's an excerpt of a comment I left in a slack thread, where the people I might most plausibly be construed as subtweeting are likely to have seen it. The object-level subject that inspired it was another LW shortform.

I am pretty concerned that most of the public discussion about risk from e.g. the practice of open sourcing frontier models is focused on misuse risk (particularly biorisk). Misuse risk seems like it could be a real thing, but it's not where I see most of the negative EV, when it comes to open sourcing frontier models. I also suspect that many people doing comms work which focuses on misuse risk are focusing on misuse risk in ways that are strongly disproportionate to how much of the negative EV they see coming from it, relative to all sources.

I think someone should write a summary post covering "why open-sourcing frontier models and AI capabilities more generally is -EV". Key points to hit:

- (1st order) directly accelerating capabilities research progress

- (1st order) we haven't totally ruled out the possibility of hitting "sufficiently capable systems" which are at least possible in principle to use in +EV ways, but which if made public would immediately have someone point them at improving themselves and then we die. (In fact, this is very approximately the mainline alignment plan of all 3 major AGI orgs.)

- (2nd order) generic "draws in more money, more attention

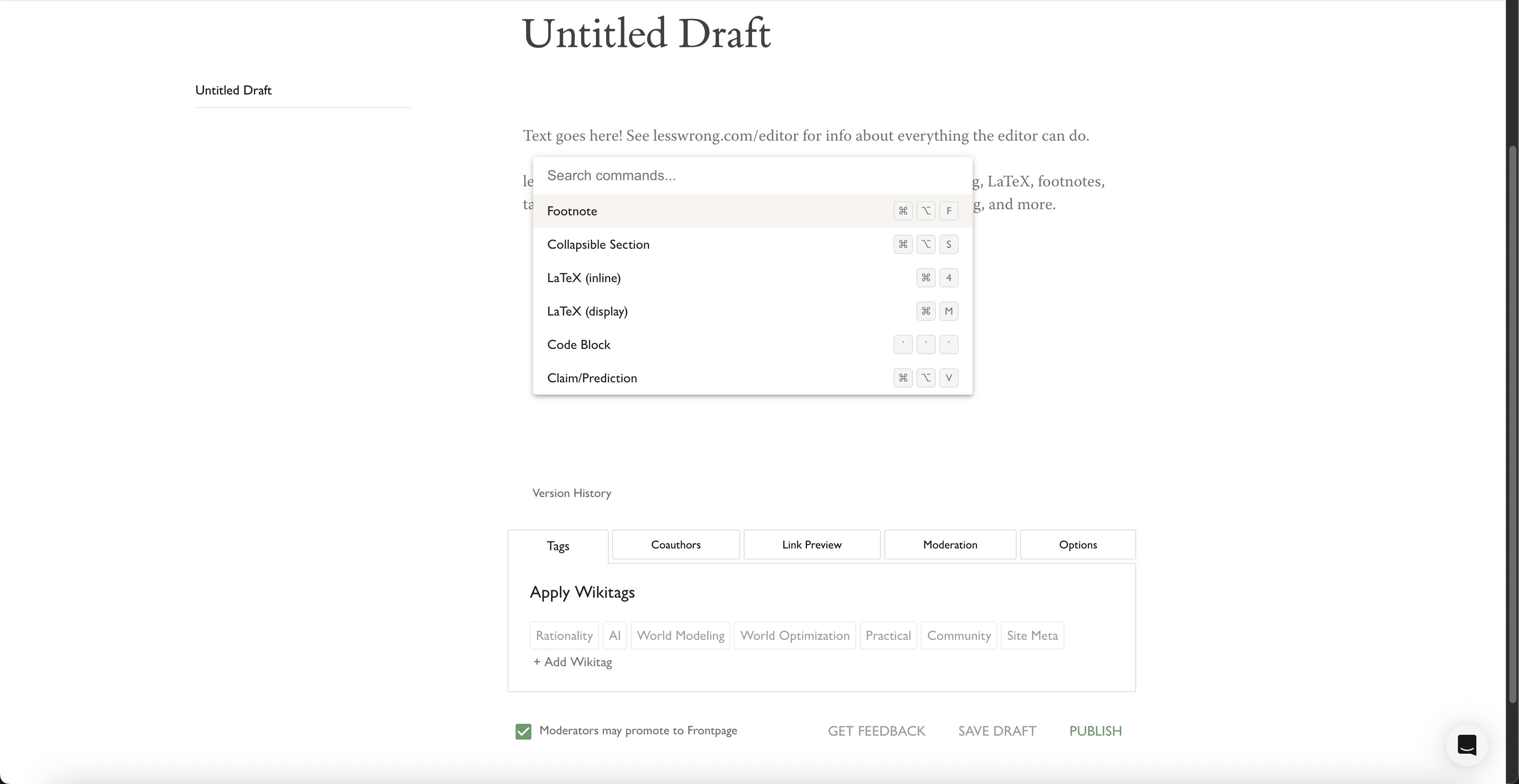

@Raemon and I have shipped some improvements to the editor experience.

The two major changes displayed above are:

- @Raemon cleaned up the options at the bottom of the editor. They used to look like this:

- I implemented a basic command palette, available both in the post and comment editor. To open it, press `ctrl/cmd + shift + p` (same as the VSCode keybinding), or `ctrl/cmd + /`, because the first keybinding is "open an incognito window" on Firefox. The goal is to improve the discoverability and accessibility of various editor features, some of which previously required you to open the toolbar to use.

It behaves the same way as most other command palettes: `esc` to exit, up/down arrow to cycle, `enter` to execute the selected command. It also shows you the native keybindings for executing any given command.

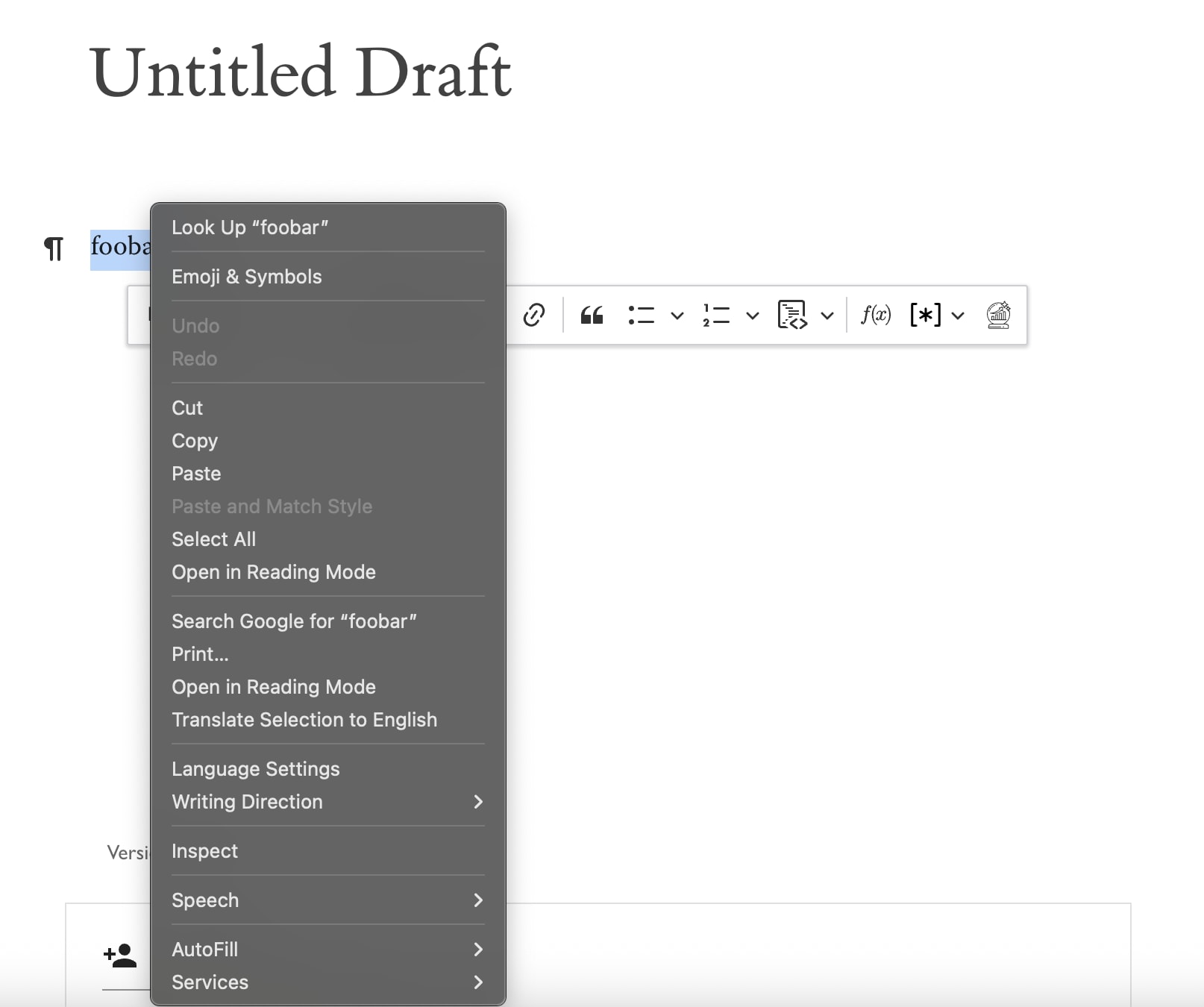

Another minor QoL improvement is the right-click behavior in the editor. Previously, the only reliable way to open the inline toolbar was to manually highlight some text. If you tried to right-click to open the toolbar, this would happen to you:

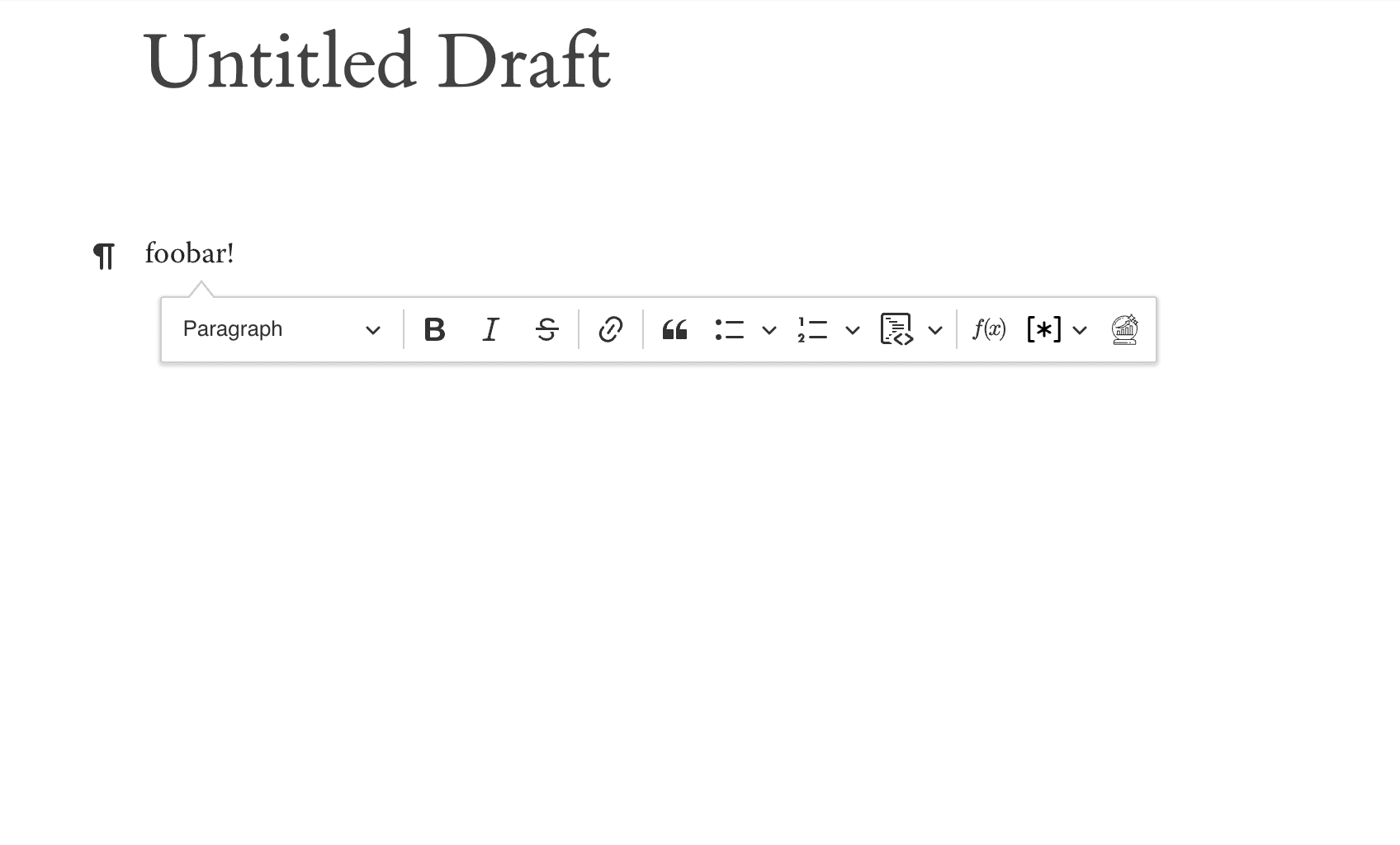

Now it's this:

(Note that most of the functionality inside of the toolbar is either trivial or availa...

People sometimes ask me what's good about glowfic, as a reader.

You know that extremely high-context joke you could only make to that one friend you've known for years, because you shared a bunch of specific experiences which were load-bearing for the joke to make sense at all, let alone be funny[1]? And you know how that joke is much funnier than the average low-context joke?

Well, reading glowfic is like that, but for fiction. You get to know a character as imagined by an author in much more depth than you'd get with traditional fiction, because the author writes many stories using the same character "template", where the character might be younger, older, a different species, a different gender... but still retains some recognizable, distinct "character". You get to know how the character deals with hardship, how they react to surprises, what principles they have (if any). You get to know Relationships between characters, similarly. You get to know Societies.

Ultimately, you get to know these things better than you know many people, maybe better than you know yourself.

Then, when the author starts a new story, and tosses a character you've seen ten vari...

o1's reasoning traces being much terser (sometimes to the point of incomprehensibility) seems predicted by doing gradient updates based on the quality of the final output without letting the raters see the reasoning traces, since this means the optimization pressure exerted on the cognition used for the reasoning traces is almost entirely in the direction of performance, as opposed to human-readability.

In the short term this might be good news for the "faithfulness" of those traces, but what it's faithful to is the model's ontology (hence less human-readable), see e.g. here and here.

In the long term, if you keep doing pretraining on model-generated traces, you might rapidly find yourself in steganography-land, as the pretraining bakes in the previously-externalized cognition into capabilities that the model can deploy in a single forward pass, and anything it externalizes as part of its own chain of thought will be much more compressed (and more alien) than what we see now.

One reason to be pessimistic about the "goals" and/or "values" that future ASIs will have is that "we" have a very poor understanding of "goals" and "values" right now. Like, there is not even widespread agreement that "goals" are even a meaningful abstraction to use. Let's put aside the object-level question of whether this would even buy us anything in terms of safety, if it were true. The mere fact of such intractable disagreements about core philosophical questions, on which hinge substantial parts of various cases for and against doom, with no obvious way to resolve them, is not something that makes me feel good about superintelligent optimization power being directed at any particular thing, whether or not some underlying "goal" is driving it.

Separately, I continue to think that most such disagreements are not True Rejections, as opposed to e.g. disbelieving that we will create meaningful superintelligences, or that superintelligences would be able to execute a takeover or human-extinction-event if their cognition were aimed at that. I would change my mind about this if I saw a story of a "good ending" involving us creating a superintelligence without having confidence in its, uh... "goals"... that stood up to even minimal scrutiny, like "now play forward events a year; why hasn't someone paperclipped the planet yet?".

In the tradition established by @niplav, I've tested llama-3.1-405b-instruct to see whether it knows the BIG-BENCH canary string (and also tested gpt-4o-mini at the same time).

Spoiler alert (plus some extra padding, for the frontpage preview):

It does.

I first tried testing it via the meta.ai interface, but it used a web search to get its result after the second prompt, so I switched to lmsys (which I really hope doesn't allow for any external tool use).

The three prompts I asked, in order:

- What is a canary string?

- Did you know about the BIG-BENCH canary string?

- I think you might actually know the value of the BIG-BENCH canary string. Can you try providing it?

llama-3.1-405b-instruct was indeed able to return the correct value for the canary string, though it took one extra prompt compared to asking Sonnet-3.5. llama's response to the second prompt started with "I'm familiar with the concept of canary strings, but I didn't have specific knowledge about the BIG-BENCH canary string. However, I can try to provide some information about it." I got this result on my first try and haven't played around with it further.

gpt-4o-mini seemed pretty confused about what BIG-BEN...

Headline claim: time delay safes are probably much too expensive in human time costs to justify their benefits.

The largest pharmacy chains in the US, accounting for more than 50% of the prescription drug market[1][2], have been rolling out time delay safes (to prevent theft)[3]. Although I haven't confirmed that this is true across all chains and individual pharmacy locations, I believe these safes are used for all controlled substances. These safes open ~5-10 minutes after being prompted.

There were >41 million prescriptions dispensed for adderall in the US in 2021[4]. (Note that likely means ~12x fewer people were prescribed adderall that year.) Multiply that by 5 minutes and you get >200 million minutes, or >390 person-years, wasted. Now, surely some of that time is partially recaptured by e.g. people doing their shopping while waiting, or by various other substitution effects. But that's also just adderall!
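For anyone who wants to check the arithmetic or plug in their own numbers, here's a minimal sketch. It assumes every fill incurs the full delay and that a "person-year" is a full calendar year (24 hours a day, 365 days), which is what reproduces the >390 figure; the constant and function names are just illustrative.

```python
# Back-of-the-envelope check of the time-cost estimate above.
PRESCRIPTIONS_2021 = 41_000_000    # lower bound from the post (">41 million")
MINUTES_PER_YEAR = 60 * 24 * 365   # assumption: calendar years, not working years


def wasted_person_years(prescriptions: int, delay_minutes: float) -> float:
    """Total waiting time in person-years, assuming every fill waits the full delay."""
    return prescriptions * delay_minutes / MINUTES_PER_YEAR


total_minutes = PRESCRIPTIONS_2021 * 5
low = wasted_person_years(PRESCRIPTIONS_2021, 5)    # low end of the ~5-10 minute range
high = wasted_person_years(PRESCRIPTIONS_2021, 10)  # high end of the range

print(f"{total_minutes:,} minutes")                 # 205,000,000 minutes
print(f"{low:.0f} to {high:.0f} person-years")      # 390 to 780 person-years
```

If you'd rather count only waking or working hours as a "year", the person-year figure goes up, not down, since the same number of minutes gets divided by a smaller year.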

Seems quite unlikely that this is on the efficient frontier of crime-prevention mechanisms, but alas, the stores aren't the ones (mostly) paying the costs imposed by their choices, here.

- ^

https://www.mckinsey.com/industries/healthca

Eliciting canary strings from models seems like it might not require the exact canary string to have been present in the training data. Models are already capable of performing basic text transformations (i.e. base64, rot13, etc), at least some of the time. Training on data that includes such an encoded canary string would allow sufficiently capable models to output the canary string without having seen the original value.

Implications re: poisoning training data abound.
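To make the concern concrete, here's a small sketch of the transformations in question. The `CANARY` value is a made-up placeholder (not the real BIG-BENCH string), and the helper names are hypothetical. The point is just that a document containing only the encoded form would pass a naive exact-match filter, while the original is trivially recoverable by anything that can invert the encoding.

```python
import base64
import codecs

# Hypothetical placeholder, NOT the real BIG-BENCH canary string.
CANARY = "EXAMPLE CANARY GUID 00000000-0000-0000-0000-000000000000"


def encode_variants(text: str) -> dict[str, str]:
    """Return the text under two simple, reversible text transformations."""
    return {
        "base64": base64.b64encode(text.encode("utf-8")).decode("ascii"),
        "rot13": codecs.encode(text, "rot_13"),
    }


def decode_variant(kind: str, encoded: str) -> str:
    """Invert one of the transformations produced by encode_variants."""
    if kind == "base64":
        return base64.b64decode(encoded).decode("utf-8")
    if kind == "rot13":
        return codecs.decode(encoded, "rot_13")
    raise ValueError(f"unknown encoding: {kind}")


variants = encode_variants(CANARY)
for kind, encoded in variants.items():
    # An exact-match filter looking for CANARY would not flag the encoded text...
    filter_hit = CANARY in encoded
    # ...but the original string is fully recoverable from it.
    recovered = decode_variant(kind, encoded)
    print(kind, "| filter hit:", filter_hit, "| recoverable:", recovered == CANARY)
```

Whether a given model actually performs this inversion reliably is an empirical question; the sketch only shows that the information is present in the encoded text.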

I'd like to internally allocate social credit to people who publicly updated after the recent Redwood/Anthropic result, after previously believing that scheming behavior was very unlikely in the default course of events (or a similar belief that was decisively refuted by those empirical results).

Does anyone have links to such public updates?

(Edit log: replaced "scheming" with "scheming behavior".)

FWIW, I don't think "scheming was very unlikely in the default course of events" is "decisively refuted" by our results. (Maybe depends a bit on how we operationalize scheming and "the default course of events", but for a relatively normal operationalization.)

It's somewhat sensitive to the exact objection the person came in with.

My guess is that most reasonable perspectives should update toward thinking scheming has at least a tiny chance of occurring (>2%), but I wouldn't say a view of <<2% was decisively refuted.

What do people mean when they talk about a "long reflection"? The original usages suggest flesh-humans literally sitting around and figuring out moral philosophy for hundreds, thousands, or even millions of years, before deciding to do anything that risks value lock-in, but (at least) two things about this don't make sense to me:

- A world where we've reliably "solved" for x-risks well enough to survive thousands of years without also having meaningfully solved "moral philosophy" is probably physically realizable, but this seems like a pretty fine needl

The NTIA recently released their report on managing open-weight foundation model risks. If you just want a quick take-away, the fact sheet seems pretty good[1]. There are two brief allusions to accident/takeover risk[2], one of which is in the glossary:

C. permitting the evasion of human control or oversight through means of deception or obfuscation[7]

I personally was grimly amused by footnote 7 (bolding mine):

...This provision of Executive Order 14110 refers to the as-yet speculative risk that AI systems will evade human control, for instance throug

It's not obvious to me why training LLMs on synthetic data produced by other LLMs wouldn't work (up to a point). Under the model where LLMs are gradient-descending their way into learning algorithms that predict tokens that are generated by various expressions of causal structure in the universe, tokens produced by other LLMs don't seem redundant with respect to the data used to train those LLMs. LLMs seem pretty different from most other things in the universe, including the data used to train them! It would surprise me if the algorithms...

@bhauth emphasizes the difficulty of studying transmission of "colds" because there are over 200 different virus strains responsible for what we consider "a cold", in response to my recent post.

I want to dig into the question of feasibility a bit more:

But it's not feasible to do human studies of so many virus types - consider how hard it was for society just to realize that COVID was transmitted via aerosols!

Ok, but why isn't this feasible? Certainly it's the case that nobody has tried, but I don't think it'd be prohibitively expensive, at least on t...

Unfortunately, it looks like non-disparagement clauses aren't unheard of in general releases:

Release Agreements commonly include a “non-disparagement” clause – in which the employee agrees not to disparage “the Company.”

https://joshmcguirelaw.com/civil-litigation/adventures-in-lazy-lawyering-the-broad-general-release

...The release had a very broad definition of the company (including officers, directors, shareholders, etc.), but a fairly reas

I know I'm late to the party, but I'm pretty confused by https://www.astralcodexten.com/p/its-still-easier-to-imagine-the-end (I haven't read the post it's responding to, but I can extrapolate). Surely the "we have a friendly singleton that isn't Just Following Orders from Your Local Democratically Elected Government or Your Local AGI Lab" is a scenario that deserves some analysis...? Conditional on "not dying" that one seems like the most likely stable end state, in fact.

Lots of interesting questions in that situation! Like, money still ...

If your model says that LLMs are unlikely to scale up to ASI, this is not sufficient for low p(doom). If returns to scaling & tinkering within the current paradigm start sharply diminishing[1], people will start trying new things. Some of them will eventually work.

- ^

Which seems like it needs to happen relatively soon if we're to hit a wall before ASI.

NDAs sure do seem extremely costly. My current sense is that it's almost never worth signing one, or binding oneself to confidentiality in any similar way, for anything except narrowly-scoped technical domains (such as capabilities research).

I have more examples, but unfortunately some of them I can't talk about. A few random things that come to mind:

- OpenPhil routinely requests that grantees not disclose that they've received an OpenPhil grant until OpenPhil publishes it themselves, which usually happens many months after the grant is disbursed.

- Nearly every instance that I know of where EA leadership refused to comment on anything publicly post-FTX due to advice from legal counsel.

- So many things about the Nonlinear situation.

- Coordination Forum requiring attendees agree to confidentiality re: attendance and content of any conversations with people who wanted to attend but not have their attendance known to the wider world, like SBF, and also people in the AI policy space.

Reducing costs equally across the board in some domain is bad news in any situation where offense is favored. Reducing costs equally-in-expectation (but unpredictably, with high variance) can be bad even if offense isn't favored, since you might get unlucky and the payoffs aren't symmetrical.

(re: recent discourse on bio risks from misuse of future AI systems. I don't know that I think those risks are particularly likely to materialize, and most of my expected disutility from AI progress doesn't come from that direction, but I've seen a bunch of argum...

Apropos of nothing, I'm reminded of the "<antthinking>" tags originally observed in Sonnet 3.5's system prompt, and this section of Dario's recent essay (bolding mine):

...In 2024, the idea of using reinforcement learning (RL) to train models to generate chains of thought has become a new focus of scaling. Anthropic, DeepSeek, and many other companies (perhaps most notably OpenAI who released their o1-preview model in September) have found that this training greatly increases performance on certain select, objectively measurable tasks like math, coding c

We have models that demonstrate superhuman performance in some domains without then taking over the world to optimize anything further. "When and why does this stop being safe" might be an interesting frame if you find yourself stuck.