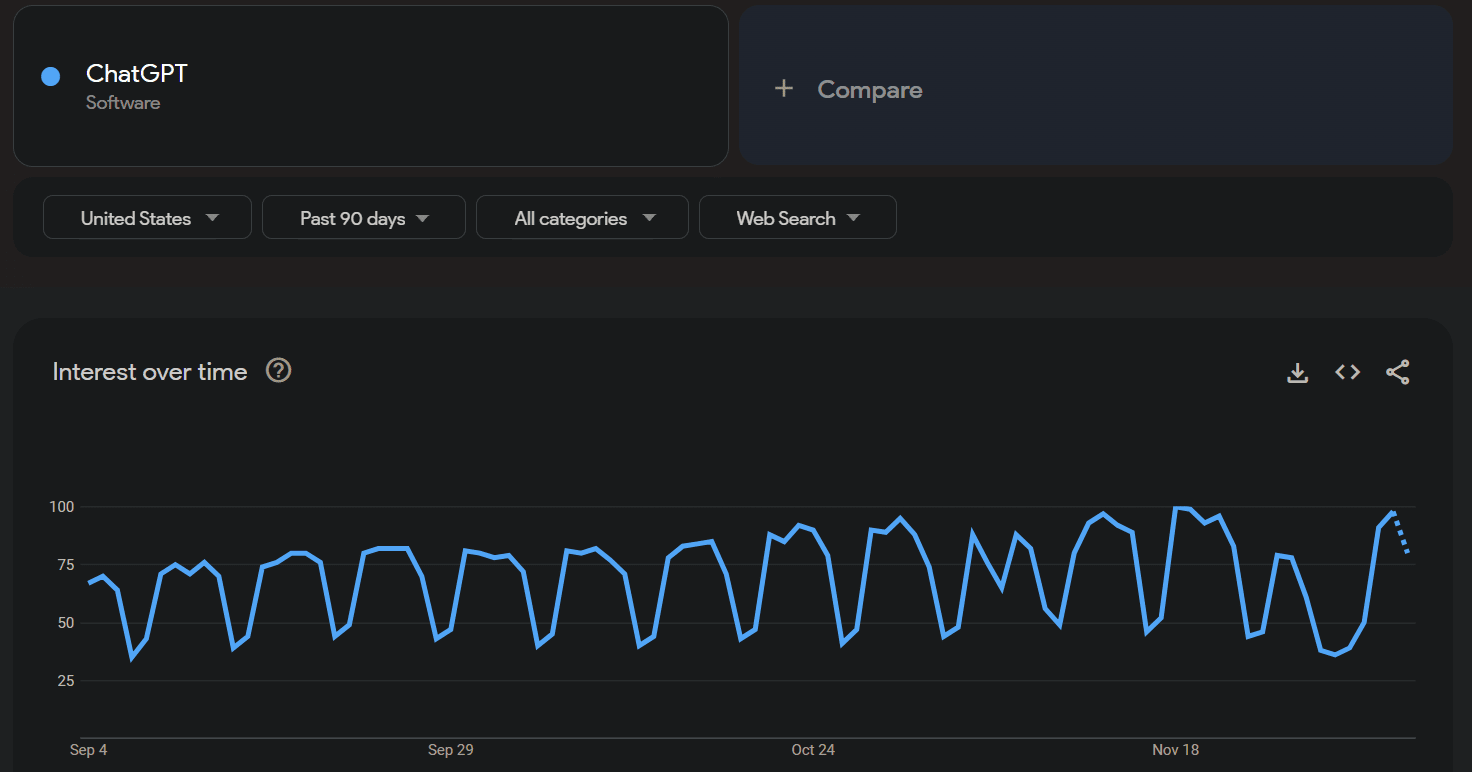

Was looking up Google Trends lines for ChatGPT and noticed a cyclical pattern:

Where the dips are weekends, meaning it's mostly used by people in the workweek. I mostly expect this is students using it for homework. This is substantiated by two other trends:

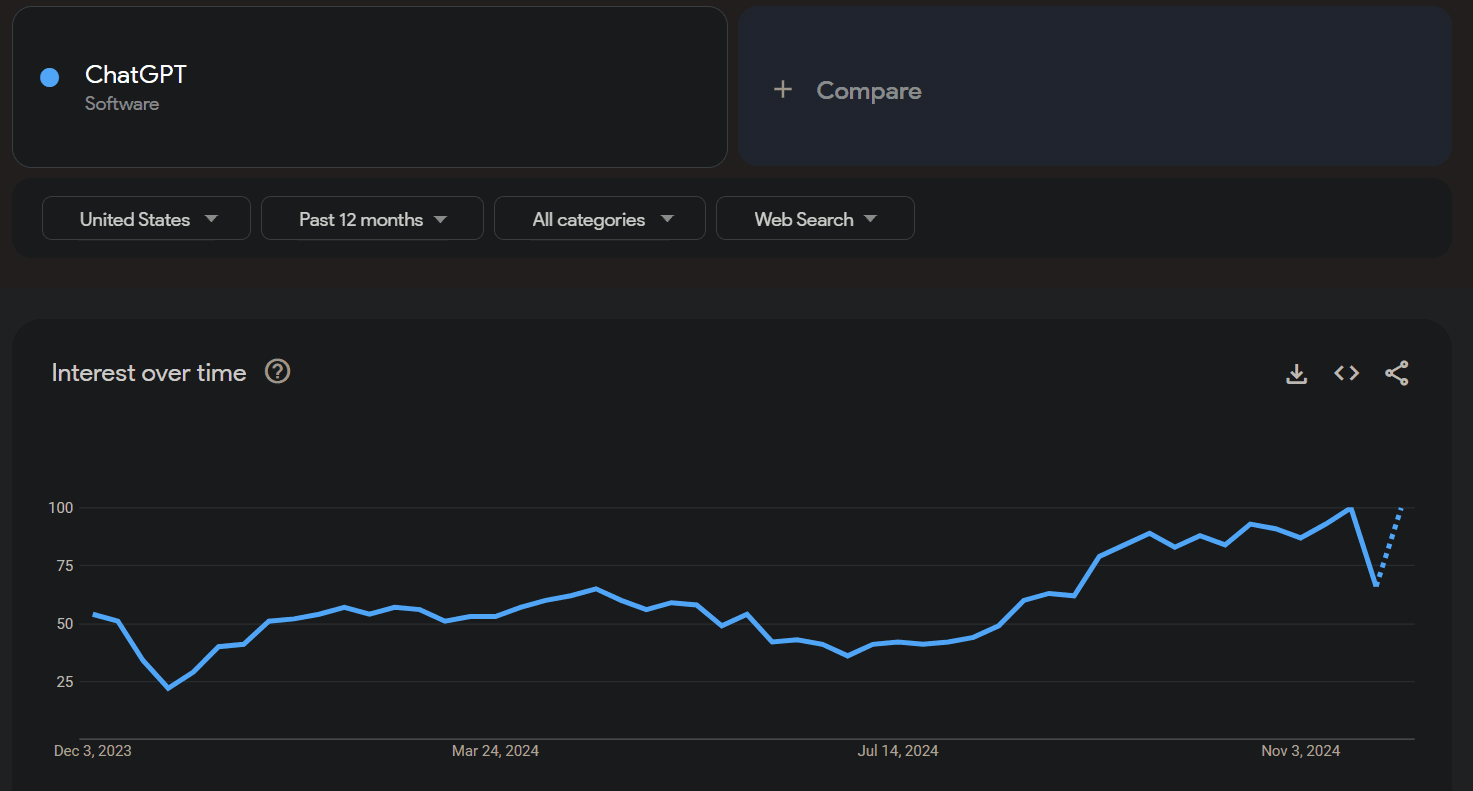

1. Dips in interest over winter and summer breaks (and Thanksgiving break in the above chart)

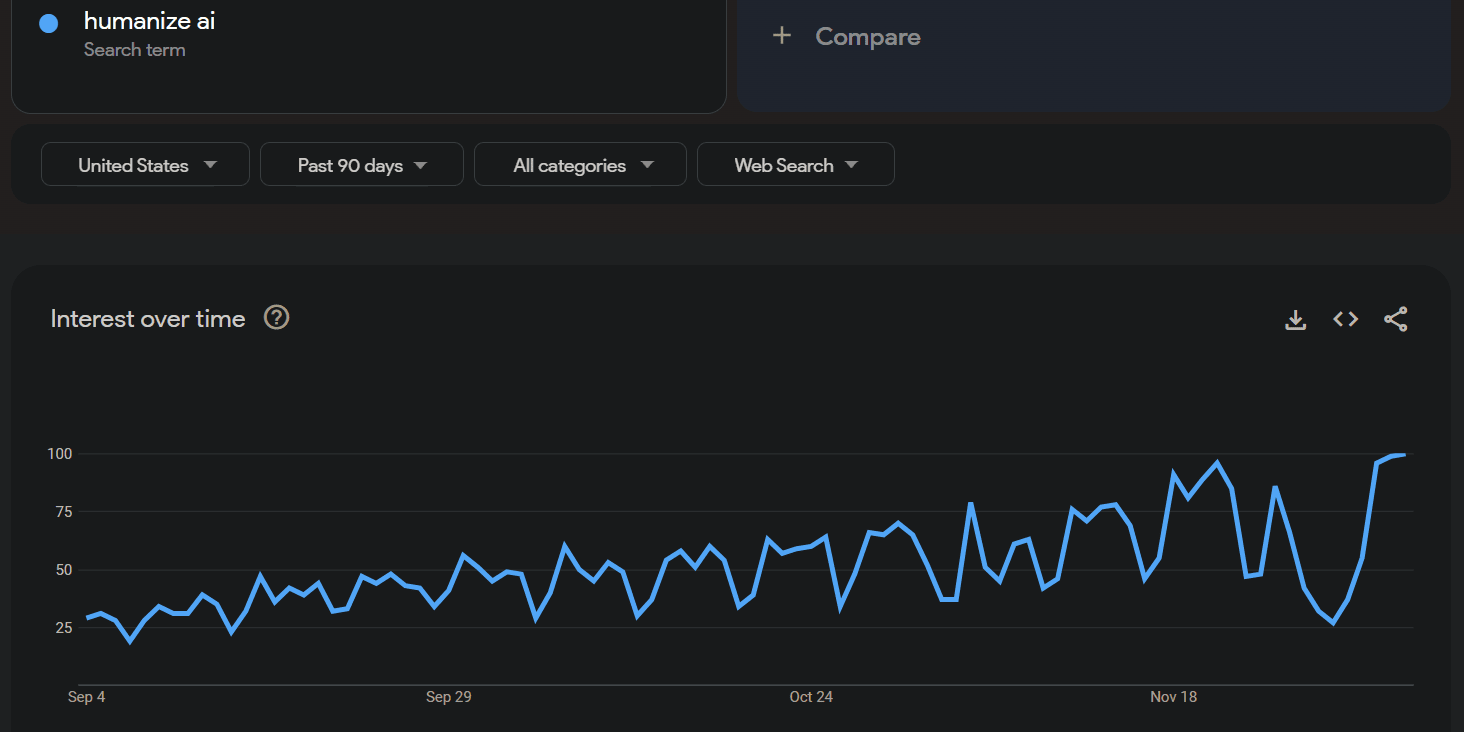

2. "Humanize AI" which is

Humanize AI™ is your go-to platform for seamlessly converting AI-generated text into authentic, undetectable, human-like content

[Although note that overall interest in ChatGPT is WAY higher than Humanize AI]
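One way to check the weekend-dip reading is to compare average weekend interest against average weekday interest. The sketch below is only an illustration: `weekend_dip_ratio` is a hypothetical helper, and the numbers are synthetic placeholders, not real Google Trends data.

```python
from datetime import date, timedelta
from statistics import mean


def weekend_dip_ratio(daily_interest):
    """Return mean weekend interest divided by mean weekday interest.

    `daily_interest` maps datetime.date -> interest score.
    A ratio well below 1.0 means interest drops on weekends.
    """
    weekday_vals = [v for d, v in daily_interest.items() if d.weekday() < 5]
    weekend_vals = [v for d, v in daily_interest.items() if d.weekday() >= 5]
    return mean(weekend_vals) / mean(weekday_vals)


# Synthetic placeholder data (an assumption, NOT real search-interest numbers):
# four weeks where weekdays score 100 and weekend days score 60.
start = date(2024, 1, 1)
synthetic = {}
for i in range(28):
    day = start + timedelta(days=i)
    synthetic[day] = 60 if day.weekday() >= 5 else 100

ratio = weekend_dip_ratio(synthetic)
print(f"weekend/weekday interest ratio: {ratio:.2f}")
```

The same ratio computed separately for term time and for school breaks would be one rough way to tell a student-driven pattern from an office-driven one.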

I’d guess that weekend dips come from office workers, since they rarely work on weekends, but students often do homework on weekends.

Implications of a Brain Scan Revolution

Suppose we were able to gather large amounts of brain-scans, let's say w/ millions of wearable helmets w/ video and audio as well,[1] then what could we do with that? I'm assuming a similar pre-training stage where models are used to predict next brain-states (possibly also video and audio), and then can be finetuned or prompted for specific purposes.

Jhana helmets

Jhana is a non-addicting high pleasure state. If we can scan people entering this state, we might drastically reduce the time it takes to learn to enter this state. I predict (maybe just hope) that being able to easily enter this state will lead to an increase in psychological well-being and a reduction in power-seeking behavior.

Jhana is great but is only temporary. It is a great foundation to then investigate the causes of suffering and remove it.

End Suffering

I currently believe the cause of suffering is an unnecessary mental motion which should show up in high fidelity brain scans. Again, eliminating suffering would go a long way to make decision-makers more selfless (they can still make poor decisions, just likely not out of selfish intent, unless it's habitual maybe? idk)

Additionally, this tech would also enable seeing the brain state of enlightened vs not, which might look like Steve Byrnes's model here. This might not be a desirable state to be in, and understanding the structure of it could give insight.

Lie Detectors

If we can reliably detect lying, this could be used for better coordination between labs & governments, as well as providing trust in employees and government staff (that they won't e.g. steal algorithmic/state secrets).

It could also be used to maintain power in authoritarian regimes (e.g. repeatedly test for faithfulness of those in power under you), and I agree w/ this comment that it could destroy relationships that would've otherwise been fine.

Additionally this is useful for...

Super Therapy

Look! PTSD is just your brain repeating this same pattern in response to this trigger. CBT-related, negative thought spirals could be detected and brought up immediately.

Beyond helping w/ existing brands of therapy, I expect larger amounts of therapeutic progress could be made in short amounts of time, which would also be useful for coordination between labs/governments.

Super Stimuli & Hacking Brains

You could also produce extreme levels of stimulation of images/audio that most saliently capture attention and lead to continued engagement.

I'm unsure if there exist mind-state independent jail-breaks (e.g. a set of pixels that hacks a person's brains); however, I could see different mind states (e.g. just waking up, sleep/food/water deprivation) that, when combined w/ images/sounds could lead to crazy behavior.

Super Persuasion

If you could simulate someone's brain state given different inputs, you could try to figure out the different arguments (or the surrounding setting or their current mood) that would be required to get them to do what you want. The use for manipulating others is clear, but it could also be used for better coordination.

A more ethical way to use a simulation for persuasion is to not deceive or leave out important truths. You could even run many simulations to figure out which factors they find important.

However, simulating human brains for optimizing is also an ethical concern in general due to simulated suffering, especially if you do figure out what specific mental motion suffering is.

Reverse Engineering Social Instincts

Part of Steven Byrnes's agenda. This should be pretty easy to reverse engineer given this tech (epistemic note: I know nothing in this field) since you could find what the initial reward components are and how specific image/audio/thoughts led to our values.

Reverse Engineering the secret sauce of human learning

With the good of learning human values comes the bad of figuring out how humans are so sample efficient!

Uploads

Simulating a full human brain is already an upload; however, I don't expect there to be optimized hardware ready for this. Additionally, running a human brain would be more complicated than just running normal NNs but w/ algorithmic insights from our brains. So this is still possible, but would have a large alignment tax.

[I believe I first heard this argument from Steve Byrnes]

Everything Else in Medical Literature

Beyond trying to understand how the brain works (which is covered already), this would likely help with studying diseases and analyzing the brain's reaction to drugs.

...

These are clearly dual-use; however, I do believe some of these are potentially pivotal acts (e.g. uploads, solving value alignment, sufficient coordination involving human actors), but would definitely require a lot of trust in the pursuers of this technology (e.g. existing power-seekers in power, potentially unaligned TAI, etc).

Hopefully things like jhana (if the benefits pan out) don't require a high fidelity helmet. The current SOTA is (AFAIK) 50% of people learning how to get into jhana given 1 week of a silent retreat, which is quite good for pedagogy alone! But maybe not sufficient to get e.g. all relevant decision-makers on board. Additionally, I think this percentage is only for getting into it once, as opposed to being able to enter that state at will.

[1] Why would many people give up their privacy in this way? Well, when the technology actually becomes feasible, I predict most people will be out of jobs, so this would be equivalent to a new job.

For pleasure/insight helmets you probably need intervention in the form of brain stimulation (tDCS, tFUS, TMS). Biofeedback might help but you need to at least know where to steer towards.

The current SOTA is (AFAIK) 50% of people learning how to get into jhana given 1 week of a silent retreat

I'm pretty skeptical of those numbers: all existing projects I know of don't have a better method of measurement than surveys, and that gets bitten hard by social desirability bias/not wanting to have committed a sunk cost. Seems relevant that jhourney isn't doing much EEG & biofeedback anymore.

Huh, those brain stimulation methods might actually be practical to use now, thanks for mentioning them!

Regarding skepticism of survey-data: If you're imagining it's only an end-of-the-retreat survey which asks "did you experience the jhana?", then yeah, I'll be skeptical too. But my understanding is that everyone has several meetings w/ instructors where a not-true-jhana/social-lie wouldn't hold up against scrutiny.

I can ask during my online retreat w/ them in a couple months.

As for brain stimulation, TMS devices can be bought for <$10k from eBay. tDCS devices are available for ~$100, though I don't expect them to have large effect sizes in any direction. There have been noises about consumer-level tFUS devices for <$10k, but that's likely >5 years in the future.

Regarding skepticism of survey-data: If you're imagining it's only an end-of-the-retreat survey which asks "did you experience the jhana?", then yeah, I'll be skeptical too. But my understanding is that everyone has several meetings w/ instructors where a not-true-jhana/social-lie wouldn't hold up against scrutiny.

The incentives of the people running jhourney are to over-claim attainments, especially on edge-cases, and hype the retreats. Organizations can be sufficiently on guard to prevent the extreme forms of over-claiming & turning into a positive-reviews-factory, but I haven't seen people from jhourney talk about it (or take action that shows they're aware of the problem).

How do you work w/ sleep consolidation?

Sleep consolidation ("sleeping on it") is when you struggle w/ [learning a piano piece], sleep on it, and then you're suddenly much better at it the next day!

This has happened to me for piano, dance, math concepts, video games, & rock climbing, but it varies in effectiveness. Why? Is it:

- Duration of struggling activity

- Amount of attention paid to activity

- Having a frustrating experience

- Time of day (e.g. right before sleep)

My current guess is a mix of all four. But I'm unsure if you [practice piano] in the morning, you'll need to remind yourself about the experience before you fall asleep, also implying that you can only really consolidate 1 thing a day.

The decision relevance for me is that I tend to procrastinate on heavy maths papers, but it might be more efficient to spend 20 min a day for 3 days actually trying to understand one and sleeping on it than to spend 1 hour in 1 day. This would be neat because it's easier for me to convince myself to really struggle w/ a hard topic for [20] minutes, knowing I'm getting an equivalent [40] minutes if I sleep on it.

I think the way to learn any skill is to basically:

1. Practice it

2. Sleep

3. Goto 1

And the time spent in each iteration of item 1 is capped in usefulness or at least has diminishing returns. I think this has nothing to do with frustration. Also, I think reminding yourself of the experience is not that important and I think there is no cap of 1 thing a day.

Oh, I've thought a lot about something similar that I call "background processing" - I think it happens during sleep, but also when awake. I think for me it works better when something is salient to my mind / my mind cares about it. According to this theory, if I was being forced to learn music theory but really wanted to think about video games, I'd get fewer new ideas about music theory from background processing, and maybe less of it would enter my long-term memory during sleep.

I'm not sure how this affects more 'automatic' ('muscle memory') things (like playing the piano correctly in response to reading sheet music).

I'm unsure if you [practice piano] in the morning, you'll need to remind yourself about the experience before you fall asleep, also implying that you can only really consolidate 1 thing a day

I'm not sure about this either. It could also be formulated as there being some set amount of consolidation you do each night, and you can divide them between topics, but it's theoretically (disregarding other factors like motivation; not practical advice) most efficient if you do one area per day (because of stuff in the same topic having more potential to relate to each other and be efficiently compressed or generalized from or something. Alternatively, studying multiple different areas in a day could lead to creative generalization between them).

If I'm playing anagrams or Scrabble after going to a church, and I get the letters "ODG", I'm going to be predisposed towards a different answer than if I've been playing with a German Shepherd. I suspect sleep has very little to do with it, and simply coming at something with a fresh load of biases on a different day with different cues and environmental factors may be a larger part of it.

Although Marvin Minsky made a good point about the myth of introspection: we are only aware of a thin sliver of our active mental processes at any given moment. When you intensely focus on a maths problem or on practicing the piano for a protracted period of time, some parts of the brain working on that may not abandon it just because your awareness or your attention drifts somewhere else. This wouldn't just be during sleep, but while you're having a conversation with your friend about the game last night, or cooking dinner, or exercising. You're just not aware of it; it's not in the limelight of your mind, but it still plugs away at it.

In my personal experience, most Eureka moments are directly attributable to some irrelevant thing that I recently saw that shifted my framing of the problem much like my anagram example.

Stores Already Have Empty Shelves.

Just saw two empty shelves (~toilet paper & peanut butter) at my local grocery store. Curious how to prepare for this? Currently we've stocked up on:

1. toilet paper

2. feminine products

3. Canned soup (that we like & already eat)

Additionally, are there any models for understanding the situation? For example:

- Self-fulfilling prophecy - Other people will believe that toilet paper will run out, so it will

- Time for sea freight to get to the US from China is 20-40 days, so even if the trade war ends, it will still take ~a month for things to (mostly) go back to normal.

Isn't toilet paper almost always produced domestically? It takes up a lot of space compared to its value, so it's inefficient to transport. Potato chips are similar.

Toilet paper is an example of "self-fulfilling prophecy". It will run out because people will believe it will run out, causing a bank toilet paper run.

Makes me feel confused about Economics 101. If people can easily predict that the toilet paper will run out, why aren't the prices increasing enough to prevent that?

Standard price-gouging and auction problem. Same reason Taylor Swift doesn't raise her prices or auction tickets off, but blames her shabbos goy instead.

There's also a question of what does 'running out of toilet paper' mean? Back in Covid Round One, there wasn't a real toilet paper shortage. I didn't see a single empty shelf of toilet paper. (I did, however, see a giant pyramid of toilet paper rolls at Costco stacked on a pallet/forklift thingy, serving as a reminder that "every shortage is followed by a glut", but the glut never gets reported.) Maybe it happened in my town in between my visits or in another store, maybe it didn't, but if I didn't read social media, I would have no idea there was supposed to be any such thing. Millions of people were not being forced for months to relieve themselves in an open field like they were in India... When people say 'America ran out of toilet paper', what they really mean is, 'I saw a lot of photos on social media of empty shelves and people talking about how late capitalism has failed' (and at this point, even if they claim to have had toilet paper problems themselves, we know from retrospective memory studies like of 9/11 that a lot of them didn't). There's no obvious way for everyone who sells toilet paper to ensure that there is never an empty shelf anywhere ever which would yield a photo to go viral. (Especially not these days when people will just fake the photo with GPT-4o.) And that wouldn't be economically viable to begin with: stockouts are normal, healthy, and good, because the level of stock which it would take to ensure 0% stockouts would be exorbitantly expensive. To the extent that an empty shelf becomes a political weapon to poison people's minds, it's a negative externality to whoever owns and operates that shelf. So that is no reason for them to raise the price of toilet paper enough to ensure it never happened.

(Another point to make would be that people can easily endure any brief fluctuations in toilet paper supply. It's easy to economize on it and there are usually a lot of rolls in any household and lots of mediocre substitutes of the sort that were used before 'toilet paper' as a specialized category became the norm - like newspapers. There's also paper towel rolls. If it were some big deal, then after the supposed horrible disaster of shortages during COVID, everyone would've immediately gone out and bought bidets as the most effective permanent method to insulate yourself from future shortages. But they didn't.)
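To illustrate the stockout point above: if daily demand is assumed to be roughly normal (an assumption made here purely for illustration, as are all the numbers), the stock needed keeps growing as the target in-stock probability approaches 100%, and no finite amount guarantees exactly 0% stockouts. `stock_needed` is a hypothetical helper, not anyone's actual inventory model.

```python
from statistics import NormalDist, StatisticsError


def stock_needed(mean_demand, sd_demand, in_stock_prob):
    """Stock level that covers one day's demand with probability `in_stock_prob`.

    Assumes normally distributed daily demand (illustrative assumption only).
    """
    return NormalDist(mean_demand, sd_demand).inv_cdf(in_stock_prob)


# Hypothetical demand: mean 100 units/day, standard deviation 20 units/day.
targets = [0.90, 0.99, 0.999, 0.999999]
needed = [stock_needed(100, 20, p) for p in targets]
for p, units in zip(targets, needed):
    print(f"in stock on {p:.4%} of days -> about {units:.0f} units on the shelf")

# A 100% target has no finite answer under this model: inv_cdf rejects p = 1.0.
try:
    stock_needed(100, 20, 1.0)
    zero_stockouts_possible = True
except StatisticsError:
    zero_stockouts_possible = False
print("finite stock for 0% stockouts:", zero_stockouts_possible)
```

Real demand is not normal and panic buying fattens the tail, so this toy model likely understates how much extra stock a "never empty" shelf would take.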

I didn't see a single empty shelf

I don't recall toilet paper specifically, but I definitely saw lots of empty shelves at the start of covid (in the UK).

I didn't see a single empty shelf.

In that case, as a communism survivor, I agree that there definitely was no toilet paper shortage.

lots of mediocre substitutes of the sort that were used before 'toilet paper' as a specialized category became the norm - like newspapers.

Newspapers? What is this, 20th century? Try using a smartphone. :D

But yes, paper towel rolls. (I don't remember whether such a thing existed during communism.)

A trending YouTube video w/ 500k views in a day brings up Dario Amodei's Machines of Loving Grace (Timestamp for the quote):

[Note: I had Claude help format, but personally verified the text's faithfulness]

I am an AI optimist. I think our world will be better because of AI. One of the best expressions of that I've seen is this blog post by Dario Amodei, who is the CEO of Anthropic, one of the biggest AI companies. I would really recommend reading this - it's one of the more interesting articles and arguments I have read. He's basically saying AI is going to have an incredibly positive impact, but he's also a realist and is like "AI is going to really potentially fuck up our world"

He's notable and more trustworthy because his company Anthropic has put WAY more effort into safety, way way more effort into making sure there are really high standards for safety and that there isn't going to be danger what these AIs are doing. So I really really like Dario and I've listened to a lot of what he's said. Whereas with some other AI leaders like Sam Altman who runs OpenAI, you don't know what the fuck he's thinking. I really like [Dario] - he also has an interesting background in biological work and biotech, so he's not just some tech-bro; he's a bio-tech-bro. But his background is very interesting.

But he's very realistic. There is a lot of bad shit that is going to happen with AI. I'm not denying that at all. It's about how we maximize the positive while reducing the negatives. I really want AI to solve all of our diseases. I would really like AI to fix cancer - I think that will happen in our lifetimes. To me, I'd rather we fight towards that future rather than say 'there will be problems, let's abandon the whole thing.'

Other notes: This is YouTuber/streamer DougDoug (2.8M subscribers), with this video posted on his other channel DougDougDoug ("DougDoug content that's too rotten for the main channel"), who often streams/posts coding/AI integrated content.

The full video is also an entertaining summary of case law on AI generated art/text copyright.

making sure there are really high standards for safety and that there isn't going to be danger what these AIs are doing

Ah yes, a great description of Anthropic's safety actions. I don't think anyone serious at Anthropic believes that they "made sure there isn't going to be danger from what these AIs are doing". Indeed, many (most?) of their safety people assign double-digit probabilities to catastrophic outcomes from advanced AI systems.

I do think this was a predictable quite bad consequence of Dario's essay (as well as his other essays which heavily downplay or completely omit any discussion of risks). My guess is it will majorly contribute to reckless racing while giving people a false impression of how good we are doing on actually making things safe.

I think the fuller context,

Anthropic has put WAY more effort into safety, way way more effort into making sure there are really high standards for safety and that there isn't going to be danger what these AIs are doing

implies it's just that the amount of effort is larger than at other companies (which I agree with), and not that the YouTuber believes they've solved alignment or are doing enough, see:

but he's also a realist and is like "AI is going to really potentially fuck up our world"

and

But he's very realistic. There is a lot of bad shit that is going to happen with AI. I'm not denying that at all.

So I'm not confident that it's "giving people a false impression of how good we are doing on actually making things safe." in this case.

I do know DougDoug has recommended Anthropic's Alignment Faking paper to another YouTuber, which is more of a "stating a problem" paper than one saying they've solved it.