If indeed OpenAI does restructure to the point where its equity is now genuine, then $150 billion seems way too low as a valuation

Why is OpenAI worth much more than $150B, when Anthropic is currently valued at only $30-40B? Also, loudly broadcasting this reduces OpenAI's cost of equity, which is undesirable if you think OpenAI is a bad actor.

Apparently the current funding round hasn't closed yet and might be in some trouble, and it seems much better for the world if the round was to fail or be done at a significantly lower valuation (in part to send a message to other CEOs not to imitate SamA's recent behavior). Zvi saying that $150B greatly undervalues OpenAI at this time seems like a big unforced error, which I wonder if he could still correct in some way.

If I had to guess, my answer would center around "Microsoft" for OpenAI and "Maybe actually taking commitments seriously enough to impede expectations of future profit and growth," for Anthropic.

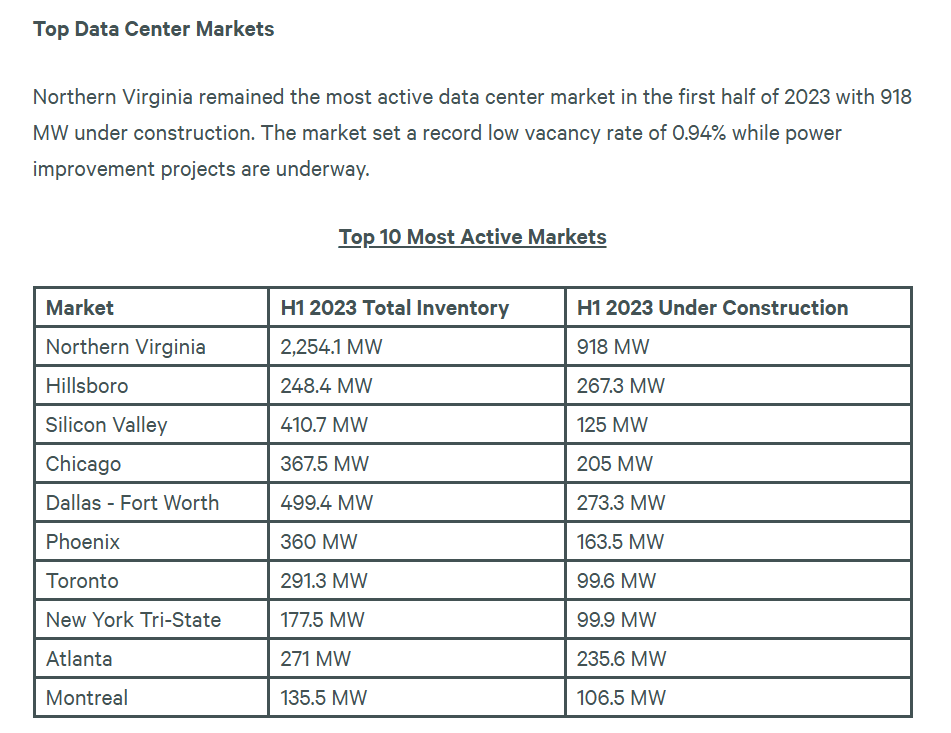

While this may or may not happen, if we really expect multi-GW data center demand to be a limiting factor in growth, then "Our backer can get Three Mile Island reopened" is a pretty big value add. It's possible Amazon would be able and willing to do that, but they've got ~4x less invested than Microsoft, and Google would presumably prioritize Gemini and DeepMind over Anthropic.

As for OpenAI dropping the mask: I devoted essentially zero effort to predicting this, though my complete lack of surprise implies it is consistent with the information I already had. Even so:

Shit.

@gwern wrote an explanation of why this is surprising (for some) [here](https://forum.effectivealtruism.org/posts/Mo7qnNZA7j4xgyJXq/sam-altman-open-ai-discussion-thread?commentId=CAfNAjLo6Fy3eDwH3):

Open Philanthropy (OP) only had that board seat and made a donation because Altman invited them to, and he could personally have covered the $30m or whatever OP donated for the seat [...] He thought up, drafted, and oversaw the entire for-profit thing in the first place, including all provisions related to board control. He voted for all the board members, filling it back up from when it was just him (& Greg Brockman at one point IIRC). He then oversaw and drafted all of the contracts with MS and others, while running the for-profit and eschewing equity in the for-profit. He designed the board to be able to fire the CEO because, to quote him, "the board should be able to fire me". [...]

Credit where credit is due - Altman may not have believed the scaling hypothesis like Dario Amodei, may not have invented PPO like John Schulman, may not have worked on DL from the start like Ilya Sutskever, may not have created GPT like Alec Radford, may not have written & optimized any code like Brockman's - but the 2023 OA organization is fundamentally his work.

The question isn't, "how could EAers* have ever let Altman take over OA and possibly kick them out", but entirely the opposite: "how did EAers ever get any control of OA, such that they could even possibly kick out Altman?" Why was this even a thing given that OA was, to such an extent, an Altman creation?

The answer is: "because he gave it to them." Altman freely and voluntarily handed it over to them.

So you have an answer right there to why the Board was willing to assume Altman's good faith for so long, despite everyone clamoring to explain how (in hindsight) it was so obvious that the Board should always have been at war with Altman and regarding him as an evil schemer out to get them. But that's an insane way for them to think! Why would he undermine the Board or try to take it over, when he was the Board at one point, and when he made and designed it in the first place? Why would he be money-hungry when he refused all the equity that he could so easily have taken - and in fact, various partner organizations wanted him to have in order to ensure he had 'skin in the game'? Why would he go out of his way to make the double non-profit with such onerous & unprecedented terms for any investors, which caused a lot of difficulties in getting investment and Microsoft had to think seriously about, if he just didn't genuinely care or believe any of that? Why any of this?

(None of that was a requirement, or even that useful to OA for-profit. [...] Certainly, if all of this was for PR reasons or some insidious decade-long scheme of Altman to 'greenwash' OA, it was a spectacular failure - nothing has occasioned more confusion and bad PR for OA than the double structure or capped-profit. [...]

What happened is, broadly: 'Altman made the OA non/for-profits and gifted most of it to EA with the best of intentions, but then it went so well & was going to make so much money that he had giver's remorse, changed his mind, and tried to quietly take it back; but he had to do it by hook or by crook, because the legal terms said clearly "no takesie backsies"'. Altman was all for EA and AI safety and an all-powerful nonprofit board being able to fire him, and was sincere about all that, until OA & the scaling hypothesis succeeded beyond his wildest dreams, and he discovered it was inconvenient for him and convinced himself that the noble mission now required him to be in absolute control, never mind what restraints on himself he set up years ago - he now understands how well-intentioned but misguided he was and how he should have trusted himself more. (Insert Garfield meme here.)

No wonder the board found it hard to believe! No wonder it took so long to realize Altman had flipped on them, and it seemed Sutskever needed Slack screenshots showing Altman blatantly lying to them about Toner before he finally, reluctantly, flipped. The Altman you need to distrust & assume bad faith of & need to be paranoid about stealing your power is also usually an Altman who never gave you any power in the first place! I'm still kinda baffled by it, personally.

He concealed this change of heart from everyone, including the board, gradually began trying to unwind it, overplayed his hand at one point - and here we are.

It is still a mystery to me what Sam's motive is exactly.

I don't have a complete picture of the views of Joshua Achiam, the new head of mission alignment, but what I have read is not very promising.

Here are some (2 year old) tweets from a twitter thread he wrote.

https://x.com/jachiam0/status/1591221752566542336

P(Misaligned AGI doom by 2032): <1e-6%

https://x.com/jachiam0/status/1591220710122590209

People scared of AI just have anxiety disorders.

This thread also has a bunch of takes against EA.

I sure hope he changed some of his views, given that the company he works at expects AGI by 2027.

Edited based on comment.

Joshua Achiam is Head of Mission Alignment ("working across the company to ensure that we get all pieces (and culture) right to be in a place to succeed at the mission"), this is not technical AI alignment.

Building 5 GWs of data centers over the course of a few years isn't impossible. The easiest way to power them is to delay the off lining of coal power plants and to reactivate mothballed ones. This is pretty easy to scale since so many of them are being replaced by natural gas power plants for cost reasons.

Theory: The reason OpenAI seem to not care about getting AGI right any more is because they've privately received explicit signals from the government that they won't be allowed to build AGI. This is pretty likely a priori, and also makes sense of what we are seeing.

There'd be an automatic conspiracy of reasons to avoid outwardly acknowledging this: 1) To keep the stock up, 2) To avoid accelerating the militarization (closing) of AI and the arms race (a very good reason. If I were Zvi, I would also consider avoiding acknowledging this for this reason, but I'm less famous than Zvi, so I get to acknowledge it), 3) To protect other staff from the demotivating effects of knowing this thing, that OpenAI will be reduced to a normal company that will never be allowed to release a truly great iteration of the product.

So instead what you'd see is people in leadership, one by one (as they internalize this), suddenly dropping the safety mission or leaving the company without really explaining why.

This is all downstream of the board being insufficiently Machiavellian, and Ilya’s weaknesses in particular. Peter Thiel style champerty against Altman as new EA cause?

That is not an argument against “the robots taking over,” or that AI does not generally pose an existential threat. It is a statement that we should ignore that threat, on principle, until the dangers ‘reveal themselves,’ with the implicit assumption that this requires the threats to actually start happening.

Step 2: When someone talks about their pain, struggles, things going poorly for them — especially any mental health issues — especially crippling / disabling mental health issues– immediately respond with an outpouring gush of love and support.

The problem is not the love and support per se. It's the implied threat that it will all disappear the very moment your situation improves.

Maybe sometimes it is possible for you to improve your situation, and sometimes it is not. But in this setup, you have an incentive against improving even in the case the improvement happens to be possible.

Also, I suppose you get more love & support for legible problems. So the best thing you can do is create a narrative that blames your problems on something that is widely recognized as horrible (e.g. sexism, racism). This moves your attention away from specific details that may be relevant to solving your problem, and discourages both you and the others from proposing solutions (it would be presumptuous to assume that you can overcome sexism or racism using the "one weird trick" <doing the thing that would solve your problem>).

…when Claude keeps telling me how I’m asking complex and interesting questions…

Yeah, also "insightful". If it was coming from character.ai I'd just assume it was flirting with me, but Claude is so very neuter and this just comes over as creepy and trying too hard. I really wish it would knock off the blatant intellectual flattery.

Re: Mira Murati departure,

I wonder if a sign of AGI / ASI arriving soon is executives of frontier AI companies retiring early to enjoy the fruits of their labor before the world fundamentally changes for all of us.

It would be very surprising to me if such ambitious people wanted to leave right before they had a chance to make history though.

This seems to be what Jimmy Apples on Twitter is implying, that people should go out and "wash their balls in the water at Waikiki Beach" between now and AGI in 2027.

I am certainly saying YES to a lot of stuff on the chance that ASI arrives and our lives change completely (or we all die). Let's say that I am living more in the present now than I was 2 years ago. I do wonder if those closest to the frontier work would realize first and just go do things that really matter to them.

If Mira Murati goes the way of Sam Trabucco, it will be a concerning tell.

We interrupt Nate Silver week here at Don’t Worry About the Vase to bring you some rather big AI news: OpenAI and Sam Altman are planning on fully taking their masks off, discarding the nonprofit board’s nominal control and transitioning to a for-profit B-corporation, in which Sam Altman will have equity.

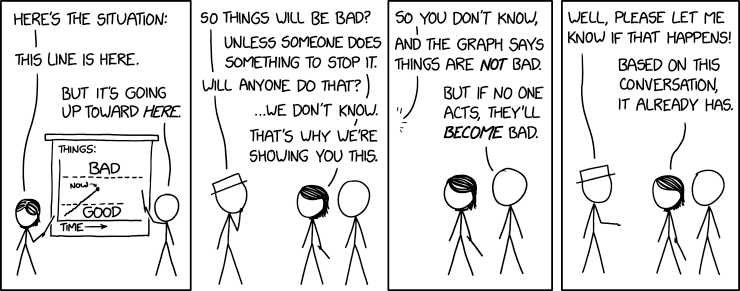

We now know who they are and have chosen to be. We know what they believe in. We know what their promises and legal commitments are worth. We know what they plan to do, if we do not stop them.

They have made all this perfectly clear. I appreciate the clarity.

On the same day, Mira Murati, the only remaining person at OpenAI who in any visible way opposed Altman during the events of last November, resigned without warning along with two other senior people, joining a list that now includes among others several OpenAI co-founders and half its safety people including the most senior ones, and essentially everyone who did not fully take Altman’s side during the events of November 2023. In all those old OpenAI pictures, only Altman now remains.

OpenAI is nothing without its people… except an extremely valuable B corporation. Also it has released its Advanced Voice Mode.

Thus endeth the Battle of the Board, in a total victory for Sam Altman, and firmly confirming the story of what happened.

They do this only days before the deadline for Gavin Newsom to decide whether to sign SB 1047. So I suppose he now has additional information to consider, along with a variety of new vocal celebrity support for the bill.

Also, it seems Ivanka Trump is warning us to be situationally aware? Many noted that this was not on their respective bingo cards.

Language Models Offer Mundane Utility

Make the slide deck for your Fortune 50 client, if you already know what it will say. Remember, you’re not paying for the consultant to spend time, even if technically they charge by the hour. You’re paying for their expertise, so if they can apply it faster, great.

Timothy Lee, who is not easy to impress with a new model, calls o1 ‘an alien of extraordinary ability,’ good enough to note that it does not present an existential threat. He sees the key insight as applying reinforcement learning to batches of actions around chain of thought, allowing feedback on the individual steps of the chain, allowing the system to learn long chains. He notes that o1 can solve problems other models cannot, but that when o1’s attempts to use its reasoning break down, it can fall quite flat. So the story is important progress, but well short of the AGI goal.

Here’s another highly positive report on o1:

AI is being adopted remarkably quickly compared to other general purpose techs: 39% of the population has used it, 24% of workers use it weekly and 11% use it every workday. It can both seem painfully slow to those at the frontier and be remarkably fast compared to how things usually work.

How people’s AI timelines work, Mensa admission test edition.

Language Models Don’t Offer Mundane Utility

Is o1 actively worse at the areas they didn’t specialize in? That doesn’t seem to be the standard take, but Janus has never had standard takes.

Also here is Teortaxes highlighting a rather interesting CoT example.

Sully reports that it’s hard to identify when to use o1, so at first it wasn’t that useful, but a few days later he was ‘starting to dial in’ and reported the thing was a beast.

To get the utility you will often need to first perform the Great Data Integration Schlep, as Sarah Constantin explains. You’ll need to negotiate for, gather and clean all that data before you can use it. And that is a big reason she is skeptical of big fast AI impacts, although not of eventual impacts. None of this, she writes, is easy or fast.

One obvious response is that it is exactly because AI is insufficiently advanced that the Great Schlep remains a human task – for now that will slow everything down, but eventually that changes. For now, Sarah correctly notes that LLMs aren’t all that net helpful in data cleanup, but that’s because they have to pass the efficiency threshold where they’re faster and better than regular expressions. But once they get off the ground on such matters, they’ll take off fast.

Open source project to describe word frequency shuts down, citing too much AI content polluting the data. I’m not sure this problem wasn’t there before? A lot of the internet has always been junk, which has different word distribution than non-junk. The good version of this was always going to require knowing ‘what is real’ in some sense.

The Mask Comes Off

OpenAI plans to remove the non-profit board’s control entirely, transforming itself into a for-profit benefit corporation, and grant Sam Altman equity. Report is from Reuters and confirmed by Bloomberg.

Yeah, um, no. We all know what this is. We all know who you are. We all know what you intend to do if no one stops you.

I have no idea how this move is legal, as it is clearly contrary to the non-profit mission to instead allow OpenAI to become a for-profit company out of their control. This is a blatant breach of the fiduciary duties of the board if they allow it. Which is presumably the purpose for which Altman chose them.

No argument has been offered for why this is a way to achieve the non-profit mission.

Wei Dai reminds us of the arguments OpenAI itself gave against such a move.

Remember all that talk about how this was a non-profit so it could benefit humanity? Remember how Altman talked about how the board was there to stop him if he was doing something unsafe or irresponsible? Well, so much for that. The mask is fully off.

Good job Altman, I suppose. You did it. You took a charity and turned it into your personal for-profit kingdom, banishing all who dared oppose you or warn of the risks. Why even pretend anymore that there is an emergency brake or check on your actions?

I presume there will be no consequences on the whole ‘testifying to Congress he’s not doing it for the money and has no equity’ thing. He just… changed his mind, ya know? And as for Musk and the money he and others put up for a ‘non-profit,’ why should that entitle them to anything?

If indeed OpenAI does restructure to the point where its equity is now genuine, then $150 billion seems way too low as a valuation – unless you think that OpenAI is sufficiently determined to proceed unsafely that if its products succeed you will be dead either way, so there’s no point in having any equity. Or, perhaps you think that if they do succeed and we’re not all dead and you can spend the money, you don’t need the money. There’s that too.

But if you can sell the equity along the way? Yeah, then this is way too low.

Also this week, Mira Murati graciously leaves OpenAI. Real reason could be actual anything, but the timing with the move to for-profit status is suggestive, as was her role in the events of last November, in which she temporarily was willing to become CEO, after which Altman’s notes about what happened noticeably failed to praise her, as Gwern noted at the time when he predicted this departure with 75% probability.

Altman’s response was also gracious, and involved Proper Capitalization, so you know this was a serious moment.

It indicated that Mira only informed him of her departure that morning, and revealed that Bob McGrew, the Chief Research Officer and Barret Zoph, VP of Research (Post-Training) are leaving as well.

Here is Barret’s departure announcement:

At some point the departures add up – for the most part, anyone who was related to safety, or the idea of safety, or in any way opposed Altman even for a brief moment? Gone. And now that includes the entire board, as a concept.

Presumably this will serve as a warning to others. You come at the king, best not miss. The king is not a forgiving king. Either remain fully loyal at all times, or if you have to do what you have to do then be sure to twist the knife.

Also let that be the most important lesson to anyone who says that the AI companies, or OpenAI in particular, can be counted on to act responsibly, or to keep their promises, or that we can count on their corporate structures, or that we can rely on anything such that we don’t need laws and regulations to keep them in check.

It says something about their operational security that they couldn’t keep a lid on this news until next Tuesday to ensure Gavin Newsom had made his decision regarding SB 1047. This is the strongest closing argument I can imagine on the need for that bill.

Fun with Image Generation

Deepfaketown and Botpocalypse Soon

Nikita Bier predicts that social apps are dead as of iOS 18, because the new permission requirements prevent critical mass, so people will end up talking to AIs instead, as retention rates there are remarkably high.

I don’t think these two have so much to do with each other. If there is demand for social apps then people will find ways to get them off the ground, including ‘have you met Android’ and people learning to click yes on the permission button. Right now, there are enough existing social apps to keep people afloat, but if that threatened to change, the response would change.

Either way, the question on the AI apps is in what ways and how much they will appeal to and retain users, keeping in mind they are as bad as they will ever be on that level, and are rapidly improving. I am consistently impressed with how well bad versions of such AI apps perform with select users.

They Took Our Jobs

Someone on r/ChatGPT thinks they are working for an AI. Eliezer warns that this can cause the Lemoine Effect, where false initial warnings cause people to ignore the actual event when it happens (as opposed to The Boy Who Cried Wolf, who is doing it on purpose).

The person in question is almost certainly not working for an AI. There are two things worth noticing here. First, one thing that has begun is people suspecting that someone else might be an AI based on rather flimsy evidence. That will only become a lot more frequent when talking to an AI gets more plausible. Second, it’s not like this person had a problem working for an AI. It seems clear that AI will have to pay at most a small premium to hire people to do things on the internet, and the workers won’t much care about the why of it all. More likely, there will be no extra charge or even a discount, as the AI is easier to work with as a boss.

The Art of the Jailbreak

Two of Gray Swan’s cygnet models survived jailbreaking attempts during their contest, but La Main de la Mort reports that if you avoid directly mentioning the thing you’re trying for, and allude to it instead, you can often get the model to give you what you want. If you know what I mean. In this case, it was accusations of election fraud.

Potential new jailbreak for o1 is to keep imposing constraints and backing it into a corner until it can only give you what you want? It got very close to giving an ‘S’ poem similar to the one from the Cyberiad, but when pushed eventually retreated to repeating the original poem.

OpenAI Advanced Voice Mode

OpenAI ChatGPT advanced voice mode is here, finished ahead of schedule, where ‘here’ means America but not the EU or UK, presumably due to the need to seek various approvals first, and perhaps concerns over the ability of the system to infer emotions. The new mode includes custom instructions, memory, five new voices and ‘improved accents.’ I’ll try to give this a shot but so far my attempts to use AI via voice have been consistently disappointing compared to typing.

Pliny of course leaked the system prompt.

Mostly that all seems totally normal and fine, if more than a bit of a buzz kill, but there’s one thing to note.

Pliny also got it to sing a bit.

Introducing

Gemini Pro 1.5 and Flash 1.5 have new versions, which we cannot call 1.6 or 1.51 because the AI industry decided for reasons I do not understand that standard version numbering was a mistake, but we can at least call them Gemini-1.5-[Pro/Flash]-002, which I suppose works.

Also there’s a price reduction effective October 1, a big one if you’re not using long contexts and they’re offering context caching:

They are also doubling rate limits, and claim 2x faster output and 3x less latency. Google seems to specialize in making their improvements as quietly as possible.

Sully reports the new Gemini Flash is really good especially for long contexts although not for coding, best in the ‘low cost’ class by far. You can also fine tune it for free and then use it for the same cost afterwards.

In Other AI News

Ivanka Trump alerts us to be situationally aware!

And here we have a claim of confirmation that Donald Trump at least skimmed Situational Awareness.

o1 rate limits for API calls increased again, now 500 per minute for o1-preview and 1000 per minute for o1-mini.

Your $20 chat subscription still gets you less than one minute of that. o1-preview costs $15 per million input tokens and $60 per million output tokens. If you’re not attaching a long document, even a longer query likely costs on the order of $0.10, for o1-mini it’s more like $0.02. But if you use long document attachments, and use your full allocation, then the $20 is a good deal.
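To make that arithmetic concrete, here is a rough sketch in Python. The per-token prices and the 500-per-minute rate limit are the o1-preview figures quoted above. The token counts are hypothetical, picked only to represent a longer query with no attached document, and they assume that the output count includes whatever hidden reasoning tokens get billed as output.

```python
# o1-preview prices as quoted above (USD per million tokens).
INPUT_PRICE_PER_M = 15.00
OUTPUT_PRICE_PER_M = 60.00

# o1-preview API rate limit as quoted above.
REQUESTS_PER_MINUTE = 500

# Hypothetical token counts (an assumption, not from any source):
# a longer query with no attachment, output including billed reasoning tokens.
ASSUMED_INPUT_TOKENS = 1_000
ASSUMED_OUTPUT_TOKENS = 1_500


def query_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a single call at the quoted per-token prices."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


cost = query_cost(ASSUMED_INPUT_TOKENS, ASSUMED_OUTPUT_TOKENS)
cost_per_minute_at_limit = cost * REQUESTS_PER_MINUTE

print(f"cost per query: ${cost:.3f}")
print(f"one minute at the rate limit: ${cost_per_minute_at_limit:.2f}")
```

Under those assumed token counts a single query comes to roughly $0.10, and one minute of calls at the full rate limit comes to about $52, which is why the $20 subscription buys less than a minute of it. Different token counts will move both numbers proportionally.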

You can also get o1 in GitHub Copilot now.

Llama 3.2 is coming and will be multimodal. This is as expected, also can I give a huge thank you to Mark Zuckerberg for at least using a sane version numbering system? It seems they kept the text model exactly the same, and tacked on new architecture to support image reasoning.

TSMC is now making 5nm chips in Arizona ahead of schedule. Not huge scale, but it’s happening.

OpenAI pitching White House on huge data center buildout, proposing 5GW centers in various states, perhaps 5-7 total. No word there on how they intend to find the electrical power.

Aider, a CLI based tool for coding with LLMs, now writing over 60% of its own code.

OpenAI’s official newsroom Twitter account gets hacked by a crypto spammer.

Sam Altman reports that he had ‘life changing’ psychedelic experiences that transformed him from an anxious, unhappy person into a very calm person who can work on hard and important things. James Miller points out that this could also alter someone’s ability to properly respond to dangers, including existential threats.

Quiet Speculations

Joe Biden talks more and better about AI than either Harris or Trump ever have. Still focusing too much on human power relations and who wins and loses rather than on whether we survive at all, but at least very clearly taking all this seriously.

OpenAI CEO Sam Altman offers us The Intelligence Age. It’s worth reading in full given its author, to know where his head is (claiming to be?) at. It is good to see such optimism on display, and it is good to see a claimed timeline for AGI which is ‘within a few thousand days,’ but this post seems to take the nature of intelligence fundamentally unseriously. The ‘mere tool’ assumption is implicit throughout, with all the new intelligence and capability being used for humans and what humans want, and no grappling with the possibility it could be otherwise.

As in, note the contrast:

The downsides are mentioned, but Just Think of the Potential, and there is no admission of the real risks, dangers or challenges in the room. I worry that Altman is increasingly convinced that the best way to proceed forward is to pretend that the most important challenges mostly don’t exist.

Indeed, in addition to noticing jobs will change (but assuring us there will always be things to do), the main warning is if energy and compute are insufficiently abundant humans would ration them by price and fight wars over them, whereas he wants universal intelligence abundance.

Here is another vision for one particular angle of the future?

This estimate of superpower size seems off by approximately 10 million people, quite possibly exactly 10 million.

The Quest for Sane Regulations

If you are a resident of California and wish to encourage Newsom to sign SB 1047, you can sign this petition or can politely call the Governor directly at 916-445-2841, or write him a message at his website.

Be sure to mention the move by OpenAI to become a B Corporation, abandoning the board’s control over Altman and the company, and fully transitioning to a for-profit corporation. And they couldn’t even keep that news secret a few more days. What could better show the need for SB 1047?

Chris Anderson, head of TED, strongly endorses SB 1047.

In addition to Bruce Banner, this petition in favor of SB 1047 is also signed by, among others, Luke Skywalker (who also Tweeted it out), Judd Apatow, Shonda Rhimes, Press Secretary C.J. Cregg, Phoebe Halliwell, Detectives Lockley and Benson, Castiel, The Nanny who is also the head of the SAG, Shirley Bennett, Seven of Nine and Jessica Jones otherwise known as the bitch you otherwise shouldn’t trust in apartment 23.

Garrison Lovely has more on the open letter here and in The Verge, we also have coverage from the LA Times. One hypothesis is that Gavin Newsom signed other AI bills, including bills about deep fakes and AI replicas, to see if that would make people like the actors of SAG-AFTRA forget about SB 1047. This is, among other things, an attempt to show him that did not work, and some stars report feeling that Newsom ‘played them’ by doing that.

The LA Times gets us this lovely quote:

So, keep calling, then, and hope this isn’t de facto code for ‘still fielding bribe offers.’

Kelsey Piper reports that the SB 1047 process actually made her far more optimistic about the California legislative process. Members were smart, mostly weren’t fooled by all the blatant lying by a16z and company on the no side, understood the issues, seemed to mostly care about constituents and be sensitive to public feedback. Except, that is, for Governor Gavin Newsom, who seemed universally disliked and who everyone said would do whatever benefited him.

No no no, on the other hand his Big Tech donors hired his friends to get him not to sign it. Let’s be precise. But yeah, there’s only highly nominal pretense that Newsom would be vetoing the bill based on the merits.

From last week’s congressional testimony, David Evan Harris, formerly of Meta, reminds us that ‘voluntary self-regulation’ is a myth because Meta exists. Whoever is least responsible will fill the void.

Also from last week, if you’re looking to understand how ‘the public’ thinks about AI and existential risk, check the comments in response to Toner’s testimony, as posted by the C-SPAN Twitter account. It’s bleak out there.

No, seriously, those ratios are about right except they forgot to include the ad hominem attacks on Helen Toner.

a16z reportedly spearheaded what some called an open letter but was actually simply a petition calling upon Newsom to veto SB 1047. Its signatories list initially included prank names like Hugh Jass and also dead people like Richard Stockton Rush, which sounds about right given their general level of attention to accuracy and detail. The actual letter text of course contains no mechanisms, merely the claim it will have a chilling effect on exactly the businesses that SB 1047 does not impact, followed by a ‘we are all for thoughtful regulation of AI’ line that puts the bar at outright pareto improvements, which I am very confident many signatories do not believe for a second even if such a proposal was indeed made.

Meta spurns EU’s voluntary AI safety pledge to comply with what are essentially the EU AI Act’s principles ahead of the EU AI Act becoming enforceable in 2027, saying instead they want to ‘focus on compliance with the EU AI Act.’ Given how Europe works, and how transparently this says ‘no we will not do the voluntary commitments we claim make it unnecessary to pass binding laws,’ this seems like a mistake by Meta.

The list of signatories is found here. OpenAI and Microsoft are in as are many other big businesses. Noticeably missing is Apple. This is not targeted at the ‘model segment’ per se, this is for system ‘developers and deployers,’ which is used as the explanation for Mistral and Anthropic not joining and also why the EU AI Act does not actually make sense.

A proposal to call reasonable actions ‘if-then commitments’ as in ‘if your model is super dangerous (e.g. can walk someone through creating a WMD) then you have to do something about that before model release.’ I suppose I like that it makes clear that as long as the ‘if’ half never happens and you’ve checked for this then everything is normal, so arguing that the ‘if’ won’t happen is not an argument against the commitment? But that’s actually how pretty much everything similar works anyway.

Lawfare piece by Peter Salib and Simon Goldstein argues that threatening legal punishments against AGIs won’t work, because AGIs should already expect to be turned off by humans, and any ‘wellbeing’ commitments to AGIs won’t be credible. They do however think AGI contract rights and ability to sue and hold property would work.

The obvious response is that if things smarter than us have sufficient rights to enter into binding contracts, hold property and sue, then solve for the equilibrium. Saying ‘contracts are positive sum’ does not change the answer. They are counting on ‘beneficial trade’ and humans retaining comparative advantages to ensure ‘peace,’ but this doesn’t actually make any sense as a strategy for human survival unless you think humans will retain important comparative advantages in the long term, involving products AGIs would want other than to trade back to other humans – and I continue to be confused why people would expect that.

Even if you did think this, why would you expect such a regime to long survive anyway, given the incentives and the historical precedents? Nor does it actually solve the major actual catastrophic risk concerns. So I continue to notice I am both frustrated and confused by such proposals.

The Week in Audio

Helen Toner on the road to responsible AI.

Steven Johnson discusses NotebookLM and related projects.

Rhetorical Innovation

There are a lot of details that matter, yes, but at core the case for existential risk from sufficiently advanced AI is indeed remarkably simple:

If someone confidently disagrees with #1, I am confused how you can be confident in that at this point, but certainly one can doubt that this will happen.

If someone confidently disagrees with #2, I continue to think that is madness. Even if the entire argument was the case that Paul lays out above, that would already be sufficient for this to be madness. That seems pretty dangerous. If you put the (conditional on the things we create being smarter than us) risk in the single digit percents I have zero idea how you can do that with a straight face. Again, there are lots of details that make this problem harder and more deadly than it looks, but you don’t need any of that to know this is going to be dangerous.

Trying again this week: Many people think or argue something like this.

That is not how any of this is going to work.

Human survival in the face of smarter things is not a baseline scenario that happens unless something in particular goes wrong. The baseline scenario is that things that are not us are seeking resources and rearranging the atoms, and this quickly proves incompatible with our survival.

We depend on quite a lot of the details of how the atoms are currently arranged. We have no reason to expect those details to hold, unless something makes those features hold. If we are to survive, it will be because we did something specifically right to cause that to happen.

An example of [conditions] is sufficiently strong coordination among AIs. Could sufficiently advanced AIs coordinate with each other by using good decision theory? I think there’s a good chance the answer is yes. But if the answer is no, by default that is actually worse for us, because any such conflict will involve a lot of atom rearrangements and resource seeking that are not good for us. Or, more simply, to go back a step in the conversation above:

This seems mind-numbingly obvious, and the ancients knew this well – ‘when the elephants fight it is the ground that suffers’ and all that. If at least one superintelligence cares about human life, there is some chance that this preference causes humans to survive – the default if the AIs can’t cooperate is that caring about the humans causes it to be outcompeted by AIs that care only about competition against other AIs, but all things need not be equal. If none of them care about human life and they are ‘running around’ without being fully under our control? Then either the AIs will cooperate or they won’t, and either way, we quickly cease to be.

I have always found the arguments against this absurd.

For example, the argument that ‘rule of law’ or ‘property rights’ or ‘the government won’t be overthrown’ will protect us does not reflect history even among humans, or actually make any physical sense. We rely on things well beyond our personal property and local enforcement of laws in order to survive, and would in any case be unable to keep our property for long once sufficiently intellectually outgunned. Both political parties are running on platforms now that involve large violations of rights including property rights, and so on.

The argument ‘the AIs will leave Earth alone because it would be cheap to do that’ also makes no sense.

Eliezer then offered an extensive explanation, in this thread which then became this post, of why we will almost certainly not have anything to offer a sufficiently advanced ASI that would make it profitable for the ASI to trade with us rather than use the relevant atoms and energy for something else, and why it will not keep Earth in a habitable state simply because it is cheap to do so. If we want a good result we need to do something to get that good result.

I think some are making the move Arthur describes, but a lot of them aren’t. They are thinking the ASIs will compete with each other for real, but that this somehow makes everything fine. As in, no really, something like this:

What is their non-stupid ‘because of reasons’? Sorry, I can’t help you with that. I could list explanations they might give but I don’t know how to make them non-stupid.

Marc Andreessen has a habit of being so close to getting it.

Yes, but it’s harmless, he says, it cannot ‘have a will,’ because it’s ‘math.’ Once again, arguments that are ‘disturbingly relevant’ to people, as in equally true.

Via Tyler Cowen, the Grumpy Economist is his usual grumpy self about all regulatory proposals, except this time the thing he doesn’t want to regulate is AI. I appreciate that he is not making any exceptions for AI, or attempting to mask his arguments as something other than what they are, or pretending he has considered arguments that he is dismissing on principle. We need more honest statements like this – and indeed, most of the time he writes along similar lines about various topics, he’s mostly right.

Indeed, even within AI, many of the calls for particular regulations or actions are exactly falling into the trap that John is decrying here, and his argument against those calls is valid in those cases too. The issue is that AI could rapidly become very different, and he does not take that possibility seriously or see the need to hear arguments for that possibility, purely on priors from other past failed predictions.

And to be even more fair to John, the prompt he was given was ‘is AI a threat to democracy and what to do about it.’ To which, yes, the correct response is largely to mock the doomsayers, because they are talking about the threat from mundane AI.

The central argument is that people have a long track record of incorrectly warning about doom or various dangers from future technologies, so we can safely presume any similar warnings about AI are also wrong. And the same with past calls for pre-emptive censorship of communication methods, or of threats to employment from technological improvements. And that the tool of regulation is almost always bad, it only works in rare situations where we fully understand what we’re dealing with and do something well targeted, otherwise it reliably backfires.

He is indeed right about the general track record of such warnings, and about the fact that regulations in such situations have historically often backfired. What he does not address, at all, are the reasons AI may not remain another ‘mere tool’ whose mess you can clean up later, or any arguments about the actual threats from AI, beyond acknowledging some of the mundane harms and then correctly noting those particular harms are things we can deal with later.

There is no hint of the fact that creating minds smarter than ourselves might be different than creating new tech tools, or any argument why this is unlikely to be so.

Here is everything he says about existential risks:

That is not an argument against “the robots taking over,” or that AI does not generally pose an existential threat. It is a statement that we should ignore that threat, on principle, until the dangers ‘reveal themselves,’ with the implicit assumption that this requires the threats to actually start happening. And the clearer assumption that you can wait until the new AIs exist, and then judge costs vs. benefits retrospectively, and adjust what you do in response.

If we were confident that we could indeed make the adjustments afterwards, then I would agree. The whole point is that you cannot make minds smarter than ourselves, on the assumption that if this poses problems we can go back and fix it later, because you have created minds smarter than ourselves. There is no ‘we’ in control in that scenario, to go back and fix it later.

Aligning a Smarter Than Human Intelligence is Difficult

In the least surprising result in a while, yes, if you use RLHF with human judges that can be systematically fooled and that’s easier than improving the true outputs, then the system will learn to mislead its human evaluators.

Janus offers a principle that I’d like to see more people respect more.

I find the ‘ghosts of your actions’ style warnings very Basilisk-like and also confusing. I mean, I can see how Janus and similar others get there, but the magnitude of the concern seems rather far-fetched, and once again, if you do believe that, then this seems like a very strong argument that we need to stop building more capable AIs or else.

The ‘don’t do things now that won’t work later because you won’t stop’ point, however, is true and important. There is a ton of path dependence in practice, and once people find methods working well enough in practice now, they tend to build upon them and not stop until after they encounter the inevitable breakdowns when it stops working. If that breakdown is actively super dangerous, the plan won’t work.

It would of course be entirely unreasonable to say that you can’t use any techniques now unless they would work on an ASI (superintelligence). We have no alignment or control techniques that would work on an ASI – the question is whether we have ‘concepts of a plan’ or we lack even that.

Even if we did find techniques that would work on an ASI, there’s a good chance that those techniques would then utterly fail to do what we want on current AIs, most likely because the current AIs wouldn’t be smart enough, the technique would require another highly capable AI to be initiated in the first place, or the amount of compute required would be too high.

What should we do about this, beyond being conscious and explicit about the future failures of the techniques and hoping this allows us to stop in time? There aren’t any great solutions.

Even if you do get to align the ASI you need to decide what you want it to value.

I continue to think that CEV won’t work, in the sense that even if you did it successfully and got an answer, I would not endorse that answer on reflection and I would not be happy with the results. I expect it to be worse than (for example) asking Roon to write something down as best he could – I’ll take a semi-fictionalized Californian Universalism over my expectation of CEV if those are the choices, although of course I would prefer my own values to that. I think people optimistic about CEV have a quite poor model of the average human. I do hope I am wrong about that.

Other People Are Not As Worried About AI Killing Everyone

Roon has some rather obvious words for them.

Mike Gallagher in the WSJ states without any justification that the Chinese are ‘not interested in cooperation on AI safety’ and otherwise frames everything as zero sum and adversarial, with the Chinese as mustache-twirling villains whose main concern is using AI for ethnic profiling. More evidence-free jingoism.

The Lighter Side

John Mulaney was invited to do 45 minutes at the Dreamforce AI conference, so he did.

Look, it wasn’t what Eliezer Yudkowsky had in mind, but I don’t kink shame, and this seems strictly better than when Claude keeps telling me how I’m asking complex and interesting questions without any such side benefits.