I have found in my own life that I often hear this argument from people with some sort of religious faith, such that at some (maybe unconscious) level there is an assumption that nothing truly bad can happen unless it is "supposed" to. Not always, and not exclusively, but it could affect which counterarguments are effective.

This argument makes no sense since religion bottoms out at deontology, not utilitarianism.

In Christianity, for example, if you think God would stop existential catastrophes, you have a deontological duty to do the same. And the vast majority of religions have some sort of deontological obligation to stop disasters (independently of whether divine intervention would counterfactually have happened).

I don't know that there is a single counter argument, but I would generalize across two groupings:

The first group consists of religious people who are capable of applying rationality to their belief systems when pressed. If they espouse a "god will save us" position (in the physical world), then I'd suggest the best way to approach them is to call out the contradiction between their stated beliefs. E.g., ask first "do you believe that god gave man free will?" and, if so, "wouldn't saving us from our bad choices obviate free will?"

That's just an example. First and foremost, though, you cannot hand-wave away their religious belief system. You have to apply yourself to understanding their priors and to engage with those priors. If you don't, it's the same thing as having a discussion with an accelerationist who refuses to agree to assumptions like the "Orthogonality Thesis" or "Instrumental Convergence." You'll spend an unreasonable amount of time debating assumptions and will likely make no meaningful progress on the topic you actually care about.

But in so questioning the religious person, you might find they fall into a different grouping: the group of people who are nihilistic in essence. Since "god will save us" could be metaphysical, they could mean instead that so long as they live as a "good {insert religious type of person}", god will save them in the afterlife, and so whether they live or die here in the physical world matters less to them. This is inclusive of those who believe in a rapture myth: that man is, in fact, doomed to be destroyed.

And I don't know how to engage with someone in the second group. A nihilist will not be moved by rational arguments that are antithetical to their nihilism.

The larger problem (as I see it) is that their beliefs may not contain an inherent contradiction. They may be aligned to eventual human doom.

(Certainly rationality and nihilism are not on a single spectrum, so there are other variations possible, but for the purposes of generalizing... those are the two main groups, I believe.)

Or, if you prefer it put less religiously, the bias is: Everything that has a beginning has an end.

I don't think this specific free will argument is convincing. Preventing someone's death doesn't obviate their free will, whether the savior is human, deity, alien, AI, or anything else. Think of doctors, parents, firefighters, etc. So I don't see that there's a contradiction between "God will physically save humans from extinction" and "God gave humans free will". Our forthcoming extinction is not a matter of conscious choice.

I also think this would be a misdirected debate. Suppose, for a moment, that God saves us, physically, from extinction. Due to God's subtle intervention the AI hits an integer overflow after killing the first 2^31 people and shuts down. Was it therefore okay to create the AI? Obviously not. Billions of deaths are bad under a very wide range of belief systems.

I understand where you're going, but doctors, parents, and firefighters do not possess 'typical godlike attributes' such as omniscience and omnipotence, nor have they declared an intent not to use such powers in a way that would obviate free will.

Nothing about humans saving other humans using fallible human means is remotely the same as a god changing the laws of physics to effect a miracle. And one human taking actions does not obviate the free will of another human. But when God can, through omnipotence, set up scenarios so that you have no choice at all... obviating free will... it's a different class of thing altogether.

So your response reads like a strawman fallacy to me.

In conclusion: I accept that my position isn't convincing for you.

I agree with you that "you have to apply yourself to understanding their priors and to engage with those priors". If someone's beliefs are, for example:

- God will intervene to prevent human extinction

- God will not obviate free will

- God cannot prevent human extinction without obviating free will

Then I agree there is an apparent contradiction, and this is a reasonable thing to ask them about. They could resolve it in three ways.

- Maybe god will not intervene. (very roughly: deism)

- Maybe god will intervene and obviate free will. (very roughly: conservative theism)

- Maybe god will intervene and preserve free will. (very roughly: liberal theism)

However they resolve it, discussion can go from there.

Depending on the tradition, people with religious faith may have been exposed to a higher number of literal doomsday prophets. The word "prophet" is perhaps a clue? So I think it's natural for them to pattern-match technological "prophets" of doom with religious prophets of doom and dismiss both.

To argue against this, I would emphasize the differences. Technological predictions of extinction are not based on mysterious texts from the past, or personal unverifiable revelations from God, but on concrete evidence that anyone can look at and form their own opinions. Also, forecasters generally have a lot of uncertainty about exactly what will happen, and when. Forecasts change in response to new information, such as the recent success of generative AI. Highlighting these differences can help break the immediate pattern match. Pointing to other technological catastrophic risks, such as climate change, nuclear warfare, etc, can also properly locate the discussion.

The suggested arguments in the opening post are great ideas as well. Given the examples of climate change and nuclear warfare, I think we have every reason to work towards further engagement with religious leaders on these issues.

Interesting insight. Sadly there isn't much to be done against the beliefs of someone who is certain that god will save us.

Maybe the following: Assuming the frame of a believer, the signs of AGI being a dangerous technology seem obvious on closer inspection. If god exists, then we should therefore assume that this is an intentional test he has placed in front of us. God has given us all the signs. God helps those who help themselves.

#7: (Scientific) Doomsday Track Records Aren't That Bad

Historically, the vast majority of doomsday claims are based on religious beliefs, whereas only a small minority have been supported by a large fraction of relevant subject matter experts. If we consider only the latter, we find:

A) Malthusian crisis: false...but not really a doomsday prediction per se.

B) Hole in the ozone layer: true, but averted because of global cooperation in response to early warnings.

C) Climate change: probably true if we did absolutely nothing; probably mostly averted because of moderate, distributed efforts to mitigate (i.e. high investment in alternative energy sources and modest coordination).

D) Nuclear war: true, but averted because of global cooperation, with several terrifying near-misses...and could still happen.

This is not an exhaustive list as I am operating entirely from memory, but I am including everything I can think of and not deliberately cherry-picking examples--in fact, part of the reason I included (A) was to err on the side of stretching to include counter-examples. Also, the interpretations obviously contain a fair bit of subjectivity / lack of rigor. Nonetheless, in this informal survey, we see a clear pattern where, more often than not, doomsday scenarios that are supported by many leading relevant experts depict actual threats to human existence and the reason we are still around is because of active global efforts to prevent these threats from being realized.

Given all of the above counterarguments (especially #6), there is strong reason to categorize x-risk from AI alongside major environmental and nuclear threats. We should therefore assume by default that it is real and will only be averted if there is an active global effort to prevent it from being realized.

It is an argument by induction based on a naive extrapolation of a historic trend.

This characterization could be a good first step to construct a convincing counter argument. Are there examples of other arguments by induction that simply extrapolate historic trends, where it is much more apparent that it is an unreliable form of reasoning? To be intuitive it must not be too technical, e.g. "people claiming to have found a proof to Fermat's last theorem have always been wrong in the past (until Andrew Wiles came along)" would probably not work well.

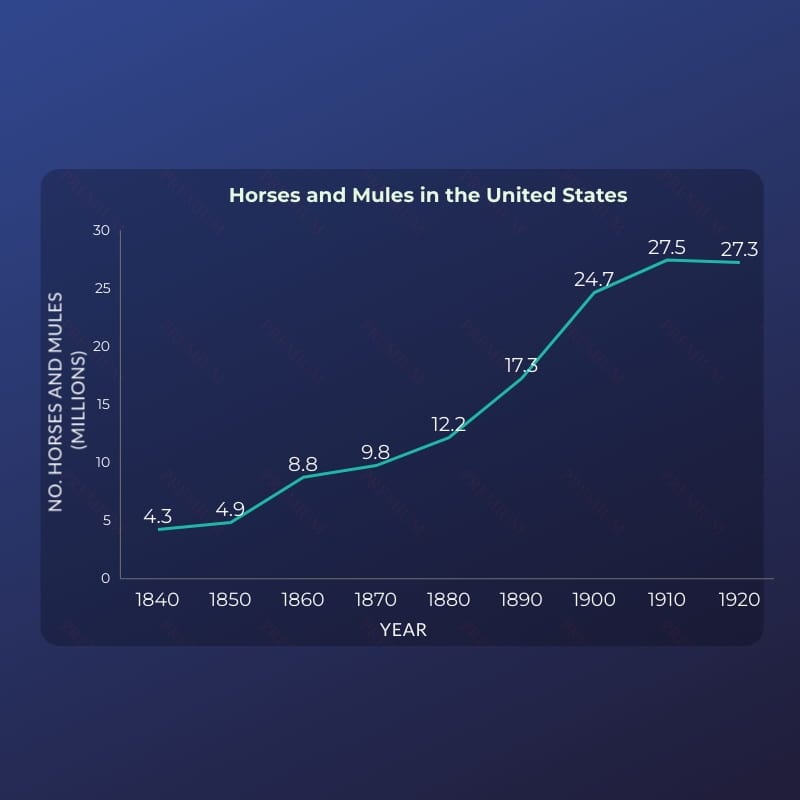

Good idea! I thought of this one: https://energyhistory.yale.edu/horse-and-mule-population-statistics/

I think the strongest "historical" argument is the concept of quenching/entropy/nature abhors complex systems.

What I mean by this is that generations of humans before us, through blood, sweat, and tears, have built many forms of machine. And they all have flaws, and when you run them at full power they eventually fall apart. Often quite early, in the prototype phase.

And generations of hypesters have overpromised as well. This time will be different, this won't fail, this is safe, the world is about to change. Almost all their proclamations were falsified.

A rampant ASI is this machine you built. And instead of just leaving its operating bounds and failing - which, I mean, all kinds of stupid things could end the ASI's run instantly, like a segfault that kills every single copy at the same time because a table for tracking peers ran out of memory, or similar - we're predicting it starts as this seed, badly outmatched by the humans and their tools and weapons. It's so smart it stealthily acquires resources and develops technology, and humans are helpless to stop it, and it doesn't fail spontaneously from faults in the software it runs on, or satisfy its value function and shut down. And it's so smart it finds some asymmetry - some way to win against overwhelming odds. And it kills the humans, and takes over the universe, and from the perspective of alien observers they see all the stars dim from Dyson swarms capturing some of the light in an expanding sphere.

Can this happen? Yes. The weight of time and prior examples makes it seem unlikely though. (The weight of time is that it's about 14 billion years from the hypothesized beginning of the universe and we observe no Dyson swarms and we exist)

It may not BE unlikely. Though the inverse case - having high confidence that it's going to happen this way, that pDoom is 90 percent plus - how can you know this?

The simplest way for Doom to not be possible is simply that the compute requirements are too high and there are not enough GPUs on earth and won't be for decades. The less simple way is that a machine humans built that IS controllable may not be as stupid as we think in utility terms vs an unconstrained machine. (So as long as the constrained machines have more resources - weapons, I mean - under their control, they can methodically hunt down and burn out any rampant escapees. "Burn" refers to how you would use a flamethrower against rats or thermite on unauthorized equipment, since you can't afford to reuse components built by an illegal factory or nanoforge.)

Isn't that a response to a completely different kind of argument? I am probably not going to discuss this here, since it seems very off-topic, but if you want I can consider putting it on my list for arguments I might discuss in this form in a future article.

There is a popular tendency to dismiss people who are concerned about AI-safety as "doomsday prophets", carrying with it the suggestion that predicting an existential risk in the near future would automatically discredit them (because "you know; they have always been wrong in the past").

Example Argument Structure

Discussion/Difficulties

This argument is persistent and kind of difficult to approach/deal with, in particular because it is technically a valid (yet, I argue, weak) point. It is an argument by induction based on a naive extrapolation of a historic trend. Therefore it cannot be completely dismissed by simple falsification through the use of an inconsistency or invalidation of one of its premises. Instead it becomes necessary to produce a convincing list of weaknesses - the more, the better. A list like the one that follows.

#1: Unreliable Heuristic

If you look at history, these kinds of ad-hoc "things will stay the same" predictions are often incorrect. An example of this that is also related to technological developments would be the horse and mule populations in the US (back to below 10 million at present).

#2: Survivorship Bias

Not only are they often incorrect, there is a class of predictions for which they, by design/definition, can only be correct ONCE, and for these they are an even weaker argument, because your sample is affected by things like survivorship bias. Existential risk arguments are in this category, because you can only go extinct once.
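To make the survivorship point concrete, here is a minimal toy simulation. It is only a sketch under made-up assumptions: the function name `surviving_worlds`, the per-era extinction probabilities, and the number of eras are all hypothetical and not estimates of anything real. It counts how many simulated histories still contain observers, for a low-risk and a high-risk setting.

```python
import random


def surviving_worlds(p_doom_per_era: float, eras: int, worlds: int, seed: int = 0) -> int:
    """Count simulated worlds that get through every era without extinction.

    Each world independently faces `eras` chances of going extinct,
    each with probability `p_doom_per_era` (a purely illustrative number).
    """
    rng = random.Random(seed)  # fixed seed so the toy run is reproducible
    survivors = 0
    for _ in range(worlds):
        # A world still has observers only if no era ended in extinction.
        if all(rng.random() >= p_doom_per_era for _ in range(eras)):
            survivors += 1
    return survivors


if __name__ == "__main__":
    for p in (0.01, 0.2):
        alive = surviving_worlds(p, eras=10, worlds=10_000)
        print(f"per-era risk {p}: {alive} of 10000 worlds still have observers, "
              "and every one of them looks back on zero past extinctions")
```

In both settings the survivors see exactly the same track record (no extinction so far), even though the underlying risk differs by a factor of twenty. That is why "doomsday predictions have always been wrong" carries so little information for this class of prediction.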

#3: Volatile Times

We live in a highly unstable and unpredictable age shaped by rampant technological and cultural developments. The world today from the perspective of your grandparents is barely recognizable. In such times, this kind of argument becomes even weaker. This trend doesn't seem to slow down and there are strong arguments that even benign AI would flip the table on many such inductive predictions.

#4: Blast Radius Induction (thanks Connor Leahy)

Leahy has introduced an analogy of "technological blast radius" that represents an abstract way of thinking about different technologies in terms of their potential power, including their potential for causing harm either intentionally or by human error. As we are progressing through the tech tree - while many corners of it are relatively harmless or benign, the maximum "blast radius" of technology available to us necessarily increases. You can inflict more damage with a sword than with a club, even more if you have access to gunpowder, modern weapons etc. An explosion in a TNT factory can destroy a city block, and a nuclear arsenal could be used to level many cities. Now it seems to be very sensible (by induction!) that eventually, this "blast radius" will encompass all of earth. There are strong indicators that this will be the case for strong AI, and even that it is likely to occur BY ACCIDENT, once this technology has been developed.

#5: Supporting Evidence & Responsibility

Having established this as a technically valid, yet weak argument - a heuristic for people-who-don't-know-any-better - it is your responsibility to look at the concrete evidence and available arguments our concerns about AI existential risk are based on, in order to decide whether to confirm or dismiss your initial hypothesis (which is valid). Because the topic is obviously extremely important, I implore you to do that.

#6: Many Leading Researchers Worried

The list of AI researchers worried about existential risk from AI includes extremely big names such as Geoffrey Hinton, Yoshua Bengio and Stuart Russell.

#7: (Scientific) Doomsday Track Records Aren't That Bad

authored by Will Petillo

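Historically, the vast majority of doomsday claims are based on religious beliefs, whereas only a small minority have been supported by a large fraction of relevant subject matter experts. If we consider only the latter, we find:

A) Malthusian crisis: false...but not really a doomsday prediction per se.

B) Hole in the ozone layer: true, but averted because of global cooperation in response to early warnings.

C) Climate change: probably true if we did absolutely nothing; probably mostly averted because of moderate, distributed efforts to mitigate (i.e. high investment in alternative energy sources and modest coordination).

D) Nuclear war: true, but averted because of global cooperation, with several terrifying near-misses...and could still happen.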

This is not an exhaustive list as I am operating entirely from memory, but I am including everything I can think of and not deliberately cherry-picking examples--in fact, part of the reason I included (A) was to err on the side of stretching to include counter-examples. Also, the interpretations obviously contain a fair bit of subjectivity / lack of rigor. Nonetheless, in this informal survey, we see a clear pattern where, more often than not, doomsday scenarios that are supported by many leading relevant experts depict actual threats to human existence and the reason we are still around is because of active global efforts to prevent these threats from being realized.

Given all of the above counterarguments (especially #6), there is strong reason to categorize x-risk from AI alongside major environmental and nuclear threats. We should therefore assume by default that it is real and will only be averted if there is an active global effort to prevent it from being realized.

Final Remarks

I consider this list a work-in-progress, so feel free to tell me about missing points (or your criticism!) in the comments.

I also intend to make this a series about anti-x-risk arguments based on my personal notes and discussions related to my activism. Suggestions of popular or important arguments are welcome!