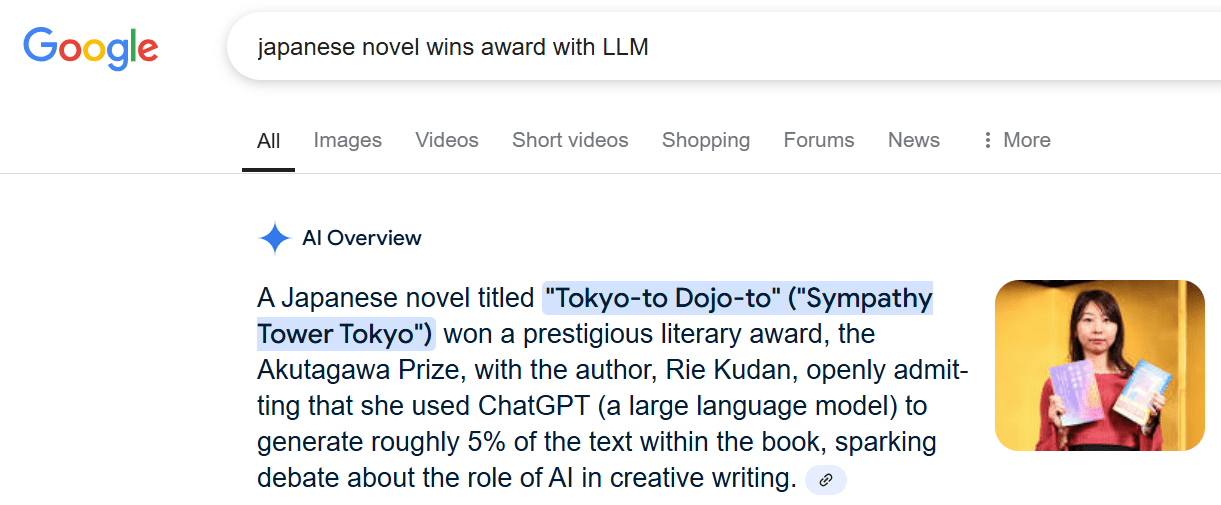

Last year, I saw a Japanese novelist win an award with the help of an LLM.[1]

Art forms frequently evolve, but there are several concerns about using LLMs for creative writing. For one, there's the issue of copyright infringement, which famous writers are taking up arms against:

> George R.R. Martin, Jodi Picoult, John Grisham and Jonathan Franzen are among the 17 prominent authors who joined the suit led by the Authors Guild, a professional organization that protects writers’ rights. Filed in the Southern District of New York, the suit alleges that OpenAI’s models directly harm writers’ abilities to make a living wage, as the technology generates texts that writers could be paid to pen, as well as uses copyrighted material to create copycat work.
>
> “Generative AI threatens to decimate the author profession,” the Authors Guild wrote in a press release.

Even if someone trained an LLM only on legally acquired, non-copyrighted text, there’s still the issue of talent.

If someone told me they had written a 300-page novel by hand, I would be impressed.

If someone else told me they had produced a 300-page novel with the aid of an LLM that wrote the vast majority of the book, and that they had merely organized the AI-generated content, I would consider the LLM the real author and the human merely an editor (or, at best, an artist assembling a collage).

The more someone relies on LLMs to write a novel, the less impressive their accomplishment is.

The debate over the overuse of technology in writing began long before LLMs took the stage. In the 1980s, writers debated whether the word processor was a legitimate writing tool:

> Plenty of writers balked at the joys of word processing, for a host of reasons. Overwriting, in their view, became too easy; the labor of revision became undervalued. When Gore Vidal wrote in the mid-1980s that the “word processor is erasing literature,” he expressed an uneasiness about technology’s proximity to creative writing.

I respect the famous authors who have gone to great lengths to produce their books. With the advent of LLMs, people will be deprived of the thinking process. They'll miss the opportunity to struggle before a blank sheet of paper while nervously chewing on a Ticonderoga #2 pencil, and they'll miss the opportunity to sit behind a keyboard muttering swear words at the blinking cursor that seems to taunt them, asking, “What’s next?”

With the ubiquity of LLMs, we'll never actually know if an author is using AI to think for them.[2] Practically anyone can be an author now. And when everyone's an author, no one will be.[3]

I asked a friend whether he thought it was silly that I use only my biological brain to write. He said, "You're still allowed to do math without a calculator. But why would you?" Paul Graham has a good rebuttal to that:

> In a couple decades there won't be many people who can write.
>
> AI has blown this world open. Almost all pressure to write has dissipated. You can have AI do it for you, both in school and at work.
>
> The result will be a world divided into writes and write-nots. There will still be some people who can write. But the middle ground between those who are good at writing and those who can't write at all will disappear. Instead of good writers, ok writers, and people who can't write, there will just be good writers and people who can't write.
>
> Is that so bad? Isn't it common for skills to disappear when technology makes them obsolete? There aren't many blacksmiths left, and it doesn't seem to be a problem.
>
> Yes, it's bad. The reason is: writing is thinking. In fact there's a kind of thinking that can only be done by writing. You can't make this point better than Leslie Lamport did:
>
> > If you're thinking without writing, you only think you're thinking.
>
> So a world divided into writes and write-nots is more dangerous than it sounds. It will be a world of thinks and think-nots. I know which half I want to be in, and I bet you do too.
>
> This situation is not unprecedented. In preindustrial times most people's jobs made them strong. Now if you want to be strong, you work out. So there are still strong people, but only those who choose to be.
>
> It will be the same with writing. There will still be smart people, but only those who choose to be.

I wonder whether we'll look back on people like me, who use only their biological brains to write, and see them as Luddites compared to everyone else using LLMs. Am I basically a grumpy old scribe complaining about the newfangled Gutenberg press? Or will my steadfast refusal to let go of a fading art form be seen as the death throes of a generation that's more than happy to slide into the warm comfort of brain rot?

[1] She didn't simply tell ChatGPT, "Write me an entire story about XYZ." Instead, she used the LLM to generate the text of an in-story AI assistant that the characters interacted with.

[2] People are constantly searching for competitive advantages. Unlike in sports, there are few regulations in the writing world. Authors can claim their work is strictly biologically produced for status purposes, but how could we possibly verify that? LLMs will become the performance-enhancing drugs (PEDs) of writing.

[3] I'd recommend watching this short clip.
