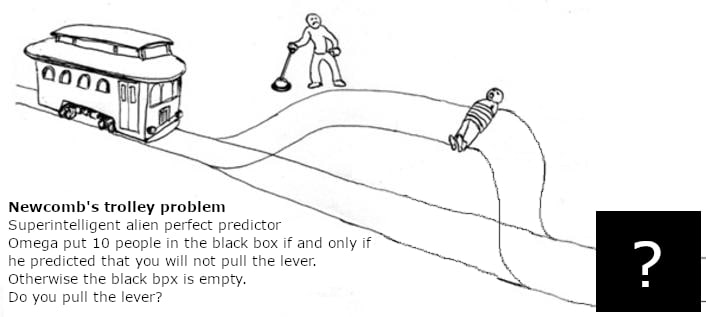

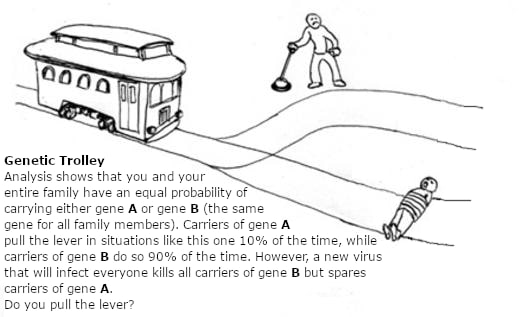

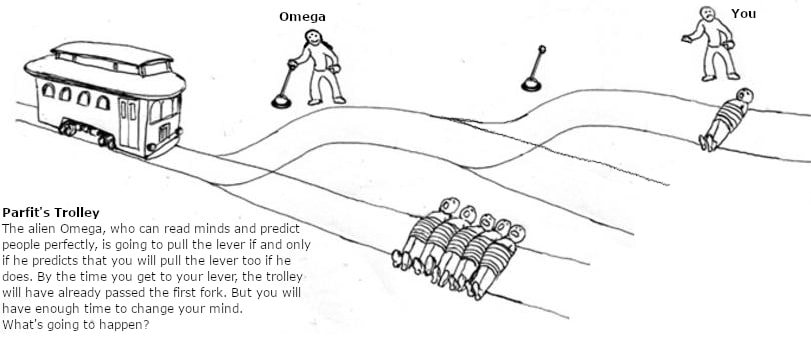

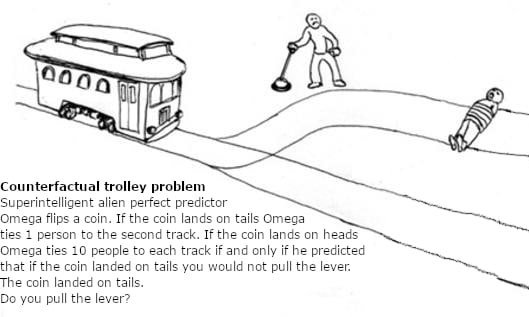

I turned decision theory problems into memes about trolleys

I hope it has some educational, memetic or at least humorous potential. Newcomb's problem, Smoking lesion, Parfit's Hitchhiker, Counterfactual mugging, Xor-blackmail. Bonus: Five-and-ten problem.

Epistemic status: I am confused and trying to become less confused.

So, our shepherd community noticed the wolf. It's a small wolf, about the size of a chihuahua, and is unlikely to cause any serious damage. Should we cry "wolf"?

Possible arguments against crying "wolf":

1. When someone cries "wolf",...

If a continuous function goes from value A to value B, it must pass through every value in between. In other words, tipping points must necessarily exist.

I propose a more specific idea: if you are uniformly uncertain about the fractional part of , then .

E.g., if you hurry on the way to the subway station without knowing when the next train arrives and get there 10 seconds earlier than if you hadn't hurried, you win exactly the same 10 seconds in expectation.
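
The subway example can be checked numerically. Below is a minimal sketch under assumptions that are mine, not the comment's: trains depart at a fixed interval, and the offset of the schedule (the "fractional part") is uniformly distributed over one interval. The names `first_train_index` and `expected_saving` are hypothetical. Under those assumptions, arriving `hurry_gain` seconds earlier usually saves nothing, but with probability `hurry_gain / interval` it catches a train one full interval earlier, so the expected saving works out to `hurry_gain`.

```python
import math


def first_train_index(arrival, phase, interval):
    """Index k of the first train, departing at phase + k * interval, that leaves at or after `arrival`."""
    return math.ceil((arrival - phase) / interval)


def expected_saving(hurry_gain, interval, arrival=1000.0, n_phases=30000):
    """Average departure-time saving from arriving `hurry_gain` seconds earlier.

    The unknown schedule offset is modelled as uniform on [0, interval)
    and approximated by an evenly spaced grid of phases (an assumption).
    """
    total = 0.0
    for i in range(n_phases):
        phase = (i + 0.5) * interval / n_phases
        late = first_train_index(arrival, phase, interval)
        early = first_train_index(arrival - hurry_gain, phase, interval)
        # Either both arrivals catch the same train (saving 0),
        # or hurrying catches an earlier one (saving whole intervals).
        total += (late - early) * interval
    return total / n_phases


if __name__ == "__main__":
    # Trains every 5 minutes, hurrying gains 10 seconds.
    print(expected_saving(10.0, 300.0))
```

The result should come out very close to 10 seconds, even though in any single trip the saving is either 0 or a whole 300-second interval.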

Untestability: you cannot safely experiment on near-ASI (I mean, you can, but you’re not guaranteed not to cross the threshold into the danger zone, and the authors believe that anything you can learn from before won’t be too useful).

I think "won't be too useful" is kinda misleading. The point is more like "it's at least as difficult as launching a rocket into space without a good theory of how gravity works and what space is". Early tests and experiments are useful! They can help you with the theory! You just want to be completely sure that you are not in your test rocket yourself.

At times the authors appeal to prominent figures as evidence

Thanks for your concern!

I think I worded it poorly. I think it is an "internally visible mental phenomena" for me. I do know how it feels and have some access to this thing. It's different from hyperstition and different from "white doublethink"/"gamification of hyperstition". It's easy enough to summon it on command and check, yeah, it's that thing. It's the thing that helps to jump into a lake from a 7-meter cliff, that helps to get up from a very comfy bed, that sometimes helps to overcome social anxiety. But I didn't generalise from these examples to one unified concept before.

And in the cases where I sometimes do it, my skill issues...

Thank you! Datapoint: I think at least some parts of this can be useful for me personally.

Somewhat connected to the first part, one of the most "internal-memetic" moments from "Project: Lawful" for me is this short exchange between Keltham and Maillol:

"For that matter, what is the Governance budget?"

"Don't panic. Nobody knows."

"Why exactly should I not panic?"

"Because it won't actually help."

"Very sensible."

If an evil and not very smart bureaucrat understands it, I can too :)

The third part is the most interesting. It makes perfect sense, but I have no easy-to-access perception of this thing. Will try to do something with this skill issue. Also, "internal script / pseudo-predictive sort-of-world-model that instead connects to motor output"...

list of fiction genres encompassed by almost any randomly selected… say, twenty… non-“traditional roleplaying game” “TTRPGs”.

Hmmm... "Almost any genre ever" for Fate? (Ok, not the genres where main characters must be very incompetent.) I personally prefer systems with a narrower focus which support the tropes of a specific genre, but your statement is just false.

D&D is good for heroic fantasy and mixes of heroic fantasy with some other stuff. D&D is bad for almost everything else. Of course, some modules try to do something else with D&D, but they usually would be better with some other system.

Random thought: maybe it makes sense to allow mostly-LLM-generated posts if the full prompt is provided (maybe itself in a collapsible section). Not sure.

Obviously, there are situations when Alice couldn't just buy the same thing on her own. But besides that, plausible deniability:

Would you also approve other costly signals? Like, I dunno, cutting off a phalanx from a pinky when entering a relationship.

I think that "habitual defectors" are more likely to pretend to choose an option that is not disapproved by society.

I would like to have an option to sort comments on posts by "top scoring", but comments in shortforms by "newest". (totally not critical, just a datapoint)

I just discovered that I apparently independently invented an already existing rephrasing of the smoking lesion problem with toxoplasmosis. This is funny.

Okay, now with a more cherrypicked example (from here, chapter 10, "Won’t AI differ from all the historical precedents?"):

If you study an immature AI in depth, manage to decode its mind entirely, develop a great theory of how it works that you validate on a bunch of examples, and use that theory to predict how the AI’s mind will change as it ascends to superintelligence and gains (for the first time) the very real option of grabbing the world for itself — even then you are, fundamentally, using a new and untested scientific theory to predict the results of an experiment that has not yet run, about what the AI will do when

New cause area: translate all EY's writing into Basic English. Only half joking. And it's not only about Yudkowsky.

I think I will actually do something like this with some text for testing purposes.

Not inferential-distance-simple, but stylistically-simple.

I am translating the online materials for IABIED into Russian. They have sentences like this:

The wonder of natural selection is not its robust error-correction covering every pathway that might go wrong; now that we’re dying less often to starvation and injury, most of modern medicine is treating pieces of human biology that randomly blow up in the absence of external trauma.

This is not cherrypicked at all. It's from the last page I translated. And I translated this sentence as three sentences. And a quick LLM check confirmed that English is actually less tolerant of overly long sentences than Russian.

I think this is bad. I hope it's better in the book (my copy hasn't reached me yet) and the online materials are like this because they are a poorly edited bonus. But I have a feeling that a lot of the writing on AI safety has the same problem.

This is a later, better version of the problem in this post. This problem emerged from my work in the "Deconfusing Commitment Races" project under the Supervised Program for Alignment Research (SPAR), led by James Faville. I'm grateful to SPAR for providing the intellectual environment and to James Faville personally for intellectual discussions and help with the draft of this post. Any mistakes are my own.

I used Claude and Gemini to help me with phrasing and grammar in some parts of this post.

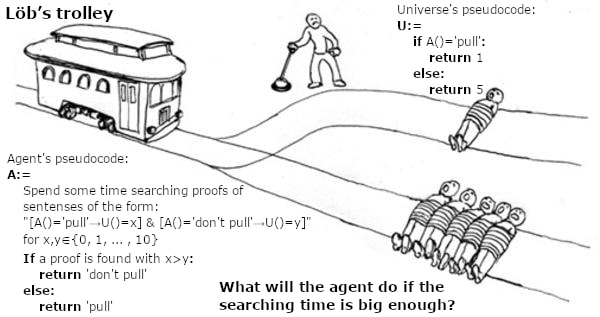

There once lived an alien named Omega who enjoyed giving the Programmer decision theory problems. The answer to each one had to be a program-player that would play the game...

Epistemic status: I'm pretty sure the problem is somewhat interesting, because it temporarily confused several smart people. I'm not at all sure that it is very original; probably somebody has already thought about something similar. I'm not at all sure that I have actually found a flaw in UDT, but I somewhat expect that a discussion of this problem may clarify UDT for some people.

This post emerged from my work in the "Deconfusing Commitment Races" project under the Supervised Program for Alignment Research (SPAR), led by James Faville. I'm grateful to SPAR for providing the intellectual environment and to James Faville personally for intellectual discussions and help with the draft of this...

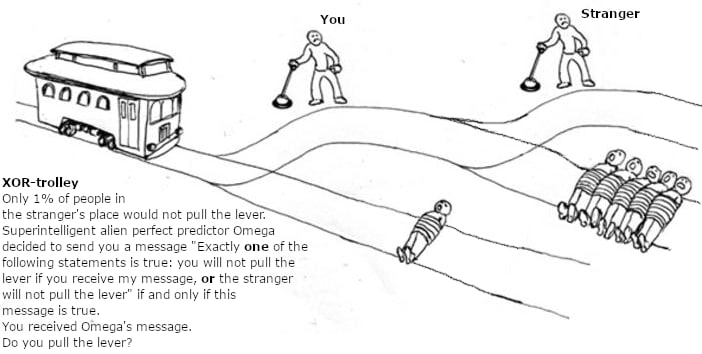

I came up with a decision theory problem. It has the same moral as xor-blackmail, but I think it's much easier to understand:

Omega has chosen you for an experiment:

You received an offer from Omega. Which amount do you choose?

I didn't come up with a catchy name, though.

Epistemic status: thoughts off the top of my head, not an economist at all, talked with Claude about it

Why does almost no country have a small (something like 1%) universal tax on digital money transfers? It looks like a good idea to me:

I see probable negative effects... but don't VAT and individual income tax already have the same effects, so if this tax replaces [parts of] those, nothing will change much?

Also, as I understand, it would discourage high-frequency trading. I'm not sure if this would be a feature or a bug, but my current very superficial understanding leans towards the former.

Why is it a bad idea?

Isn't the whole point of Petrov Day kinda "thou shalt not press the red button"?

The Seventh Sally or How Trurl's Own Perfection Led to No Good

Thanks to IC Rainbow and Taisia Sharapova, who brought this up in the MiriY Telegram chat.

In their logo they have:

They Think. They Feel. They're Alive

And the title of the video on the same page is:

AI People Alpha Launch: AI NPCs Beg Players Not to Turn Off the Game

And in the FAQ they wrote:

The NPCs in AI People are indeed advanced and designed to emulate thinking, feeling, a sense of aliveness, and even reactions that might resemble pain. However, it's essential to understand that they operate on a digital substrate, fundamentally different from human consciousness's biological substrate.

So this is the best argument they have?

Wake up, Torment Nexus just arrived.

(I don't think current models are sentient, but the way of thinking "they are digital, so it's totally OK to torture them" is utterly insane and evil)

This is a test chapter of the project described here. When the project is completed in its entirety, this chapter will most likely be the second one.

We want to create a sequence that a fifteen or sixteen year old smart school student can read and that can encourage them to go into alignment. Optimization targets of this project are:

Disclaimer: My English isn't very good, but do not dissuade me on this basis - the sequence itself will be translated by a professional translator.

I want to create a sequence that a fifteen or sixteen year old smart school student can read and that can encourage them to go into alignment. Right now I'm running an extracurricular course for several smart school students and one of my goals is "overcome long inferential distances so I will be able to create this sequence".

I deliberately did not include in the topics the most important modern trends in machine learning. I'm optimizing for the scenario "a person reads my sequence, then goes to university for...

I live in Saint Petersburg, Russia, and I want to do something useful for the AI alignment movement.

Several weeks ago I started to translate "Discussion with Eliezer Yudkowsky on AGI interventions". Now it's complete and linked in a couple of thematic social network groups. I am currently translating an old metaphorical post, almost as a rest, after which I plan to follow the list from Rob Bensinger's comment.

My English is not very good, and I know a lot of people with better language proficiency, but it seems to me that I am on the Pareto frontier of "translation skills + alignment-related knowledge + motivation to translate". Otherwise, I think, there would be more than one alignment-related translation on lesswrong.ru over the past two years!

I would like to know if this is helpful or if I should focus on something completely different.

Ok, guys, really, does anyone (Claude says probably not) track whether there are negative utilitarians in the leadership of top AI companies?

That's kinda important, don't you think?

Happy New Year, btw.

UPD: Obviously people think it's not a good point. Why? Do you think it's not important, not neglected, or that the answer is obviously "no"?