An important thing that the AGI alignment field never understood:

Reflective stability. Everyone thinks it's about, like, getting guarantees, or something. Or about rationality and optimality and decision theory, or something. Or about how we should understand ideal agency, or something.

But what I think people haven't understood is

- If a mind is highly capable, it has a source of knowledge.

- The source of knowledge involves deep change.

- Lots of deep change implies lots of strong forces (goal-pursuits) operating on everything.

- If there's lots of strong goal-pursuits operating on everything, nothing (properties, architectures, constraints, data formats, conceptual schemes, ...) sticks around unless it has to stick around.

- So if you want something to stick around (such as the property "this machine doesn't kill all humans") you have to know what sort of thing can stick around / what sort of context makes things stick around, even when there are strong goal-pursuits around, which is a specific thing to know because most things don't stick around.

- The elements that stick around and help determine the mind's goal-pursuits have to do so in a way that positively makes them stick around (refl

The Berkeley Genomics Project is fundraising for the next forty days and forty nights at Manifund: https://manifund.org/projects/human-intelligence-amplification--berkeley-genomics-project

I discuss this here: https://www.lesswrong.com/posts/jTiSWHKAtnyA723LE/overview-of-strong-human-intelligence-amplification-methods#Brain_emulation

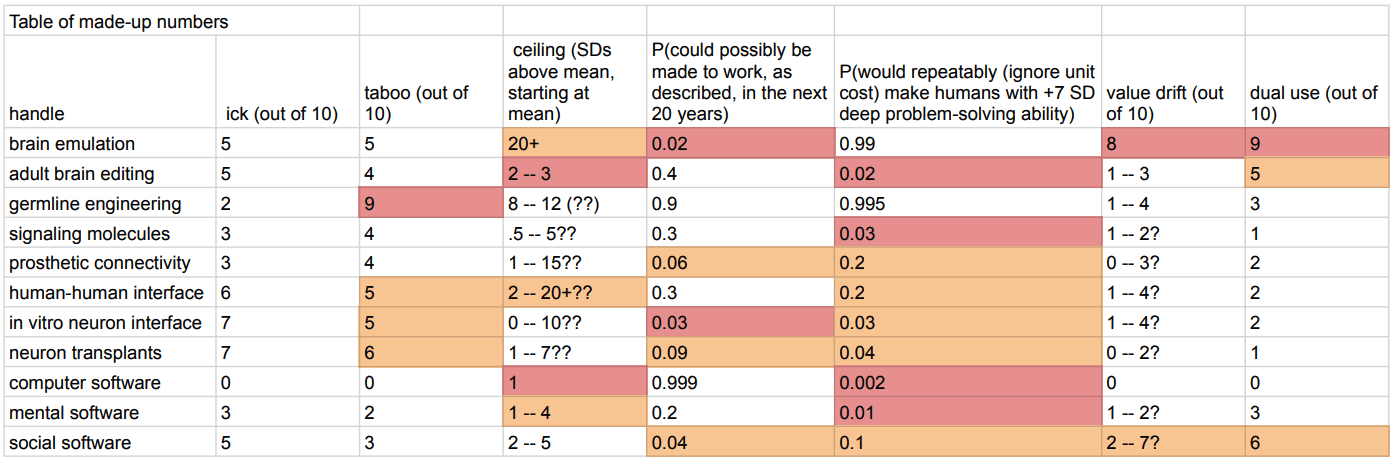

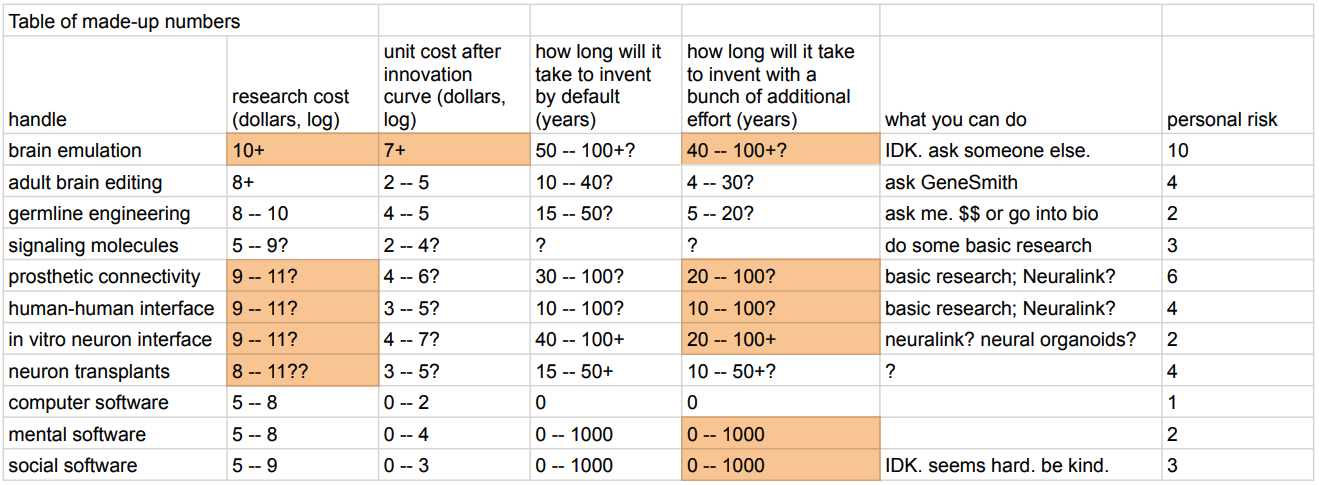

You can see my comparisons of different methods in the tables at the top:

Are people fundamentally good? Are they practically good? If you make one person God-emperor of the lightcone, is the result something we'd like?

I just want to make a couple remarks.

- Conjecture: Generally, on balance, over longer time scales good shards express themselves more than bad ones. Or rather, what we call good ones tend to be ones whose effects accumulate more.

- Example: Nearly all people have a shard, quite deeply stuck through the core of their mind, which points at communing with others.

- Communing means: speaking with; standing shoulder to shoulder with, looking at the same thing; understanding and being understood; lifting the same object that one alone couldn't lift.

- The other has to be truly external and truly a peer. Being a truly external true peer means they have unboundedness, infinite creativity, self- and pair-reflectivity and hence diagonalizability / anti-inductiveness. They must also have a measure of authority over their future. So this shard (albeit subtly and perhaps defeasibly) points at non-perfect subjugation of all others, and democracy. (Would an immortalized Genghis Khan, having conquered everything, after 1000 years, continue to wish to see in th

This assumes that the initially-non-eudaimonic god-king(s) would choose to remain psychologically human for a vast amount of time, and keep the rest of humanity around for all that time. Instead of:

- Self-modify into something that's basically an eldritch abomination from a human perspective, either deliberately or as part of a self-modification process gone wrong.

- Make some minimal self-modifications to avoid value drift, precisely not to let the sort of stuff you're talking about happen.

- Stick to behavioral patterns that would lead to never changing their mind/never value-drifting, either as an "accidental" emergent property of their behavior (the way normal humans can surround themselves in informational bubbles that only reinforce their pre-existing beliefs; the way normal human dictators end up surrounded by yes-men; but elevated to transcendence, and so robust enough to last for eons) or as an implicit preference they never tell their aligned ASI to satisfy, but which it infers and carefully ensures the satisfaction of.

- Impose some totalitarian regime on the rest of humanity and forget about it, spending the rest of their time interacting only with each other/with tailor-built non

> This assumes

Yes, that's a background assumption of the conjecture; I think making that assumption and exploring the consequences is helpful.

> Self-modify into something that's basically an eldritch abomination from a human perspective, either deliberately or as part of a self-modification process gone wrong.

Right, totally, then all bets are off. The scenario is underspecified. My default imagination of "aligned" AGI is corrigible AGI. (In fact, I'm not even totally sure that it makes much sense to talk of aligned AGI that's not corrigible.) Part of corrigibility would be that if:

- the human asks you to do X,

- and X would have irreversible consequences,

- and the human is not aware of / doesn't understand those consequences,

- and the consequences would make the human unable to notice or correct the change,

- and the human, if aware, would have really wanted to not do X or at least think about it a bunch more before doing it,

then you DEFINITELY don't just go ahead and do X lol!

In other words, a corrigible AGI is supposed to use its intelligence to possibilize self-alignment for the human.

...Make some minimal self-modifications to avoid value drift, precisely not to let the sort of st

> Humans want to be around (some) other people

Yes: some other people. The ideologically and morally aligned people, usually. Social/informational bubbles that screen away the rest of humanity, from which they only venture out if forced to (due to the need to earn money/control the populace, etc.). This problem seems to get worse as the ability to insulate yourself from others improves, as can be observed with modern internet-based informational bubbles or the surrounded-by-yes-men problem of dictators.

ASI would make this problem transcendental: there would truly be no need to ever bother with the people outside your bubble again, they could be wiped out or their management outsourced to AIs.

Past this point, you're likely never returning to bothering about them. Why would you, if you can instead generate entire worlds of the kinds of people/entities/experiences you prefer? It seems incredibly unlikely that human social instincts can only be satisfied – or even can be best satisfied – by other humans.

Periodic reminder: AFAIK (though I didn't look much) no one has thoroughly investigated whether there's some small set of molecules, delivered to the brain easily enough, that would have some major regulatory effects resulting in greatly increased cognitive ability. (Feel free to prove me wrong with an example of someone plausibly doing so, i.e. looking hard enough and thinking hard enough that if such a thing was feasible to find and do, then they'd probably have found it--but "surely, surely, surely someone has done so because obviously, right?" is certainly not an accepted proof. And don't call me Shirley!)

I'm simply too busy, but you're not!

Since 1999 there have been "Doogie" mice that were genetically engineered to overexpress NR2B in their brain, and they were found to have significantly greater cognitive function than their normal counterparts, even performing twice as well on one learning test.

No drug AFAIK has been developed that selectively (and safely) enhances NR2B function in the brain, which would best be achieved by a positive allosteric modulator of NR2B, but also no drug company has wanted to or tried to specifically increase general intelligence/IQ in people, and increasing IQ in healthy people is not recognized as treating a disease or even publicly supported.

The drug SAGE-718 comes close, but it is a pan-NMDA allosteric modulator rather than an NR2B-selective one (it still showed impressive increases in cognitive end-points in its trial).

Theoretically, if we try to understand how general intelligence/IQ works in a pharmacological sense, then we should be able to develop drugs that affect IQ.

Two ways to do that are investigating the neurological differences between individuals with high IQ and those with average IQ, and mapping out the function of brain regions implicated in IQ, e.g. the dorsolateral prefrontal cortex (dlPFC).

If part of the...

I did a high-level exploration of the field a few years ago. It was rushed and optimized more for getting it out there than rigor and comprehensiveness, but hopefully still a decent starting point.

I personally think you'd wanna first look at the dozens of molecules known to improve one or another aspect of cognition in diseases (e.g. Alzheimer's and schizophrenia), that were never investigated for mind enhancement in healthy adults.

Given that some of these show very promising effects (and are often literally approved for cognitive enhancement in diseased populations), given that many of the best molecules we have right now were initially also just approved for some pathology (e.g. methylphenidate, amphetamine, modafinil), and given that there is no incentive for the pharmaceutical industry to conduct clinical trials on healthy people (FDA etc. do not recognize healthy enhancement as a valid indication), there seems to even be a sort of overhang of promising molecule candidates that were just never rigorously tested for healthy adult cognitive enhancement.

https://forum.effectivealtruism.org/posts/hGY3eErGzEef7Ck64/mind-enhancement-cause-exploration

Appendix C includes a list of 'almo...

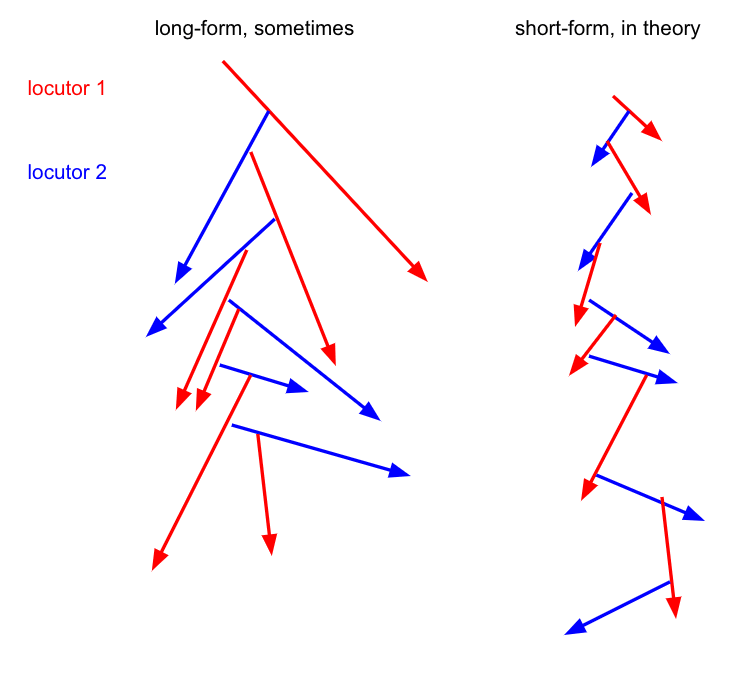

An issue with long-form and asynchronous discourse is wasted motion. Without shared assumptions, the logic and info that locutor 1 adduces is less relevant to locutor 2 than to locutor 1. And, that effect becomes more pronounced as locutor 1 goes down a path of reasoning, constructing more context that locutor 2 doesn't share. (OTOH, long-form is better in terms of individual thinking.)

It is still the case that some people don't sign up for cryonics simply because it takes work to figure out the process / financing. If you do sign up, it would therefore be a public service to write about the process.

If someone asks me "what's the least impressive thing you think AI won't be able to do by 20XX", I give answers like "make lots of original interesting math concepts". (See https://www.lesswrong.com/posts/sTDfraZab47KiRMmT/views-on-when-agi-comes-and-on-strategy-to-reduce#comments) People sometimes say "well that's a pretty impressive thing, you're talking about the height of intellectual labor".

A main reason I give examples like this is that math is an area where it's feasible for there to be a legible absence of legibilization. (By legible, I mean interpersonal explicitness https://www.lesswrong.com/posts/KuKaQEu7JjBNzcoj5/explicitness .) Mathematicians are supposed to make interesting novel definitions that legibilize inexplicit ideas / ways of thinking. If they don't, you can tell that their publications are not so interesting. It is legible that they failed to legibilize.

In fact I suspect there will be many much "easier" feats that AI won't be able to do for a while (some decades). Easier, in the sense that many more humans are able to do those feats. Much harder, in the sense that it requires creativity, and therefore requires having the algorithm-pieces for creativity. That'...

If there are some skilled/smart/motivated/curious ML people seeing this, who want to work on something really cool and/or that could massively help the world, I hope you'll consider reaching out to Tabula.

Humans are (weak) evidence for the instrumental utility for mind-designers to design terminal-goal-construction mechanisms.

Evolution couldn't directly encode IGF (inclusive genetic fitness) into humans. So what was it supposed to do? One answer would be to make us vibe-machines: You bop around, you satisfy your immediate needs, you hang out with people, etc. And that is sort of what you are. But also there are the Unreasonable, who think and plan long-term, who investigate secrets, who build things for 10 or 100 or 1000 years--why? Maybe it's because having terminal-like goals (here meaning, aims that are fairly fixed and fairly ambitious) is so useful that you want to have them anyway even if you can't make them be the right ones. Instead you build machines to guess / make up terminal goals (https://tsvibt.blogspot.com/2022/11/do-humans-derive-values-from-fictitious.html).

For its performances, current AI can pick up to 2 of 3 from:

- Interesting (generates outputs that are novel and useful)

- Superhuman (outperforms humans)

- General (reflective of understanding that is genuinely applicable cross-domain)

AlphaFold's outputs are interesting and superhuman, but not general. Likewise other Alphas.

LLM outputs are a mix. There's a large swath of things that LLMs can do superhumanly, e.g. generating sentences really fast or various kinds of search. Search is, we could say, weakly novel in a sense; LLMs are superhumanly fast at doing a form of search which is not very reflective of general understanding. Quickly generating poems with words that all start with the letter "m" or very quickly and accurately answering stereotyped questions like analogies is superhuman, and reflects a weak sort of generality, but is not interesting.

ImageGen is superhuman and a little interesting, but not really general.

Many architectures + training setups constitute substantive generality (can be applied to many datasets), and produce interesting output (models). However, considered as general training setups (i.e., to be applied to several contexts), they are subhuman.

Recommendation for gippities as research assistants: Treat them roughly like you'd treat RationalWiki, i.e. extremely shit at summaries / glosses / inferences, quite good at citing stuff and fairly good at finding random stuff, some of which is relevant.

Protip: You can prevent itchy skin from being itchy for hours by running it under very hot water for 5-30 seconds. (Don't burn yourself; I use tap water with some cold water, and turn down the cold water until it seems really hot.)

(These are 100% unscientific, just uncritical subjective impressions for fun. CQ = cognitive capacity quotient, like generally good at thinky stuff)

- Overeat a bit, like 10% more than is satisfying: -4 CQ points for a couple hours.

- Overeat a lot, like >80% more than is satisfying: -9 CQ points for 20 hours.

- Sleep deprived a little, like stay up really late but without sleep debt: +5 CQ points.

- Sleep debt, like a couple days not enough sleep: -11 CQ points.

- Major sleep debt, like several days not enough sleep: -20 CQ points.

- Oversleep a lot, like 11 hours: +6 CQ points.

- Ice cream (without having eaten ice cream in the past week): +5 CQ points.

- Being outside: +4 CQ points.

- Being in a car: -8 CQ points.

- Walking in the hills: +9 CQ points.

- Walking specifically up a steep hill: -5 CQ points.

- Too many podcasts: -8 CQ points for an hour.

- Background music: -6 to -2 CQ points.

- Kinda too hot: -3 CQ points.

- Kinda too cold: +2 CQ points.

(stimulants not listed because they tend to pull the features of CQ apart; less good at real thinking, more good at relatively rote thinking and doing stuff)

In this interview, at the linked time: https://www.youtube.com/watch?v=HUkBz-cdB-k&t=847s

Terence Tao describes the notion of an "obstruction" in math research. I think part of the reason that AGI alignment is in shambles is that we haven't integrated this idea enough. In other words, a lot of researchers work on stuff that is sort-of known to not be able to address the hard problems.

(I give some obstruction-ish things here: https://tsvibt.blogspot.com/2023/03/the-fraught-voyage-of-aligned-novelty.html)

"The Future Loves You: How and Why We Should Abolish Death" by Dr Ariel Zeleznikow-Johnston is now available to buy. I haven't read it, but I expect it to be a definitive anti-deathist monograph. https://www.amazon.com/Future-Loves-You-Should-Abolish-ebook/dp/B0CW9KTX76

The description (copied from Amazon):

A brilliant young neuroscientist explains how to preserve our minds indefinitely, enabling future generations to choose to revive us

Just as surgeons once believed pain was good for their patients, some argue today that death brings meaning to life. But given humans rarely live beyond a century – even while certain whales can thrive for over two hundred years – it’s hard not to see our biological limits as profoundly unfair. No wonder then that most people nearing death wish they still had more time.

Yet, with ever-advancing science, will the ends of our lives always loom so close? For from ventilators to brain implants, modern medicine has been blurring what it means to die. In a lucid synthesis of current neuroscientific thinking, Zeleznikow-Johnston explains that death is no longer the loss of heartbeat or breath, but of personal identity – that the core of our identities is ou...

Discourse Wormholes.

In complex or contentious discussions, the central or top-level topic is often altered or replaced. We're all familiar from experience with this phenomenon. Topologically this is sort of like a wormhole:

Imagine two copies of $\mathbb{R}^3$ minus the open unit ball, glued together along the unit spheres. Imagine enclosing the origin with a sphere of radius 2. This is a topological separation: The origin is separated from the rest of your space, the copy of $\mathbb{R}^3$ that you're standing in. But, what's contained in the enclosure is an entire world just as large; therefore, the origin is not really contained, merely separated. One could walk through the enclosure, and pass through the unit ball boundary, and then proceed back out through the unit ball boundary into the other alternative copy of $\mathbb{R}^3$.

You come to a crux of the issue, or you come to a clash of discourse norms or background assumptions; and then you bloop, where now that is the primary motive or top-level criterion for the conversation.

This has pluses and minuses. You are finding out what the conversation really wanted to be, finding what you most care about here, finding out what the two of you most ought to fight about ...

What would you be doing if you had N times more time per day? A piece of terminology I want to coin now, to summarize a phrase I've been using a lot recently:

That's a K-x priority.

As in, "Writing this post on group rationality is a 3x priority." That means:

If I had 3x as much time, like I could do 3 days of work per day, one of the things I'd actually get to would be writing this post.

Maybe it's confusing because 3x sounds like it's more important, not less. IDK. Could say "3x time priority" for a bit more clarity.

You can think about it in terms of clones, e.g. instead of "I'd do it if I had 2x more time", you say "if I had a clone to have things done, the clone would do that thing" (equivalent in terms of work hours).

So you can say "that's my 1st/2nd/3rd/nth clone's priority".
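One way to make the "K-x priority" idea concrete is a rough sketch like the following. It assumes (these are my assumptions, not part of the terminology itself) that you have a priority-ordered task list with estimated hours, that you work strictly in that order, and that a task's K is the smallest whole multiple of your normal working day by which it would be finished. The task names and hours are made up for illustration.

```python
import math

def k_priority(tasks, hours_per_day):
    """Map each task to its K, given tasks as (name, hours) in priority order.

    Hypothetical model: K is the smallest integer such that K working days'
    worth of hours covers this task and everything ranked above it.
    """
    result = {}
    cumulative = 0.0
    for name, hours in tasks:
        cumulative += hours
        result[name] = max(1, math.ceil(cumulative / hours_per_day))
    return result

# Illustrative, invented numbers.
tasks = [
    ("core research", 6),
    ("email", 2),
    ("exercise", 4),
    ("group rationality post", 10),
]
priorities = k_priority(tasks, hours_per_day=8)
for name, k in priorities.items():
    print(f"{name}: {k}x priority")
```

Under this model, a task's "nth clone" is the same number: a 3x priority is something your 2nd extra clone (the 3rd worker) would get to.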

Say a "deathist" is someone who says "death is net good (gives meaning to life, is natural and therefore good, allows change in society, etc.)" and a "lifeist" ("anti-deathist") is someone who says "death is net bad (life is good, people should not have to involuntarily die, I want me and my loved ones to live)". There are clearly people who go deathist -> lifeist, as that's most lifeists (if nothing else, as an older kid they would have uttered deathism, as the predominant ideology). One might also argue that young kids are naturally lifeist, and there...

The standard way to measure compute is FLOPS. Besides other problems, this measure has two major flaws: First, no one cares exactly how many FLOPS you have; we want to know the order of magnitude without having to incant "ten high". Second, it sounds cute, even though it's going to kill us.

I propose an alternative: Digital Orders Of Magnitude (per Second), or DOOM(S).
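As a minimal sketch, assuming DOOM(S) is read as the base-10 logarithm of FLOPS (the proposal doesn't spell out the conversion, so this is my interpretation, and `dooms` is a hypothetical helper name):

```python
import math

def dooms(flops: float) -> float:
    """Digital Orders Of Magnitude per Second, taken here as log10(FLOPS)."""
    if flops <= 0:
        raise ValueError("flops must be positive")
    return math.log10(flops)

# A machine doing 1e15 floating-point operations per second.
petaflop_machine = dooms(1e15)
print(f"{petaflop_machine:.1f} DOOMS")
```

With this reading, adding 1 DOOMS means ten times the compute.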

(Speculative) It seems like biotech VC is doing poorly, and this stems from the fact that it's a lot of work to discriminate good from bad prospects for the biology itself. (As opposed to, say, ability of a team to execute a business, or how much of a market there is, etc.) If this is true, have some people tried making a biotech VC firm that employs bio experts--like, say, PhD dropouts--to do deep background on startups?

Ostentiation:

So there's steelmanning, where you construct a view that isn't your interlocutor's but is, according to you, more true / coherent / believable than your interlocutor's. Then there's the Ideological Turing Test, where you restate your interlocutor's view in such a way that ze fully endorses your restatement.

Another dimension is how clear things are to the audience. A further criterion for restating your interlocutor's view is the extent to which your restatement makes it feasible / easy for your audience to (accurately) judge that view. You cou...

Is there a nice way to bet on large-evidence, small-probability differences?

Normally we bet on substantial probability differences, like I say 10% you say 50% or similar. Betting makes sense there--you incentivize having correct probabilities, at least within a few percent or whatever. Is there some way to incentivize reporting the right log-odds, to within a logit or whatever?

One sort of answer might be showing that/how you can always extract a mid-range probability disagreement on latent variables, under some assumptions on the structure of the latent variable models underlying the large-logit small-probability disagreement.
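To illustrate the gap between the two kinds of disagreement, here is a small sketch comparing the difference in probability with the difference in log-odds (natural-log logits). The specific numbers, 1e-6 versus 1e-9, are just an assumed example of a "large-evidence, small-probability" disagreement.

```python
import math

def logit(p: float) -> float:
    """Log-odds of p, in natural-log units."""
    return math.log(p / (1.0 - p))

def disagreement(p_a: float, p_b: float):
    """Return (probability gap, log-odds gap) between two forecasters."""
    return abs(p_a - p_b), abs(logit(p_a) - logit(p_b))

# Ordinary bet: 10% vs 50%.
mid_prob_gap, mid_logit_gap = disagreement(0.10, 0.50)

# Small-probability bet: one-in-a-million vs one-in-a-billion (assumed example).
tiny_prob_gap, tiny_logit_gap = disagreement(1e-6, 1e-9)

print(f"10% vs 50%:     prob gap {mid_prob_gap:.3g}, logit gap {mid_logit_gap:.3g}")
print(f"1e-6 vs 1e-9:   prob gap {tiny_prob_gap:.3g}, logit gap {tiny_logit_gap:.3g}")
```

The second pair differs by more logits than the first, yet the probability gap is around a millionth, so a bet sized by probability difference barely pays anyone for being right.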