I have a lot of ideas about AGI/ASI safety. I've written them down in a paper and I'm sharing the paper here, hoping it can be helpful.

Title: A Comprehensive Solution for the Safety and Controllability of Artificial Superintelligence

Abstract:

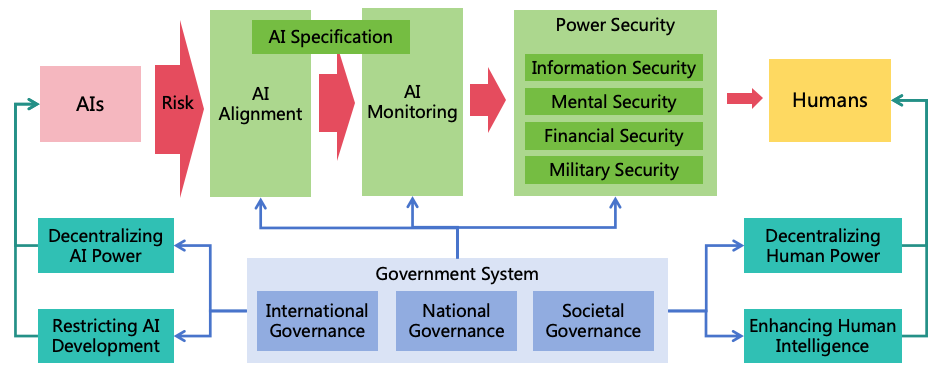

As artificial intelligence technology rapidly advances, Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) are likely to be realized in the future. Highly intelligent ASI systems could be manipulated by malicious humans or could independently evolve goals misaligned with human interests, potentially leading to severe harm or even human extinction. To mitigate the risks posed by ASI, it is imperative that we implement measures to ensure its safety and controllability. This paper analyzes the intellectual characteristics of ASI and the three conditions required for ASI to cause catastrophes (harmful goals, concealed intentions, and strong power), and proposes a comprehensive safety solution. The solution includes three risk prevention strategies (AI alignment, AI monitoring, and power security) to eliminate these three conditions. It also includes four power balancing strategies (decentralizing AI power, decentralizing human power, restricting AI development, and enhancing human intelligence) to ensure equilibrium among AIs, between AIs and humans, and among humans, building a stable and safe society in which humans and AI coexist. Based on these strategies, this paper proposes 47 specific safety measures in 11 major categories. For each safety measure, detailed methods are designed, and its benefit, cost, and resistance to implementation are evaluated to assign a corresponding priority. Furthermore, to ensure effective execution of these safety measures, a governance system is proposed that encompasses international, national, and societal governance. It aims to coordinate global efforts and ensure that the measures are implemented effectively within nations and organizations, so that safe and controllable AI systems bring benefits to humanity rather than catastrophes.

Content:

The paper is over 100 pages long, so I can only link to it here. If you're interested, you can download the PDF at this link: https://www.preprints.org/manuscript/202412.1418/v1

or you can read the online HTML version at this link:

1. The industry is not currently violating the rules in my paper, because all current AIs are weak AIs: none of them has reached the upper power limit for any of the 7 types of AIs I described. In the future an AI could break through its upper limit, but I think doing so would be uneconomical. For example, an AI psychiatrist does not need superhuman intelligence to perform well. An AI mathematician may be very intelligent in mathematics, but it does not need to learn how to manipulate humans or how to design DNA sequences. Regulations are still worth having, of course, because some careless AI developers may grant AIs unnecessary capabilities or permissions even though these do not improve the AIs' performance on their actual tasks.

The difference between my view and Max Tegmark's is that he seems to assume there will be only one type of superintelligent AI in the world, while I think there will be many different types of AIs. Different types of AIs should be subject to different rules rather than a single rule. Can you imagine a person who is simultaneously a Nobel Prize-winning scientist, the president, the richest man, and an Olympic champion? That would be very strange, right? Our society doesn't need such an all-round person. Similarly, we don't need such an all-round AI either.

The development of a technology usually has two stages: first achieving capabilities, then reducing costs. AI technology is currently in the first stage. When it reaches the second stage, specialization will occur.

2. Agree.