Hi all, I've been working on some AI forecasting research and have prepared a draft report on timelines to transformative AI. I would love feedback from this community, so I've made the report viewable in a Google Drive folder here.

With that said, most of my focus so far has been on the high-level structure of the framework, so the particular quantitative estimates are very much in flux and many input parameters aren't pinned down well -- I wrote the bulk of this report before July and have received feedback since then that I haven't fully incorporated yet. I'd prefer if people didn't share it widely in a low-bandwidth way (e.g., just posting key graphics on Facebook or Twitter) since the conclusions don't reflect Open Phil's "institutional view" yet, and there may well be some errors in the report.

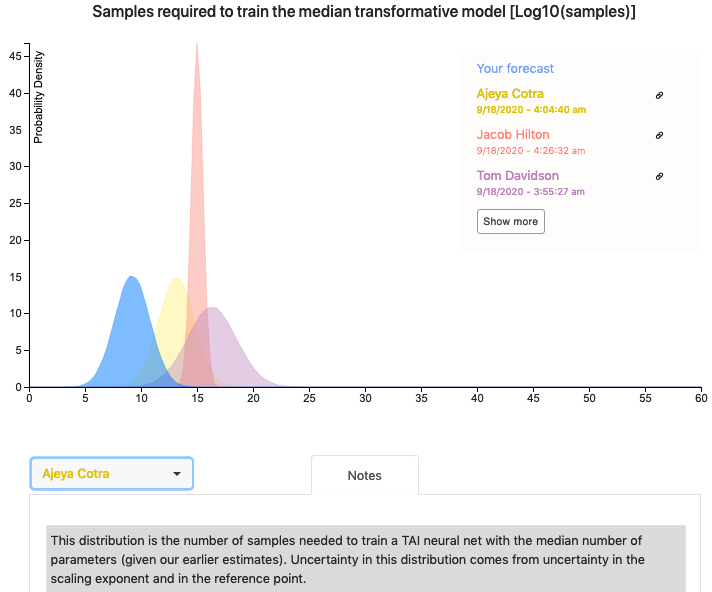

The report includes a quantitative model written in Python. Ought has worked with me to integrate their forecasting platform Elicit into the model so that you can see other people's forecasts for various parameters. If you have questions or feedback about the Elicit integration, feel free to reach out to elicit@ought.org.

Looking forward to hearing people's thoughts!

Thanks for doing this, this is really good!

Some quick thoughts, will follow up later with more once I finish reading and digesting:

--I feel like it's unfair to downweight the less-compute-needed scenarios based on recent evidence without also downweighting some of the higher-compute scenarios. Sure, I concede that the recent boom in deep learning is not quite as massive as one might expect if one more order of magnitude would get us to TAI. But I also think that it's a lot bigger than one might expect if fifteen more are needed! Moreover, I feel that the update should be fairly small in both cases, because both updates are based on armchair speculation about what the market and capabilities landscape should look like in the years leading up to TAI. Maybe the market isn't efficient; maybe we really are in an AI overhang.

--If we are in the business of adjusting our weights for the various distributions based on recent empirical evidence (as opposed to more a priori considerations), then I feel like there are other pieces of evidence that argue for shorter timelines. For example, the GPT scaling trends seem to go somewhere really exciting if you extrapolate them four more orders of magnitude or so.

--Relatedly, GPT-3 is the most impressive model I know of so far, and it has only 1/1000th as many parameters as the human brain has synapses. I think it's not crazy to think that maybe we'll start getting some transformative shit once we have models with as many parameters as the human brain, trained for the equivalent of 30 years. Yes, this goes against the scaling laws, and yes, arguably the human brain makes use of priors and instincts baked in by evolution, etc. But still, I feel like at least a couple percentage points of probability should be added to "it'll only take a few more orders of magnitude" just in case we are wrong about the laws or their applicability. It seems overconfident not to. Maybe I just don't know enough about the scaling laws and stuff to have as much confidence in them as you do.
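To make the arithmetic in that last point concrete, here is a rough back-of-the-envelope sketch. It is not taken from the report's model. It assumes the common rule of thumb of about 6 FLOP per parameter per training token for dense models, uses the publicly reported GPT-3 figures (about 175 billion parameters, about 300 billion training tokens), and takes the brain-sized parameter count straight from the 1/1000 ratio above. The data rate `TOKENS_PER_SECOND` is a purely hypothetical placeholder, since nothing in this thread pins down how much data "30 years" of training corresponds to.

```python
import math

# Publicly reported GPT-3 figures: ~175 billion parameters, ~300 billion training tokens.
GPT3_PARAMS = 175e9
GPT3_TOKENS = 300e9

# From the comment: GPT-3 has ~1/1000th as many parameters as the brain has synapses.
BRAIN_PARAMS = GPT3_PARAMS * 1000

# ASSUMPTION (hypothetical placeholder): data points processed per second of "lifetime".
TOKENS_PER_SECOND = 10.0
SECONDS_IN_30_YEARS = 30 * 365.25 * 24 * 3600


def training_flop(params, tokens):
    """Rule-of-thumb training cost: ~6 FLOP per parameter per token (an approximation)."""
    return 6 * params * tokens


def orders_of_magnitude(a, b):
    """Number of factors of ten by which a exceeds b."""
    return math.log10(a / b)


gpt3_flop = training_flop(GPT3_PARAMS, GPT3_TOKENS)
brain_tokens = TOKENS_PER_SECOND * SECONDS_IN_30_YEARS
brain_flop = training_flop(BRAIN_PARAMS, brain_tokens)

print(f"parameter gap:        {orders_of_magnitude(BRAIN_PARAMS, GPT3_PARAMS):.1f} OOM")
print(f"training-compute gap: {orders_of_magnitude(brain_flop, gpt3_flop):.1f} OOM")
```

The compute gap is only as good as the placeholder data rate: multiplying `TOKENS_PER_SECOND` by ten moves the answer by exactly one order of magnitude, so this is a way to see how the conclusion depends on that input rather than an estimate in its own right.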

Thanks! No need to wait for a more official release (that could take a long time since I'm prioritizing other projects).