Hi all, I've been working on some AI forecasting research and have prepared a draft report on timelines to transformative AI. I would love feedback from this community, so I've made the report viewable in a Google Drive folder here.

That said, most of my focus so far has been on the high-level structure of the framework, so the particular quantitative estimates are very much in flux and many input parameters aren't pinned down well. I wrote the bulk of this report before July and have since received feedback that I haven't fully incorporated yet. I'd prefer that people not share it widely in a low-bandwidth way (e.g., just posting key graphics on Facebook or Twitter), since the conclusions don't reflect Open Phil's "institutional view" yet and there may well be some errors in the report.

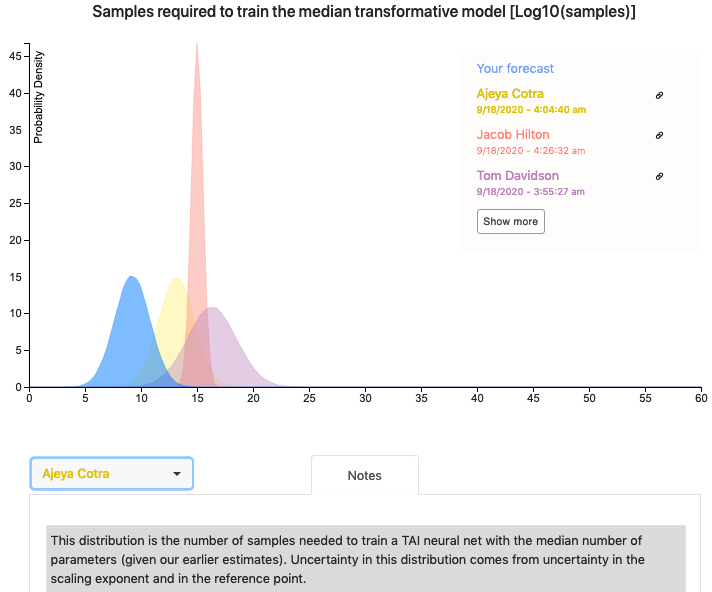

The report includes a quantitative model written in Python. Ought has worked with me to integrate their forecasting platform Elicit into the model so that you can see other people's forecasts for various parameters. If you have questions or feedback about the Elicit integration, feel free to reach out to elicit@ought.org.

Looking forward to hearing people's thoughts!

Using Steve's analogy would make for much shorter timeline estimates. Steve guesses that 10-100 runs of online learning are needed, i.e. 10-100 iterations to find the right hyperparameters before you get a training run that produces something actually smart like a human. This is only 1-2 orders of magnitude more compute than the human-brain-human-lifetime anchor, which is the nearest anchor (and which Ajeya assigns only 5% credence to!). Eyeballing the charts, it looks like you'd end up with something like 50% probability by 2035, holding fixed all of Ajeya's other assumptions.
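
To spell out the "1-2 orders of magnitude" arithmetic, here is a minimal Python sketch. It assumes total compute scales linearly with the number of lifetime-scale training runs, which is a simplification of mine and not something taken from the report. The function name is made up for illustration and is not part of Ajeya's model.

```python
import math


def extra_orders_of_magnitude(num_runs: int) -> float:
    """Orders of magnitude of compute added by repeating a training run.

    Assumption (not from the report): each run costs the same as one
    human-lifetime-anchor run, so total compute is num_runs times the anchor.
    """
    if num_runs < 1:
        raise ValueError("num_runs must be at least 1")
    return math.log10(num_runs)


# Steve's guessed range of 10-100 runs.
low_runs, high_runs = 10, 100
low_ooms = extra_orders_of_magnitude(low_runs)
high_ooms = extra_orders_of_magnitude(high_runs)
print(f"{low_runs}-{high_runs} runs adds {low_ooms:.0f}-{high_ooms:.0f} orders of magnitude")
```

This only covers the compute multiplier. The "50% by 2035" figure comes from eyeballing the report's charts, not from this calculation.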