Hi all, I've been working on some AI forecasting research and have prepared a draft report on timelines to transformative AI. I would love feedback from this community, so I've made the report viewable in a Google Drive folder here.

With that said, most of my focus so far has been on the high-level structure of the framework, so the particular quantitative estimates are very much in flux and many input parameters aren't pinned down well. I wrote the bulk of this report before July and have since received feedback that I haven't fully incorporated yet. I'd prefer if people didn't share it widely in a low-bandwidth way (e.g., just posting key graphics on Facebook or Twitter), since the conclusions don't yet reflect Open Phil's "institutional view" and there may well be some errors in the report.

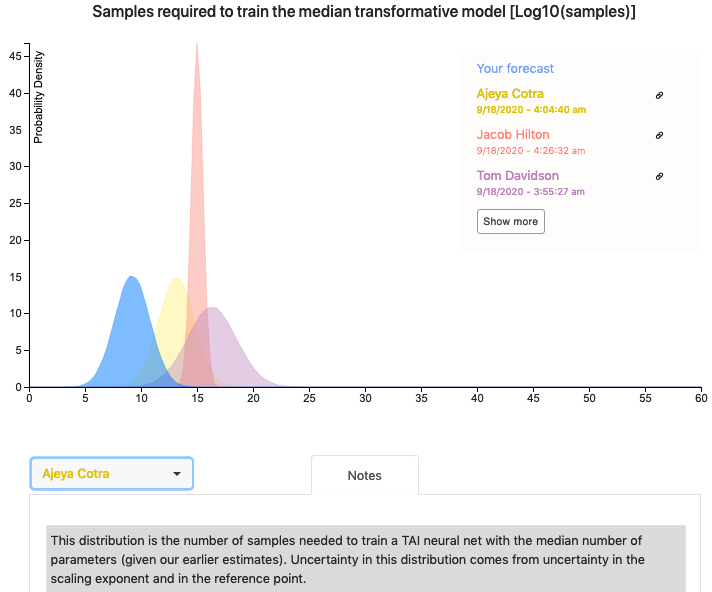

The report includes a quantitative model written in Python. Ought has worked with me to integrate their forecasting platform Elicit into the model so that you can see other people's forecasts for various parameters. If you have questions or feedback about the Elicit integration, feel free to reach out to elicit@ought.org.

Looking forward to hearing people's thoughts!

One reason I put a bit more weight on short / medium horizons was that even if transformative tasks are long-horizon, you could use self-supervised pretraining to do most of the learning, thus reducing the long-horizon data requirements. Now that *Scaling Laws for Transfer* is out, we can use it to estimate how much this might help. So let's do some bogus back-of-the-envelope calculations:

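We'll make the very questionable assumption that the law relating our short-horizon pretraining task and our long-horizon transformative task will still be $D_T = 1.9 \cdot 10^4 \, (D_F)^{0.18} N^{0.38}$, where $D_T$ is the effective data transferred from pretraining, $D_F$ is the amount of fine-tuning data on the target task, and $N$ is the number of model parameters.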
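
A quick Python sketch of this fitted law, assuming the constants quoted above carry over unchanged; the function name `effective_data_transferred` and the sample inputs are hypothetical. Because the law is a power law in $N$, multiplying the parameter count by $c$ multiplies $D_T$ by $c^{0.38}$ no matter what $D_F$ is.

```python
# Sketch of the fitted transfer law D_T = K * D_F**ALPHA * N**BETA,
# assuming the constants quoted above also hold for our tasks.
K = 1.9e4      # fitted prefactor
ALPHA = 0.18   # exponent on fine-tuning data D_F
BETA = 0.38    # exponent on parameter count N


def effective_data_transferred(d_f: float, n: float) -> float:
    """Effective data transferred from pretraining (D_T), given D_F fine-tuning points and N parameters."""
    return K * d_f**ALPHA * n**BETA


# Scaling N by c scales D_T by c**BETA, independent of D_F.
c = 10.0
ratio = effective_data_transferred(1e6, 3e14 * c) / effective_data_transferred(1e6, 3e14)
print(f"{ratio:.4f} vs {c**BETA:.4f}")
```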

Let's assume that the long-horizon transformative task has a horizon that is 7 orders of magnitude larger than that of the short-horizon pretraining task. (The full range is 9 orders of magnitude.) Let the from-scratch compute of a short-horizon transformative task be $M_{short}$. Then the from-scratch compute for our long-horizon task would be $M_{short} \cdot 10^7$, if we had to train on all $10^{13}$ data points.
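
The $10^7$ baseline is just arithmetic if we assume compute is proportional to horizon length times the number of data points, normalised so that $10^{13}$ short-horizon points cost $M_{short}$. A minimal sketch under that assumption, with compute in units of $M_{short}$; `long_horizon_compute` and its `data_points` argument are hypothetical names, included so that training on fewer long-horizon points can be expressed as a fraction of the baseline.

```python
TOTAL_LONG_HORIZON_POINTS = 1e13  # data points the long-horizon task needs


def long_horizon_compute(horizon_ooms: float, data_points: float = TOTAL_LONG_HORIZON_POINTS) -> float:
    """Compute, in units of M_short, to train on `data_points` long-horizon examples.

    Assumes compute is proportional to horizon length times number of data points,
    normalised so that all 1e13 points at the short horizon cost exactly M_short.
    """
    horizon_multiplier = 10.0**horizon_ooms
    return horizon_multiplier * data_points / TOTAL_LONG_HORIZON_POINTS


print(long_horizon_compute(7))        # from scratch: 1e7 * M_short
print(long_horizon_compute(7, 1e11))  # only 1% of the data: 1e5 * M_short
```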

Our key trick is to make the model larger and pretrain it on a short-horizon task, reducing the amount of long-horizon data we need. Suppose we multiply the model size by a factor of $c$. We'll estimate total compute as a function of $c$, and then find the value that minimizes it.

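Making the model bigger increases the necessary (short-horizon) pretraining data by a factor of $c^{0.8}$; since compute scales with parameters times data, pretraining compute goes up by a factor of $c \cdot c^{0.8} = c^{1.8}$. For transfer, $D_T$ goes up by a factor of $c^{0.38}$, since it scales as $N^{0.38}$.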
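
The exponents above can be checked with a few lines of Python. This sketch takes the $c^{0.8}$ data scaling as a given assumption (it is not derived here) and treats compute as parameters times data; the function and dictionary key names are hypothetical.

```python
DATA_EXPONENT = 0.8          # pretraining data grows as c**0.8 (assumed above)
TRANSFER_N_EXPONENT = 0.38   # exponent on N in the transfer law


def scale_factors(c: float) -> dict[str, float]:
    """Multipliers on pretraining data, pretraining compute, and D_T when N is scaled by c."""
    data = c**DATA_EXPONENT
    compute = c * data  # compute ~ parameters * data points, so c * c**0.8
    transfer = c**TRANSFER_N_EXPONENT
    return {"pretrain_data": data, "pretrain_compute": compute, "effective_transfer": transfer}


print(scale_factors(100.0))
```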

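We still want to have $D_E = D_T + D_F = 10^{13}$ effective data points for the long-horizon transformative task. To actually get computational savings, we need to get this primarily from transfer, i.e. $D_T = 1.9 \cdot 10^4 \, (D_F)^{0.18} (3 \cdot 10^{14} \cdot c)^{0.38} \sim 10^{13}$, where $3 \cdot 10^{14}$ is the baseline parameter count. Solving for $D_F$ gives $D_F \sim 7.74 \cdot 10^{17} \cdot c^{-2.1}$.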
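
The solve for $D_F$ has a closed form: $D_F = \left(10^{13} / (1.9 \cdot 10^4 \, N^{0.38})\right)^{1/0.18}$ with $N = 3 \cdot 10^{14} \cdot c$, so the exponent on $c$ is $-0.38/0.18 \approx -2.11$ (rounded to $-2.1$ above). A sketch that reproduces the coefficient, using hypothetical names:

```python
K, ALPHA, BETA = 1.9e4, 0.18, 0.38  # transfer-law constants
BASE_PARAMS = 3e14                  # baseline parameter count before scaling by c
TARGET_D_T = 1e13                   # long-horizon data we want to obtain via transfer


def required_fine_tuning_data(c: float) -> float:
    """Solve K * D_F**ALPHA * (BASE_PARAMS * c)**BETA = TARGET_D_T for D_F."""
    n = BASE_PARAMS * c
    return (TARGET_D_T / (K * n**BETA)) ** (1 / ALPHA)


coefficient = required_fine_tuning_data(1.0)  # roughly 7.74e17
exponent = -BETA / ALPHA                      # roughly -2.111
print(f"D_F ~ {coefficient:.3g} * c^{exponent:.3f}")
```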

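Then the total compute, with the first term for short-horizon pretraining and the second for long-horizon training on the remaining $D_F$ points, is given by

$$M_{short} \cdot c^{1.8} + M_{short} \cdot 10^7 \cdot \frac{D_F}{10^{13}} = M_{short}\left(c^{1.8} + 7.74 \cdot 10^{11} \cdot c^{-2.1}\right).$$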
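
A sketch of the total-compute expression in units of $M_{short}$, using the rounded $D_F$ fit from the previous step and ignoring anything the formula above ignores; names are hypothetical. It also confirms that the fine-tuning coefficient collapses to $10^7 \cdot 7.74 \cdot 10^{17} / 10^{13} = 7.74 \cdot 10^{11}$.

```python
HORIZON_MULTIPLIER = 1e7            # long horizon is 7 OOM longer than the short horizon
LONG_HORIZON_POINTS = 1e13          # data points the long-horizon task needs
D_F_COEFF, D_F_EXP = 7.74e17, -2.1  # D_F ~ 7.74e17 * c**-2.1 (rounded fit)


def total_compute(c: float) -> float:
    """Total compute in units of M_short as a function of the model-size multiplier c."""
    pretraining = c**1.8                # short-horizon pretraining of a c-times-larger model
    d_f = D_F_COEFF * c**D_F_EXP        # long-horizon points still needed after transfer
    fine_tuning = HORIZON_MULTIPLIER * d_f / LONG_HORIZON_POINTS  # fraction of the 1e7 baseline
    return pretraining + fine_tuning


# The fine-tuning coefficient simplifies to 1e7 * 7.74e17 / 1e13, i.e. about 7.74e11.
print(HORIZON_MULTIPLIER * D_F_COEFF / LONG_HORIZON_POINTS)
```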

Minimizing this gives $c \approx 1163$, in which case the total compute is about $M_{short} \cdot 6 \cdot 10^5$.
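
Because $c^{1.8} + A c^{-2.1}$ is convex for $c > 0$, the minimum is where the derivative vanishes: $1.8\,c^{0.8} = 2.1\,A\,c^{-3.1}$, i.e. $c^{3.9} = 2.1A/1.8$. The sketch below computes that closed form and cross-checks it with a plain golden-section search (a standard 1-D minimiser, chosen here only for illustration); `A` and the helper names are hypothetical.

```python
A = 7.74e11  # coefficient on c**-2.1 in the total-compute expression


def total_compute(c: float) -> float:
    """Total compute in units of M_short: c**1.8 + A * c**-2.1."""
    return c**1.8 + A * c**-2.1


# Setting d/dc = 1.8 c^0.8 - 2.1 A c^-3.1 to zero gives c^3.9 = 2.1 A / 1.8.
c_star = (2.1 * A / 1.8) ** (1 / 3.9)


def golden_section_min(f, lo: float, hi: float, iters: int = 200) -> float:
    """Minimise a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (5**0.5 - 1) / 2
    for _ in range(iters):
        a = hi - inv_phi * (hi - lo)
        b = lo + inv_phi * (hi - lo)
        if f(a) < f(b):
            hi = b  # minimum lies in [lo, b]
        else:
            lo = a  # minimum lies in [a, hi]
    return (lo + hi) / 2


c_numeric = golden_section_min(total_compute, 1.0, 1e6)
print(round(c_star), round(c_numeric), f"{total_compute(c_star):.2e}")
```

Both routes land at $c \approx 1163$ with total compute of roughly $6.1 \cdot 10^5 \cdot M_{short}$, consistent with the rounded figure above.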

Thus, we've taken a baseline compute of $M_{short} \cdot 10^7$ and reduced it to $M_{short} \cdot 6 \cdot 10^5$, a speedup of about 17x, i.e. a little over an order of magnitude. This is solidly within my previous expectations (looking at my notes, I said "a couple of orders of magnitude"), so my timelines don't change much.
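
The speedup is just the ratio of the two compute figures; a one-off check in Python, taking the rounded $6 \cdot 10^5$ figure as given (variable names are hypothetical):

```python
import math

BASELINE = 1e7       # from-scratch long-horizon compute, in units of M_short
WITH_TRANSFER = 6e5  # optimised total compute with pretraining, in units of M_short

speedup = BASELINE / WITH_TRANSFER
print(f"speedup ~ {speedup:.1f}x, i.e. {math.log10(speedup):.2f} orders of magnitude")
```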

Some major caveats: