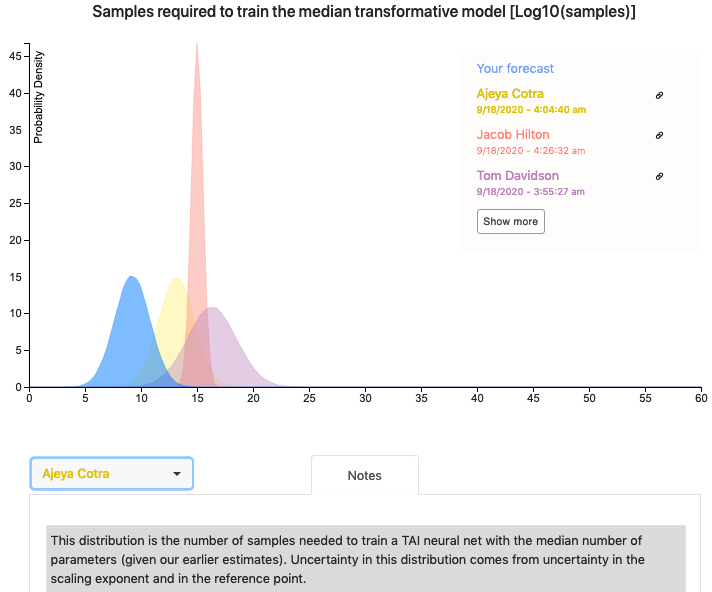

Hi all, I've been working on some AI forecasting research and have prepared a draft report on timelines to transformative AI. I would love feedback from this community, so I've made the report viewable in a Google Drive folder here.

With that said, most of my focus so far has been on the high-level structure of the framework, so the particular quantitative estimates are very much in flux and many input parameters aren't pinned down well -- I wrote the bulk of this report before July and have received feedback since then that I haven't fully incorporated yet. I'd prefer if people didn't share it widely in a low-bandwidth way (e.g., just posting key graphics on Facebook or Twitter) since the conclusions don't reflect Open Phil's "institutional view" yet, and there may well be some errors in the report.

The report includes a quantitative model written in Python. Ought has worked with me to integrate their forecasting platform Elicit into the model so that you can see other people's forecasts for various parameters. If you have questions or feedback about the Elicit integration, feel free to reach out to elicit@ought.org.

Looking forward to hearing people's thoughts!

Let O1 and O2 be two optimization algorithms, each searching over some set of programs. Let V be some evaluation metric over programs such that V(p) is our evaluation of program p, for the purpose of comparing a program found by O1 to a program found by O2. For example, V can be defined as a subjective impressiveness metric as judged by a human.

Intuitive definition: Suppose we plot a curve for each optimization algorithm such that the x-axis is the inference compute of a yielded program and the y-axis is our evaluation value of that program. If the curves of O1 and O2 are similar up to scaling along the x-axis, then we say that O1 and O2 are similarly-scaling w.r.t inference compute, or SSIC for short.

Formal definition: Let O1 and O2 be optimization algorithms and let V be an evaluation function over programs. Let us denote with Oi(n) the program that Oi finds when it uses n flops (which would correspond to the training compute if Oi is an ML algorithms). Let us denote with C(p) the amount of compute that program p uses. We say that O1 and O2 are SSIC with respect to V if for any n1,n′1,n2,n′2 such that C(O1(n1))C(O2(n2))≈C(O1(n′1))C(O2(n′2)), if V(O1(n1))≈V(O2(n2)) then V(O1(n′1))≈V(O2(n′2)).

I think the report draft implicitly uses the assumption that human evolution and the first ML algorithm that will result in TAI are SSIC (with respect to a relevant V). It may be beneficial to discuss this assumption in the report. Clearly, not all pairs of optimization algorithms are SSIC (e.g. consider a pure random search + any optimization algorithm). Under what conditions should we expect a pair of optimization algorithms to be SSIC with respect to a given V?

Maybe that question should be investigated empirically, by looking at pairs of optimization algorithms, were one is a popular ML algorithm and the other is some evolutionary computation algorithm (searching over a very different model space), and checking to what extent the two algorithms are SSIC.